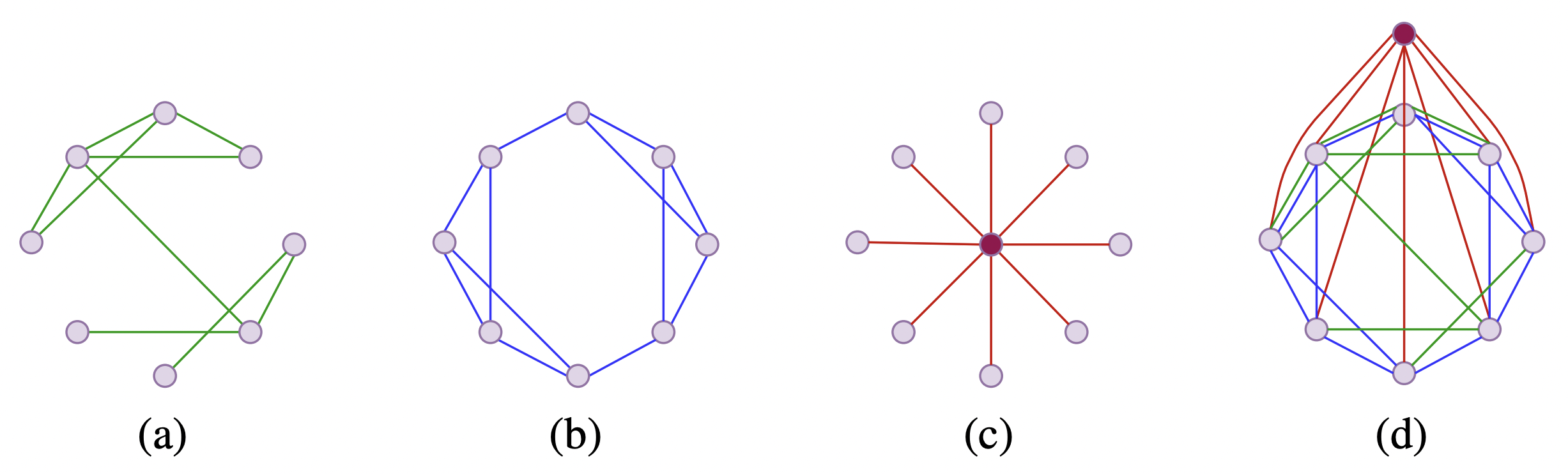

In this work we introduce new sparse transformers for graph data, and use them in the GraphGPS framework. Our sparse transformers outperform BigBird and Performer in all cases we tried, which have been mainly designed for the natural language processing context; in many cases we even get better results than full (dense attention) transformers. Our sparse transformer has three components: actual edges, expander graphs, and universal connectors or virtual nodes. We combine these components into a single sparse attention mechanism.

conda create -n exphormer python=3.9

conda activate exphormer

conda install pytorch=1.10 torchvision torchaudio -c pytorch -c nvidia

conda install pyg=2.0.4 -c pyg -c conda-forge

# RDKit is required for OGB-LSC PCQM4Mv2 and datasets derived from it.

conda install openbabel fsspec rdkit -c conda-forge

pip install torchmetrics

pip install performer-pytorch

pip install ogb

pip install tensorboardX

pip install wandb

conda clean --allconda activate exphormer

# Running Exphormer for LRGB Datasets

python main.py --cfg configs/Exphormer_LRGB/peptides-struct-EX.yaml wandb.use False

# Running Exphormer for Cifar10

python main.py --cfg configs/Exphormer/cifar10.yaml wandb.use False