By finishing the "LLM Twin: Building Your Production-Ready AI Replica" free course, you will learn how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices.

Why should you care? 🫵

→ No more isolated scripts or notebooks! Learn production ML by building and deploying an end-to-end production-grade LLM system.

You will learn how to architect and build a real-world LLM system from start to finish - from data collection to deployment.

You will also learn to leverage MLOps best practices, such as experiment trackers, model registries, prompt monitoring, and versioning.

The end goal? Build and deploy your own LLM twin.

What is an LLM Twin? It is an AI character that learns to write like somebody by incorporating their style and personality into an LLM.

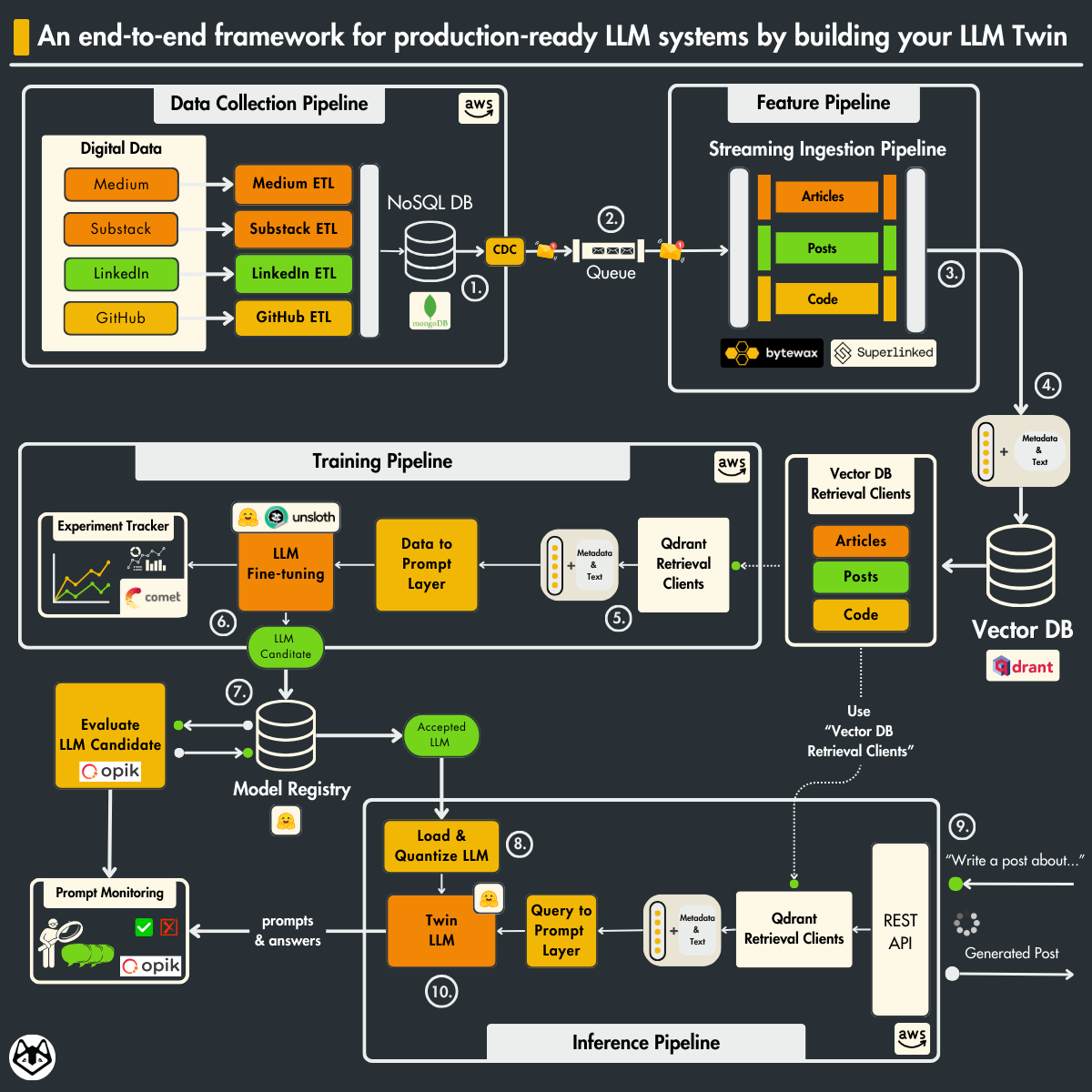

1. The architecture of the LLM twin is split into 4 Python microservices
2. Who is this for?
3. How will you learn?
4. Costs?
5. Questions and troubleshooting
6. Lessons
7. Install & Usage
8. Meet your teachers!
9. License
10. Contributors
11. Sponsors
12. Next Steps ⬅

The data collection pipeline:

- Crawl your digital data from various social media platforms.

- Clean, normalize, and load the data into a MongoDB NoSQL database through a series of ETL pipelines.

- Send database changes to a RabbitMQ queue using the CDC pattern.

- ☁️ Deployed on AWS.
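The CDC (Change Data Capture) idea behind the data collection pipeline can be sketched in a few lines. This is a minimal, hypothetical illustration, not the course's implementation: a Python dict stands in for the MongoDB collection, a `queue.Queue` stands in for the RabbitMQ queue, and `CDCStore`, `ChangeEvent`, and `drain` are made-up names. The point it shows is that every write emits a change event that downstream consumers read, instead of repeatedly polling the database.

```python
from __future__ import annotations

import json
import queue
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class ChangeEvent:
    """One captured database change (hypothetical event schema)."""
    operation: str  # "insert", "update", or "delete"
    doc_id: str
    payload: dict[str, Any] | None


@dataclass
class CDCStore:
    """Hypothetical in-memory stand-in for a MongoDB collection that emits change events."""
    broker: queue.Queue[str]  # stand-in for the RabbitMQ queue
    docs: dict[str, dict[str, Any]] = field(default_factory=dict)

    def _publish(self, event: ChangeEvent) -> None:
        # Serialize the event to JSON, the way it would be sent to a message broker.
        self.broker.put(json.dumps(asdict(event)))

    def upsert(self, doc_id: str, doc: dict[str, Any]) -> None:
        # Every write emits exactly one change event; consumers never poll the store.
        operation = "update" if doc_id in self.docs else "insert"
        self.docs[doc_id] = doc
        self._publish(ChangeEvent(operation, doc_id, doc))

    def delete(self, doc_id: str) -> None:
        # Deleting a missing document is a no-op and emits nothing.
        if self.docs.pop(doc_id, None) is not None:
            self._publish(ChangeEvent("delete", doc_id, None))


def drain(broker: queue.Queue[str]) -> list[dict[str, Any]]:
    """Consume all pending messages, as a downstream consumer would."""
    events = []
    while not broker.empty():
        events.append(json.loads(broker.get()))
    return events


if __name__ == "__main__":
    broker: queue.Queue[str] = queue.Queue()
    store = CDCStore(broker)
    store.upsert("post-1", {"content": "Hello"})
    store.upsert("post-1", {"content": "Hello, world"})
    store.delete("post-1")
    print([event["operation"] for event in drain(broker)])
```

Running it prints `['insert', 'update', 'delete']`: the consumer learns about each change in order without ever querying the store. In the real system, the database's change feed and a RabbitMQ client would replace these stand-ins.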

The feature pipeline:

- Consume messages from a queue through a Bytewax streaming pipeline.

- Every message is cleaned, chunked, embedded, and loaded into a Qdrant vector DB in real time.

- In the bonus series, we refactor the cleaning, chunking, and embedding logic using Superlinked, a specialized vector compute engine. We also load and index the vectors into Redis vector search.

- ☁️ Deployed on AWS.
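The per-message steps of the feature pipeline (clean, chunk, embed, load) can be sketched as plain functions. This is a hedged, self-contained illustration under stated assumptions: the cleaning rules, the overlapping word-window chunker, and the hash-based `toy_embed` function are hypothetical stand-ins for the course's real cleaning logic and embedding model, and an in-memory list plays the role of the Qdrant collection.

```python
from __future__ import annotations

import hashlib
import math
import re


def clean(text: str) -> str:
    """Strip URLs and collapse whitespace (illustrative cleaning rules only)."""
    text = re.sub(r"https?://\S+", "", text)
    return re.sub(r"\s+", " ", text).strip()


def chunk(text: str, size: int = 5, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words, consecutive windows sharing `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # the last window already reaches the end
            break
    return chunks


def toy_embed(text: str, dim: int = 8) -> list[float]:
    """Hypothetical embedding, NOT a real model: an L2-normalized bag of hashed words."""
    vec = [0.0] * dim
    for word in text.lower().split():
        idx = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def process_message(message: dict, index: list[dict]) -> int:
    """Clean, chunk, embed, and 'load' one message; returns how many points were stored."""
    chunks = chunk(clean(message["content"]))
    for i, piece in enumerate(chunks):
        # The list append stands in for an upsert into the vector DB.
        index.append({"id": f'{message["id"]}-{i}', "vector": toy_embed(piece), "text": piece})
    return len(chunks)
```

The overlap keeps sentences that straddle a chunk boundary retrievable from either side; the window size and overlap used here are arbitrary illustrative values.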

The training pipeline:

- Create a custom dataset based on your digital data.

- Fine-tune an LLM using QLoRA.

- Use Comet ML's experiment tracker to monitor the experiments.

- Evaluate and save the best model to Comet's model registry.

- ☁️ Deployed on AWS SageMaker
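A back-of-the-envelope calculation helps explain why QLoRA makes fine-tuning feasible on modest hardware. LoRA freezes a pretrained d × k weight matrix and trains only two low-rank factors, B (d × r) and A (r × k), so just r(d + k) parameters are updated; QLoRA additionally stores the frozen base weights in 4 bits. This is a simplified sketch, not a memory profiler: the layer shape, rank, and 8B parameter count are illustrative assumptions, and the estimate ignores activations, optimizer state, adapter weights, and quantization overhead.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)


def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed only to store the weights, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


d = k = 4096  # illustrative projection-matrix shape (assumption)
r = 16        # illustrative LoRA rank (assumption)
full = d * k
lora = lora_trainable_params(d, k, r)

print(f"full fine-tuning: {full:,} params, LoRA: {lora:,} params ({lora / full:.2%})")
print(f"8B base weights: {weight_memory_gb(8e9, 16):.0f} GB in fp16, {weight_memory_gb(8e9, 4):.0f} GB in 4-bit")
```

For this layer, LoRA trains 131,072 parameters instead of 16,777,216, and quantizing an 8B-parameter base model from 16 to 4 bits shrinks its stored weights from about 16 GB to about 4 GB.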

The inference pipeline:

- Load the fine-tuned LLM from Comet's model registry.

- Deploy it as a REST API.

- Enhance the prompts using advanced RAG.

- Generate content using your LLM twin.

- Monitor the LLM using Comet's prompt monitoring dashboard.

- In the bonus series, we refactor the advanced RAG layer using Superlinked to write better-optimized queries.

- ☁️ Deployed on AWS SageMaker

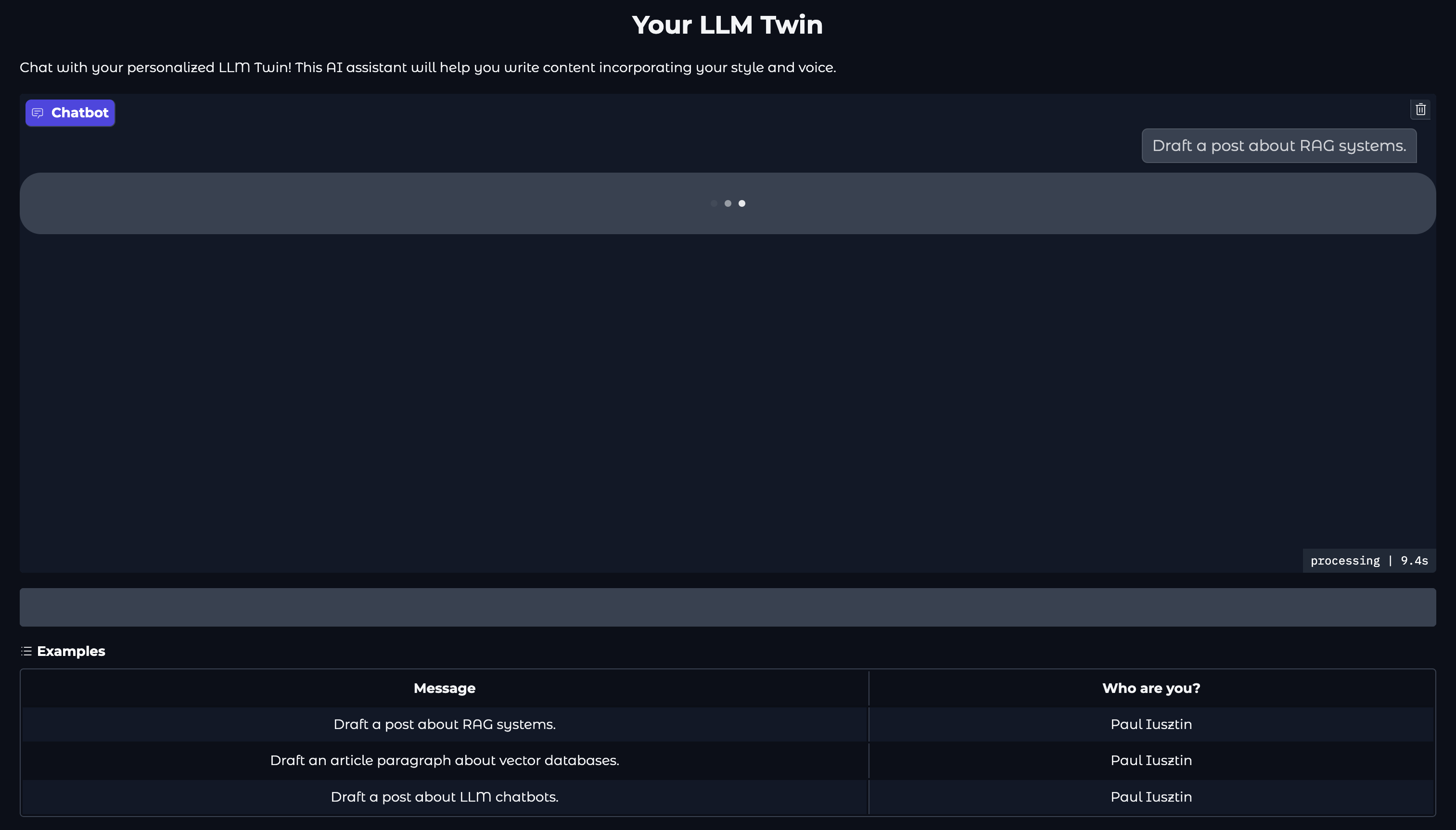

- Wrap up everything with a Gradio UI where you can start playing around with your LLM Twin.
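At its core, enhancing the prompts with RAG means retrieving the stored chunks most similar to the query and injecting them into the prompt sent to the LLM. Below is a hedged, minimal sketch of only that basic retrieve-then-augment loop; the advanced techniques covered in the lessons (such as query expansion or reranking) are not shown. Vectors are plain Python lists ranked by cosine similarity, and `PROMPT_TEMPLATE`, `retrieve`, and `build_prompt` are hypothetical names, not the course's code.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], index: list[dict], top_k: int = 2) -> list[dict]:
    """Return the top_k stored points ranked by cosine similarity to the query vector."""
    return sorted(index, key=lambda p: cosine(query_vec, p["vector"]), reverse=True)[:top_k]


# Hypothetical prompt template, for illustration only.
PROMPT_TEMPLATE = (
    "You are my LLM twin. Write in my style.\n"
    "Context:\n{context}\n\n"
    "Task: {query}\n"
)


def build_prompt(query: str, query_vec: list[float], index: list[dict], top_k: int = 2) -> str:
    """Augment the user's query with the most relevant retrieved chunks."""
    hits = retrieve(query_vec, index, top_k)
    context = "\n".join(f"- {hit['text']}" for hit in hits)
    return PROMPT_TEMPLATE.format(context=context, query=query)
```

In production, the query vector would come from the same embedding model used by the feature pipeline, and the sorting step would be replaced by a vector DB search.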

Across the 4 microservices, you will learn to integrate 4 serverless tools:

- Comet ML as your ML Platform;

- Qdrant as your vector DB;

- AWS SageMaker as your ML infrastructure;

- Opik as your prompt evaluation and monitoring tool.

Audience: MLE, DE, DS, or SWE who want to learn to engineer production-ready LLM systems using LLMOps good practices.

Level: intermediate

Prerequisites: basic knowledge of Python, ML, and the cloud

The course contains 11 hands-on written lessons and the open-source code you can access on GitHub.

You can read everything and try out the code at your own pace.

The articles and code are completely free. They will always remain free.

If you plan to run the code while reading, keep in mind that we use several cloud tools that might generate additional costs.

Pay as you go

- AWS offers accessible plans to new joiners.

- A new first-time account can get up to $300 in free credits, valid for 6 months. For more, consult the AWS Offerings page.

Freemium (Free-of-Charge)

Please ask us any questions if anything gets confusing while studying the articles or running the code.

You can ask any question by opening an issue in this GitHub repository.

→ Quick overview of each lesson of the LLM Twin free course.

Important

To understand the entire code step-by-step, check out our articles ↓

The course is split into 12 lessons. Every Medium article represents an independent lesson.

The lessons are NOT 1:1 with the folder structure!

- Your Content is Gold: I Turned 3 Years of Blog Posts into an LLM Training

- I Replaced 1000 Lines of Polling Code with 50 Lines of CDC Magic

- SOTA Python Streaming Pipelines for Fine-tuning LLMs and RAG — in Real-Time!

- The 4 Advanced RAG Algorithms You Must Know to Implement

- Turning Raw Data Into Fine-Tuning Datasets

- 8B Parameters, 1 GPU, No Problems: The Ultimate LLM Fine-tuning Pipeline

- The Engineer’s Framework for LLM & RAG Evaluation

To understand how to install and run the LLM Twin code, go to the dedicated INSTALL_AND_USAGE document.

Note

Even though you can run everything using only the dedicated INSTALL_AND_USAGE document, we recommend reading the articles to fully understand the LLM Twin system and its design choices.

The bonus Superlinked series has an extra dedicated README that you can access under the 6-bonus-superlinked-rag directory.

There, we explain how to run the course with the improved RAG layer powered by Superlinked.

The course is created under the Decoding ML umbrella by:

- Paul Iusztin, Senior AI & LLM Engineer

This course is an open-source project released under the MIT license. Thus, as long as you distribute our LICENSE and acknowledge our work, you can safely clone or fork this project and use it as a source of inspiration for whatever you want (e.g., university projects, college degree projects, personal projects, etc.).

A big "Thank you 🙏" to all our contributors! This course is possible only because of their efforts.

Also, another big "Thank you 🙏" to all our sponsors who supported our work and made this course possible.

- Comet
- Opik
- Bytewax
- Qdrant
- Superlinked

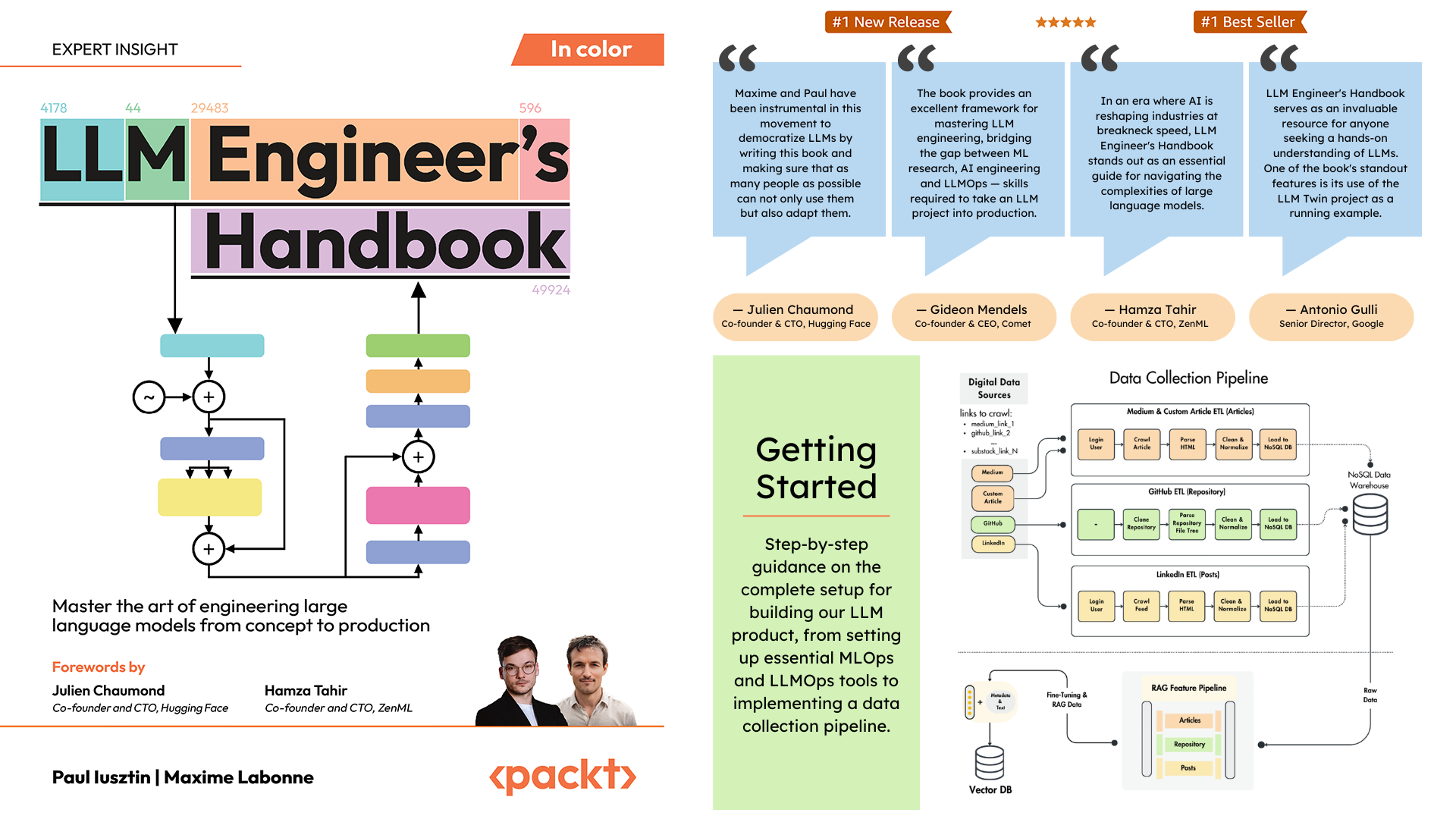

Our LLM Engineer’s Handbook inspired the open-source LLM Twin course.

Consider supporting our work by getting our book to learn a complete framework for building and deploying production LLM & RAG systems — from data to deployment.

Perfect for practitioners who want both theory and hands-on expertise by connecting the dots between DE, research, MLE and MLOps: