📝 Paper

🚀 Ask questions or discuss ideas on GitHub · WeChat

🔥 Dataset download links and tutorials are being updated. Please stay tuned!

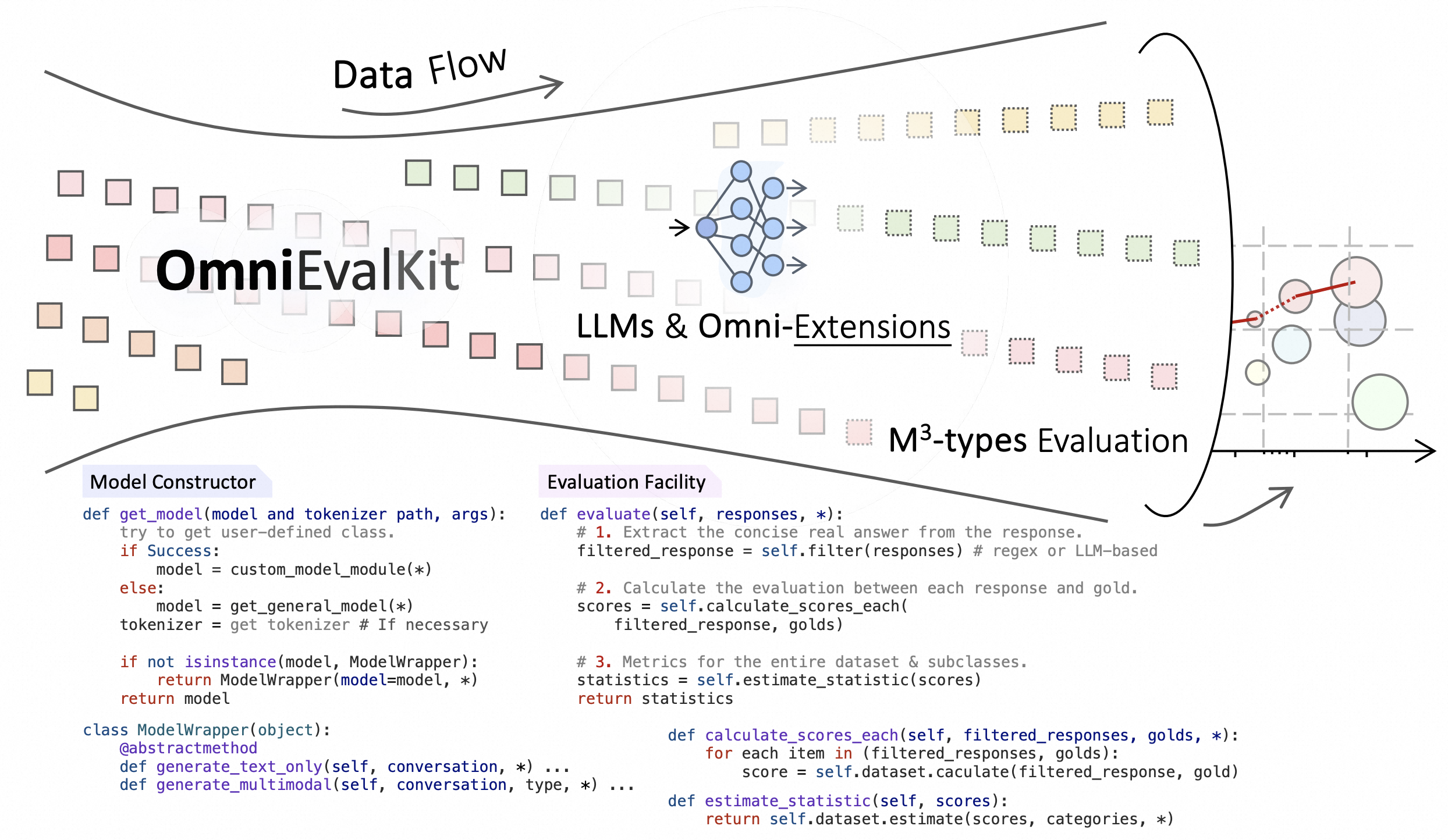

AIDC retains the licenses for this project, and this repository is a frequently updated version of it. If you find OmniEvalKit useful, please cite our work.

| Model | arc_challenge_25 | arc_easy_25 | bbh_3 | boolq | cmmlu | mmlu_5 | openbookqa | anli | cola | copa | glue | hellaswag_10 | aclue | eq_bench (*) | piqa | truthfulqa_mc2 | logieval | mathqa | hellaswag_multilingual_sampled |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baichuan-7B | 43.52 | 74.87 | 15.05 | 70.09 | 41.53 | 40.06 | 38.35 | 35.88 | 69.20 | 85.00 | 39.16 | 70.86 | 31.55 | 1.17 | 76.54 | 34.89 | 21.76 | 27.97 | 33.69 |
| Baichuan2-7B-Chat | 53.58 | 78.58 | 22.96 | 79.39 | 54.65 | 52.64 | 39.76 | 40.59 | 68.72 | 86.00 | 46.31 | 74.11 | 43.72 | 100 | 74.15 | 48.02 | 40.01 | 33.40 | 36.02 |
| Meta-Llama-3-8B-Instruct | 62.12 | 85.52 | 11.96 | 83.15 | 51.30 | 65.59 | 43.37 | 46.44 | 69.20 | 88.00 | 45.00 | 78.77 | 39.39 | 100 | 78.65 | 51.63 | 49.68 | 41.81 | 46.79 |
| Meta-Llama-3.1-8B-Instruct | 60.58 | 85.82 | 15.34 | 83.85 | 55.59 | 67.68 | 43.57 | 46.44 | 69.20 | 92.00 | 44.66 | 80.13 | 41.82 | 100 | 81.03 | 54.02 | 24.05 | 39.46 | 49.86 |
| Mistral-7B-OpenOrca | 62.63 | 87.12 | 15.05 | 86.70 | 42.56 | 60.67 | 45.78 | 50.72 | 69.20 | 90.00 | 59.64 | 83.54 | 37.86 | 91.23 | 82.97 | 52.26 | 22.07 | 36.38 | 45.44 |
| Phi-3-mini-128k-instruct | 63.31 | 86.32 | 35.73 | 85.72 | 39.88 | 68.68 | 45.58 | 49.75 | 68.91 | 88.00 | 52.00 | 80.30 | 35.10 | 99.42 | 79.98 | 53.70 | 52.93 | 39.10 | 36.65 |
| Phi-3-mini-4k-instruct | 63.31 | 87.25 | 40.00 | 86.27 | 40.35 | 69.28 | 46.59 | 52.50 | 69.01 | 89.00 | 63.12 | 80.64 | 36.11 | 100 | 80.03 | 57.75 | 51.46 | 39.80 | 36.79 |
| Phi-3-small-128k-instruct | 71.08 | 89.65 | 47.11 | 87.55 | 46.19 | 75.90 | 51.20 | 47.62 | 72.86 | 90.00 | 67.30 | 85.87 | 38.24 | 100 | 81.42 | 64.62 | 60.18 | 40.13 | 44.34 |
| Phi-3-small-8k-instruct | 70.82 | 89.02 | 49.99 | 87.80 | 49.91 | 75.74 | 51.41 | 52.31 | 72.47 | 89.00 | 68.55 | 84.32 | 39.73 | 100 | 81.81 | 64.29 | 59.73 | 44.49 | 44.24 |
| Qwen-1_8B | 38.05 | 68.14 | 15.09 | 65.93 | 54.59 | 43.58 | 33.73 | 33.00 | 69.20 | 80.00 | 40.97 | 60.83 | 32.42 | 42.69 | 73.10 | 38.09 | 22.58 | 28.01 | 30.62 |
| Qwen-1_8B-Chat | 38.82 | 67.51 | 12.66 | 67.31 | 56.65 | 42.29 | 35.94 | 35.72 | 69.20 | 74.00 | 40.44 | 56.23 | 41.46 | 51.46 | 71.66 | 41.66 | 29.77 | 29.18 | 29.86 |
| Qwen-7B | 51.79 | 80.13 | 15.06 | 68.01 | 62.71 | 57.00 | 44.98 | 37.44 | 66.41 | 87.00 | 65.60 | 78.32 | 49.86 | 51.46 | 77.81 | 47.91 | 23.66 | 34.57 | 39.20 |
| Qwen-7B-Chat | 51.71 | 81.36 | 25.80 | 79.57 | 60.61 | 56.60 | 45.38 | 44.78 | 69.20 | 83.00 | 67.83 | 76.58 | 51.31 | 94.15 | 77.98 | 51.55 | 42.30 | 33.27 | 38.73 |
| Qwen1.5-0.5B | 31.74 | 61.83 | 3.17 | 50.03 | 47.17 | 38.10 | 31.93 | 32.22 | 69.20 | 75.00 | 39.73 | 49.24 | 32.34 | 86.55 | 68.89 | 38.24 | 33.78 | 26.37 | 29.52 |
| Qwen1.5-0.5B-Chat | 30.03 | 59.13 | 12.38 | 39.88 | 35.29 | 32.06 | 31.53 | 33.53 | 69.20 | 72.00 | 37.40 | 44.58 | 28.80 | 52.05 | 66.33 | 42.94 | 27.42 | 24.76 | 28.53 |
| Qwen1.5-1.8B | 37.80 | 67.38 | 6.40 | 66.54 | 59.15 | 44.72 | 34.34 | 33.62 | 69.20 | 78.00 | 39.77 | 61.67 | 40.05 | 98.25 | 74.15 | 39.33 | 41.92 | 29.68 | 31.24 |
| Qwen1.5-1.8B-Chat | 39.68 | 68.94 | 13.41 | 69.91 | 57.62 | 44.10 | 36.14 | 34.09 | 69.20 | 79.00 | 58.73 | 60.36 | 43.92 | 68.42 | 72.88 | 40.57 | 35.56 | 30.15 | 30.90 |
| Qwen1.5-4B | 48.04 | 76.85 | 14.21 | 77.83 | 68.36 | 54.53 | 39.56 | 40.62 | 59.96 | 84.00 | 43.21 | 71.43 | 48.37 | 100 | 77.04 | 47.22 | 44.97 | 32.36 | 36.02 |
| Qwen1.5-4B-Chat | 43.26 | 74.45 | 22.01 | 79.48 | 61.82 | 53.68 | 36.75 | 37.38 | 69.20 | 82.00 | 38.75 | 69.67 | 47.00 | 97.08 | 75.87 | 44.74 | 43.77 | 32.63 | 35.08 |
| Qwen1.5-7B | 54.86 | 81.06 | 8.52 | 82.48 | 72.68 | 59.62 | 41.77 | 46.97 | 72.67 | 87.00 | 63.71 | 78.45 | 52.94 | 100 | 79.26 | 51.09 | 52.10 | 37.42 | 40.82 |
| Qwen1.5-7B-Chat | 56.57 | 82.37 | 13.35 | 83.85 | 69.71 | 60.29 | 42.77 | 50.03 | 69.10 | 86.00 | 57.16 | 78.64 | 53.42 | 94.74 | 75.93 | 53.54 | 53.31 | 38.73 | 40.48 |
| Qwen2-0.5B | 31.48 | 62.21 | 14.51 | 60.46 | 55.34 | 43.15 | 33.13 | 31.84 | 67.56 | 71.00 | 40.45 | 49.09 | 33.97 | 99.42 | 69.05 | 39.64 | 37.53 | 26.57 | 30.40 |
| Qwen2-0.5B-Instruct | 31.66 | 62.37 | 9.08 | 63.61 | 53.57 | 43.34 | 33.94 | 33.53 | 62.75 | 74.00 | 39.73 | 49.55 | 34.55 | 80.12 | 69.83 | 39.44 | 30.98 | 26.60 | 31.04 |
| Qwen2-1.5B | 44.11 | 73.27 | 13.05 | 72.45 | 70.97 | 54.86 | 36.55 | 35.88 | 69.20 | 81.00 | 49.99 | 66.99 | 47.89 | 96.49 | 75.32 | 46.06 | 47.01 | 31.26 | 35.90 |
| Qwen2-1.5B-Instruct | 42.83 | 72.81 | 11.02 | 76.51 | 69.95 | 55.33 | 37.15 | 38.38 | 69.20 | 82.00 | 52.87 | 67.35 | 47.62 | 98.83 | 75.82 | 43.25 | 43.32 | 32.96 | 36.14 |
| Qwen2-7B | 60.32 | 85.56 | 39.97 | 84.92 | 83.39 | 70.23 | 44.58 | 45.59 | 69.49 | 88.00 | 67.19 | 80.71 | 59.18 | 100 | 81.09 | 54.23 | 60.11 | 42.61 | 44.76 |
| Qwen2-7B-Instruct | 61.43 | 87.04 | 36.61 | 85.02 | 81.07 | 69.13 | 44.98 | 52.34 | 68.91 | 89.00 | 70.39 | 80.80 | 59.75 | 100 | 80.03 | 55.49 | 57.70 | 42.21 | 46.77 |
| RedPajama-INCITE-Chat-3B-v1 | 42.83 | 72.52 | 3.66 | 67.28 | 25.45 | 27.56 | 39.76 | 35.88 | 69.20 | 83.00 | 38.48 | 67.85 | 25.51 | 56.73 | 75.65 | 34.45 | 25.38 | 24.79 | 32.05 |
| Yi-1.5-6B | 57.08 | 83.04 | 37.83 | 80.24 | 70.41 | 62.44 | 41.77 | 42.00 | 68.43 | 85.00 | 45.60 | 77.97 | 50.50 | 100 | 80.09 | 44.03 | 54.07 | 41.37 | 36.47 |
| Yi-1.5-6B-Chat | 61.69 | 84.68 | 36.63 | 84.25 | 73.06 | 62.87 | 43.17 | 46.69 | 69.20 | 89.00 | 69.17 | 79.09 | 53.99 | 97.66 | 79.87 | 51.81 | 57.00 | 41.91 | 36.51 |
| Yi-1.5-9B | 61.86 | 85.69 | 48.99 | 85.75 | 74.37 | 67.35 | 45.18 | 47.78 | 69.01 | 90.00 | 42.72 | 80.49 | 55.86 | 64.33 | 80.92 | 46.74 | 59.29 | 44.32 | 40.66 |
| Yi-1.5-9B-Chat | 63.23 | 85.27 | 45.39 | 86.36 | 76.07 | 69.33 | 43.98 | 51.19 | 69.20 | 90.00 | 68.39 | 80.59 | 58.10 | 99.42 | 81.31 | 52.31 | 64.82 | 43.18 | 39.64 |
| Yi-6B | 55.80 | 81.94 | 30.13 | 74.89 | 74.13 | 62.63 | 41.77 | 40.88 | 69.39 | 84.00 | 44.71 | 76.66 | 52.01 | 98.83 | 78.65 | 41.86 | 53.94 | 34.07 | 35.88 |
| Yi-6B-Chat | 57.51 | 82.62 | 20.82 | 83.03 | 74.47 | 63.03 | 42.97 | 41.84 | 69.20 | 84.00 | 43.27 | 78.54 | 53.93 | 98.25 | 76.10 | 49.98 | 23.35 | 33.70 | 35.96 |
| Yi-9B | 61.01 | 84.30 | 43.44 | 85.69 | 73.04 | 67.47 | 42.17 | 48.06 | 69.20 | 86.00 | 42.76 | 78.94 | 55.62 | 100 | 79.31 | 42.42 | 59.73 | 38.99 | 40.04 |
| bloom-3b | 35.32 | 63.51 | 29.37 | 61.62 | 25.31 | 28.28 | 32.33 | 33.31 | 69.20 | 75.00 | 38.24 | 54.64 | 24.95 | 2.92 | 70.11 | 40.57 | 24.11 | 24.92 | 33.57 |
| bloom-7b1 | 39.76 | 69.61 | 20.23 | 62.81 | 25.18 | 27.93 | 35.94 | 33.53 | 69.20 | 73.00 | 38.58 | 62.07 | 25.45 | 2.34 | 73.32 | 38.89 | 25.57 | 25.53 | 35.98 |
| bloomz-3b | 35.41 | 62.46 | 11.90 | 93.30 | 39.01 | 33.31 | 38.96 | 38.59 | 69.01 | 79.00 | 69.85 | 55.15 | 33.02 | 26.90 | 74.32 | 40.35 | 36.13 | 25.13 | 32.21 |
| bloomz-7b1 | 42.66 | 69.53 | 45.50 | 91.68 | 43.40 | 36.65 | 42.17 | 39.44 | 69.20 | 80.00 | 74.47 | 63.00 | 35.12 | 8.19 | 77.32 | 45.22 | 37.21 | 27.00 | 35.24 |
| chatglm2-6b | 22.18 | 31.69 | 18.60 | 40.18 | 25.41 | 26.46 | 27.31 | 33.06 | 53.03 | 55.00 | 38.01 | 28.23 | 24.49 | 97.66 | 52.30 | 50.19 | 39.50 | 20.10 | 25.32 |
| chatglm3-6b | 42.41 | 68.69 | 68.65 | 78.65 | 54.09 | 50.90 | 36.75 | 40.41 | 69.30 | 80.00 | 46.18 | 66.93 | 44.10 | 4.09 | 72.05 | 49.90 | 0.06 | 30.08 | 31.47 |
| chatglm3-6b-base | 57.85 | 83.16 | 26.91 | 86.48 | 67.10 | 61.60 | 43.37 | 46.72 | 60.54 | 87.00 | 75.21 | 81.01 | 51.27 | 0 | 81.31 | 42.73 | 51.21 | 33.97 | 38.84 |
| deepseek-llm-7b-base | 51.79 | 80.72 | 27.43 | 72.29 | 41.94 | 46.84 | 43.78 | 36.47 | 69.20 | 84.00 | 40.44 | 78.59 | 31.17 | 15.79 | 79.48 | 35.00 | 33.78 | 29.51 | 40.30 |
| deepseek-llm-7b-chat | 54.69 | 82.66 | 6.24 | 83.24 | 47.96 | 51.41 | 45.78 | 41.84 | 69.20 | 85.00 | 45.38 | 79.61 | 39.23 | 100 | 80.37 | 47.88 | 39.25 | 31.46 | 41.02 |
| glm-4-9b | 66.81 | 87.84 | 25.93 | 86.88 | 74.25 | 74.96 | 45.58 | 47.34 | 69.20 | 89.00 | 44.96 | 84.41 | 56.83 | 0 | 80.92 | 43.63 | 66.60 | 42.45 | 51.73 |
| glm-4-9b-chat | 66.13 | 86.95 | 23.05 | 87.00 | 72.06 | 69.28 | 46.99 | 47.94 | 69.20 | 84.00 | 42.90 | 81.99 | 56.99 | 100 | 81.53 | 59.32 | 59.41 | 43.15 | 50.60 |
| internlm-chat-7b | 47.44 | 76.52 | 13.02 | 68.56 | 52.65 | 51.96 | 40.36 | 37.84 | 69.01 | 82.00 | 68.06 | 75.67 | 48.49 | 95.91 | 76.21 | 47.03 | 41.79 | 33.13 | 32.35 |
| internlm2-1_8b | 42.06 | 72.14 | 65.54 | 69.39 | 44.56 | 43.18 | 36.14 | 34.75 | 69.20 | 78.00 | 43.23 | 62.15 | 34.88 | 89.47 | 75.93 | 39.91 | 39.25 | 29.15 | 30.50 |
| internlm2-7b | 56.83 | 84.47 | 61.16 | 86.61 | 65.30 | 63.15 | 43.57 | 50.72 | 69.20 | 91.00 | 47.53 | 81.16 | 52.98 | 100 | 81.92 | 51.15 | 55.60 | 36.55 | 41.95 |
| internlm2-chat-1_8b | 43.00 | 72.47 | 37.52 | 79.24 | 46.63 | 45.23 | 36.14 | 41.78 | 68.82 | 78.00 | 62.34 | 60.55 | 42.97 | 89.47 | 75.49 | 42.15 | 39.12 | 29.38 | 29.74 |
| internlm2-chat-7b | 58.79 | 84.68 | 40.83 | 83.94 | 61.67 | 62.17 | 44.18 | 50.56 | 69.20 | 93.00 | 72.75 | 79.86 | 51.87 | 99.42 | 80.03 | 57.45 | 51.78 | 38.93 | 41.51 |
| internlm2_5-7b | 58.36 | 84.47 | 57.45 | 84.77 | 78.89 | 68.81 | 44.78 | 50.72 | 69.20 | 84.00 | 47.75 | 79.66 | 57.07 | 100 | 80.81 | 48.99 | 62.85 | 38.19 | 39.16 |
| internlm2_5-7b-chat | 60.75 | 84.97 | 52.97 | 88.65 | 78.64 | 69.88 | 43.17 | 50.75 | 69.20 | 88.00 | 46.38 | 80.83 | 57.05 | 100 | 81.48 | 54.56 | 62.09 | 42.31 | 40.02 |
| mistral-7b-sft-alpha | 59.39 | 84.60 | 35.31 | 85.50 | 39.80 | 59.84 | 42.57 | 38.94 | 69.20 | 93.00 | 41.90 | 82.55 | 32.50 | 100 | 82.25 | 43.59 | 48.92 | 35.75 | 44.86 |
| mistral-7b-sft-beta | 58.11 | 84.85 | 15.05 | 85.11 | 38.77 | 58.92 | 42.17 | 39.84 | 69.20 | 90.00 | 41.82 | 82.17 | 30.59 | 100 | 82.09 | 43.01 | 23.60 | 35.98 | 44.80 |
| phi-1 | 20.65 | 38.72 | 14.59 | 45.29 | 25.10 | 25.84 | 25.10 | 30.22 | 69.20 | 53.00 | 39.45 | 30.64 | 24.97 | 4.68 | 56.02 | 41.59 | 23.03 | 24.56 | 28.67 |
| phi-1_5 | 54.01 | 80.56 | 17.38 | 75.02 | 25.96 | 41.68 | 48.19 | 31.53 | 69.20 | 79.00 | 43.95 | 64.08 | 24.93 | 97.66 | 75.65 | 40.87 | 35.94 | 29.98 | 28.41 |
| vicuna-7b-v1.5 | 53.92 | 81.61 | 29.12 | 80.92 | 35.15 | 50.04 | 45.18 | 38.59 | 69.20 | 86.00 | 39.96 | 77.44 | 29.64 | 100 | 77.98 | 50.36 | 42.24 | 27.40 | 40.66 |
| zephyr-7b-alpha | 61.86 | 85.48 | 15.05 | 85.57 | 42.12 | 59.79 | 43.78 | 44.78 | 69.20 | 94.00 | 52.83 | 83.99 | 33.85 | 98.83 | 82.75 | 56.05 | 21.69 | 35.21 | 45.38 |
| zephyr-7b-beta | 63.99 | 85.14 | 15.05 | 84.86 | 40.83 | 59.59 | 43.17 | 42.78 | 69.49 | 90.00 | 48.77 | 84.28 | 33.57 | 98.25 | 81.42 | 55.13 | 21.76 | 35.91 | 46.02 |
| zephyr-7b-gemma-sft-v0.1 | 54.61 | 83.50 | 9.67 | 78.13 | 38.21 | 57.78 | 36.55 | 43.22 | 68.62 | 88.00 | 49.60 | 81.53 | 30.39 | 93.57 | 77.20 | 45.91 | 41.22 | 34.97 | 44.80 |
| zephyr-7b-gemma-v0.1 | 60.07 | 84.26 | 14.51 | 79.69 | 40.08 | 58.46 | 38.76 | 44.44 | 69.10 | 85.00 | 52.36 | 83.28 | 31.94 | 83.63 | 77.98 | 52.16 | 44.53 | 36.58 | 46.63 |

| Model | ai2d_test | ccbench | hallusionbench (*) | mathvista_mini | mmbench_dev_cn | mmbench_dev_en | mme (*) | mmmu_val | mmstar | ocrbench (*) | realworldqa | scienceqa_test | scienceqa_val | seedbench_img |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bunny-Llama-3-8B-V | 63.70 | 28.04 | 59.41 | 25.20 | 77.11 | 81.64 | 1843.27 | 42.65 | 44.60 | 46 | 56.21 | 80.52 | 78.54 | 72.38 |
| MiniCPM-Llama3-V-2_5 | 77.33 | 36.08 | 57.94 | 46.00 | 72.56 | 75.72 | 1957.39 | 43.10 | 47.80 | 688 | 55.29 | 79.18 | 77.59 | 61.18 |
| MiniCPM-V | 55.76 | 41.57 | 58.99 | 31.60 | 75.95 | 76.95 | 1606.17 | 38.91 | 39.27 | 332 | 52.29 | 77.05 | 73.39 | 65.41 |
| MiniCPM-V-2 | 65.03 | 46.47 | 56.99 | 37.50 | 77.62 | 78.84 | 1737.47 | 36.76 | 39.80 | 589 | 55.82 | 80.07 | 75.92 | 66.69 |
| MiniCPM-V-2_6 | 73.06 | 50.00 | 65.72 | 47.90 | 82.21 | 83.95 | 2221.44 | 41.29 | 51.67 | 782 | 62.88 | 87.31 | 87.12 | 72.84 |
| Ovis-Clip-Llama3-8B | 67.00 | 24.51 | 61.51 | 32.00 | 78.05 | 79.12 | 1911.55 | 43.44 | 45.93 | 439 | 56.47 | 77.14 | 77.59 | 67.61 |
| Ovis-Clip-Qwen1_5-7B | 66.09 | 36.27 | 53.84 | 29.70 | 79.23 | 78.86 | 1698.96 | 36.31 | 44.13 | 458 | 59.08 | 82.75 | 80.83 | 69.53 |
| Phi-3-vision-128k-instruct | 78.40 | 25.10 | 63.20 | 38.30 | 71.06 | 80.20 | 1655.56 | 41.86 | 47.53 | 646 | 59.48 | 90.03 | 90.13 | 70.38 |
| Phi-3.5-vision-instruct | 77.59 | 27.06 | 64.67 | 39.50 | 74.31 | 81.50 | 1803.41 | 43.21 | 47.60 | 566 | 53.59 | 88.94 | 90.51 | 69.05 |
| Qwen-VL | 45.34 | 38.24 | 38.38 | 18.50 | 67.61 | 68.70 | 1328.74 | 31.11 | 33.20 | 334 | 40.26 | 60.98 | 57.94 | 51.03 |
| Qwen-VL-Chat | 63.50 | 44.31 | 55.52 | 29.30 | 73.62 | 72.46 | 1794.98 | 36.31 | 36.00 | 483 | 52.03 | 65.44 | 62.57 | 63.81 |
| Yi-VL-6B | 59.72 | 40.98 | 56.99 | 28.30 | 78.17 | 78.24 | 1881.30 | 38.35 | 37.60 | 333 | 52.29 | 71.89 | 70.62 | 66.84 |
| deepseek-vl-1.3b-chat | 51.33 | 37.45 | 49.63 | 28.60 | 70.62 | 73.27 | 1566.30 | 30.66 | 37.47 | 422 | 50.07 | 68.07 | 63.66 | 65.60 |
| deepseek-vl-7b-chat | 65.51 | 50.00 | 54.68 | 29.60 | 79.51 | 80.27 | 1759.84 | 36.54 | 40.47 | 471 | 54.90 | 81.41 | 76.39 | 69.64 |
| internlm-xcomposer2-7b | 62.24 | 54.31 | 61.62 | 22.00 | 77.66 | 70.11 | 1667.69 | 35.63 | 36.33 | 0.20 | 49.93 | 82.85 | 80.93 | 61.65 |
| internlm-xcomposer2-vl-1_8b | 70.34 | 38.43 | 62.67 | 41.10 | 74.41 | 77.41 | 1798.19 | 32.13 | 47.33 | 458 | 54.77 | 91.77 | 90.80 | 69.77 |
| internlm-xcomposer2-vl-7b | 79.44 | 37.45 | 66.25 | 44.80 | 82.12 | 84.25 | 2201.49 | 40.95 | 54.07 | 509 | 65.62 | 95.49 | 95.33 | 74.77 |
| llava-v1.5-7b | 53.92 | 25.10 | 53.52 | 25.80 | 69.99 | 73.48 | 1768.77 | 36.99 | 32.80 | 305 | 53.73 | 67.53 | 65.19 | 65.49 |

This project is licensed under the Apache License 2.0 (http://www.apache.org/licenses/LICENSE-2.0, SPDX-License-Identifier: Apache-2.0). As a special exception, the ARC dataset is licensed under CC BY-SA 4.0 (https://creativecommons.org/licenses/by-sa/4.0/legalcode, SPDX-License-Identifier: CC-BY-SA-4.0).

We have made reasonable efforts to ensure the integrity of the data in this project. However, due to the complexity of the data, we cannot guarantee that the datasets are completely free of copyright issues, personal information, or content that is improper under applicable laws and regulations. If you believe anything in this project infringes on your rights or contains or generates improper content, please contact us and we will promptly address the matter. Users of this project are solely responsible for ensuring their compliance with applicable laws, such as data protection laws, including but not limited to anonymizing or removing any sensitive data before use in production environments.