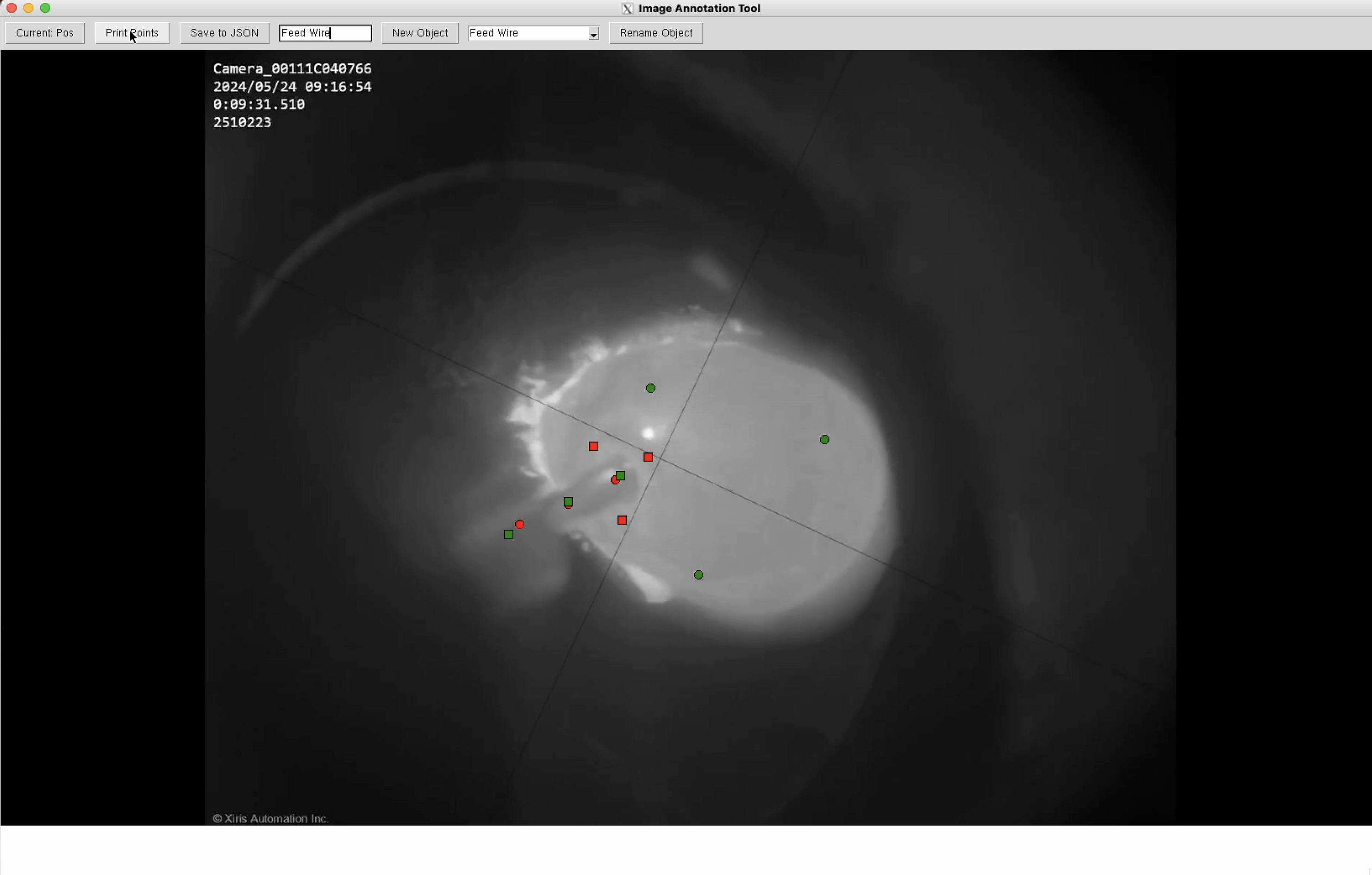

SAM2 (Segment Anything Model 2), released by Meta AI, is a deep learning framework for video segmentation. Given a set of input point labels on an initial frame, the model generates a segmentation mask for each labeled object and then propagates those masks through the remaining frames of the video. For a more in-depth discussion of the mechanisms behind SAM2 and its architecture, see the SAM2 paper.
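As a rough illustration of the prompt format, the sketch below groups clicks made on the initial frame by object and turns them into the coordinate/label pairs that point-prompted SAM models take. Each point is an `(x, y)` pixel coordinate, and each label is 1 for a foreground click or 0 for a background click. The `Click` type and `build_prompts` helper are hypothetical names for this example, not part of SAM2 or this repo. Before being passed to SAM2's video predictor, the lists would typically be converted to NumPy arrays (`float32` for points, `int32` for labels).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Click:
    """A single click on the initial frame (hypothetical helper type)."""
    obj_id: int       # which object this click belongs to
    x: float          # column, in pixels
    y: float          # row, in pixels
    positive: bool    # True = inside the object, False = background


def build_prompts(clicks):
    """Group clicks by object id into (points, labels) pairs.

    Points are [x, y] pairs; labels follow the SAM convention of
    1 for a foreground click and 0 for a background click.
    """
    grouped = defaultdict(lambda: ([], []))
    for c in clicks:
        points, labels = grouped[c.obj_id]
        points.append([float(c.x), float(c.y)])
        labels.append(1 if c.positive else 0)
    # Sort by object id so the output order is deterministic.
    return {obj_id: grouped[obj_id] for obj_id in sorted(grouped)}


if __name__ == "__main__":
    clicks = [
        Click(obj_id=1, x=210, y=350, positive=True),
        Click(obj_id=1, x=250, y=220, positive=False),
        Click(obj_id=2, x=400, y=150, positive=True),
    ]
    for obj_id, (points, labels) in build_prompts(clicks).items():
        print(obj_id, points, labels)
```

Keeping one `(points, labels)` pair per object id matches the idea of labeling each object separately on the first frame before the masks are propagated.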

Because SAM2 is a powerful, state-of-the-art tool for video segmentation, this repo wraps it in a streamlined pipeline that handles splitting the video into frames, generating the input labels, and creating the output segmentation video.
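The frame-splitting and video-creation steps can both be delegated to `ffmpeg`. The sketch below builds the command lines and wraps them in `subprocess.run`. The helper names, the zero-indexed `%05d.jpg` frame naming, the JPEG quality setting, the 30 fps default, and the H.264 output settings are all assumptions for illustration, not necessarily what `MeltSeg/main.py` does. Numbered JPEG files in a single directory are the frame layout SAM2's video examples load from.

```python
import subprocess
from pathlib import Path


def split_frames_cmd(video, frames_dir):
    """Build an ffmpeg command that writes every frame of `video` as
    frames_dir/00000.jpg, 00001.jpg, ... (numbering starts at 0)."""
    pattern = str(Path(frames_dir) / "%05d.jpg")
    return ["ffmpeg", "-y", "-i", str(video),
            "-q:v", "2",              # high JPEG quality (lower is better)
            "-start_number", "0", pattern]


def join_frames_cmd(frames_dir, output, fps=30, ext="jpg"):
    """Build an ffmpeg command that encodes numbered frames into an H.264 video."""
    pattern = str(Path(frames_dir) / f"%05d.{ext}")
    return ["ffmpeg", "-y",
            "-framerate", str(fps),   # frame rate of the image sequence
            "-i", pattern,
            "-c:v", "libx264",
            "-pix_fmt", "yuv420p",    # pixel format most players accept
            str(output)]


def split_frames(video, frames_dir):
    """Create the output folder (ffmpeg will not) and extract the frames."""
    Path(frames_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(split_frames_cmd(video, frames_dir), check=True)


def join_frames(frames_dir, output, fps=30, ext="jpg"):
    """Encode the (e.g. mask-overlaid) frames back into a video."""
    subprocess.run(join_frames_cmd(frames_dir, output, fps, ext), check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; file names are placeholders.
    print(" ".join(split_frames_cmd("weld.mp4", "frames")))
    print(" ".join(join_frames_cmd("overlay_frames", "segmented.mp4")))
```

To keep the output's timing identical to the input, `fps` should match the source video's frame rate, which `ffprobe` can report. Note that `libx264` with `yuv420p` requires an even frame width and height.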

Although it was designed specifically for welding videos, this package can also be used for other video domains; some preprocessing may be required depending on the input video.

- Create a `conda` environment
- Install `python>=3.10` and `torch>=2.3.1`
- Clone the SAM2 GitHub repo
- Follow the installation instructions within SAM2's `README.md`
- Ensure `ffmpeg` is installed
- Reference `requirements.txt` for the remaining package requirements
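Before running the pipeline, a quick check along these lines can confirm the Python, PyTorch, and `ffmpeg` requirements listed above. This is a hypothetical helper (`check_environment` and `version_tuple` are not part of this repo). It compares only the numeric release part of each version string, so local tags such as `+cu121` are ignored, and it looks for `ffmpeg` on `PATH` with `shutil.which`. It does not verify the SAM2 install or the packages in `requirements.txt`.

```python
import re
import shutil
import sys


def version_tuple(version):
    """Turn '2.3.1+cu121' or '2.4.0.dev20240601' into (2, 3, 1) / (2, 4, 0)."""
    release = version.split("+")[0]                      # drop local tag like +cu121
    nums = [int(n) for n in re.findall(r"\d+", release)[:3]]
    return tuple(nums + [0] * (3 - len(nums)))           # pad '2.3' to (2, 3, 0)


def check_environment(torch_version=None):
    """Return a list of problems; an empty list means the checks passed.

    `torch_version` can be passed explicitly (e.g. for testing);
    otherwise the installed torch is imported and inspected.
    """
    problems = []
    if sys.version_info < (3, 10):
        problems.append(f"Python >= 3.10 required, found {sys.version.split()[0]}")
    if torch_version is None:
        try:
            import torch
            torch_version = torch.__version__
        except ImportError:
            problems.append("torch is not installed")
    if torch_version is not None and version_tuple(torch_version) < (2, 3, 1):
        problems.append(f"torch >= 2.3.1 required, found {torch_version}")
    if shutil.which("ffmpeg") is None:
        problems.append("ffmpeg was not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("-", issue)
    print("environment looks OK" if not issues else f"{len(issues)} problem(s) found")
```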

- Run from the repo root: `python3 MeltSeg/main.py`