The official implementation of GenPose++, as presented in Omni6DPose (ECCV 2024).

- 2024.08.10: GenPose++ is released! 🎉

- 2024.08.01: Omni6DPose dataset and API are released! 🎉

- 2024.07.01: Omni6DPose has been accepted by ECCV 2024! 🎉

Release checklist:

- Release the Omni6DPose dataset.

- Release the Omni6DPose API.

- Release the GenPose++ and pretrained models.

- Release a convenient version of GenPose++ with SAM for the downstream tasks.

Tested environment:

- Ubuntu 20.04

- Python 3.10.14

- Pytorch 2.1.0

- CUDA 11.8

- 1 × NVIDIA RTX 4090

conda create -n genpose2 python==3.10.14

conda activate genpose2

conda install cudatoolkit=11

pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

cd networks/pts_encoder/pointnet2_utils/pointnet2

python setup.py install

We provide cutoop, a convenient toolkit for the Omni6DPose dataset, which can be installed in two ways. Detailed installation instructions can be found in the Omni6DPoseAPI; here we give the commands for installing it with the pip package manager.

sudo apt-get install openexr

pip install cutoop
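After running the commands above, a quick sanity check can catch version mismatches before training. The sketch below is not part of the repository: it compares installed package versions against the ones pinned in this README using only the Python standard library, and it assumes cutoop is importable under the module name `cutoop`.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from importlib.util import find_spec

# Versions pinned in this README's tested environment and pip command.
EXPECTED = {
    "torch": "2.1.0",
    "torchvision": "0.16.0",
    "torchaudio": "2.1.0",
}


def check_environment(expected=EXPECTED, python=(3, 10)):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if tuple(sys.version_info[:2]) != tuple(python):
        problems.append(
            f"python {sys.version_info.major}.{sys.version_info.minor} "
            f"!= {python[0]}.{python[1]}"
        )
    for pkg, want in expected.items():
        try:
            have = version(pkg)
        except PackageNotFoundError:
            problems.append(f"{pkg} is not installed")
            continue
        # CUDA wheels carry a local tag such as "2.1.0+cu118"; compare the release part only.
        if have.split("+")[0] != want:
            problems.append(f"{pkg} {have} != {want}")
    # Assumption: cutoop (installed via `pip install cutoop`) imports as `cutoop`.
    if find_spec("cutoop") is None:
        problems.append("cutoop is not importable")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "environment matches the tested setup")
```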

Download and organize the Omni6DPose dataset by following the instructions on the Omni6DPoseAPI page. Note that the PAM dataset and the files depth_1.zip, coord.zip, and ir.zip from the SOPE dataset are not required for GenPose++, so you may skip downloading them to save disk space.
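If you script the download, the note above amounts to a simple filter. This is a hypothetical helper, not part of the Omni6DPose API: it assumes each archive path contains its dataset name (`PAM` or `SOPE`) as a directory component, and the example paths are made up for illustration.

```python
from pathlib import PurePosixPath

# Per this README, these SOPE archives are not needed by GenPose++.
SKIPPED_SOPE_ARCHIVES = {"depth_1.zip", "coord.zip", "ir.zip"}


def needed_for_genpose(archive: str) -> bool:
    """Return False for archives GenPose++ does not need.

    Assumption: the dataset name ("PAM", "SOPE", ...) appears as a directory
    component of the archive path.
    """
    path = PurePosixPath(archive)
    if "PAM" in path.parts:
        return False  # the whole PAM dataset can be skipped
    if "SOPE" in path.parts and path.name in SKIPPED_SOPE_ARCHIVES:
        return False
    return True


if __name__ == "__main__":
    # Hypothetical archive list, for illustration only.
    archives = [
        "Omni6DPose/SOPE/scene_0001/rgb.zip",
        "Omni6DPose/SOPE/scene_0001/ir.zip",
        "Omni6DPose/PAM/scene_0001/rgb.zip",
    ]
    print([a for a in archives if needed_for_genpose(a)])
```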

Copy the files from Meta to the $ROOT/configs directory. The files should be organized as follows:

genpose2
└── configs
    ├── obj_meta.json
    ├── real_obj_meta.json
    └── config.py

We provide the trained checkpoints. Please download them to the $ROOT/results directory and organize them as follows:

genpose2
└── results
    └── ckpts
        ├── ScoreNet
        │   └── scorenet.pth
        ├── EnergyNet
        │   └── energynet.pth
        └── ScaleNet
            └── scalenet.pth

Set the parameter --data_path in scripts/train_score.sh, scripts/train_energy.sh, and scripts/train_scale.sh to the path of your SOPE dataset.
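To confirm the config and checkpoint layout before training or evaluation, a short check over the expected paths can help. This is a sketch, not a script shipped with GenPose++; `missing_files` is a hypothetical helper name, and the root argument is whatever `$ROOT` is on your machine.

```python
from pathlib import Path

# Files this README expects under $ROOT (the genpose2 repository root).
REQUIRED_FILES = [
    "configs/obj_meta.json",
    "configs/real_obj_meta.json",
    "configs/config.py",
    "results/ckpts/ScoreNet/scorenet.pth",
    "results/ckpts/EnergyNet/energynet.pth",
    "results/ckpts/ScaleNet/scalenet.pth",
]


def missing_files(root):
    """Return the required paths (relative to root) that are not existing files."""
    root = Path(root)
    return [rel for rel in REQUIRED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    import sys

    # Hypothetical usage: python check_layout.py /path/to/genpose2
    missing = missing_files(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("missing:\n" + "\n".join(missing) if missing else "layout OK")
```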


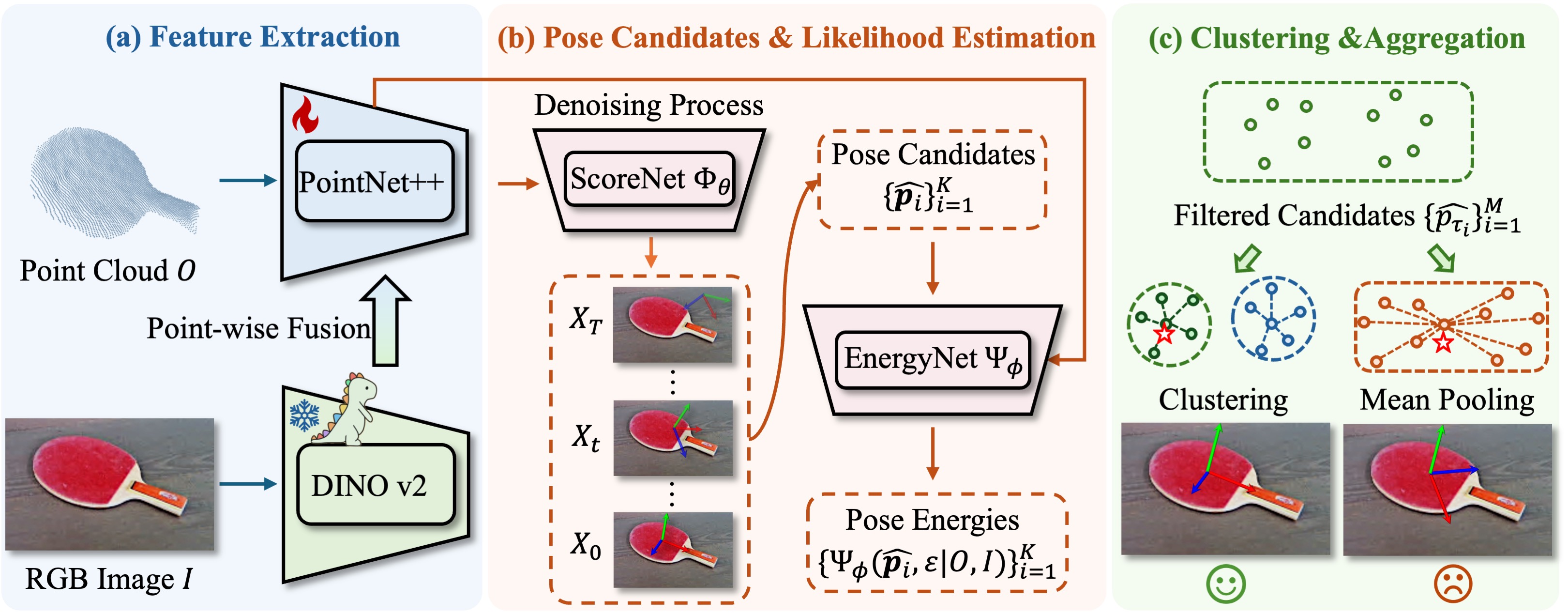

Train the score network to generate the pose candidates.

bash scripts/train_score.sh

Train the energy network to aggregate the pose candidates.

bash scripts/train_energy.sh
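To make "aggregate the pose candidates" concrete, here is one common way to fuse sampled poses once each has an energy score: keep the highest-scoring fraction and average the rest. This is an illustrative sketch under stated assumptions, not the GenPose++ code. It assumes higher energy means a more plausible pose, represents rotations as unit quaternions (w, x, y, z), uses an arbitrary default `keep_ratio`, and approximates the rotation mean by a sign-aligned, renormalized quaternion average, which is reasonable only when the kept rotations are tightly clustered.

```python
import math


def aggregate_candidates(candidates, keep_ratio=0.6):
    """Fuse pose candidates into a single (quaternion, translation) pose.

    candidates: list of (quaternion, translation, energy), quaternion as a unit
    (w, x, y, z) tuple and translation as (x, y, z).
    Assumption: higher energy = more plausible; keep_ratio is an arbitrary default.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    ranked = sorted(candidates, key=lambda c: c[2], reverse=True)
    kept = ranked[: max(1, int(len(ranked) * keep_ratio))]

    # q and -q encode the same rotation, so flip each kept quaternion into the
    # hemisphere of the best-scoring one before summing.
    ref = kept[0][0]
    q_sum = [0.0] * 4
    t_sum = [0.0] * 3
    for q, t, _ in kept:
        sign = 1.0 if sum(a * b for a, b in zip(q, ref)) >= 0 else -1.0
        q_sum = [s + sign * a for s, a in zip(q_sum, q)]
        t_sum = [s + a for s, a in zip(t_sum, t)]

    # Renormalize the summed quaternion (an approximate rotation mean) and
    # take the arithmetic mean of the translations.
    norm = math.sqrt(sum(a * a for a in q_sum))
    q_mean = tuple(a / norm for a in q_sum)
    t_mean = tuple(a / len(kept) for a in t_sum)
    return q_mean, t_mean
```

Filtering before averaging keeps a few low-energy outliers from dragging the fused pose away from the dominant mode.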

Train the scale network to predict the bounding box length.

The scale network uses the features extracted by the score network. If you have trained your own score network, update the parameter --pretrained_score_model_path in scripts/train_scale.sh accordingly.

bash scripts/train_scale.sh

Set the parameter --data_path in scripts/eval_single.sh to the path of your ROPE dataset.

bash scripts/eval_single.sh

Evaluate pose tracking:

bash scripts/eval_tracking.sh

Run inference:

python runners/infer.py

If you find our work useful in your research, please consider citing:

@inproceedings{zhang2024omni6dpose,
  title={Omni6DPose: A Benchmark and Model for Universal 6D Object Pose Estimation and Tracking},
  author={Zhang, Jiyao and Huang, Weiyao and Peng, Bo and Wu, Mingdong and Hu, Fei and Chen, Zijian and Zhao, Bo and Dong, Hao},
  booktitle={European Conference on Computer Vision},
  year={2024},
  organization={Springer}
}

If you have any questions, please feel free to contact us:

Jiyao Zhang: [email protected]

Weiyao Huang: [email protected]

This project is released under the MIT license. See LICENSE for additional details.