Yuzheng Liu* · Siyan Dong* · Shuzhe Wang · Yanchao Yang · Qingnan Fan · Baoquan Chen

SLAM3R is a real-time dense scene reconstruction system that regresses 3D points from video frames using feed-forward neural networks, without explicitly estimating camera parameters.

- Release pre-trained weights and inference code.

- Release Gradio Demo.

- Release evaluation code.

- Release training code and data.

- Clone SLAM3R

```bash
git clone https://github.com/PKU-VCL-3DV/SLAM3R.git
cd SLAM3R
```

- Prepare environment

```bash
conda create -n slam3r python=3.11 cmake=3.14.0
conda activate slam3r
# install torch according to your cuda version
pip install torch==2.5.0 torchvision==0.20.0 torchaudio==2.5.0 --index-url https://download.pytorch.org/whl/cu118
pip install -r requirements.txt
# optional: install additional packages to support visualization
pip install -r requirements_vis.txt
```

- Optional: Accelerate SLAM3R with XFormers and custom CUDA kernels for RoPE

```bash
# install XFormers according to your pytorch version, see https://github.com/facebookresearch/xformers
pip install xformers==0.0.28.post2
# compile cuda kernels for RoPE
cd slam3r/pos_embed/curope/
python setup.py build_ext --inplace
cd ../../../
```

- Download the SLAM3R checkpoints for the Image-to-Points and Local-to-World models from Hugging Face

```python
from huggingface_hub import hf_hub_download

filepath = hf_hub_download(repo_id='siyan824/slam3r_i2p', filename='slam3r_i2p.pth', local_dir='./checkpoints')
filepath = hf_hub_download(repo_id='siyan824/slam3r_l2w', filename='slam3r_l2w.pth', local_dir='./checkpoints')
```

or Google Drive: Image-to-Points model and Local-to-World model. Place them under ./checkpoints/
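Before running the demos, it can help to confirm that both checkpoints are in place and to see whether the optional XFormers package is importable. The following is a minimal sketch, not part of the SLAM3R repository: the helper names `missing_checkpoints` and `package_available` are hypothetical, and the file names are assumed to match the `hf_hub_download` calls above.

```python
import importlib.util
from pathlib import Path

# Assumption: file names match the hf_hub_download calls in this README.
EXPECTED_CHECKPOINTS = ("slam3r_i2p.pth", "slam3r_l2w.pth")


def missing_checkpoints(checkpoint_dir="./checkpoints", names=EXPECTED_CHECKPOINTS):
    """Return the expected checkpoint files that are absent or empty."""
    root = Path(checkpoint_dir)
    missing = []
    for name in names:
        path = root / name
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return missing


def package_available(name):
    """Report whether a top-level package can be imported, without importing it."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    missing = missing_checkpoints()
    print("missing checkpoints:", missing if missing else "none")
    # XFormers is optional according to the installation steps above.
    print("xformers available:", package_available("xformers"))
```

This only checks that the files exist and are non-empty; it does not validate their contents.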

To run our demo on the Replica dataset, download the sample scene here and unzip it to ./data/Replica/. Then run the following command to reconstruct the scene from the video images:

```bash
bash scripts/demo_replica.sh
```

The results will be stored in ./visualization/ by default.

We also provide a set of images extracted from an in-the-wild captured video. Download it here and unzip it to ./data/wild/.

Set the required parameter in this script, and then run SLAM3R with the following command:

```bash
bash scripts/demo_wild.sh
```

When --save_preds is set in the script, the per-frame predictions for reconstruction will be saved at visualization/TEST_NAME/preds/. Then you can visualize the incremental reconstruction process with the following command:

```bash
bash scripts/demo_vis_wild.sh
```

An Open3D window will appear after running the script. Press the space key to record the adjusted rendering view, then close the window. The code will then render the incremental reconstruction.
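If the visualization script finds nothing to render, one thing worth checking is whether the per-frame predictions were actually saved. The sketch below lists the files under visualization/TEST_NAME/preds/, the location stated above. The helper name `list_saved_preds` is hypothetical, and the sketch makes no assumption about the file format of the predictions.

```python
from pathlib import Path


def list_saved_preds(test_name, root="visualization"):
    """Return the sorted files under <root>/<test_name>/preds/, or [] if absent."""
    preds_dir = Path(root) / test_name / "preds"
    if not preds_dir.is_dir():
        return []
    return sorted(p for p in preds_dir.iterdir() if p.is_file())


if __name__ == "__main__":
    # Replace TEST_NAME with the name configured in scripts/demo_wild.sh.
    files = list_saved_preds("TEST_NAME")
    print(f"{len(files)} saved prediction file(s) found")
```

An empty result suggests that --save_preds was not set when the demo ran, or that a different test name was used.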

You can run SLAM3R on your own self-captured video by following the steps above. Here are some tips:

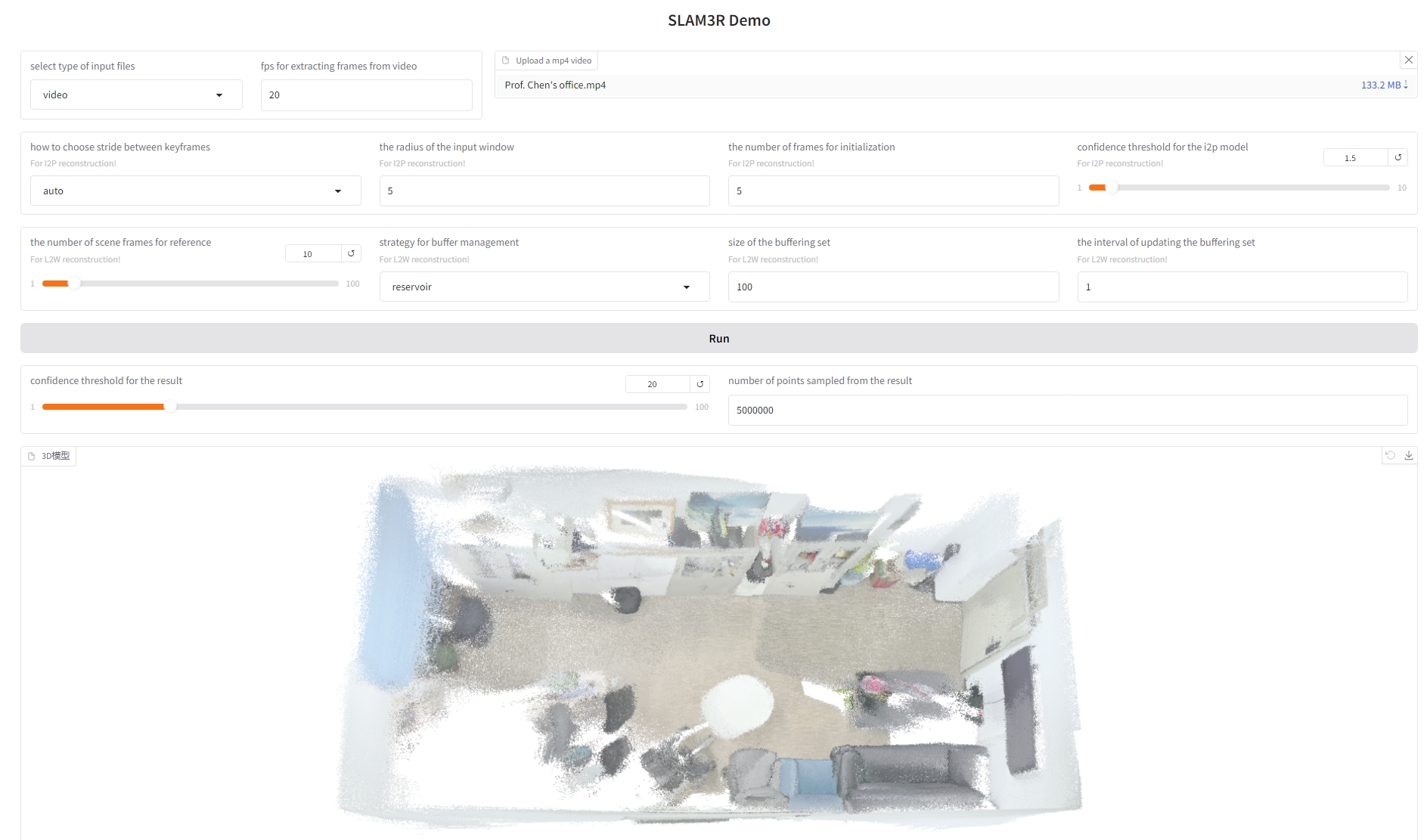
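The wild demo above reads a set of images extracted from a video, so a self-captured video may first need to be split into frames. The following is a minimal sketch of one way to do that with the `ffmpeg` command-line tool, assuming it is installed and on the PATH. The function names, the output directory, the sampling rate of 5 frames per second, and the `frame_%06d.jpg` naming pattern are all illustrative assumptions, not requirements documented by SLAM3R.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path, out_dir, fps=5.0):
    """Build an ffmpeg command that samples frames at `fps` into numbered JPEGs."""
    pattern = str(Path(out_dir) / "frame_%06d.jpg")
    return [
        "ffmpeg",
        "-i", str(video_path),   # input video
        "-vf", f"fps={fps}",     # resample to the requested frame rate
        "-qscale:v", "2",        # high JPEG quality
        pattern,
    ]


def extract_frames(video_path, out_dir, fps=5.0):
    """Create `out_dir` if needed and run ffmpeg; raises if ffmpeg fails."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_command(video_path, out_dir, fps), check=True)


if __name__ == "__main__":
    # Hypothetical paths; point these at your own video and data directory.
    print(" ".join(build_ffmpeg_command("my_video.mp4", "./data/wild_custom")))
```

Calling `extract_frames("my_video.mp4", "./data/wild_custom")` would write the frames to that directory, which can then be set as the input in the demo script.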

We also provide a Gradio interface, where you can upload a directory, a video, or specific images to perform the reconstruction. After setting the reconstruction parameters, click the 'Run' button to start the process. Modifying the visualization parameters at the bottom lets you display different visualization results directly, without rerunning the inference.

The interface can be launched with the following command:

```bash
python app.py
```

If you find our work helpful in your research, please consider citing:

```bibtex
@article{slam3r,
  title={SLAM3R: Real-Time Dense Scene Reconstruction from Monocular RGB Videos},
  author={Liu, Yuzheng and Dong, Siyan and Wang, Shuzhe and Yang, Yanchao and Fan, Qingnan and Chen, Baoquan},
  journal={arXiv preprint arXiv:2412.09401},
  year={2024}
}
```

Our implementation is based on several awesome repositories:

We thank the respective authors for open-sourcing their code.