Gradually warm up (increase) the learning rate of a PyTorch optimizer, as proposed in 'Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour'.
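As a rough guide to what "gradual" means here, assume a linear per-epoch ramp. The paper raises the rate by a constant amount every iteration, so stepping once per epoch is an assumption of this sketch. With base rate $\eta_0$, multiplier $m$ and a warmup length of $E_w$ epochs, the rate at epoch $e$ would be:

$$
\eta_e = \eta_0\left(1 + (m - 1)\,\frac{e}{E_w}\right), \qquad 0 \le e \le E_w
$$

It rises from $\eta_0$ at $e = 0$ to $m\,\eta_0$ at $e = E_w$. With `multiplier=8` and `total_epoch=10`, a base rate of 0.1 reaches 0.8 after 10 epochs.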

Example : Gradual Warmup for 100 epoch, after that, use cosine-annealing.$ pip install git+https://github.com/ildoonet/pytorch-gradual-warmup-lr.git

from warmup_scheduler import GradualWarmupScheduler

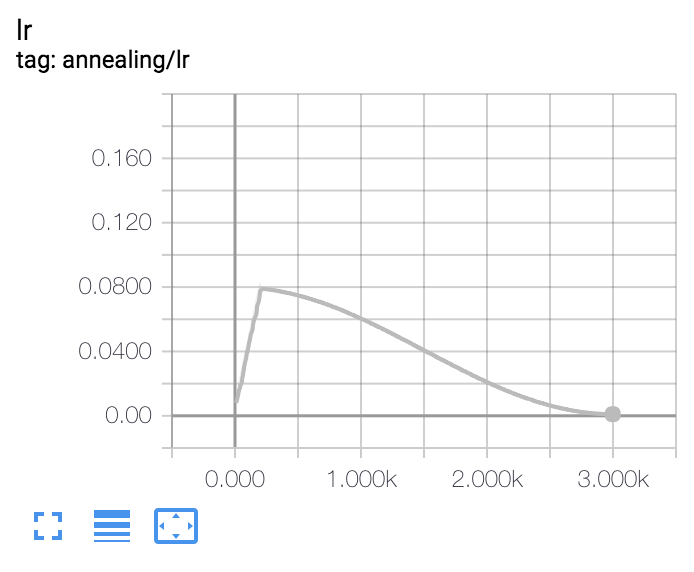

scheduler_cosine = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, max_epoch)

scheduler_warmup = GradualWarmupScheduler(optimizer, multiplier=8, total_epoch=10, after_scheduler=scheduler_cosine)

for epoch in range(train_epoch):

scheduler_warmup.step() # 10 epoch warmup, after that schedule as scheduler_plateau

...
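The following minimal pure-Python sketch shows the shape of a warmup-then-cosine schedule without installing anything. It is not the package's implementation. `warmup_cosine_lr` is a hypothetical helper, and its defaults `max_epoch=100` and `eta_min=0.0` are assumptions. It also assumes `multiplier > 1`, and it assumes the cosine phase starts from the warmed-up peak and counts its `max_epoch` epochs from the end of warmup.

```python
import math


def warmup_cosine_lr(epoch, base_lr, multiplier=8, total_epoch=10, max_epoch=100, eta_min=0.0):
    """Hypothetical helper: learning rate at `epoch` for linear warmup, then cosine annealing.

    Assumptions (not taken from the package): multiplier > 1, warmup ramps linearly
    from base_lr to base_lr * multiplier, and the cosine phase decays from that peak
    to eta_min over max_epoch epochs counted from the end of warmup.
    """
    peak_lr = base_lr * multiplier
    if epoch <= total_epoch:
        # Linear ramp: base_lr at epoch 0, peak_lr at epoch total_epoch
        return base_lr * ((multiplier - 1.0) * epoch / total_epoch + 1.0)
    # Cosine annealing from peak_lr towards eta_min, starting once warmup is over
    t = epoch - total_epoch
    return eta_min + (peak_lr - eta_min) * (1 + math.cos(math.pi * t / max_epoch)) / 2


if __name__ == "__main__":
    for epoch in (0, 5, 10, 60, 110):
        print(epoch, round(warmup_cosine_lr(epoch, base_lr=0.1), 4))
```

With `base_lr=0.1`, the rate climbs linearly to 0.8 by epoch 10. It then decays along the cosine curve to 0.4 at epoch 60 and to roughly 0 at epoch 110.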