Authors: Sidi Yang, Tianhe Wu, Shuwei Shi, Shanshan Lao, Yuan Gong, Mingdeng Cao, Jiahao Wang and Yujiu Yang

This is the official repository for our entry in the NTIRE 2022 Perceptual Image Quality Assessment Challenge, Track 2: No-Reference. We won first place in the competition, and the code has now been released.

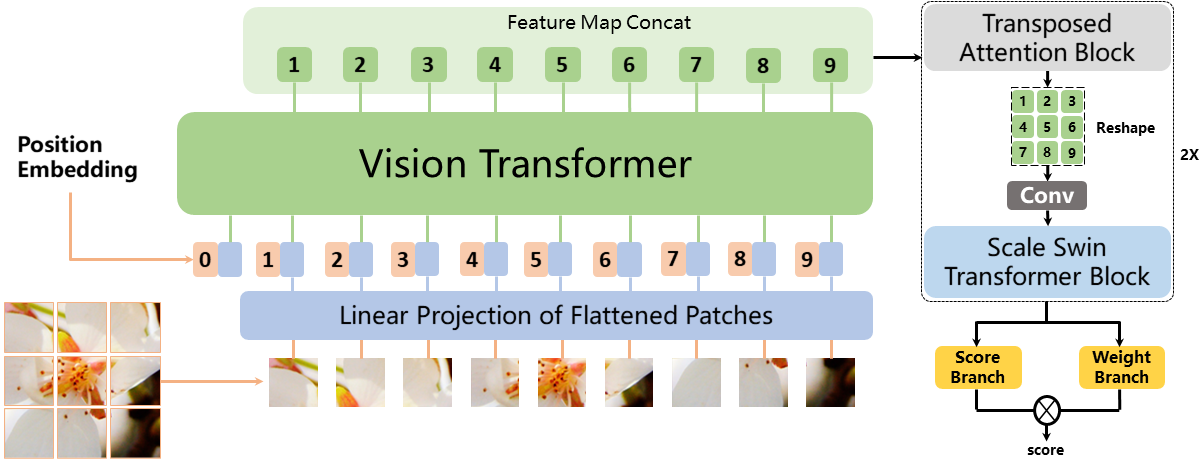

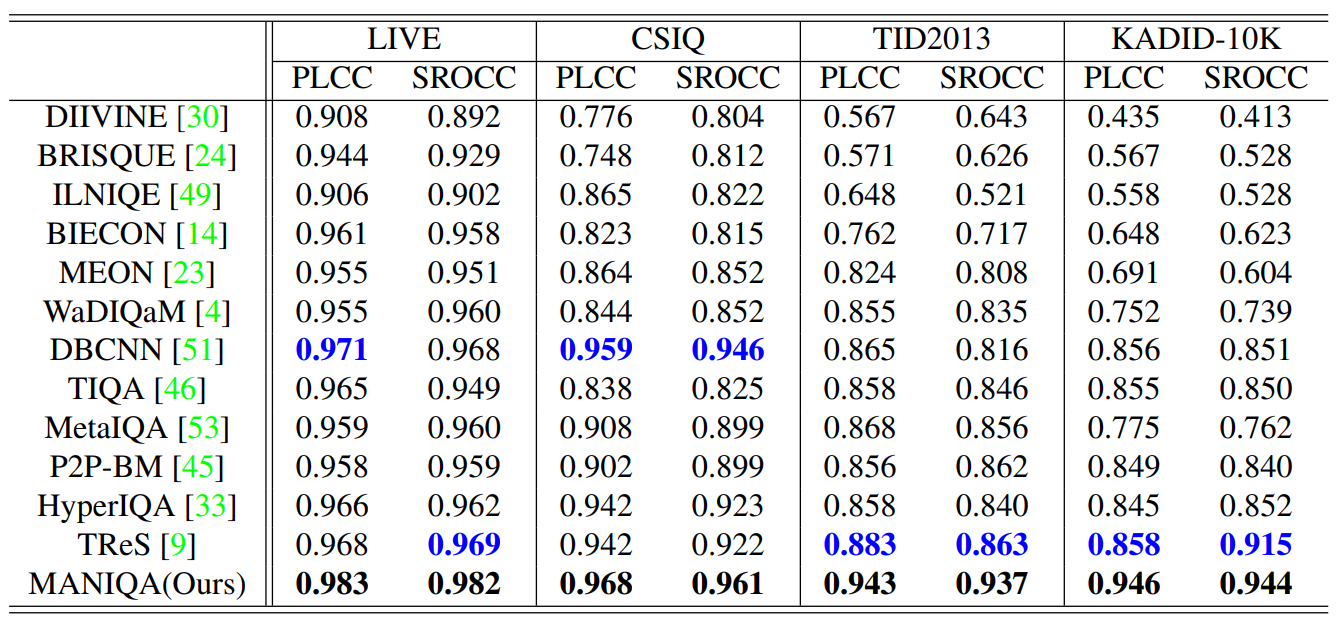

Abstract: No-Reference Image Quality Assessment (NR-IQA) aims to assess the perceptual quality of images in accordance with human subjective perception. Unfortunately, existing NR-IQA methods are far from meeting the needs of predicting accurate quality scores on GAN-based distortion images. To this end, we propose Multi-dimension Attention Network for no-reference Image Quality Assessment (MANIQA) to improve the performance on GAN-based distortion. We first extract features via ViT; then, to strengthen global and local interactions, we propose the Transposed Attention Block (TAB) and the Scale Swin Transformer Block (SSTB). These two modules apply attention mechanisms across the channel and spatial dimensions, respectively. In this multi-dimensional manner, the modules cooperatively increase the interaction among different regions of images globally and locally. Finally, a dual-branch structure for patch-weighted quality prediction is applied to predict the final score depending on the weight of each patch's score. Experimental results demonstrate that MANIQA outperforms state-of-the-art methods on four standard datasets (LIVE, TID2013, CSIQ, and KADID-10K) by a large margin. In addition, our method ranked first in the final testing phase of the NTIRE 2022 Perceptual Image Quality Assessment Challenge Track 2: No-Reference.
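The patch-weighted prediction described in the abstract can be illustrated with a minimal sketch. It assumes the final score is the weight-normalized average of the per-patch scores, i.e. `sum(w_i * s_i) / sum(w_i)`; the function name `patch_weighted_score`, the `eps` term, and the sample values are hypothetical and are not taken from this repository's code.

```python
from typing import Sequence


def patch_weighted_score(
    scores: Sequence[float],
    weights: Sequence[float],
    eps: float = 1e-8,
) -> float:
    """Combine per-patch quality scores into one image-level score.

    Assumption: the final score is the weight-normalized average
    sum(w_i * s_i) / sum(w_i). This is an illustration only, not the
    repository's implementation.
    """
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    if not scores:
        raise ValueError("at least one patch is required")

    # One branch is assumed to produce a score per patch, the other a
    # non-negative weight per patch.
    weighted_sum = sum(s * w for s, w in zip(scores, weights))
    total_weight = sum(weights)

    # eps guards against division by zero when all weights vanish.
    return weighted_sum / (total_weight + eps)


# Hypothetical outputs of the two branches for four patches.
patch_scores = [0.80, 0.60, 0.40, 0.70]
patch_weights = [0.90, 0.10, 0.10, 0.50]
print(round(patch_weighted_score(patch_scores, patch_weights), 3))
```

With this pooling, patches that receive a large weight dominate the image-level score, while patches with near-zero weight contribute almost nothing.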

The training set is PIPAL22 and the validation dataset is PIPAL21. We have conducted experiments on LIVE, CSIQ, TID2013 and KADID-10K datasets.

NOTE:

- Put the MOS labels and the data Python files into the data folder.

- The validation dataset comes from NTIRE 2021. If you want to reproduce the results on the validation or test set of the NTIRE 2022 NR-IQA competition, register for the competition and upload submission.zip by following the instructions on the website.

# Training MANIQA model, run:

python train_maniqa.py

Inference for PIPAL22 Validation and Testing

# Generating the output file, run:

python inference.py

- Platform: PyTorch 1.8.0

- Language: Python 3.7.9

- Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-104-generic x86_64)

- CUDA Version 11.2

- GPU: NVIDIA GeForce RTX 3090 with 24GB memory

Python requirements can be installed by:

pip install -r requirements.txt

@misc{https://doi.org/10.48550/arxiv.2204.08958,

doi = {10.48550/ARXIV.2204.08958},

url = {https://arxiv.org/abs/2204.08958},

author = {Yang, Sidi and Wu, Tianhe and Shi, Shuwei and Lao, Shanshan and Gong, Yuan and Cao, Mingdeng and Wang, Jiahao and Yang, Yujiu},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Image and Video Processing (eess.IV), FOS: Computer and information sciences, FOS: Electrical engineering, electronic engineering, information engineering},

title = {MANIQA: Multi-dimension Attention Network for No-Reference Image Quality Assessment},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

Our code is partially borrowed from anse3832 and timm.

[CVPRW 2021] Region-Adaptive Deformable Network for Image Quality Assessment (4th place in FR track)

[CVPRW 2022] Attentions Help CNNs See Better: Attention-based Hybrid Image Quality Assessment Network. (1st place in FR track)