In this project, I will use Microsoft Azure to demonstrate the skills I learned about ensuring quality releases in the Udacity Nanodegree: DevOps Engineer for Microsoft Azure.

In this project I will create a VM using Terraform, along with Packer to deploy an app to Azure AppService.

Then, I will test environments and run automated tests using tools such as JMeter, Selenium, and Postman.

To finalize this project I will utilize Azure Log Analytics to provide insight into my application's behavior by querying custom log files.

| Dependency | Link |
|---|---|
| Python | https://www.python.org/downloads/ |
| Terraform | https://www.terraform.io/downloads.html |
| Packer | https://www.packer.io/ |
| JMeter | https://jmeter.apache.org/download_jmeter.cgi |
| Postman | https://www.postman.com/downloads/ |
| Selenium | https://sites.google.com/a/chromium.org/chromedriver/getting-started |
| Azure DevOps | https://dev.azure.com/ramonasaintandre0285/UdacityP3-ensuringquality |
| Azure CLI | https://aka.ms/cli_ref |
| Azure Log Analytics | |

- This is the starter repo for the project.

git clone https://github.com/jfcb853/Udacity-DevOps-Azure-Project-3

Log in to Azure:

az login

Create the password-based authentication service principal for your project and query it for your ID and SECRET data:

az ad sp create-for-rbac --name SPProject3 --query "{client_id: appId, client_secret: password, tenant_id: tenant}"

Make note of the password because it can't be retrieved, only reset.

Log in to the Service Principal using the following command with your credentials from the previous step:

az login --service-principal --username APP_ID --tenant TENANT_ID --password CLIENT_SECRET

Configure the storage account and state backend.

You will need to create a Storage Account before you can use Azure Storage as a backend.

You can also use the commands in the azurestoragescript.sh file in the repo to do this.

Click Here for instructions on creating the Azure storage account and backend.

Replace the values below in the terraform/environments/test/main.tf file with the output from the Azure CLI:

* storage_account_name

* container_name

* key

* access_key
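As a rough illustration of where these four values end up, the sketch below renders a Terraform `azurerm` backend block from them. The function name `render_backend_block` and the sample values are hypothetical, and the actual layout of `main.tf` in the starter repo may differ. The name check reflects Azure's storage account naming rule (3 to 24 characters, lowercase letters and digits only).

```python
import re

# Azure storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"^[a-z0-9]{3,24}$")


def render_backend_block(storage_account_name, container_name, key, access_key):
    """Return an azurerm backend block as text (illustrative helper, not from the repo)."""
    if not _ACCOUNT_NAME.match(storage_account_name):
        raise ValueError(f"invalid storage account name: {storage_account_name!r}")

    settings = {
        "storage_account_name": storage_account_name,
        "container_name": container_name,
        "key": key,
        "access_key": access_key,
    }
    lines = ["terraform {", '  backend "azurerm" {']
    for name, value in settings.items():
        lines.append(f'    {name} = "{value}"')
    lines += ["  }", "}"]
    return "\n".join(lines)
```

Calling `render_backend_block("tfstate12345", "tfstate", "test.terraform.tfstate", "<access key>")` returns text that can be compared against the backend section of `main.tf`. Keeping the access key out of version control (for example in a secure file) is the safer choice.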

Create a Service Principal for Terraform and replace the values below in the terraform/environments/test/terraform.tfvars file with the output from the Azure CLI.

* subscription_id

* client_id

* client_secret

* tenant_id
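The mapping from the CLI output to these four variables can be sketched as follows. It assumes the JSON produced by the `az ad sp create-for-rbac ... --query` command shown earlier (keys `client_id`, `client_secret`, `tenant_id`) and a subscription ID obtained separately, for example with `az account show --query id`. The helper name `render_tfvars` and the sample values are hypothetical.

```python
import json


def render_tfvars(sp_json: str, subscription_id: str) -> str:
    """Turn the service principal JSON into terraform.tfvars text (illustrative only)."""
    sp = json.loads(sp_json)
    values = {
        "subscription_id": subscription_id,
        "client_id": sp["client_id"],
        "client_secret": sp["client_secret"],
        "tenant_id": sp["tenant_id"],
    }
    # One `name = "value"` assignment per line, as Terraform variable files expect.
    return "\n".join(f'{name} = "{value}"' for name, value in values.items()) + "\n"
```

The resulting text contains the client secret, so it belongs in a secure file rather than in the repository.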

Click Here if you need help with the steps for creating a service principal.

-

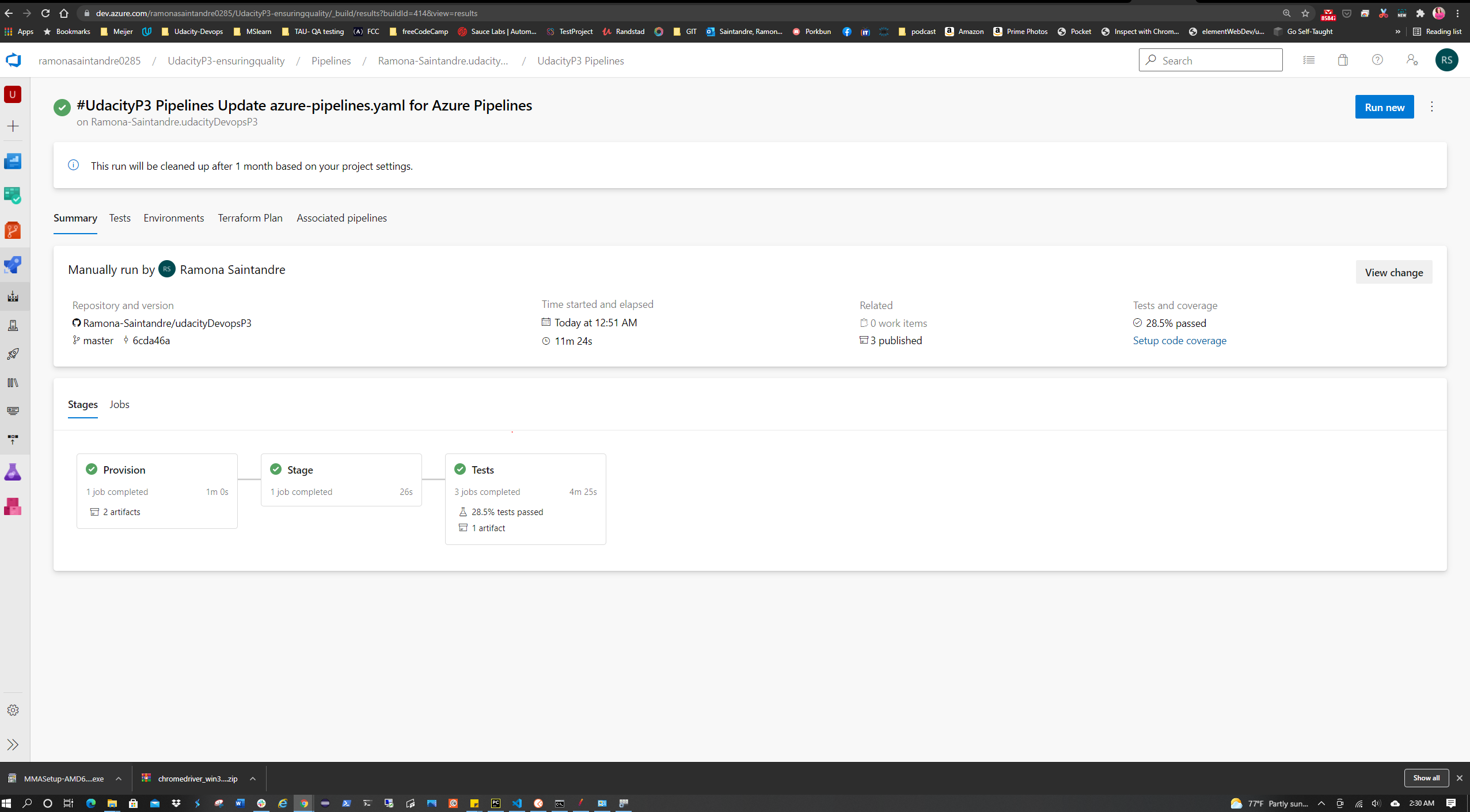

Create a new Azure Pipeline from the

azure-pipelines.yamlfile or start building one from scratch if you prefer. -

Create a new service connection: In Azure Devops go to Project Settings > Service Connections > New Service Connection > Azure Resource Manager > Next > Service Principal (Automatic) > Next > Subscription. After choosing your subscription provide a name for the service connection.

-

If the pipeline runs now it will fail since no resources are provisioned yet in Azure.

-

Create an SSH key to log in to your VM.

-

Click Here for instructions on setting up an SSH key.

-

This SSH key can be saved and added to Azure Pipelines as a secure file. I also loaded my terraform.tfvars to Azure Pipelines as a secure file along with a .env file with my access-key.

-

Create your Azure resources (Most can be provisioned via Terraform using the Pipeline by adding tasks and jobs to the

azure-pipelines.ymlfile utilizingterraform init,terraform plan, andterraform applycommands).

- Once the resources are deployed you will have to follow the instructions on setting up an environment in Azure Pipelines to register the Linux VM so your app can be deployed to it. You can find that documentation here. In Azure DevOps under Pipelines > Environments > TEST > Add resource > Select "Virtual Machines" > Next > Under Operating System select "Linux". You will be given a registration script to copy. SSH into your VM and paste this script into the terminal and run it. This will register your VM and allow Azure Pipelines to act as an agent to run commands on it.

- Build the FakeRestAPI and Automated Testing artifacts and publish them to the artifact staging directory in the Pipeline.

- Deploy the FakeRestAPI to your App Service on your VM. The URL for my webapp is (need to add URL)

It should look like the image below.

| Test Type | Technology | Stage in CI/CD pipeline | Status |

|---|---|---|---|

| Integration | Postman | Test Stage - runs during build stage | ✅ |

| Goal | Status |

|---|---|

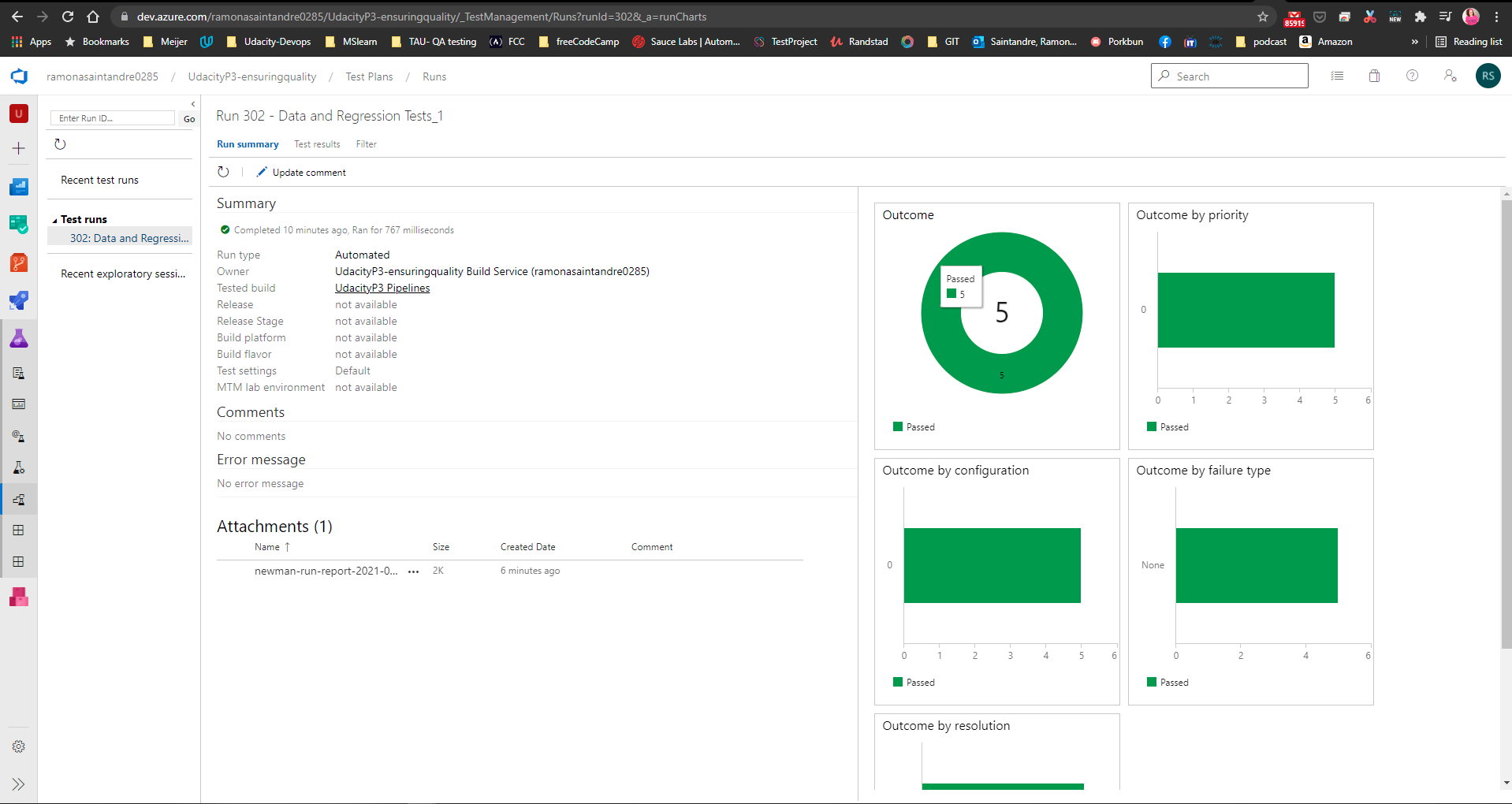

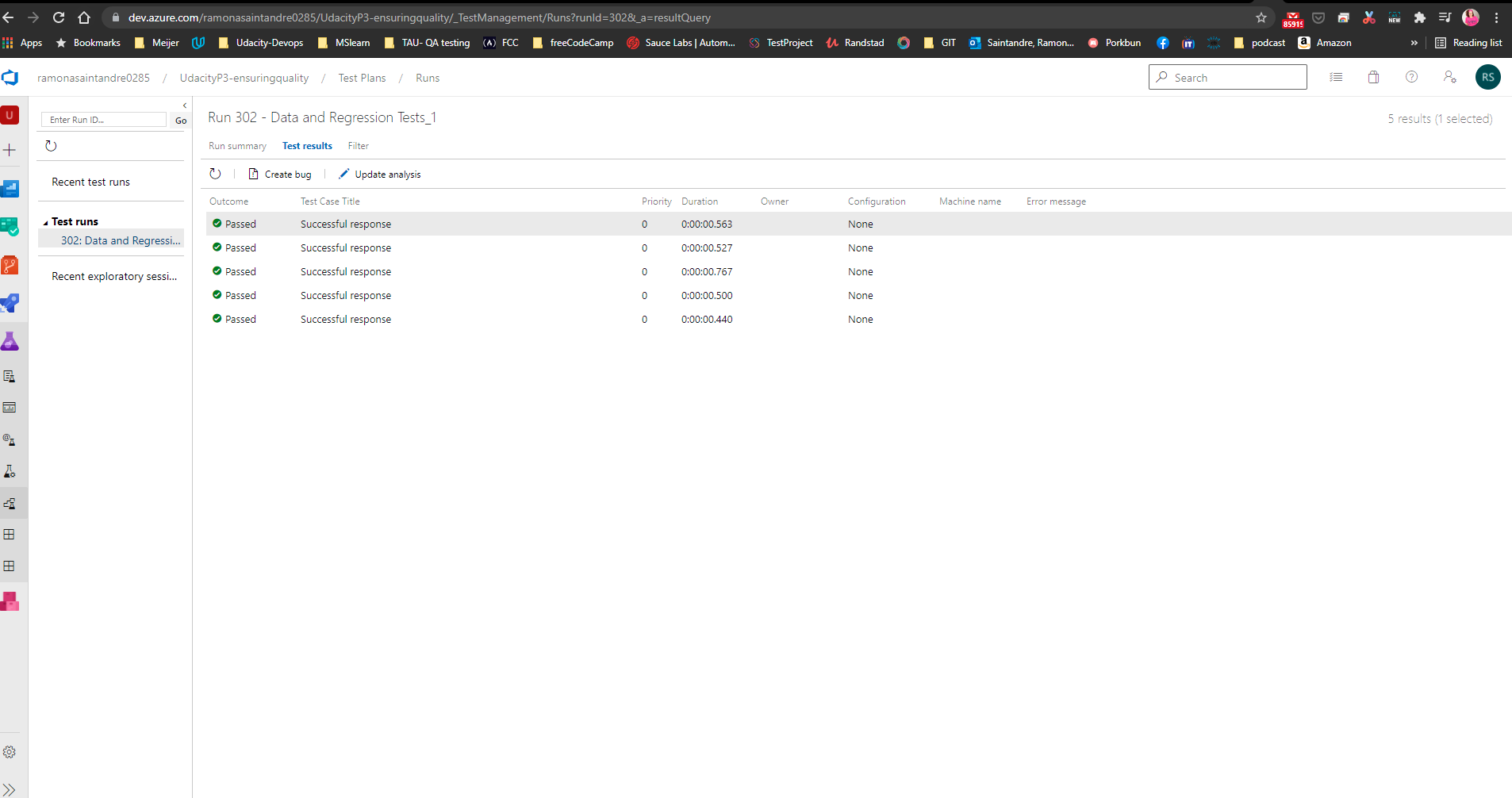

| Verify dummy API's can pass by running them through Newman | ✅ |

Install Postman.

With the starter files I was able to test the dummy API locally with different environment variables and then export the results as a collection to my project directory.

Once the collection files have been pushed to GitHub you will need to create a script to run a Data Validation and Regression test in the Pipeline and create a report of the results.

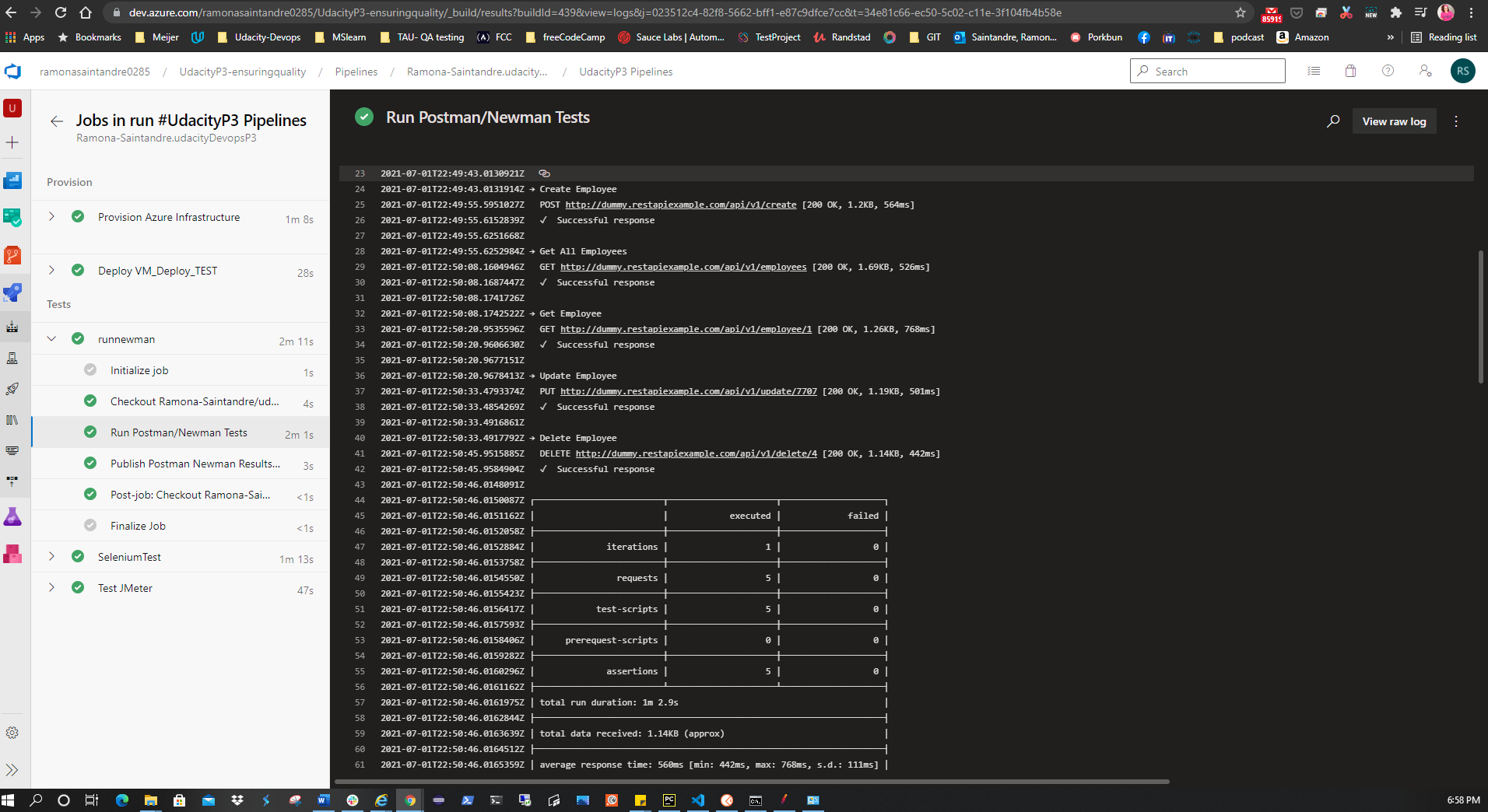

The command-line Collection Runner Newman was used to perform these tests.

The following script was used:

sudo npm install -g newman reporter

echo 'Starting Tests...'

echo 'Running Regression Test'

newman run automatedtesting/postman/RegressionTest.postman_collection.json --delay-request 15000 --reporters cli,junit --suppress-exit-code

echo 'Running Data Validation Test'

newman run automatedtesting/postman/DataValidation.postman_collection.json --delay-request 12000 --reporters cli,junit --suppress-exit-code
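Because `--suppress-exit-code` keeps the pipeline step green even when requests fail, it can help to inspect the JUnit report afterwards. The sketch below totals the `tests`, `failures`, and `errors` attributes found on `<testsuite>` elements in a JUnit-style XML document. The helper name `summarize_junit` is hypothetical, and where Newman writes its report file in your pipeline is an assumption you would need to check.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Sum test, failure, and error counts over every <testsuite> element."""
    root = ET.fromstring(xml_text)
    totals = {"tests": 0, "failures": 0, "errors": 0}
    # iter() also visits the root itself, so a lone <testsuite> document works too.
    for suite in root.iter("testsuite"):
        for field in totals:
            totals[field] += int(suite.get(field, 0))
    return totals
```

A pipeline step could read the report file, pass its contents to `summarize_junit`, and fail the build when `failures` or `errors` is non-zero.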

The results of the tests will also appear in the Pipeline output like so:

| Test Type | Technology | Stage in CI/CD pipeline | Status |
|---|---|---|---|
| Functional | Selenium | Test Stage - runs in custom linux VM | ✅ |

| Goal | Status |
|---|---|
| Direct the output of the Selenium Test Suite to a log file, and execute the Test Suite. | ✅ |

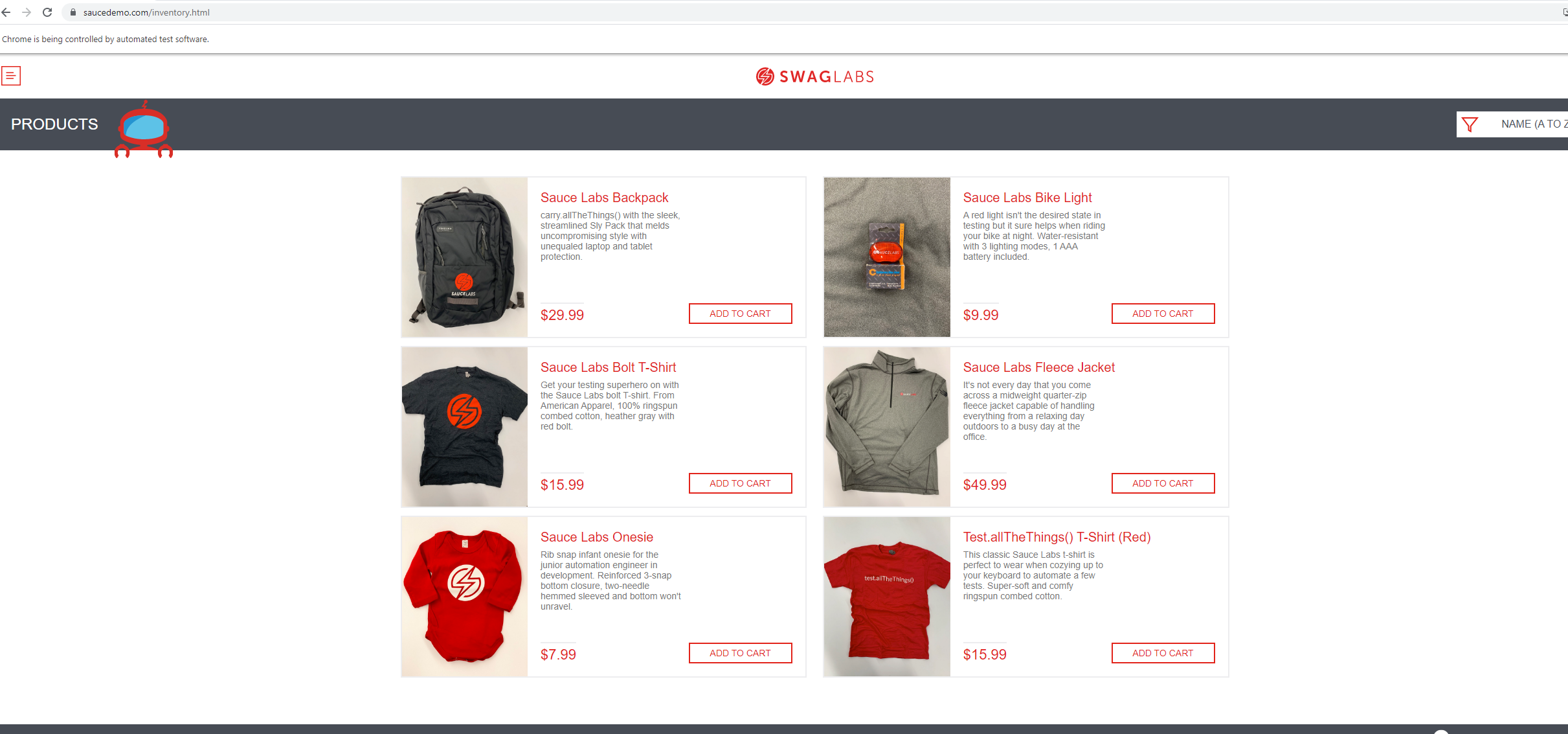

The project requires that Selenium be installed on the VM to test the UI functionality of the https://www.saucedemo.com/ website.

The test output should record which items were added to the shopping cart and which items were removed from the shopping cart.

I created the Python code locally and made sure the test was working before adding it to the Pipeline.

To do this:

- Download the latest Chrome driver. Make sure chromedriver is added to PATH.

- Run `pip install -U selenium`.

- You can test Selenium by executing the `login.py` file in the Selenium folder. It should open the site, add all items to the shopping cart, and then remove them from the shopping cart.

- A script will need to be created to perform these tasks in the CLI in Azure Pipelines at this point. Make sure the script logs the items being added to and removed from the cart so that the output can be sent to a file.
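The logging part of such a script could look roughly like the sketch below. It covers only the log file handling, not the Selenium browser steps, and the helper names `build_cart_logger` and `log_cart_event` as well as the message format are assumptions rather than what `login.py` actually does.

```python
import logging


def build_cart_logger(log_path: str) -> logging.Logger:
    """Create a logger that appends timestamped lines to log_path."""
    logger = logging.getLogger(f"selenium-cart-{log_path}")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid adding duplicate handlers on repeated calls
        handler = logging.FileHandler(log_path)
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger


def log_cart_event(logger: logging.Logger, action: str, item: str) -> None:
    """Record one cart action, e.g. action='Added' or action='Removed'."""
    logger.info("%s item: %s", action, item)
```

In the test itself, `log_cart_event` would be called right after each add-to-cart or remove click, so the file lists every item in the order it was handled. Timestamped lines also make the file easier to ingest as a custom log later.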

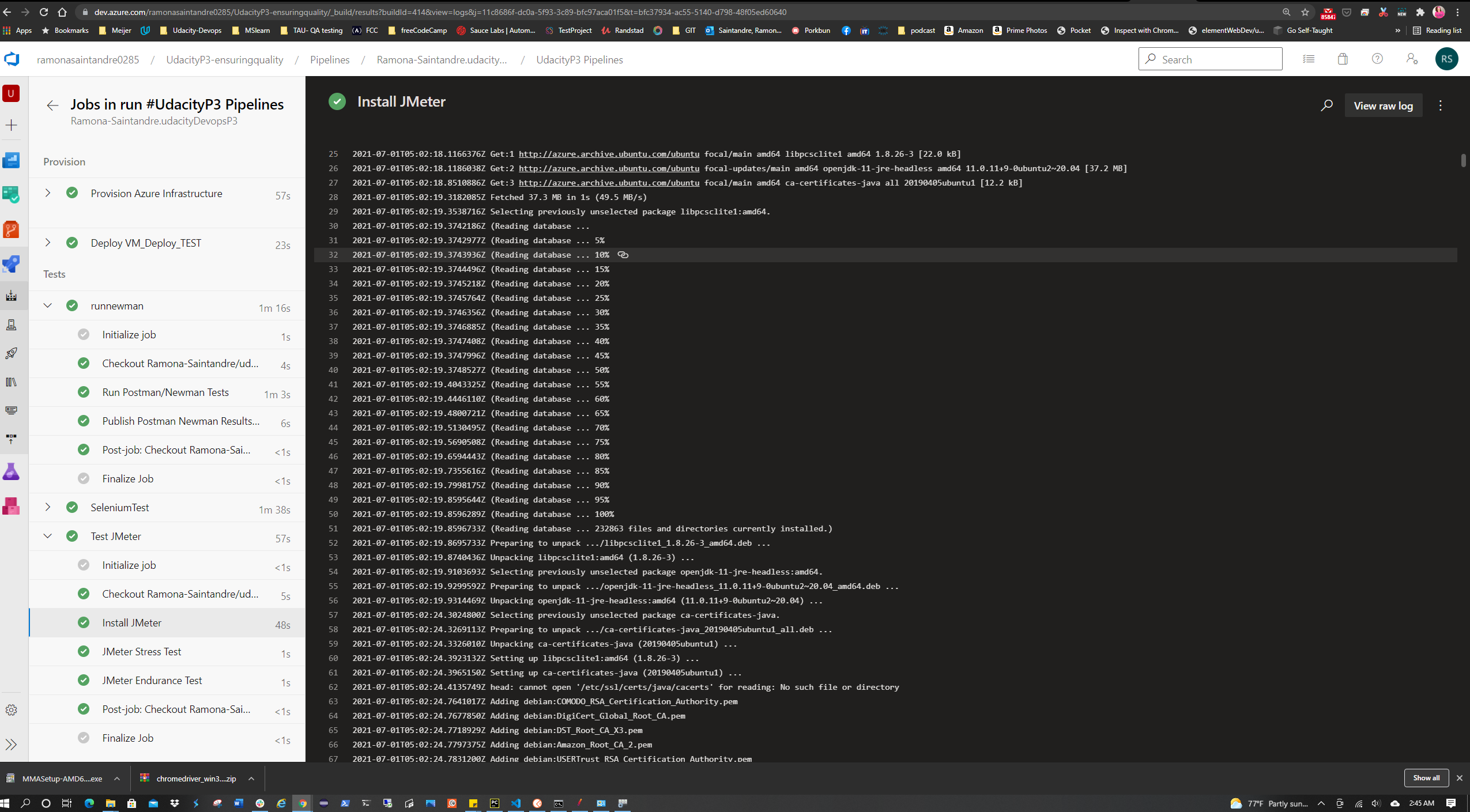

| Test Type | Technology | Stage in CI/CD pipeline | Status |
|---|---|---|---|
| Performance | JMeter | Test Stage - runs against the AppService | ✅ |

| Goal | Status |
|---|---|
| Stress Test | ✅ |
| Endurance Test | ✅ |

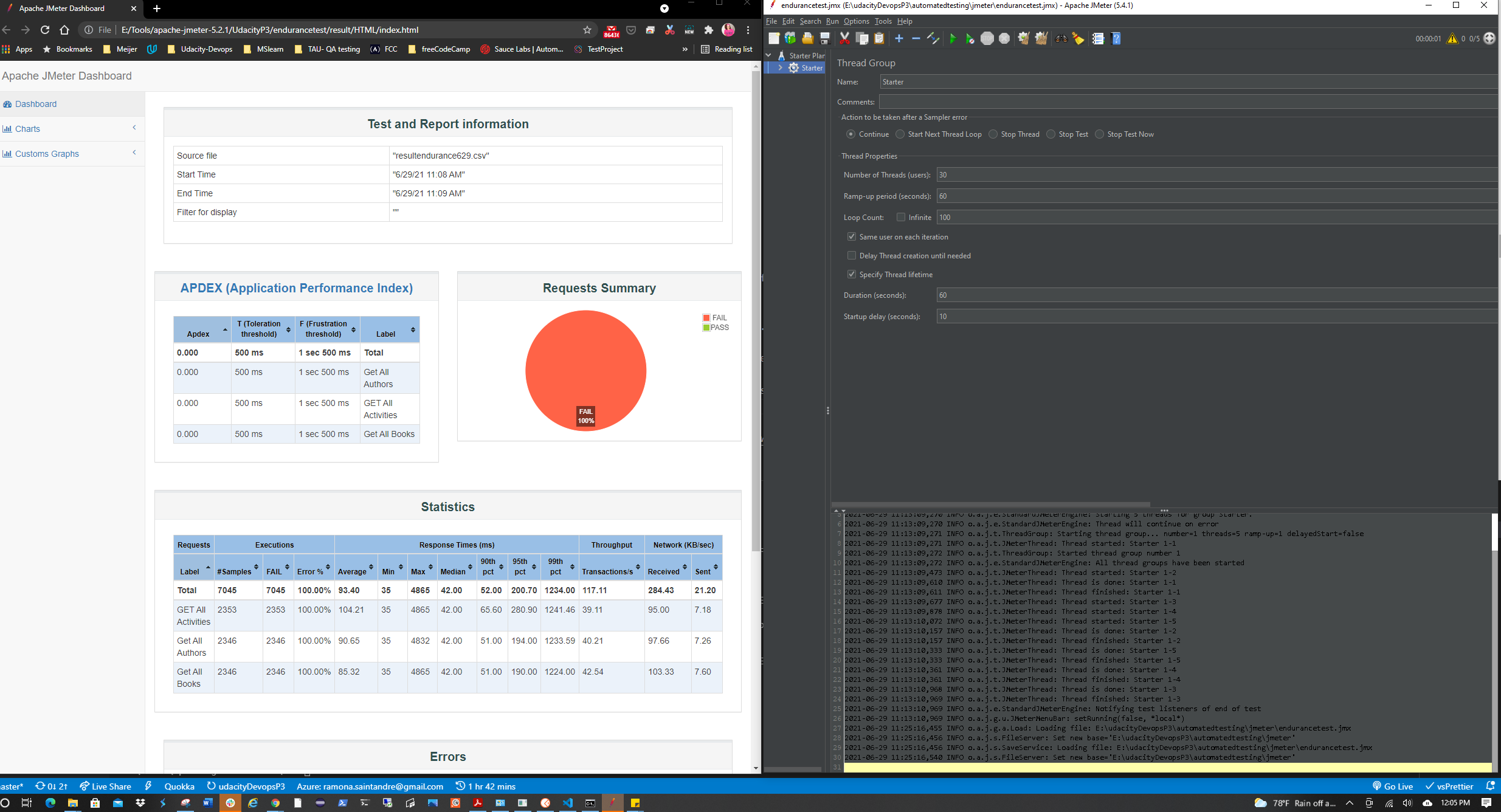

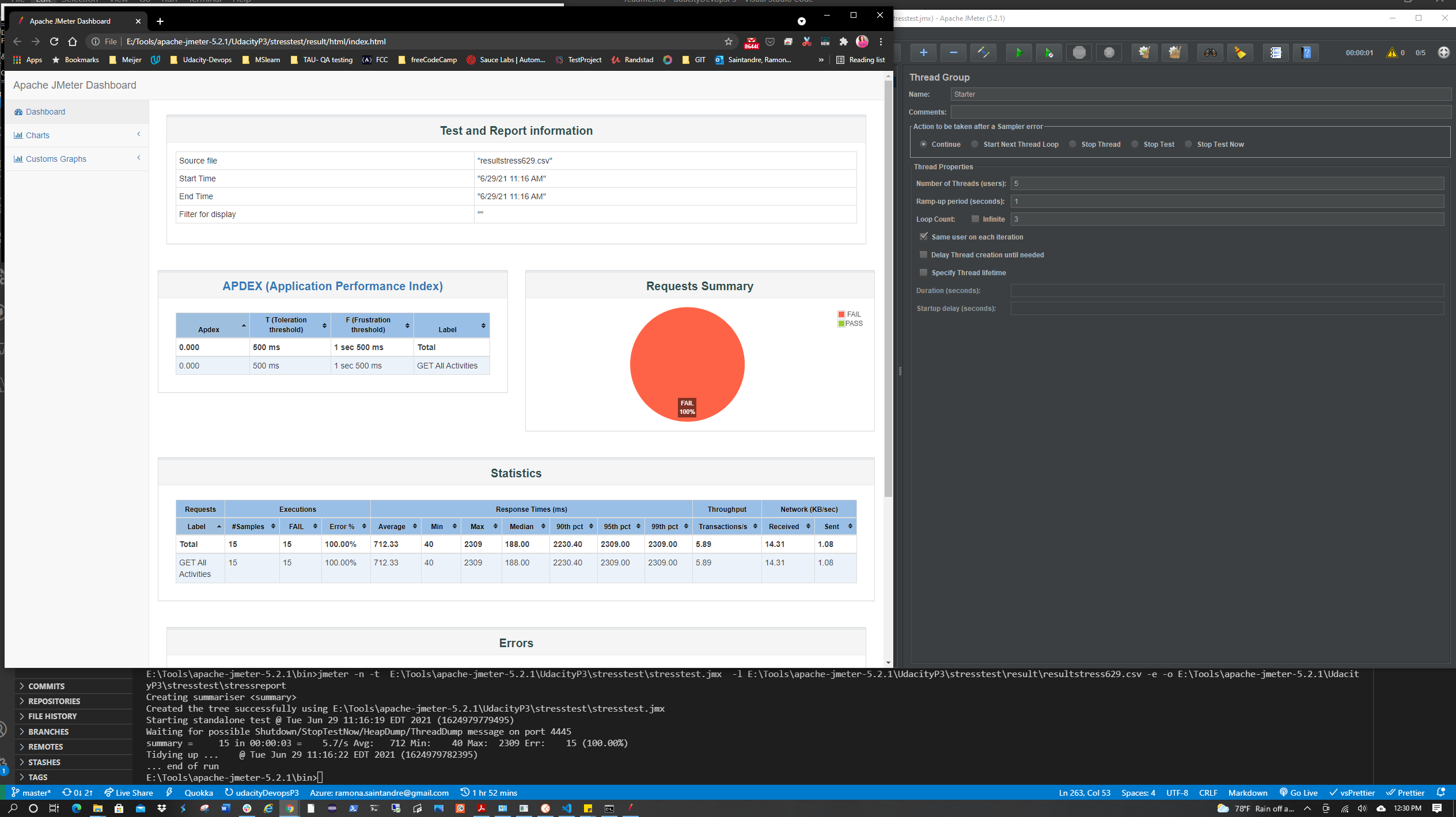

Two test suites (Stress Test and Endurance Test) were created using the Starter API files. You will need to replace the APPSERVICEURL with the URL of your AppService once it's deployed.

In the Pipeline, scripts were created to install JMeter and run the Stress and Endurance Tests.

For the Pipeline, two users were used for the tests so the free-tier resources wouldn't be maxed out.

Before submission, the tests will need to be run simulating 30 users for a max duration of 60 seconds.

The data output from these tests will need to generate an HTML report.

Note: This may also be done non-CI/CD by installing JMeter and running the tests.
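One way to switch between the 2-user pipeline run and the 30-user, 60-second submission run is to pass the numbers in as JMeter properties. The sketch below only builds the command line, using JMeter's non-GUI flags (`-n`, `-t`, `-l`, `-e`, `-o`, `-J`). The property names `users` and `duration`, the function name, and the file names are hypothetical, and the test plan would have to read the properties itself, for example with `${__P(users)}`.

```python
def build_jmeter_command(test_plan, results_file, report_dir, users=30, duration_s=60):
    """Return the argument list for a non-GUI JMeter run with an HTML report."""
    return [
        "jmeter",
        "-n",                 # non-GUI mode
        "-t", test_plan,      # test plan (.jmx) to run
        "-l", results_file,   # file that receives the raw sample results
        "-e",                 # generate the HTML report when the run ends
        "-o", report_dir,     # report output folder (must be empty or absent)
        f"-Jusers={users}",            # hypothetical property read by the plan
        f"-Jduration={duration_s}",    # hypothetical property read by the plan
    ]
```

The list can be handed to `subprocess.run(..., check=True)` in a pipeline script. Calling it with `users=2` would mirror the reduced load used on the free tier.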

| Goal | Status |
|---|---|
| Configure custom logging in Azure Monitor to ingest this log file. This may be done non-CI/CD. | ✅ |

A Log Analytics Workspace will need to be created to ingest the Selenium Log output file.

This Resource could be helpful in setting up Log Analytics.

Go to the app service > Diagnostic Settings > + Add Diagnostic Setting. Click AppServiceHTTPLogs and Send to Log Analytics Workspace. Select a workspace (can be an existing default workspace) > Save. Go back to the app service > App Service Logs. Turn on Detailed Error Messages and Failed Request Tracing > Save. Restart the app service.

Return to the Log Analytics Workspace > Logs and run a query such as `Heartbeat` to see some log results and check that Log Analytics is working properly.

In Log Analytics Workspace go to Advanced Settings > Data > Custom Logs > Add + > Choose File. Select the seleniumlog.txt file created from the Pipeline run. (I downloaded a copy to my local machine and uploaded a copy to the Azure VM via SSH) > Next > Next. Enter the paths for the Linux machine where the file is located, such as:

Give it a name and click Done.

Return to the Log Analytics Workspace > Virtual Machines. Click on your VM, then Connect. An agent will be installed on the VM that allows Azure to collect the logs from it. (Under Settings > Agents you should see you have "1 Linux Computer Connected")

Return to the Log Analytics Workspace > Logs. Under Queries you can enter the name of the custom log created in the previous step and click Run. It may take a while for the logs to show. The logs may only show if the timestamp on the log file is updated after the agent was installed. You may also need to reboot the VM.

Note: This may also be done non-CI/CD.

| Goal | Status |
|---|---|
| Configure an Action Group (email) | ✅ |

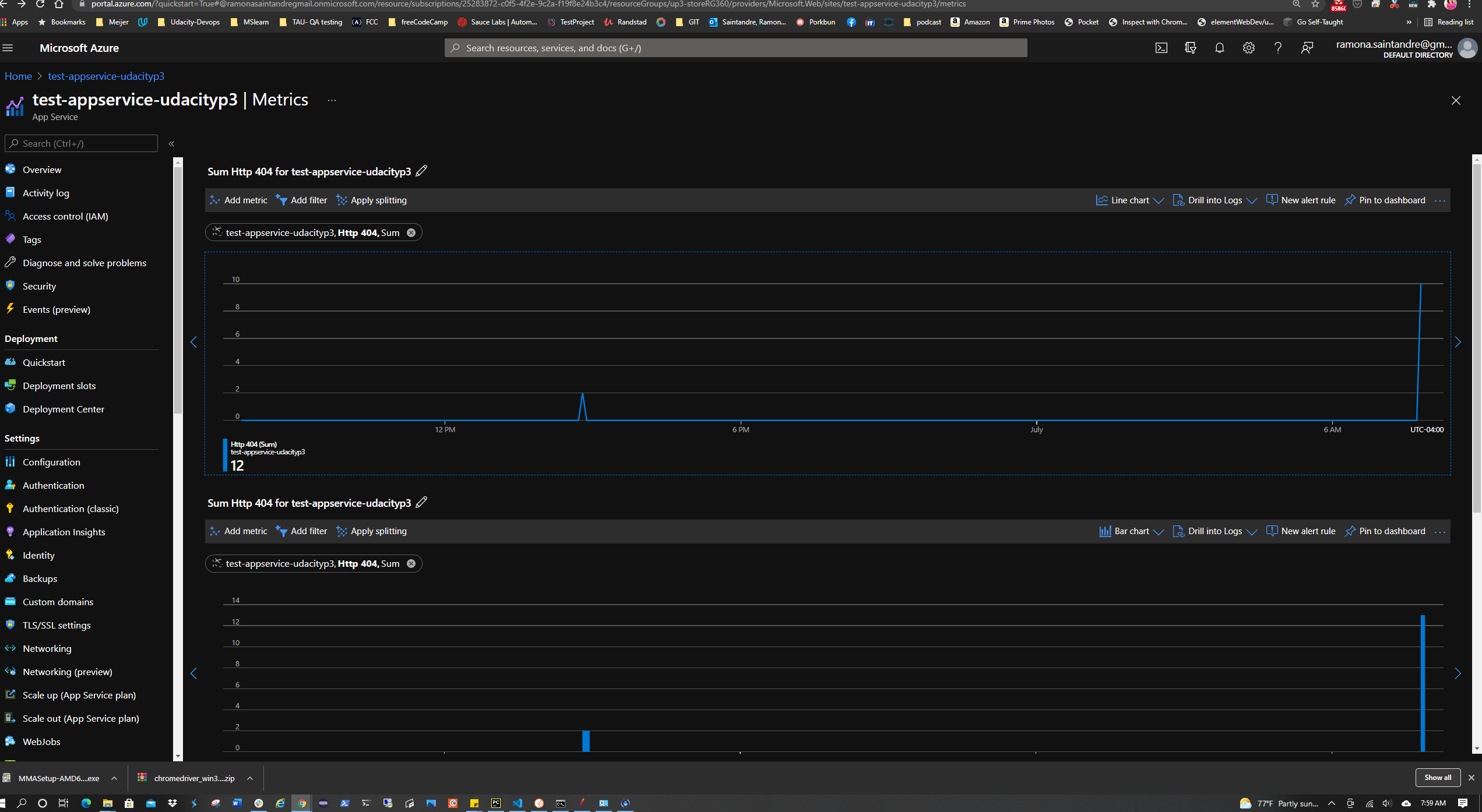

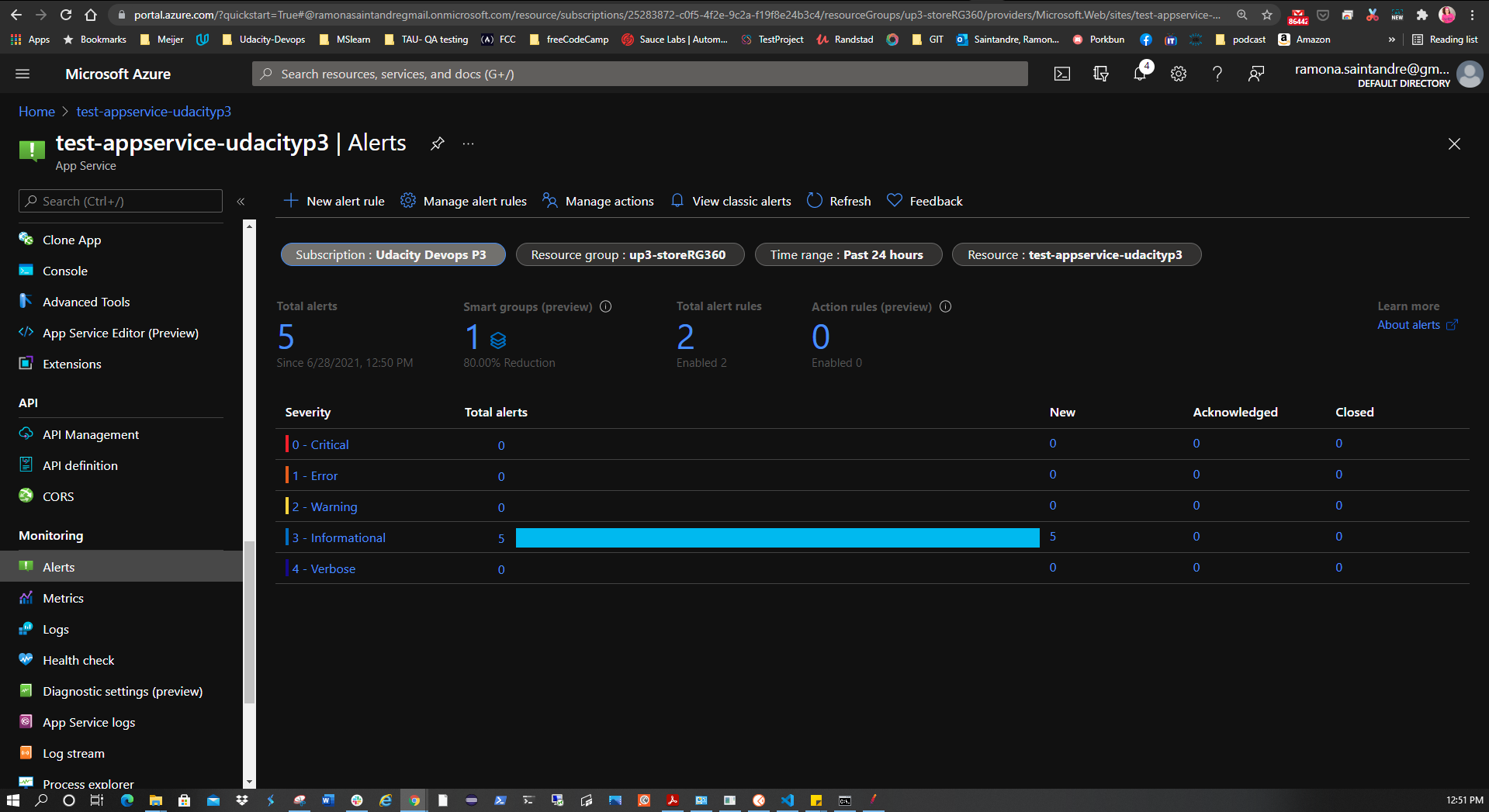

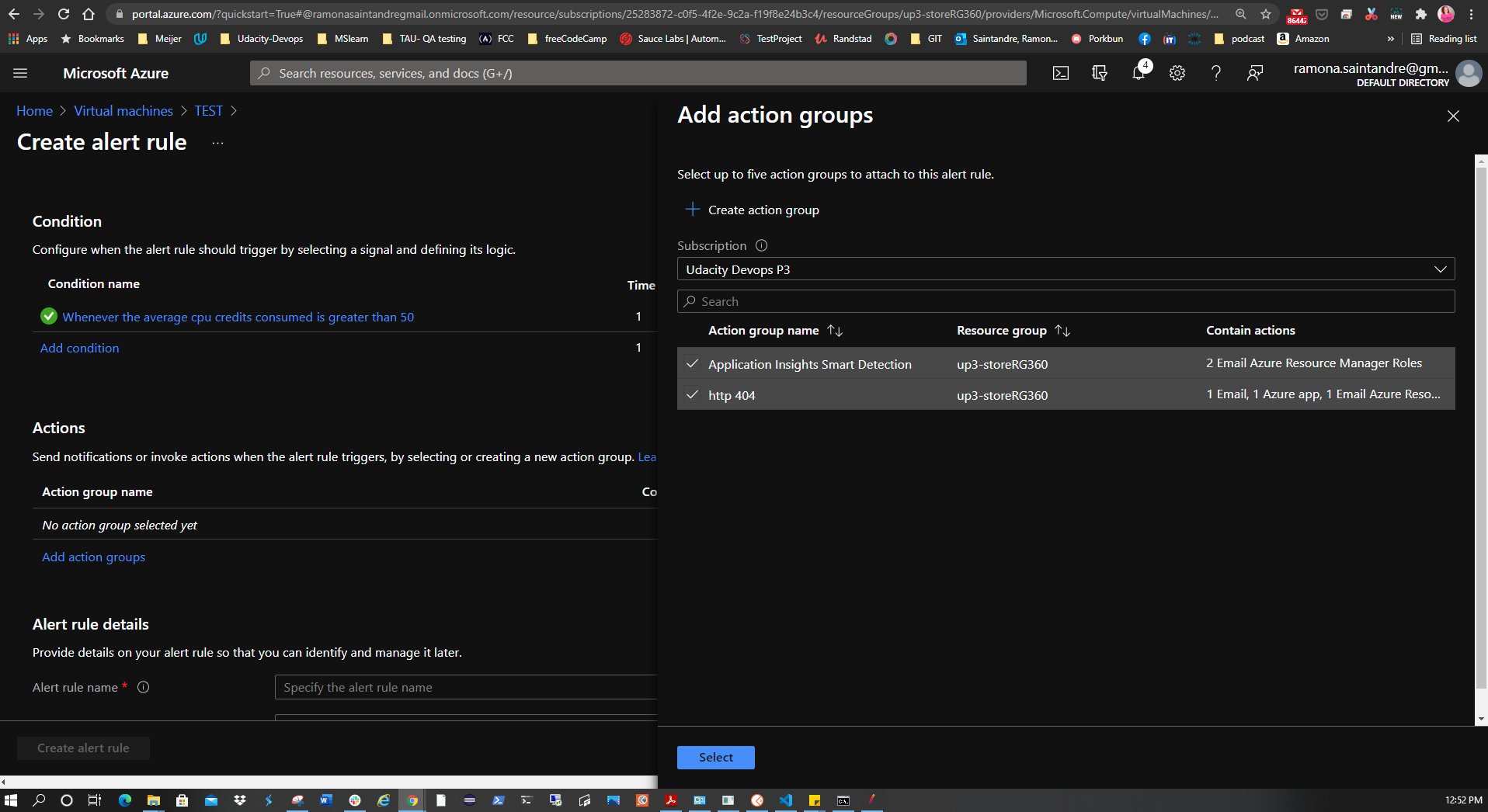

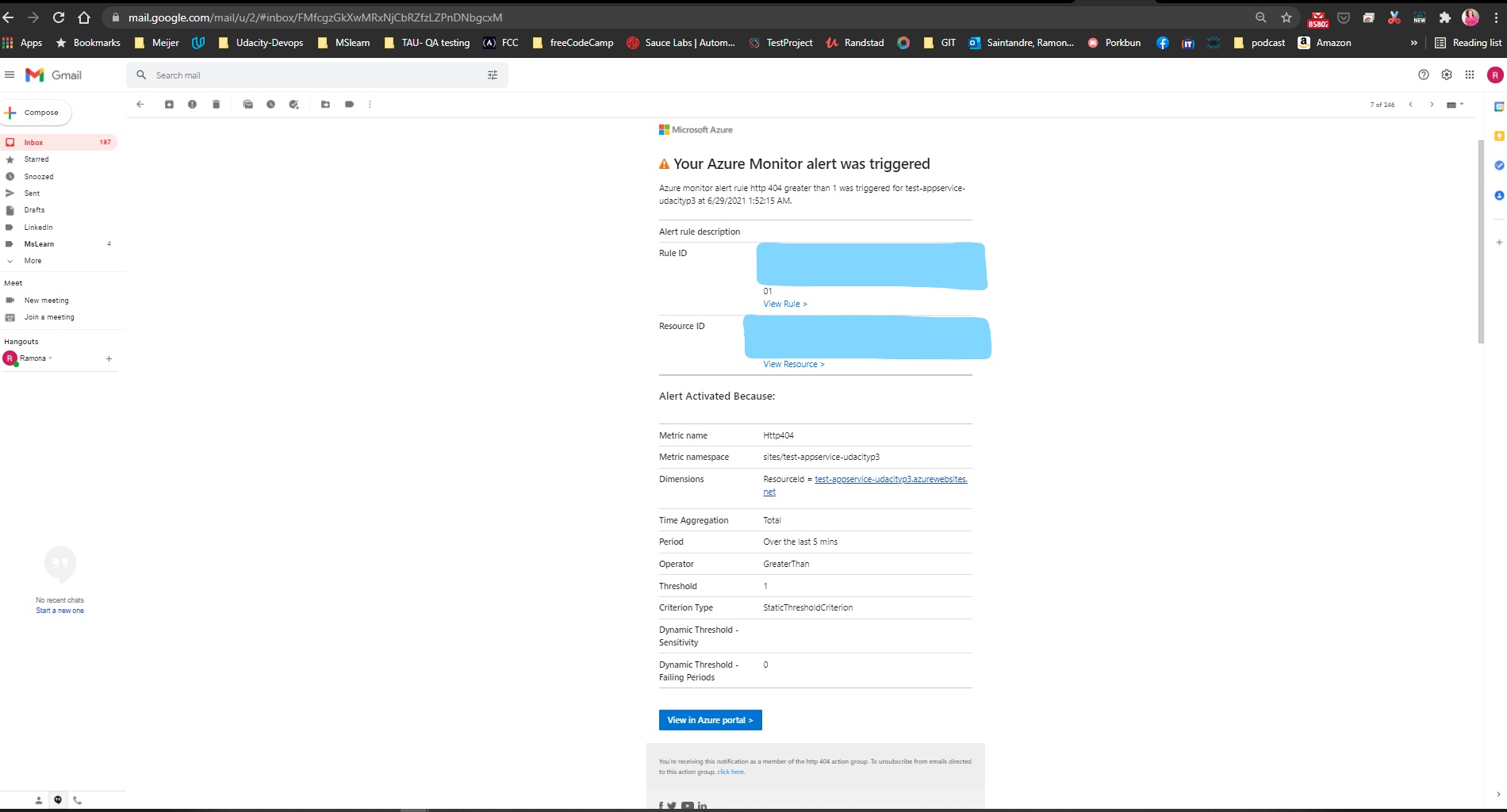

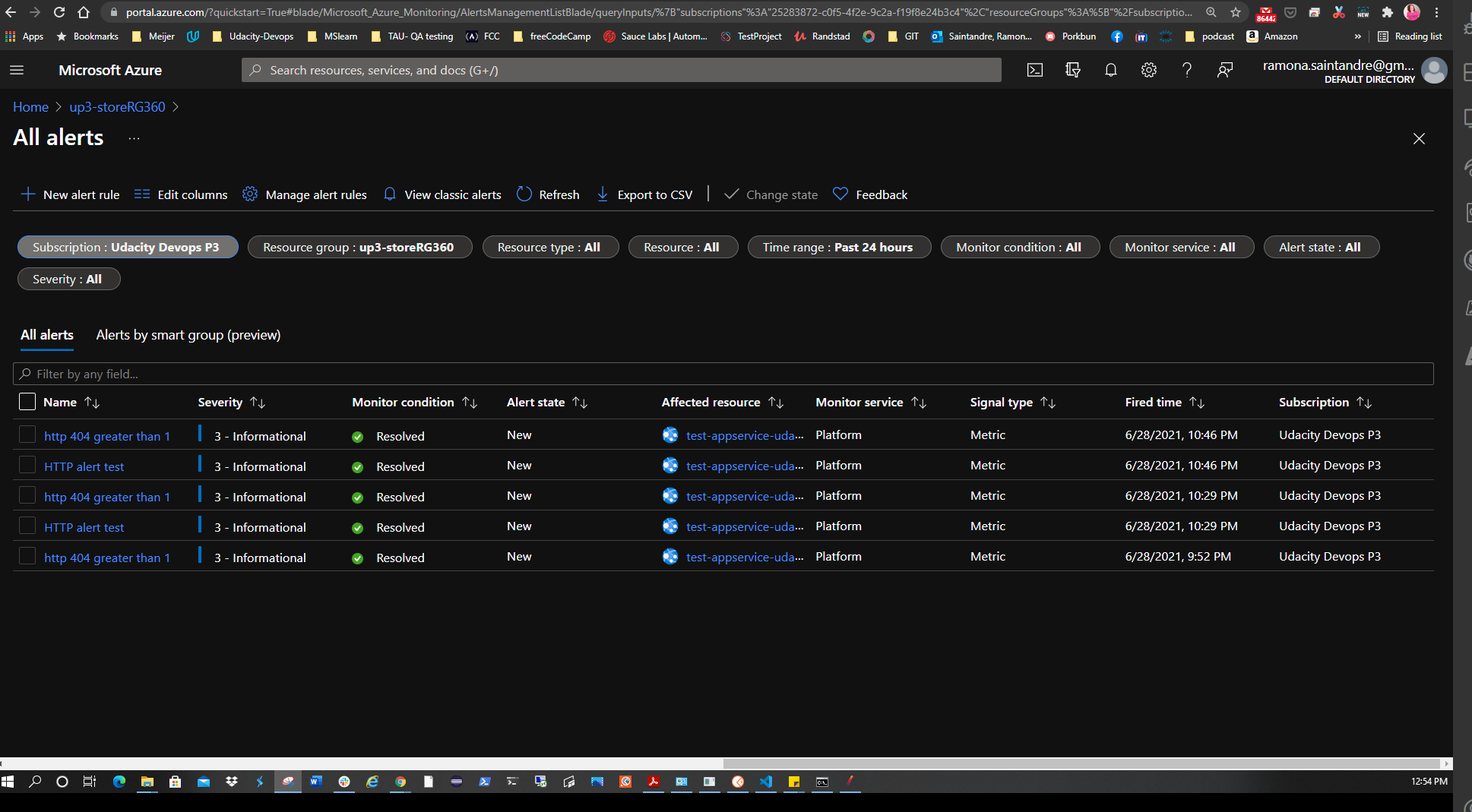

The project also calls for creating an alert rule with an HTTP 404 condition. It also requires an action group to be created with Email notification. After the alert takes effect, visit the URL of the AppService and try to cause 404 errors by visiting non-existent pages. After the 2nd 404 error an email alert should be triggered. To set this up:

In Azure Portal go to the AppService > Alerts > New Alert Rule. Add the HTTP 404 condition with a threshold value of 1. This creates an alert once there are at least 2 404 errors. Click Done. Now create the action group with the notification type set to Email/SMS message/Push/Voice choosing the email option. Give it a name and severity level.
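To provoke the alert without clicking through a browser, a small script can request pages that do not exist. This is a minimal sketch under assumptions: the function names are hypothetical, the random path only makes a real page unlikely to be hit, and the base URL is whatever your AppService URL turns out to be.

```python
import urllib.error
import urllib.request
import uuid


def fetch_status(url: str) -> int:
    """Return the HTTP status code for url, including error codes such as 404."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def trigger_404s(base_url: str, count: int = 3, fetch=fetch_status) -> list:
    """Request `count` random, presumably non-existent paths and collect the statuses."""
    statuses = []
    for _ in range(count):
        url = f"{base_url.rstrip('/')}/missing-{uuid.uuid4().hex}"
        statuses.append(fetch(url))
    return statuses
```

With a threshold of 1, calling `trigger_404s` with a `count` of 2 or more should be enough to cross it, although the email may take a few minutes to arrive because alert rules are evaluated periodically.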

One of the enhancements that I would like to make to this project is to recreate it using Azure Bicep.

**More images can be found in the /projectimagefolder**