This repository accompanies the paper (arXiv). Accepted to ACL 2020.

tl;dr: read the intro and one of the usage sections.

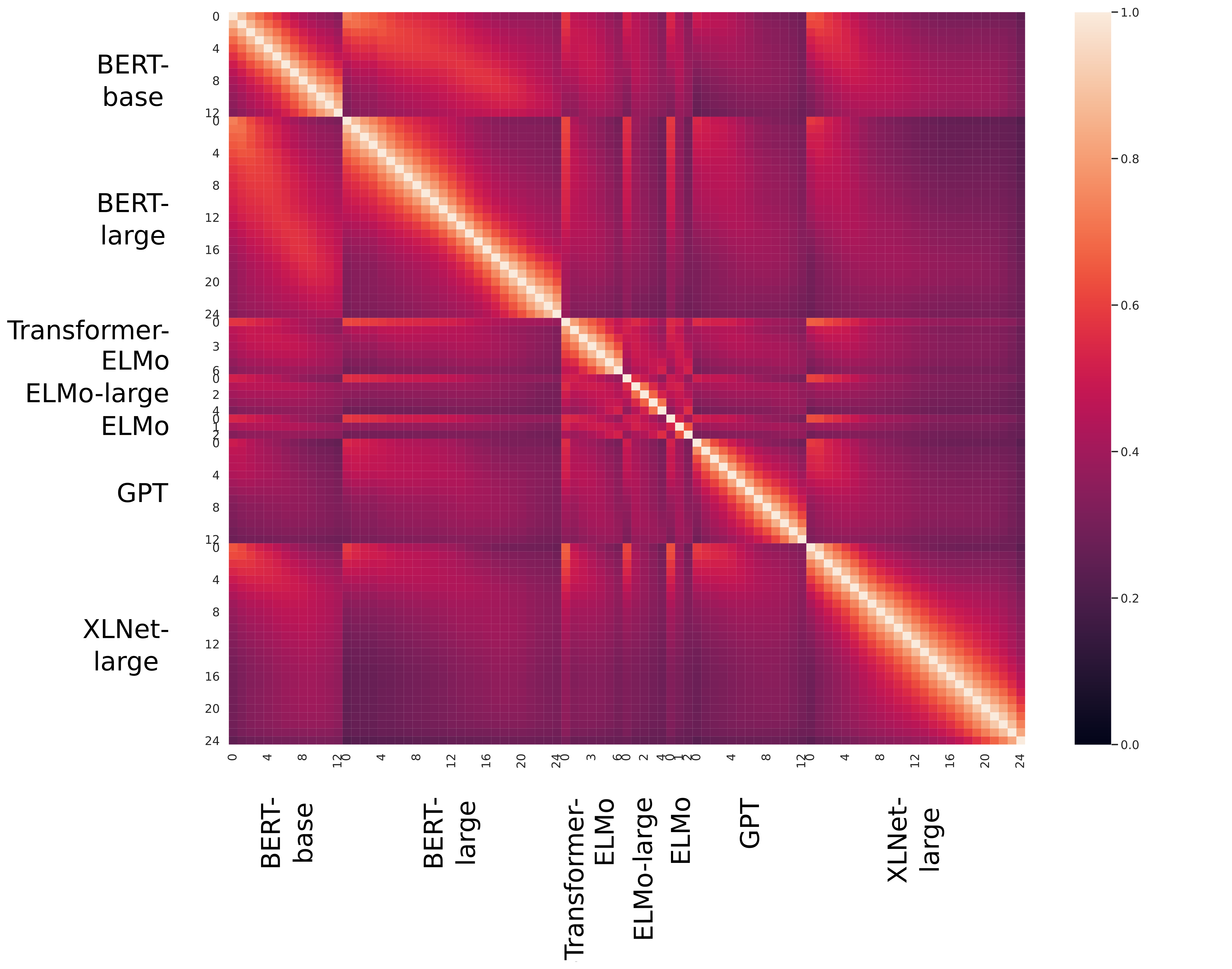

Tools to understand neural representations, and their application to contextualizers. A “contextualizer” is a model that produces context-dependent word embeddings.

Concretely, similarity measures, e.g.:

applied to state-of-the-art (SOTA) contextualizers, e.g.:

All similarity measures can be found in `corr_methods.py`.
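To make “similarity measure” concrete, here is a minimal NumPy sketch of one neuron-level measure. For each neuron of one model, it finds the maximum absolute Pearson correlation with any neuron of another model, computed over the same tokens. It was written for this README and is not the code in `corr_methods.py`. The function name `max_abs_correlation` and the (tokens × neurons) array layout are assumptions.

```python
import numpy as np


def max_abs_correlation(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """For each neuron (column) of x, return the largest |Pearson r| with any neuron of y.

    x: (num_tokens, dim_x), y: (num_tokens, dim_y); rows must be the same tokens in the same order.
    """
    # Standardize every column; the small epsilon guards against constant neurons.
    xs = (x - x.mean(axis=0)) / (x.std(axis=0) + 1e-8)
    ys = (y - y.mean(axis=0)) / (y.std(axis=0) + 1e-8)
    # Pearson correlation between every x-neuron and every y-neuron: shape (dim_x, dim_y).
    corr = xs.T @ ys / x.shape[0]
    return np.abs(corr).max(axis=1)
```

The result has one score per neuron of `x`. Averaging or sorting these scores is one way to reduce them to a single model-to-model number.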

We also experimented with novel attention-based similarity measures in `attention_corr_methods.py`.

This repository should be on your Python path. From the repository root, run:

```bash
export PYTHONPATH="${PWD}:${PYTHONPATH}"
```

The main script is `main.py`.

```
main.py [--methods [METHODS ...]] REPRESENTATION_FILES OUTPUT_FILE
```

For examples, see `slurm` (e.g. `mk_resultsN.sh` and `mk_resultsN-helper.sh`). To see all options, run `python main.py --help`. Note that `REPRESENTATION_FILES` is a file containing one input file path per line, and `OUTPUT_FILE` is a pickle dump.

`main_attn.py` is analogous.
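`REPRESENTATION_FILES` is a plain text file with one path per line, and `OUTPUT_FILE` is a pickle, so both are easy to produce and inspect from Python. Below is a minimal sketch. The helper names are hypothetical. The structure of the unpickled object depends on the methods you ran, so inspect it rather than assuming a layout.

```python
import pickle
from pathlib import Path


def write_representation_list(hdf5_paths, list_path):
    """Write one representation-file path per line (the REPRESENTATION_FILES format)."""
    Path(list_path).write_text("".join(f"{path}\n" for path in hdf5_paths))


def load_output(output_path):
    """Unpickle an OUTPUT_FILE written by main.py (only unpickle files you trust)."""
    with open(output_path, "rb") as f:
        return pickle.load(f)
```

A typical sequence would be:

1. Write the list with `write_representation_list(paths, "repr_files.txt")`.
2. Run `python main.py repr_files.txt results.pkl`.
3. Call `load_output("results.pkl")`.

The file names here are placeholders.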

You can also call the correlation methods directly from Python; see `ex.ipynb`.
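In a notebook, exporting `PYTHONPATH` before launching is not always convenient. A minimal alternative is to put the repository on `sys.path` before importing its modules. This assumes the notebook is started from the repository root; adjust `REPO_ROOT` otherwise.

```python
import os
import sys

# Assumption: the current working directory is the repository root.
REPO_ROOT = os.getcwd()

# Prepend it so this repository's modules (e.g. corr_methods) are found first.
if REPO_ROOT not in sys.path:
    sys.path.insert(0, REPO_ROOT)
```

After this, `import corr_methods` should resolve to this repository's module.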

- `var.py`. Things you might want to change if you use this code. For example, it has the function `fname2mname` (filename to model name), which transforms `/data/sls/temp/belinkov/contextual-corr-analysis/contextualizers/bert_large_cased/ptb_pos_dev.hdf5` to `bert_large_cased-ptb_pos_dev.hdf5`.
- `analysis`. Data analysis, i.e. the results that are presented. `analysis-n` analyzes the results of experiment n.
- `hnb`. “Helper notebook.” Files in this directory are meant to
  - help me write the code, and
  - help the reader understand the resulting `.py` files.

  Each one contains a copy of a function with its loops and similar constructs flattened into straight-line code (run once with an arbitrary value, to help debugging). It may help you understand a function.
- `slurm`. SLURM scripts. Run directly as `SCRIPTNAME`.
- `other`. Everything else. Lots of junk.
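The `fname2mname` example above maps a representation path to a model name by joining the model directory and the file name with a hyphen. Below is a minimal sketch of that mapping. It assumes the model name is always the file's parent directory. `fname2mname_sketch` is a hypothetical name, and the real function in `var.py` may handle other path layouts.

```python
from pathlib import PurePosixPath


def fname2mname_sketch(fname: str) -> str:
    """Map .../<model_dir>/<file> to '<model_dir>-<file>' (illustrative only)."""
    path = PurePosixPath(fname)
    return f"{path.parent.name}-{path.name}"


print(fname2mname_sketch(
    "/data/sls/temp/belinkov/contextual-corr-analysis/contextualizers/"
    "bert_large_cased/ptb_pos_dev.hdf5"
))
# prints: bert_large_cased-ptb_pos_dev.hdf5
```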

Our pipeline is:

- Generate representations (hdf5 files)
- Run `main.py` on them, which
  - loads the representations (`load_representations` in `corr_methods.py`),
  - computes the correlations using the given methods, and
  - writes them to `OUTPUT_FILE`.
- Analyze the results (the `OUTPUT_FILE` above) in the `analysis` directory

New correlation methods should extend `corr_methods.Method`.
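The sketch below shows the shape such an extension might take. It uses a self-contained stand-in base class, `MethodSketch`, whose steps mirror the pipeline: hold the loaded representations, compute correlations, and write them as a pickle. It is not the real `corr_methods.Method`, whose constructor and method names may differ, so check `corr_methods.py` before subclassing. The example subclass, `LinRegR2`, is chosen only for illustration. It scores how much of one model's variance a least-squares linear map from the other model explains.

```python
import pickle
from abc import ABC, abstractmethod

import numpy as np


class MethodSketch(ABC):
    """Hypothetical stand-in for corr_methods.Method (the real interface may differ)."""

    def __init__(self, representations):
        # Assumption: a dict mapping model name -> (num_tokens, dim) array with aligned rows,
        # i.e. what a load step would produce from the hdf5 files.
        self.representations = representations
        self.results = {}

    @abstractmethod
    def similarity(self, x, y):
        """Return a scalar similarity between two aligned representation matrices."""

    def compute_correlations(self):
        # Score every ordered pair of distinct models.
        names = sorted(self.representations)
        for a in names:
            for b in names:
                if a != b:
                    self.results[(a, b)] = self.similarity(
                        self.representations[a], self.representations[b]
                    )
        return self.results

    def write_correlations(self, output_file):
        # Like main.py's OUTPUT_FILE, the results are written as a pickle dump.
        with open(output_file, "wb") as f:
            pickle.dump(self.results, f)


class LinRegR2(MethodSketch):
    """Illustrative subclass: R^2 of a least-squares linear map predicting x from y."""

    def similarity(self, x, y):
        xc = x - x.mean(axis=0)
        yc = y - y.mean(axis=0)
        coef, *_ = np.linalg.lstsq(yc, xc, rcond=None)
        residual = xc - yc @ coef
        return float(1.0 - (residual ** 2).sum() / (xc ** 2).sum())
```

`LinRegR2` is asymmetric, which is why the sketch scores both orderings of each model pair.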

SVCCA similarities (method `CCA` in `corr_methods.py`):
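For reference, SVCCA (singular vector canonical correlation analysis) works in three steps:

1. Reduce each representation to the top singular directions that explain most of its variance.
2. Run CCA between the reduced representations.
3. Average the canonical correlations.

Below is a minimal NumPy sketch. It assumes (tokens × neurons) arrays with aligned rows. The 99% variance threshold and the function names are assumptions, and this is not the `CCA` code in `corr_methods.py`.

```python
import numpy as np


def _svd_reduce(x, keep_variance=0.99):
    """Center x and keep the top singular directions explaining keep_variance of its variance."""
    xc = x - x.mean(axis=0)
    u, s, _ = np.linalg.svd(xc, full_matrices=False)
    explained = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(explained, keep_variance)) + 1
    return u[:, :k] * s[:k]


def svcca(x, y, keep_variance=0.99):
    """Mean canonical correlation between the SVD-reduced x and y (rows = aligned tokens)."""
    xr = _svd_reduce(x, keep_variance)
    yr = _svd_reduce(y, keep_variance)
    # Canonical correlations are the singular values of Qx^T Qy,
    # where Qx and Qy are orthonormal bases of the reduced representations.
    qx, _ = np.linalg.qr(xr)
    qy, _ = np.linalg.qr(yr)
    rho = np.linalg.svd(qx.T @ qy, compute_uv=False)
    return float(np.clip(rho, 0.0, 1.0).mean())
```

The canonical correlations come from orthonormal bases rather than explicitly inverted covariance matrices, which keeps the sketch numerically stable. A consequence is that SVCCA is unchanged when one representation is rotated.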

CKA attention similarity (method `AttnLinCKA` in `attention_corr_methods.py`):
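Linear CKA (centered kernel alignment, from Kornblith et al., 2019) compares two matrices with aligned rows. It centers each column, then computes `||Yc^T Xc||_F^2 / (||Xc^T Xc||_F * ||Yc^T Yc||_F)`.

The sketch below applies this to two toy attention maps over the same sentence. Each query token is a row, and its attention weights over key positions are the features. That feature construction and the helper names are assumptions for illustration; `AttnLinCKA` may build its inputs differently.

```python
import numpy as np


def linear_cka(x, y):
    """Linear CKA between two matrices whose rows are aligned (same tokens, same order)."""
    xc = x - x.mean(axis=0)
    yc = y - y.mean(axis=0)
    cross = np.linalg.norm(yc.T @ xc, "fro") ** 2
    norm_x = np.linalg.norm(xc.T @ xc, "fro")
    norm_y = np.linalg.norm(yc.T @ yc, "fro")
    return float(cross / (norm_x * norm_y))


def toy_attention(n, rng):
    """Random row-stochastic (n, n) matrix: row i is query token i's softmax over key positions."""
    logits = rng.standard_normal((n, n))
    weights = np.exp(logits - logits.max(axis=1, keepdims=True))
    return weights / weights.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
head_a = toy_attention(12, rng)
head_b = toy_attention(12, rng)
same = linear_cka(head_a, head_a)   # 1 up to floating-point rounding
other = linear_cka(head_a, head_b)  # lies in [0, 1]
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling of either input. This invariance is part of why it is used to compare representations whose individual dimensions do not line up.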