- [2024.1.09] We have comprehensively improved the performance of LHNetV1, especially its inference speed, and the latest paper is currently under review. The code of LHNetV2 will be released after that paper is accepted.

This repository contains the official PyTorch implementation of the paper:

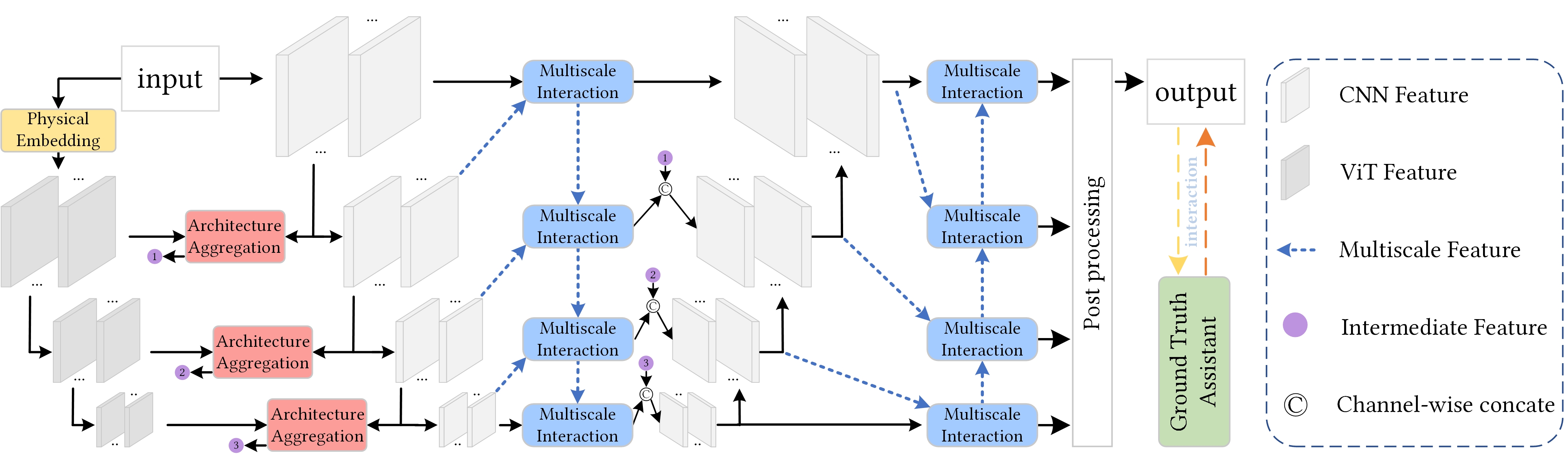

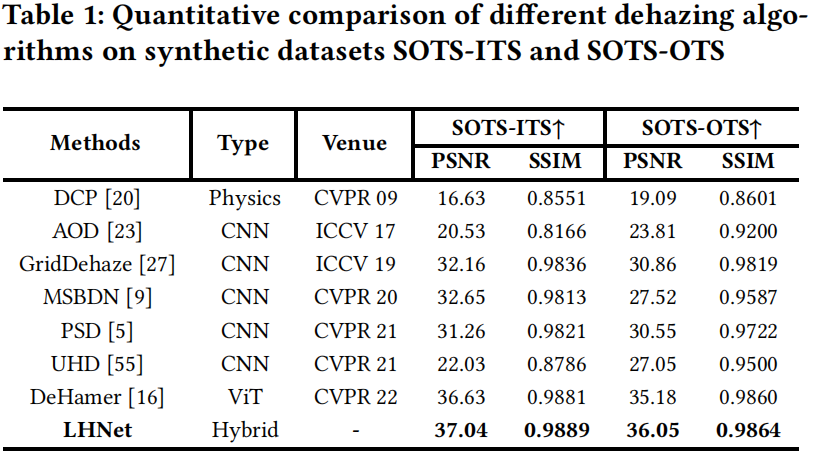

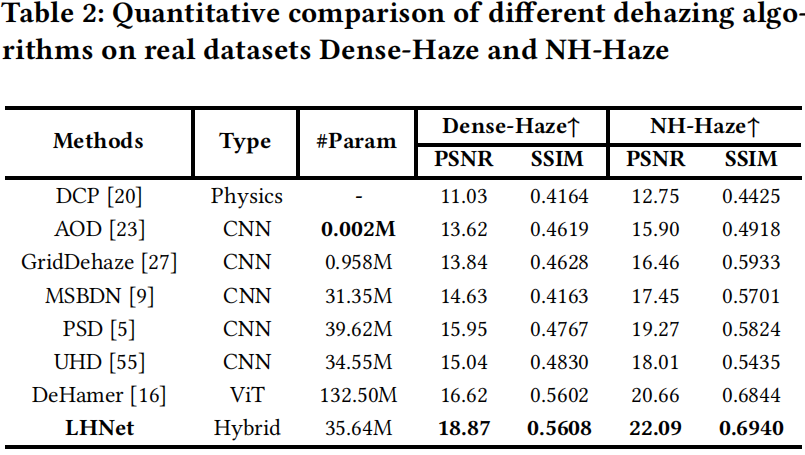

LHNet: A Low-cost Hybrid Network for Single Image Dehazing, ACM MM 2023

- Clone Repo

      git clone https://github.com/SHYuanBest/LHNet-ACM-MM-23.git
      cd LHNet-ACM-MM-23/

- Create a virtual environment via conda.

      conda create -n LHNet python=3.7
      conda activate LHNet

- Install requirements.

      pip install -r requirements.txt

- Download pre-trained models.

  - Download the pre-trained generator and discriminator from here, and place them into the folder ./ckpts:

        ckpts
           |- ITS
              |- ITS.pt
           |- OTS
              |- OTS.pt
           |- Dense
              |- Dense.pt
           |- NH
              |- NH.pt
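Before running training or testing, it can help to confirm that the four checkpoint files sit where the tree above puts them. The following is a minimal sketch, not part of the repository: the helper name `missing_checkpoints` and the default root `./ckpts` are assumptions chosen for illustration, and only the folder and file names are taken from the layout above.

```python
from pathlib import Path

# Folder -> file name, copied from the checkpoint tree above.
EXPECTED_CKPTS = {
    "ITS": "ITS.pt",
    "OTS": "OTS.pt",
    "Dense": "Dense.pt",
    "NH": "NH.pt",
}


def missing_checkpoints(ckpt_root="./ckpts"):
    """Return the expected checkpoint paths that do not exist under ckpt_root.

    Hypothetical helper: it only checks that the files are present,
    not that they load as valid PyTorch checkpoints.
    """
    root = Path(ckpt_root)
    return [
        root / name / filename
        for name, filename in EXPECTED_CKPTS.items()
        if not (root / name / filename).is_file()
    ]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:")
        for path in missing:
            print(f"  {path}")
    else:
        print("All four checkpoints found.")
```

Run it from the repository root; an empty result means every file in the tree is in place.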

- Data preparation.

  - LHNet is trained on RESIDE-ITS, RESIDE-OTS, Dense-Haze and NH-HAZE.

  - Download all the datasets and place them into the folder ./datasets.

    | Dataset | RESIDE-ITS | RESIDE-OTS | Dense-Haze | NH-HAZE | SOTS-ITS/OTS |
    | --- | --- | --- | --- | --- | --- |
    | Details | about 4.5G | about 45G | about 250M | about 315M | about 415M |
    | Google drive | Google drive | Google drive | Google drive | Google drive | Google drive |

    The directory structure will be arranged as:

        datasets
           |- ITS
              |- train_ITS
                 |- haze
                 |- clear_images
                 |- trainlist.txt
              |- valid_ITS
                 |- input
                 |- gt
                 |- val_list.txt
           |- OTS
              |- train_OTS
                 |- haze
                 |- clear_images
                 |- trainlist.txt
              |- valid_OTS
                 |- input
                 |- gt
                 |- val_list.txt
           |- Dense-Haze
              |- train_Dense
                 |- haze
                 |- clear_images
                 |- trainlist.txt
              |- valid_Dense
                 |- input
                 |- gt
                 |- val_list.txt
           |- NH-Haze
              |- train_NH
                 |- haze
                 |- clear_images
                 |- trainlist.txt
              |- valid_NH
                 |- input
                 |- gt
                 |- val_list.txt

  - Alternatively, arrange a custom dataset in the same format as shown above.
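When arranging a downloaded or custom dataset, a quick structural check can catch a misplaced folder before a long training run. The sketch below is illustrative only: `expected_paths` and `missing_entries` are hypothetical helpers, and the folder names are copied from the directory tree above. It does not inspect the contents of `trainlist.txt` or `val_list.txt`, since their format is not described here.

```python
from pathlib import Path

# Dataset folder -> short name used in its train_*/valid_* sub-folders,
# copied from the directory tree above.
DATASETS = {"ITS": "ITS", "OTS": "OTS", "Dense-Haze": "Dense", "NH-Haze": "NH"}
TRAIN_ENTRIES = ("haze", "clear_images", "trainlist.txt")
VALID_ENTRIES = ("input", "gt", "val_list.txt")


def expected_paths(data_root, dataset):
    """List the six entries one dataset folder is expected to contain."""
    short = DATASETS[dataset]
    base = Path(data_root) / dataset
    train = [base / f"train_{short}" / entry for entry in TRAIN_ENTRIES]
    valid = [base / f"valid_{short}" / entry for entry in VALID_ENTRIES]
    return train + valid


def missing_entries(data_root, dataset):
    """Return the expected entries that are absent on disk."""
    return [p for p in expected_paths(data_root, dataset) if not p.exists()]


if __name__ == "__main__":
    for name in DATASETS:
        missing = missing_entries("./datasets", name)
        status = "ok" if not missing else f"{len(missing)} missing"
        print(f"{name}: {status}")
        for path in missing:
            print(f"  {path}")
```

For a custom dataset, add an entry to `DATASETS` with the folder name and the short name used in its `train_*` and `valid_*` sub-folders.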


Run python src/train.py --h for a list of optional arguments.

An example:

    CUDA_VISIBLE_DEVICES=0 python src/train.py \
      --dataset-name your_choice \
      --data-dir ../datasets \
      --ckpt-save-path ../weights \
      --ckpt-load-path ../ckpts/your_choice.pt \
      --nb-epochs 2000 \
      --batch-size 8 \
      --train-size 512 512 \
      --valid-size 512 512 \
      --loss l1 \
      --plot-stats \
      --cuda True

Run python src/test.py --h for a list of optional arguments.

An example:

    CUDA_VISIBLE_DEVICES=0 python src/test.py \
      --dataset-name your_choice \
      --data-dir your_choice \
      --ckpts-dir your_choice \
      -val_batch_size 1

If you find LHNet useful in your research, please consider citing our paper:

    @inproceedings{yuan2023lhnet,
      title={LHNet: A Low-cost Hybrid Network for Single Image Dehazing},
      author={Yuan, Shenghai and Chen, Jijia and Li, Jiaqi and Jiang, Wenchao and Guo, Song},
      booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
      pages={7706--7717},
      year={2023}
    }

If you have any questions, please feel free to contact us via [email protected]

This code is based on UNet, Swin-Transformer and Dehamer.