An implementation of WaveNet: A Generative Model for Raw Audio (https://arxiv.org/abs/1609.03499).

This project originated from the hands-on lecture at SPCC 2018. It rewrites the lecture's code with the following criteria:

- Simple, modular and easy to read

- Use high-level TensorFlow APIs: `tf.layers.Layer`, `tf.data.Dataset`, and `tf.estimator.Estimator`

- Fix the discrepancy between training and inference results, which otherwise requires a workaround that discards the wrong results from the early steps of inference samples

- Review the lecture and deepen my understanding

This project has the following limitations:

- LJSpeech is the only supported data set

- None of the sophisticated initialization, optimization, and regularization techniques used in the lecture

- No hyper-parameter tuning

- The generated audio has been confirmed to be low quality

For research-ready implementations, please refer to

This implementation was tested on a Tesla K20c (4.94 GiB GPU memory).

This project requires Python >= 3.6 and TensorFlow >= 1.8.

The other dependencies can be installed with conda.

```sh
conda env create -f=environment.yml
```

The following packages are installed:

- pyspark=2.3.1

- librosa==0.6.1

- matplotlib=2.2.2

- hypothesis=3.59.1

- docopt=0.6.2

The following pre-processing command extracts mel-spectrograms and serializes the waveforms, mel-spectrograms, and other metadata into TFRecord format (a sequence of serialized protocol buffers, each record framed with its length and CRC checksums).

```sh
python preprocess.py ljspeech /path/to/input/corpus/dir /path/to/output/dir/of/preprocessed/data
```

After pre-processing, split the data into training, validation, and test sets.

A simple way to create a list file is with the `ls` command:

```sh
ls /path/to/output/dir/of/preprocessed/data | sed 's/\.tfrecord$//' > list.txt
```

Then split `list.txt` into three files.

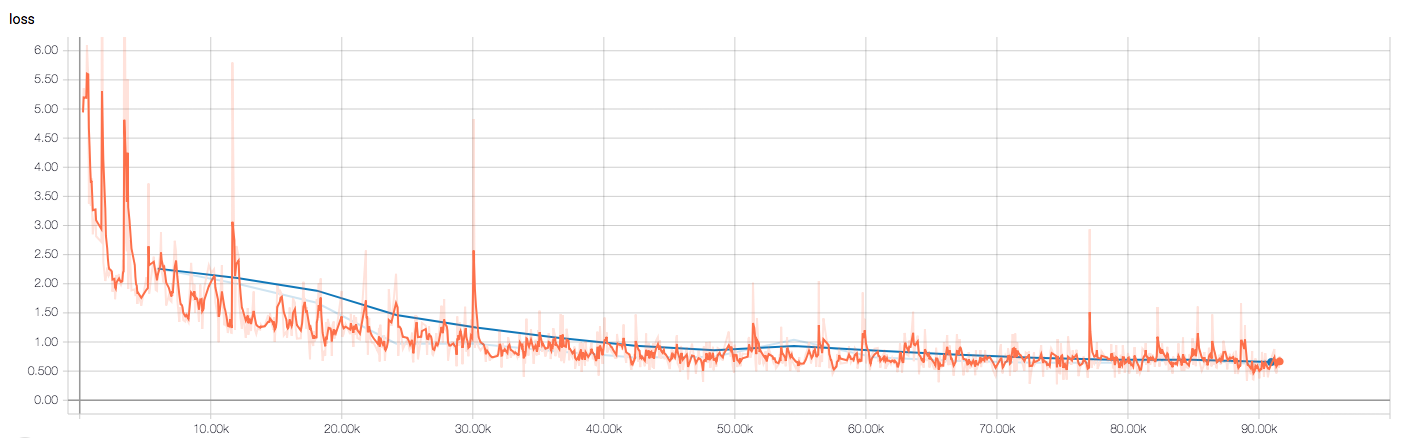
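This README does not prescribe how to split `list.txt`. Below is a minimal Python sketch, assuming a seeded random shuffle; the function names `split_list` and `write_splits`, the 5% validation and test ratios, and the output names `train.txt`, `validation.txt`, and `test.txt` are hypothetical choices for illustration, not part of this project.

```python
import random
from pathlib import Path


def split_list(lines, valid_ratio=0.05, test_ratio=0.05, seed=0):
    """Shuffle utterance IDs and split them into train/validation/test lists.

    The ratios and seed are illustrative defaults, not values used by this project.
    """
    ids = [line.strip() for line in lines if line.strip()]
    random.Random(seed).shuffle(ids)  # deterministic shuffle for reproducible splits
    n_valid = int(len(ids) * valid_ratio)
    n_test = int(len(ids) * test_ratio)
    valid = ids[:n_valid]
    test = ids[n_valid:n_valid + n_test]
    train = ids[n_valid + n_test:]  # everything left over goes to training
    return train, valid, test


def write_splits(list_file, out_dir):
    """Read one ID per line from list_file and write three hypothetical list files."""
    lines = Path(list_file).read_text().splitlines()
    train, valid, test = split_list(lines)
    out = Path(out_dir)
    for name, ids in [("train.txt", train), ("validation.txt", valid), ("test.txt", test)]:
        (out / name).write_text("\n".join(ids) + "\n")
    return train, valid, test
```

For example, `write_splits("list.txt", ".")` writes the three files into the current directory; their paths can then be passed to `--training-list-file`, `--validation-list-file`, and `--test-list-file`.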

```sh
python train.py --data-root=/path/to/output/dir/of/preprocessed/data \
  --checkpoint-dir=/path/to/checkpoint/dir \
  --dataset=ljspeech \
  --training-list-file=/path/to/file/listing/training/data \
  --validation-list-file=/path/to/file/listing/validation/data \
  --log-file=/path/to/log/file
```

You can see the training and validation losses in the log file and on TensorBoard:

```sh
tensorboard --logdir=/path/to/checkpoint/dir
```

(orange line: training loss, blue line: validation loss)

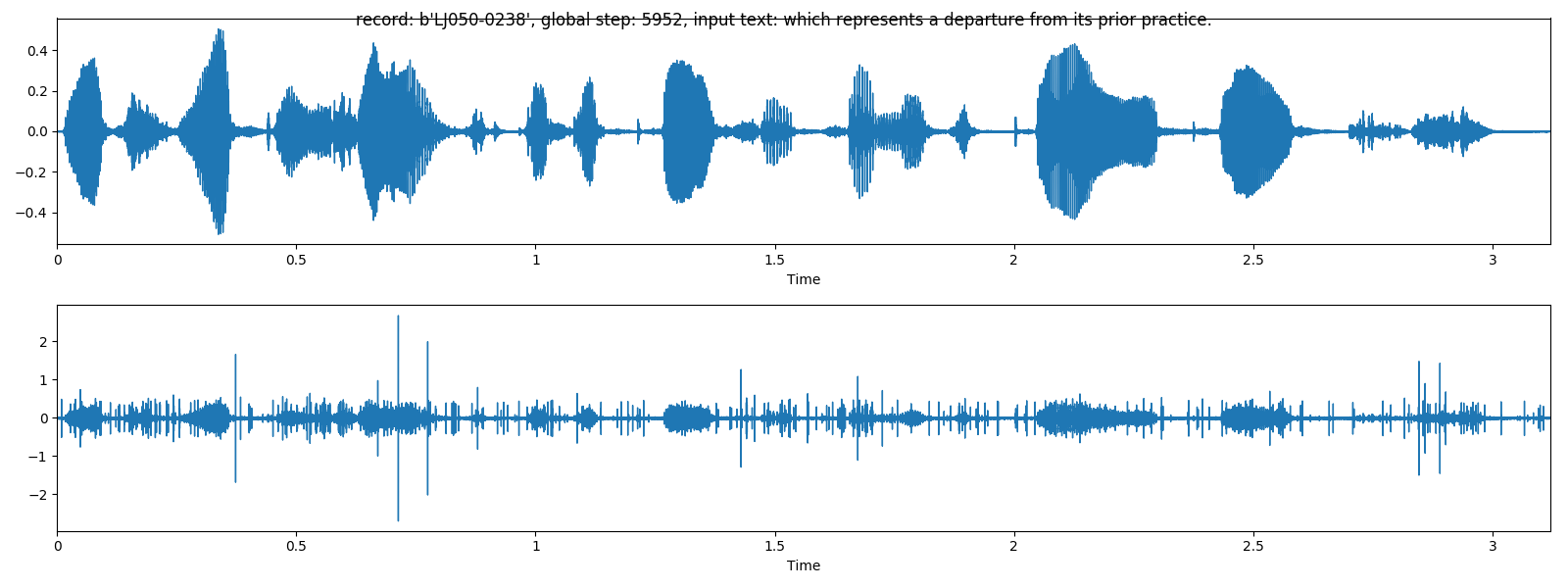

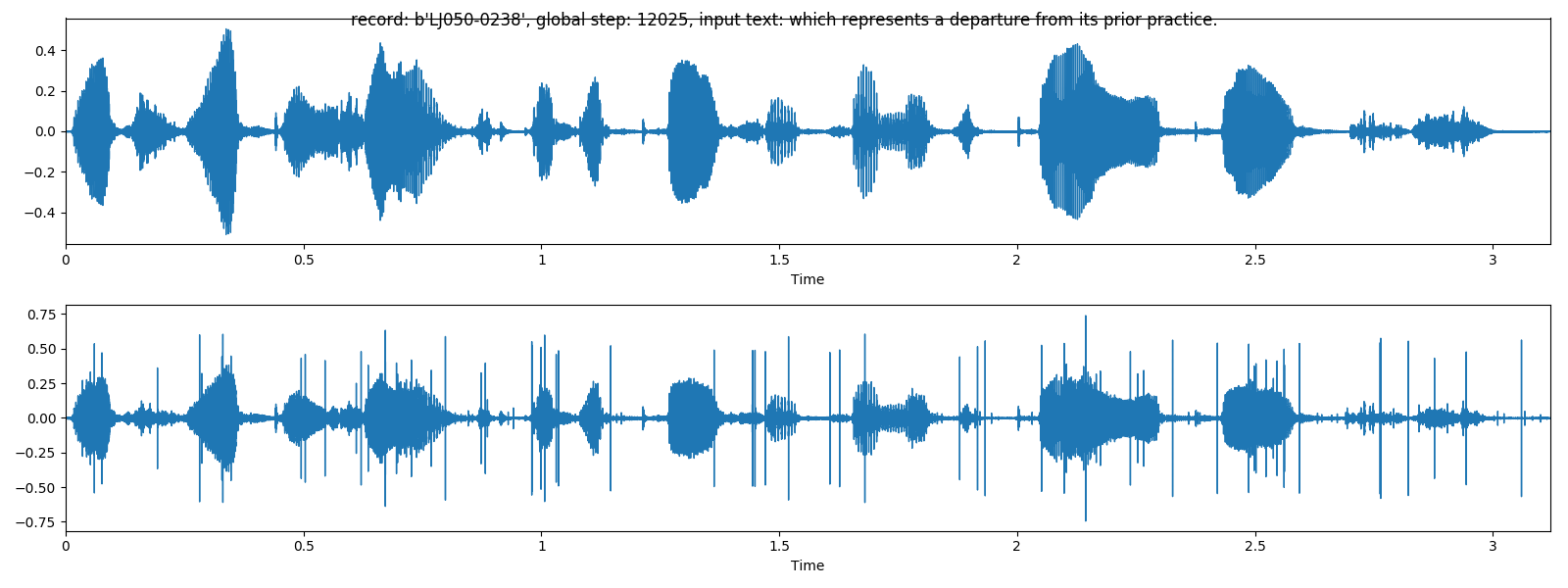

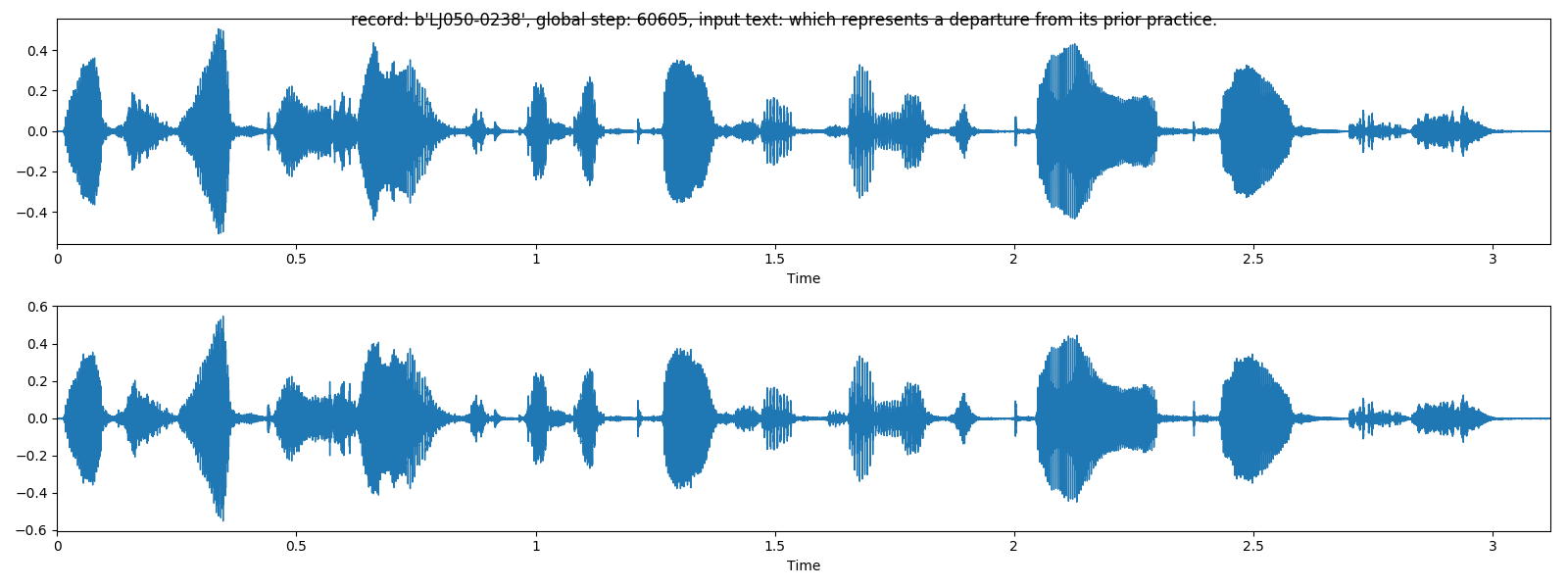

At validation time, waveforms predicted with teacher forcing are saved as images in the checkpoint directory.

(above: natural, below: predicted)

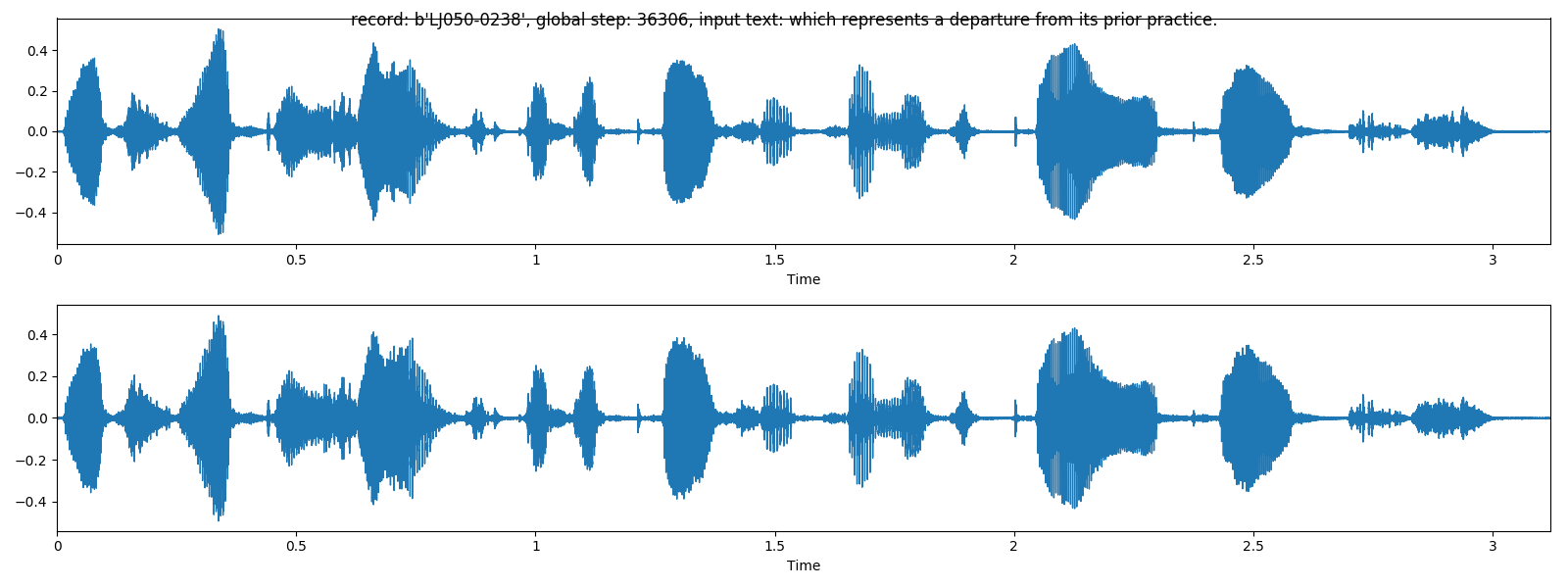

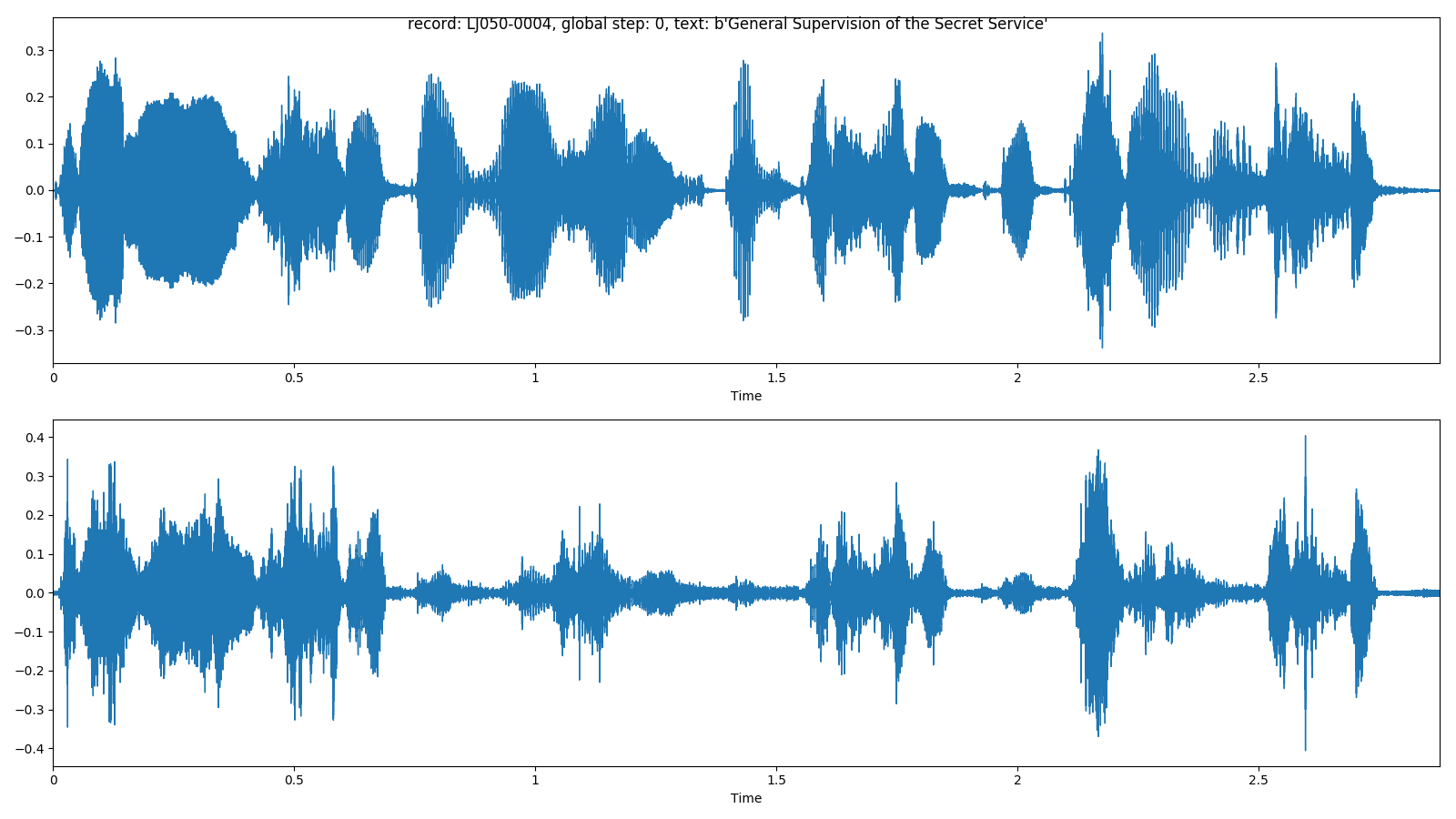
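With teacher forcing, each predicted sample is conditioned on the ground-truth previous samples rather than on the model's own earlier outputs, so every time step can be computed independently. The sketch below contrasts this with free-running (autoregressive) generation of the kind used at inference time. It uses a toy scalar model that looks only at the previous sample; `teacher_forced`, `free_running`, and the toy model are hypothetical and not part of this project.

```python
def teacher_forced(model, target, initial):
    """Predict every sample from the ground-truth previous sample.

    Each prediction depends only on known inputs, so all time steps are
    independent and could be computed in parallel.
    """
    previous = [initial] + list(target)[:-1]
    return [model(p) for p in previous]


def free_running(model, length, initial):
    """Predict each sample from the model's own previous prediction.

    Step t needs the output of step t - 1, so generation is sequential.
    """
    outputs = []
    previous = initial
    for _ in range(length):
        previous = model(previous)
        outputs.append(previous)
    return outputs
```

With an imperfect model, teacher-forced predictions stay anchored to the ground truth while free-running errors can compound over time, so teacher-forced validation plots may look better than the generated audio.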

```sh
python predict.py --data-root=/path/to/output/dir/of/preprocessed/data \
  --checkpoint-dir=/path/to/checkpoint/dir \
  --dataset=ljspeech \
  --test-list-file=/path/to/file/listing/test/data \
  --output-dir=/path/to/output/dir
```

At prediction time, the predicted samples are written as audio files and image files.

(above: natural, below: predicted)

Causal convolution is implemented in two different ways. At training time, it runs in parallel with an optimized CUDA kernel. At inference time, it runs sequentially with matrix multiplication. The two implementations should produce the same results, and this project checks their equality with property-based tests:

```sh
python -m unittest ops/convolutions_test.py
python -m unittest layers/modules_test.py
```
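To illustrate why the parallel and sequential causal convolutions can agree exactly, here is a minimal single-channel sketch in pure Python. It is not the project's TensorFlow code: `causal_conv_parallel` left-pads the input with `(K - 1) * dilation` zeros and convolves the whole sequence at once, while `causal_conv_sequential` keeps a buffer of the last `(K - 1) * dilation + 1` inputs and computes one output per new sample. With multiple channels, the per-step dot product becomes a matrix multiplication. The project uses hypothesis for its property-based tests; here a seeded `random` loop stands in for it, and all function names are hypothetical.

```python
import math
import random
from collections import deque


def causal_conv_parallel(x, weights, dilation):
    """Causal dilated 1-D convolution over the whole sequence (training-style).

    y[t] = sum_k weights[k] * x[t - (K - 1 - k) * dilation], with x[i < 0] = 0.
    """
    pad = (len(weights) - 1) * dilation
    padded = [0.0] * pad + list(x)  # left padding keeps the output causal
    return [
        sum(w * padded[t + i * dilation] for i, w in enumerate(weights))
        for t in range(len(x))
    ]


def causal_conv_sequential(x, weights, dilation):
    """Same convolution computed one sample at a time (inference-style).

    The buffer holds only the inputs the current output needs, so each step
    costs O(K) instead of recomputing over the whole history.
    """
    size = (len(weights) - 1) * dilation + 1
    buffer = deque([0.0] * size, maxlen=size)
    outputs = []
    for sample in x:
        buffer.append(sample)  # the oldest input drops off the left end
        outputs.append(sum(w * buffer[i * dilation] for i, w in enumerate(weights)))
    return outputs


def check_equivalence(trials=200, seed=0):
    """Randomized stand-in for a property-based test of the two implementations."""
    rng = random.Random(seed)
    for _ in range(trials):
        length = rng.randint(1, 50)
        kernel_size = rng.randint(1, 4)
        dilation = rng.choice([1, 2, 4, 8])
        x = [rng.uniform(-1.0, 1.0) for _ in range(length)]
        w = [rng.uniform(-1.0, 1.0) for _ in range(kernel_size)]
        parallel = causal_conv_parallel(x, w, dilation)
        sequential = causal_conv_sequential(x, w, dilation)
        assert len(parallel) == len(sequential) == length
        assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(parallel, sequential))
    return True
```

Because both versions multiply the same inputs by the same weights in the same order, they agree here to floating-point precision; in the real layers, differences between the CUDA kernel and the matrix-multiplication path may require a small tolerance instead.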