My "Hello world" project for neural networks. It is currently based on the Keras API, but I am going to implement one from scratch once I have mastered backpropagation.

Before trying the programs, make sure numpy and opencv-python are properly installed.

To run derivative.py, you also need matplotlib, since it uses pyplot to graph functions.

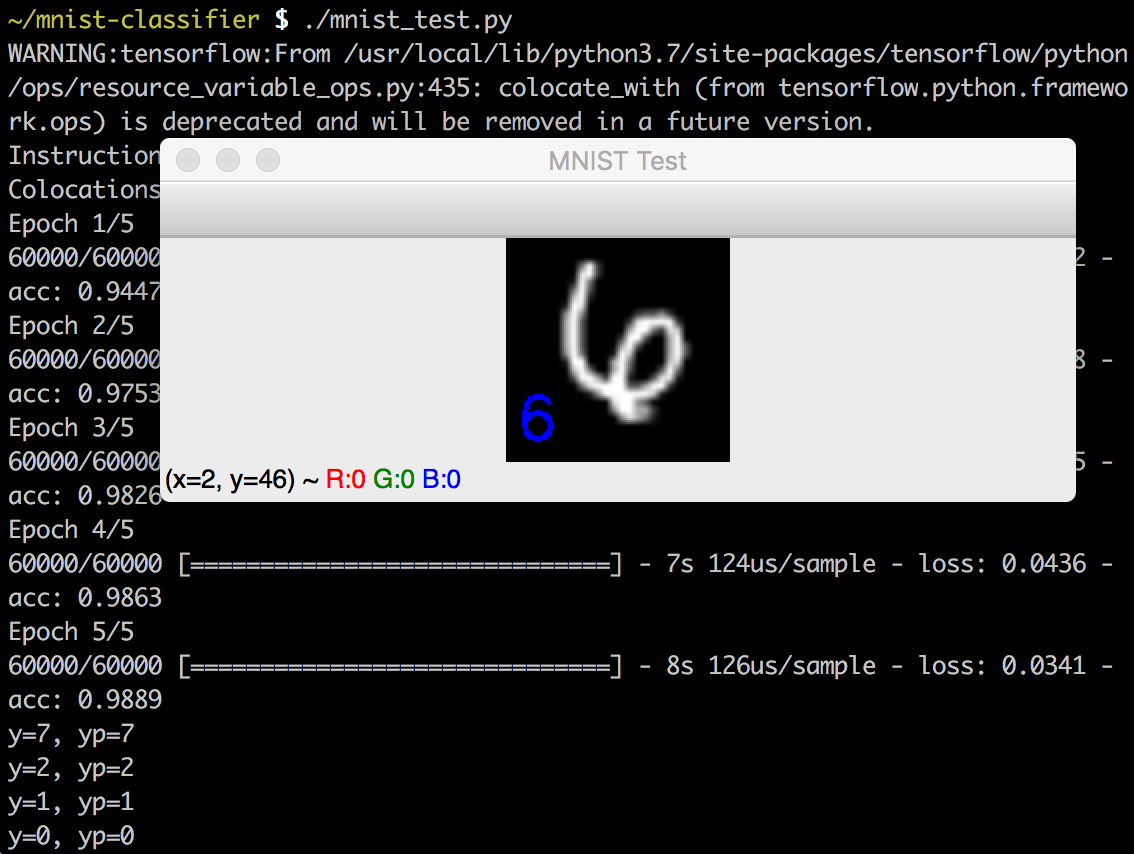

- mnist_test.py: A very simple fully connected network that classifies the MNIST handwritten digits. Because of my limited mathematics background, the program is written with the Keras API.

- fnn_xor.py: It may seem redundant to have a feedforward neural network learn the XOR operation, but this is my first neural network made from scratch.

- gd_linearfunc.py: This script demonstrates gradient descent in machine learning by fitting a linear function. Although the task itself looks pointless, it is probably the simplest learning problem that makes use of gradient descent.

Install the MathJax Plugin for Github to view the formulas.

This is the form of the linear function to fit:

The formula below shows how gradient descent updates the parameters (some knowledge of multivariable calculus may be required to understand it):

I recently discovered that the program above can also solve linear regression problems. I will write a separate program for that later.

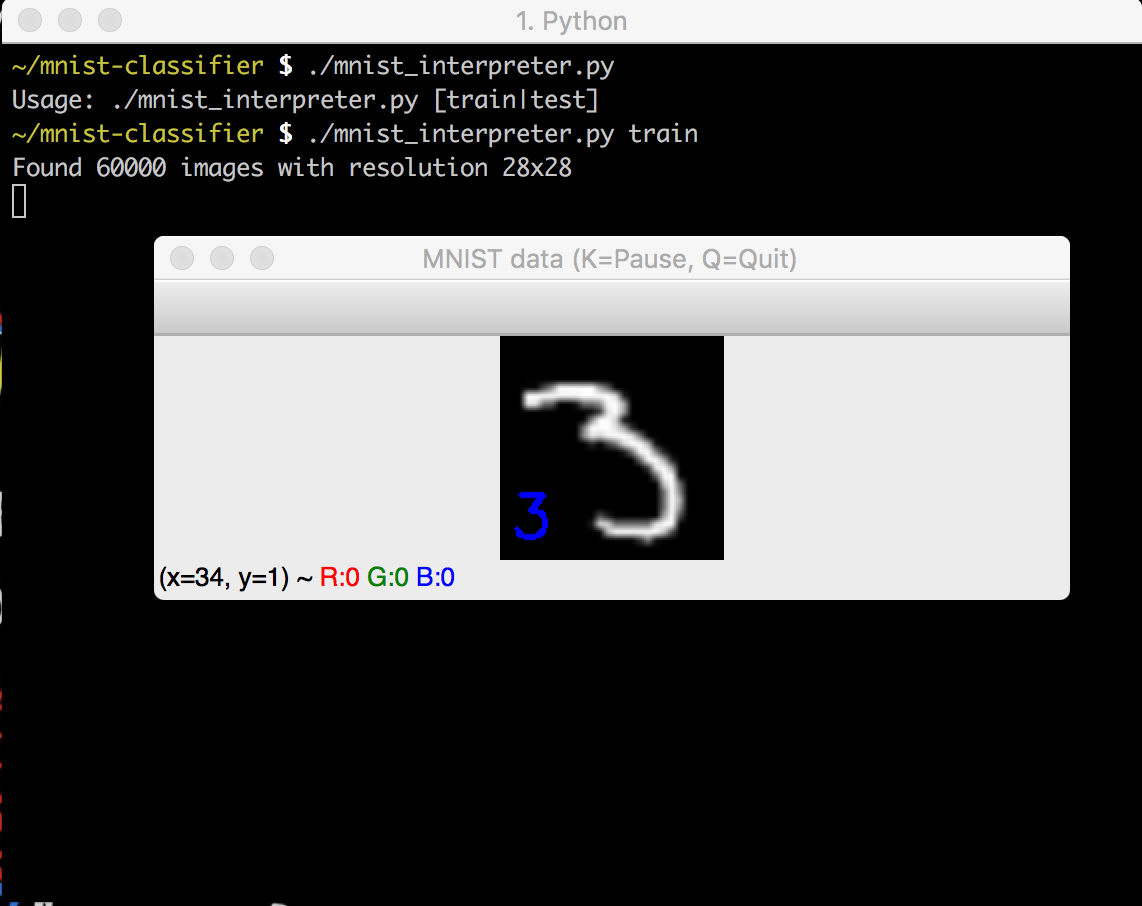
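
For readers who want to see the idea behind gd_linearfunc.py in a few lines, here is a minimal sketch. It is not the script itself: it assumes the model is `y = w * x + b` and that the loss is the mean squared error, so the partial derivatives are `dL/dw = (2/n) * sum(error * x)` and `dL/db = (2/n) * sum(error)`. The function name `fit_line`, the learning rate, and the sample data are all made up for illustration.

```python
import numpy as np


def fit_line(x, y, lr=0.05, epochs=2000):
    """Fit y ~ w * x + b by batch gradient descent on the mean squared error.

    Hypothetical helper: the loss and hyperparameters are assumptions,
    not taken from gd_linearfunc.py.
    """
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        error = (w * x + b) - y                 # prediction minus target
        grad_w = (2.0 / n) * np.dot(error, x)   # partial derivative of the loss w.r.t. w
        grad_b = (2.0 / n) * np.sum(error)      # partial derivative of the loss w.r.t. b
        w -= lr * grad_w                        # step against the gradient
        b -= lr * grad_b
    return w, b


# Noise-free sample data generated from y = 3x + 2
x = np.linspace(-1.0, 1.0, 50)
y = 3.0 * x + 2.0
w, b = fit_line(x, y)
print(w, b)
```

With noise-free data like this, `w` and `b` should approach the slope and intercept used to generate the points. With noisy data the same loop performs a least-squares fit, which is the link to linear regression.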

- mnist_interpreter.py: This script lets you view the labeled images from either the training or the testing data set. It is well documented, so developers can easily understand the IDX file format and implement new interpreters themselves.

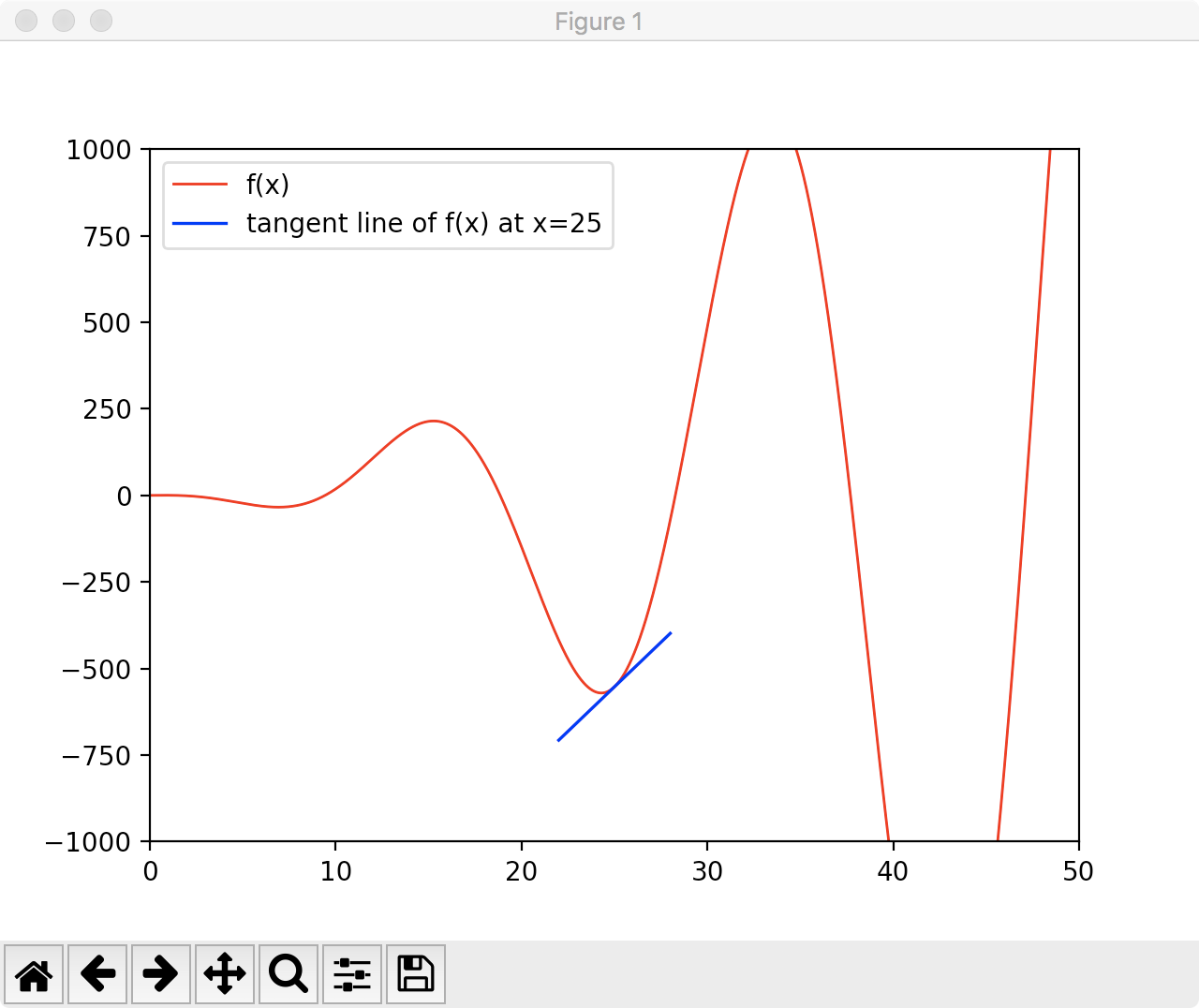
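
As a rough illustration of the IDX format that mnist_interpreter.py reads, the sketch below parses an IDX byte string with numpy. It assumes the layout used by the MNIST files: two zero bytes, one data-type byte (`0x08` for unsigned bytes), one byte for the number of dimensions, then one big-endian 32-bit size per dimension, followed by the raw data. The function name `parse_idx` is hypothetical and only the unsigned-byte type is handled.

```python
import struct

import numpy as np


def parse_idx(raw: bytes) -> np.ndarray:
    """Parse an unsigned-byte IDX buffer into a numpy array (illustrative only)."""
    # Magic number: two zero bytes, a data-type code, and the dimension count
    zero, dtype_code, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0 or dtype_code != 0x08:
        raise ValueError("not an unsigned-byte IDX buffer")
    # One big-endian unsigned 32-bit integer per dimension
    header_size = 4 + 4 * ndim
    dims = struct.unpack(">" + "I" * ndim, raw[4:header_size])
    # Everything after the header is the data, stored in row-major order
    data = np.frombuffer(raw, dtype=np.uint8, offset=header_size)
    return data.reshape(dims)


# Tiny synthetic buffer: a 2 x 3 array holding the values 0..5
raw = struct.pack(">HBBII", 0, 0x08, 2, 2, 3) + bytes(range(6))
arr = parse_idx(raw)
print(arr.shape, arr[1, 2])
```

For a real data set you would read the file with `open(path, "rb").read()` first. If the file is gzip-compressed, it has to be decompressed before parsing.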

- derivative.py: This script shows the relationship between a function and its derivative by drawing a tangent line. This is a fundamental concept behind the gradient descent algorithm.
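
The relationship between a derivative and a tangent line can be sketched without any plotting. The example below is not taken from derivative.py: it assumes a central-difference approximation for the derivative, and the names `derivative` and `tangent_line` are made up for illustration.

```python
def derivative(f, x, h=1e-5):
    """Approximate f'(x) with a central difference (an assumption of this sketch)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def tangent_line(f, x0):
    """Return the tangent line to f at x0 as a function of x."""
    slope = derivative(f, x0)   # the derivative is the slope of the tangent
    y0 = f(x0)                  # the tangent touches the curve at (x0, f(x0))
    return lambda x: slope * (x - x0) + y0


def square(x):
    return x ** 2


line = tangent_line(square, 1.0)
print(derivative(square, 1.0))   # slope of x^2 at x = 1
print(line(2.0))                 # tangent line evaluated at x = 2
```

Gradient descent uses the same slope: stepping in the direction opposite to the derivative moves `x` toward lower values of the function.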