This project proposes a novel Cloud-based Astronomy Inference (CAI) framework for data-parallel AI model inference on AWS. It can classify 500K astronomy images using the AstroMAE model in a minute!

A brief description of the workflow:

- Initialize: Based on the input payload (Sample input), lists the partition files and builds a config for each job. Returns an array of job configs.

- Distributed Model Inference: Runs a distributed map of Lambda executions based on the array returned by the previous state. Each of these jobs:

  - Loads the code and the pretrained AI model in a container.

  - Downloads a partition file as specified in the input config. The partitions are created and uploaded to an S3 bucket beforehand.

  - Runs inference on the file and writes the execution info to the `result_path`.

- Summarize: Summarize the results returned by each lambda execution in the previous distributed map. Concatenate all of those result.json files into a single combined_data.json.
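The Summarize step can be pictured with the minimal sketch below. It works on a local directory instead of S3 so that it runs anywhere. The function name `combine_results` and the assumption that each per-job file holds a single JSON object are illustrative and not taken from summarizer.py.

```python
import json
from pathlib import Path


def combine_results(result_dir, output_name="combined_data.json"):
    """Merge every per-job JSON file in result_dir into one JSON list."""
    result_dir = Path(result_dir)
    combined = []
    # Sort for a deterministic order; skip the output file on reruns.
    for path in sorted(result_dir.glob("*.json")):
        if path.name == output_name:
            continue
        with path.open() as f:
            combined.append(json.load(f))
    with (result_dir / output_name).open("w") as f:
        json.dump(combined, f, indent=2)
    return combined
```

In the actual workflow the per-job files live under `result_path` in the S3 bucket, so the Lambda would list and read those objects rather than local files.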

The whole data needs to be split into smaller chunks so that we can run parallel executions on them.

- Get the total dataset from Google Drive.

- Split it into smaller chunks (e.g. 10MB) using split_data.py.

- Upload those file partitions to an S3 bucket.
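As a rough illustration of the splitting step, the sketch below computes which sample indices go into each chunk for a target chunk size. It is not the logic of split_data.py, which is not shown here; the function name `partition_ranges` and the assumption that every sample occupies the same number of bytes are hypothetical.

```python
def partition_ranges(num_samples, bytes_per_sample, chunk_bytes):
    """Return (start, end) sample index ranges, each at most chunk_bytes in size.

    Assumes fixed-size samples (a simplification made for this sketch).
    """
    if bytes_per_sample <= 0 or chunk_bytes < bytes_per_sample:
        raise ValueError("chunk_bytes must hold at least one sample")
    per_chunk = chunk_bytes // bytes_per_sample
    return [
        (start, min(start + per_chunk, num_samples))
        for start in range(0, num_samples, per_chunk)
    ]
```

Each range would then be saved as its own partition file and uploaded to the bucket. The last range may be shorter than the others.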

Upload the Anomaly Detection folder to an S3 bucket.

The input payload is passed to the state machine as input. It assumes the code and data are stored in an S3 bucket named cosmicai-data. You can update the Lambda functions to change it. The following is a sample input payload:

    {
      "bucket": "cosmicai-data",
      "file_limit": "11",
      "batch_size": 512,
      "object_type": "folder",
      "S3_object_name": "Anomaly Detection",
      "script": "/tmp/Anomaly Detection/Inference/inference.py",
      "result_path": "result-partition-100MB/1GB/1",
      "data_bucket": "cosmicai-data",
      "data_prefix": "100MB"
    }

This means:

- The Anomaly Detection folder is uploaded in the `cosmicai-data` bucket.

- The partition files are in the `cosmicai-data/100MB` folder (`data_bucket/data_prefix`).

- Our inference batch size is 512.

- This is running for `1GB` of data.

- The results are saved in `bucket/result_path`, which is `cosmicai-data/result-partition-100MB/1GB/1` in this case.

- We set the file limit to 11, since a 1GB file with a 100MB partition size needs ceil(1042MB / 100MB) = 11 files. Using 22 files here will run on 2GB of data. See total_execution_time.csv for the file_limit to use with different partitions and data sizes.
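The file limit arithmetic above can be checked with a short sketch. The helper name `file_limit` is illustrative, and the 1042MB figure is the one quoted in the text; total_execution_time.csv remains the reference for the other data sizes.

```python
import math


def file_limit(data_size_mb, partition_size_mb):
    """Number of partition files needed to cover data_size_mb."""
    return math.ceil(data_size_mb / partition_size_mb)


print(file_limit(1042, 100))  # 11
```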

If you need to change more:

- We run each experiment 3 times. Hence `1GB/1`, `1GB/2` and `1GB/3`.

- To benchmark different batch sizes (32, 64, 128, 256, 512) while keeping the data size the same, I saved the results in a `Batches` subfolder. For example, `result-partition-100MB/1GB/Batches/`.

- If you are running your own experiments, just ensure you change the `result_path` to a different folder (e.g. `team1/result-partition-100MB/1GB/1` is ok).

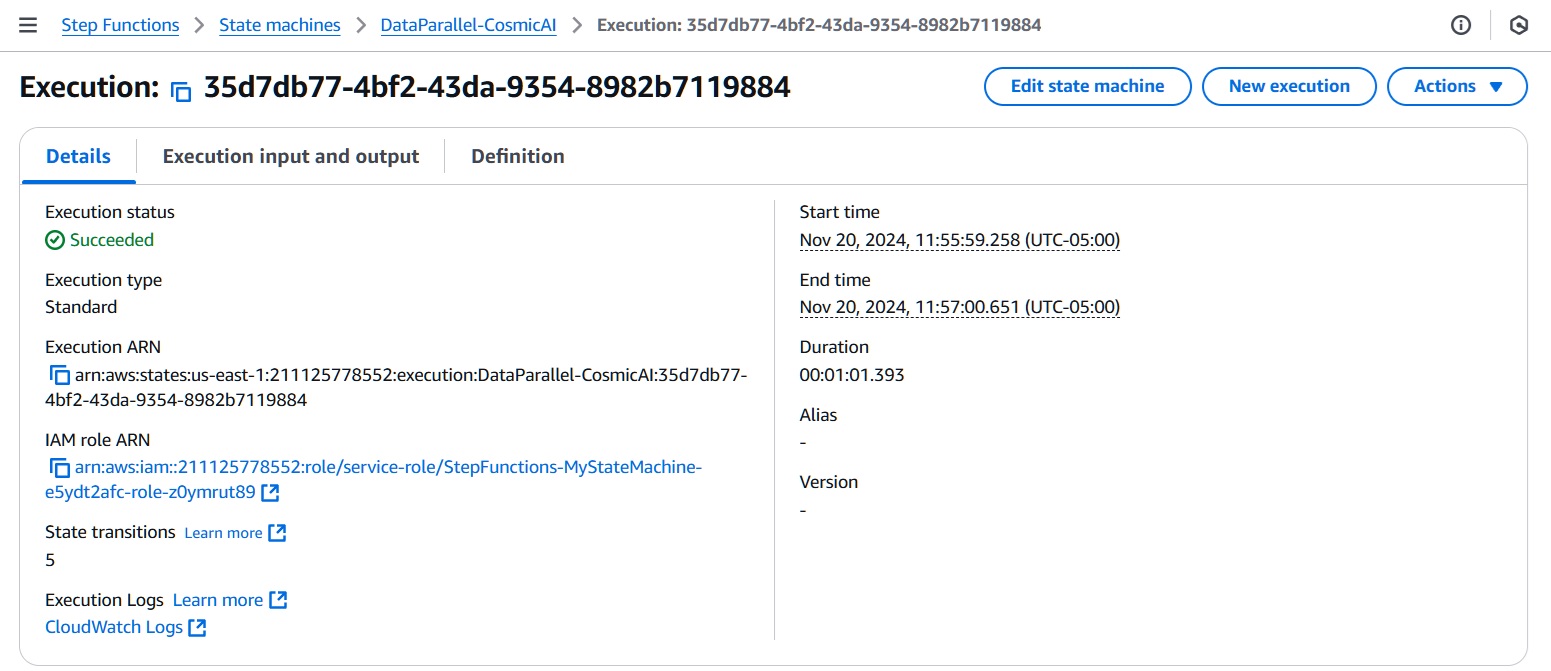

Create a state machine that contains the following Lambda functions.

- Initialize: Create a Lambda function (e.g. `data-parallel-init`) with the initializer.

  - Attach the necessary permissions to the execution role: `AmazonS3FullAccess`, `AWSLambda_FullAccess`, `AWSLambdaBasicExecutionRole`, `CloudWatchActionsEC2Access`.

  - Create a CloudWatch log group named after the function, `/aws/lambda/data-parallel-init`. The log group helps with debugging errors.

  - The script creates an array of job configs, one per file, based on the input payload. It then saves the array as `payload.json` in the `bucket`.

- Distributed Inference: Create a distributed map using a Lambda container that has all required libraries installed. This fetches the `S3_object_name` folder and starts the Python file at `script`. The script does the following:

  - Reads the environment variables (rank, world size) and the `payload.json` from the `bucket`. This part is hard-coded and should be changed if you want to read the payload from a different location.

  - Fetches the file from the `data_bucket/data_prefix` folder.

  - Runs inference and benchmarks the execution info.

  - Saves the JSON file in the `result_path` location as `rank_no.json`.

- Summarize: Create a Lambda using summarizer.py. It needs the same role permissions as Initialize.

  - Reads the result JSON files created in the previous state.

  - Concatenates them all into `combined_data.json` and saves it at `result_path`.
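The hand-off between Initialize and the inference jobs can be sketched as follows. This is a simplified illustration, not the repository's initializer: the config keys `data_key` and `result_key`, the `RANK` environment variable name, and the `<rank>.json` result naming are assumptions made for the example.

```python
import os


def build_job_configs(payload, partition_keys):
    """Create one job config per partition file, capped at file_limit."""
    limit = int(payload["file_limit"])  # the sample payload stores it as a string
    selected = sorted(partition_keys)[:limit]
    world_size = len(selected)
    return [
        {
            "rank": rank,
            "world_size": world_size,
            "data_bucket": payload["data_bucket"],
            "data_key": key,  # hypothetical field name
            "batch_size": payload["batch_size"],
            "result_key": f"{payload['result_path']}/{rank}.json",  # assumed naming
        }
        for rank, key in enumerate(selected)
    ]


def config_for_this_job(job_configs, environ=os.environ):
    """Pick this job's config using a RANK environment variable (assumed name)."""
    return job_configs[int(environ["RANK"])]
```

With the sample payload and more than 11 partition files available, `build_job_configs` returns 11 configs with ranks 0 to 10, and each job looks up its own entry by rank.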

- I collected the results locally using the `aws cli`. After installing and configuring it for the class account, running `aws s3 sync s3://cosmicai-data/result-partition-100MB result-partition-100MB` syncs the result files locally.

- The stats.py script iterates through each `combined_data.json` file and saves the summary in batch_varying_results.csv (batch size varied on 1GB of data) and result_stats.csv (varying data sizes).

- The total execution times were manually added to total_execution_time.csv.
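A summary pass of this kind might look like the sketch below. It is not the repository's stats.py: it assumes each `combined_data.json` holds a list of per-job objects, and the `field` to average is supplied by the caller because the actual key names are not listed here.

```python
import csv
import json
from pathlib import Path
from statistics import mean


def summarize_runs(root, field, out_csv):
    """Write one CSV row per combined_data.json with the mean of `field`."""
    rows = []
    for path in sorted(Path(root).rglob("combined_data.json")):
        with path.open() as f:
            records = json.load(f)
        # Ignore records that lack the requested field.
        values = [r[field] for r in records if field in r]
        if values:
            rows.append({
                "run": str(path.parent.relative_to(root)),
                "jobs": len(values),
                f"mean_{field}": mean(values),
            })
    with open(out_csv, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["run", "jobs", f"mean_{field}"])
        writer.writeheader()
        writer.writerows(rows)
    return rows
```

Pointing `root` at the synced `result-partition-100MB` folder would yield one row per run folder that contains a `combined_data.json`.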

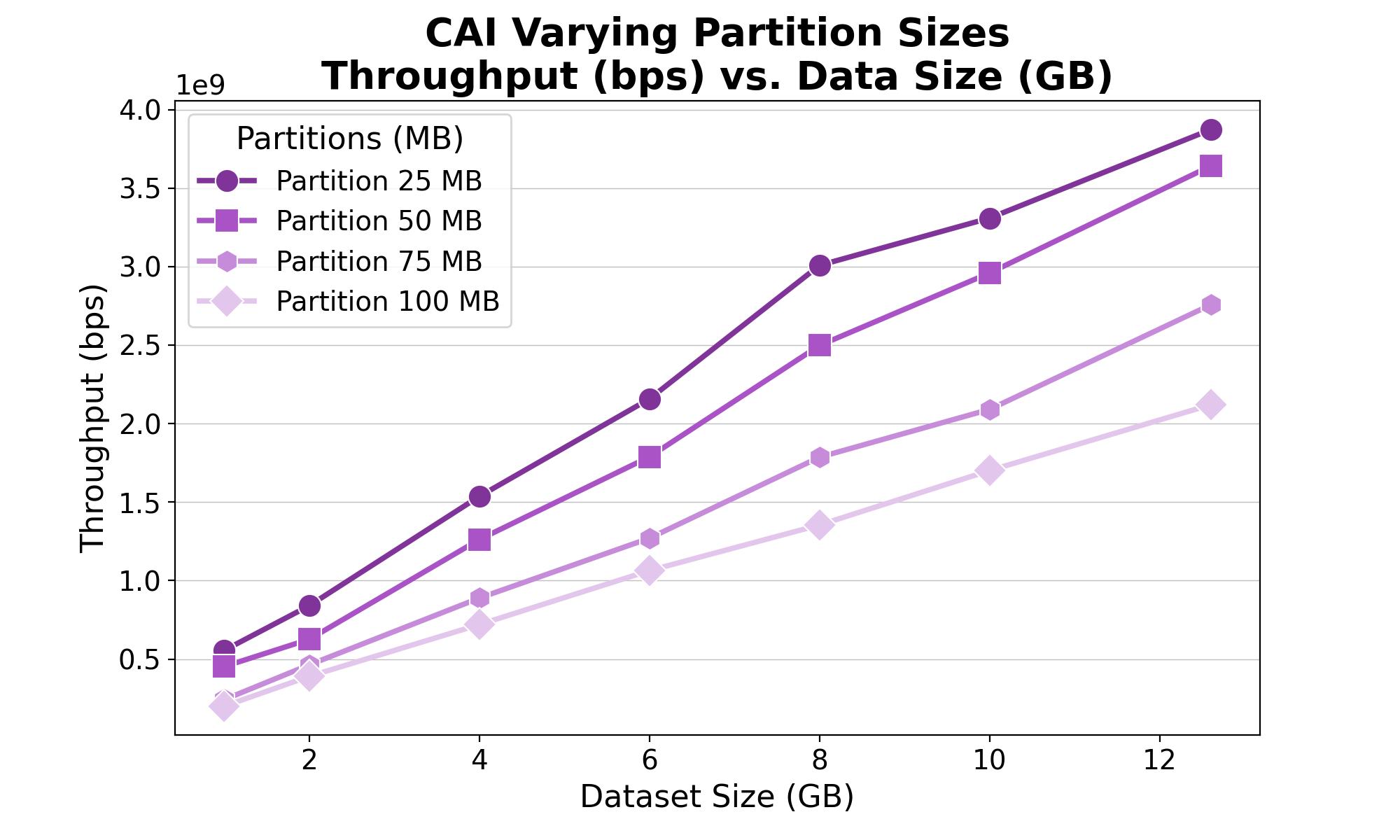

The total data size is 12.6GB. We run the inference on different data sizes to evaluate how performance scales with increasing data load. This experiment uses sizes of 1GB, 2GB, 4GB, 6GB, 8GB, 10GB and 12.6GB, with a batch size of 512.

Please check the result_stats_adjusted.csv for the average results.

We use the 1GB data and vary the batch size over [32, 64, 128, 256, 512]. The results are in batch_varying_adjusted.csv.

Estimated AWS computation cost for inference on the total dataset. Cost ($) = requests × duration (s) × memory (GB) × 0.00001667, where 0.00001667 is the price in dollars per GB-second.

| Partition | Requests | Duration (s) | Memory | Cost ($) |
|---|---|---|---|---|
| 25MB | 517 | 6.55 | 2.8GB | 0.16 |
| 50MB | 259 | 11.8 | 4.0GB | 0.20 |
| 75MB | 173 | 17.6 | 5.9GB | 0.30 |
| 100MB | 130 | 25 | 7.0GB | 0.38 |

The number of requests is how many times the Lambda function was called, which equals the number of concurrent jobs (data size divided by partition size). The maximum memory size can be configured based on memory usage (smaller partitions use less memory). Other costs, such as the request charge ($2e-7/request) and the storage charge ($3.09e-8/GB-s if > 512MB), are negligible.
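The cost formula can be checked against the table with a few lines of Python. The function name `lambda_compute_cost` is illustrative; the rate and the per-partition figures are the ones given above.

```python
PRICE_PER_GB_SECOND = 0.00001667  # rate used in the cost formula above


def lambda_compute_cost(requests, duration_s, memory_gb, price=PRICE_PER_GB_SECOND):
    """Compute cost in dollars: requests x duration (s) x memory (GB) x price."""
    return requests * duration_s * memory_gb * price


# (partition, requests, duration in seconds, memory in GB) from the table
rows = [
    ("25MB", 517, 6.55, 2.8),
    ("50MB", 259, 11.8, 4.0),
    ("75MB", 173, 17.6, 5.9),
    ("100MB", 130, 25, 7.0),
]
for name, requests, duration, memory in rows:
    print(f"{name}: ${lambda_compute_cost(requests, duration, memory):.2f}")
```

The printed values round to the 0.16, 0.20, 0.30 and 0.38 listed in the Cost column.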