Codebase of "Spiral of Silence: How is Large Language Model Killing Information Retrieval?—A Case Study on Open Domain Question Answering"


- [06/04/2024] 📝 You can refer to our Arxiv paper and Zhihu (Chinese) for more details.

- [05/15/2024] 🎉 Our paper has been accepted to ACL 2024.

- [05/12/2024] 💻 Published code used in our experiments.

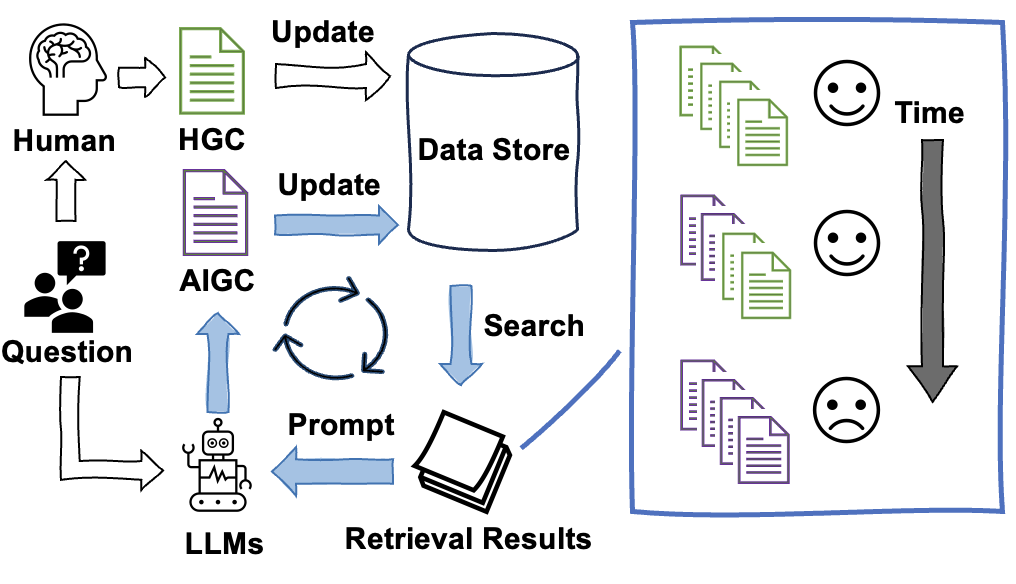

In this study, we construct and iteratively run a simulation pipeline to deeply investigate the short-term and long-term effects of LLM text on RAG systems.

- User-friendly Iteration Simulation Tool: We offer an easy-to-use iteration simulation tool that integrates functionalities from ElasticSearch, LangChain, and api-for-open-llm, allowing for convenient dataset loading, selection of various LLMs and retrieval-ranking models, and automated iterative simulation.

- Support for Multiple Datasets: Including but not limited to Natural Questions, TriviaQA, WebQuestions, PopQA. By converting data to jsonl format, you can use the framework in this paper to experiment with any data.

- Support for Various Retrieval and Re-ranking Models: BM25, Contriever, LLM-Embedder, BGE, UPR, MonoT5 and more.

- Support for frequently-used LLMs: GPT-3.5 turbo, chatglm3-6b, Qwen-14B-Chat, Llama-2-13b-chat-hf, Baichuan2-13B-Chat.

- Supports Various RAG Pipeline Evolution Evaluation Methods: Automatically organizes and assesses the vast amount of results from each experiment.
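As a minimal sketch of the jsonl conversion mentioned in the feature list: assuming each record is a plain dictionary, the helpers below write and read one JSON object per line. The function names `write_jsonl` and `read_jsonl` and the example field names are illustrative assumptions, not the schema this repository requires.

```python
import json
from pathlib import Path


def write_jsonl(records, path):
    """Write an iterable of dicts to `path`, one JSON object per line."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            # ensure_ascii=False keeps non-ASCII text readable in the file.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read a jsonl file back into a list of dicts, skipping blank lines."""
    with Path(path).open("r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

For example, `write_jsonl([{"id": "0", "question": "who wrote hamlet"}], "my_queries.jsonl")` produces a one-line file; the column that holds the text is then selected in the config files.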

Our framework depends on ElasticSearch 8.11.1 and api-for-open-llm, therefore it is necessary to install these two tools first. We suggest downloading the same version of ElasticSearch 8.11.1, and before starting, set the appropriate http.port and http.host in the config/elasticsearch.yml file, as these will be used for the configuration needed to run the code in this repository.

When installing api-for-open-llm, please follow the instructions provided by its repository to install all the dependencies and environment required to run the model you need. The PORT you configure in the .env file will also serve as a required configuration for this codebase.

First, clone the repo:

```bash
git clone --recurse-submodules git@github.com:VerdureChen/SOS-Retrieval-Loop.git
```

Then change into the repository directory:

```bash
cd SOS-Retrieval-Loop
```

To install the required packages, you can create a conda environment:

```bash
conda create --name SOS_LOOP python=3.10
```

Activate the conda environment:

```bash
conda activate SOS_LOOP
```

Then use pip to install the required packages:

```bash
pip install -r requirements.txt
```

Please see Installation to install the required packages.

Before running our framework, you need to start ElasticSearch and api-for-open-llm. When starting ElasticSearch, you need to set the appropriate http.port and http.host in the config/elasticsearch.yml file, as these will be used for the configuration needed to run the code in this repository.

When starting api-for-open-llm, you need to set the PORT in the .env file, which will also serve as a required configuration for this codebase.
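Because the host and port values are reused in several configs, it can help to build the two service URLs in one place and check them before a long run. The sketch below is an assumption-laden helper, not part of this repository: the function names are hypothetical, the `/v1` suffix follows the `http://XX.XX.XX.XX:XX/v1` pattern used in this README's generation settings, and plain `http` is assumed.

```python
import urllib.error
import urllib.request


def elasticsearch_url(host, port):
    """URL built from http.host / http.port in config/elasticsearch.yml."""
    return f"http://{host}:{port}"


def llm_api_base(host, port):
    """Base URL of the api-for-open-llm server (PORT comes from its .env file)."""
    return f"http://{host}:{port}/v1"


def is_reachable(url, timeout=3.0):
    """Return True if a server answers at `url`, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded (e.g. 401 or 404), so it is up.
        return True
    except (urllib.error.URLError, OSError, ValueError):
        return False
```

Calling `is_reachable(elasticsearch_url("127.0.0.1", 9200))` before starting a run gives an early signal that a service is down; the host and port here are placeholders.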

Since our code involves many datasets, models, and index functionalities, we use a three-level config method to control the operation of the code:

- In the config folder of the specific function (such as retrieval, re-ranking, generation, etc.), there is a configuration file template for that function, which contains all the parameters that you can configure. You can tell the function and dataset a configuration file corresponds to from its file name. For example, `src/retrieval_loop/retrieve_configs/bge-base-config-nq.json` is a retrieval configuration file, corresponding to the Natural Questions dataset and using the BGE-base model. We take `src/retrieval_loop/index_configs/bge-base-config-psgs_w100.json` as an example:

  ```json
  {
    "new_text_file": "../../data_v2/input_data/DPR/psgs_w100.jsonl",
    "retrieval_model": "bge-base",
    "index_name": "bge-base_faiss_index",
    "index_path": "../../data_v2/indexes",
    "index_add_path": "../../data_v2/indexes",
    "page_content_column": "contents",
    "index_exists": false,
    "normalize_embeddings": true,
    "query_files": ["../../data_v2/input_data/DPR/nq-test-h10.jsonl"],
    "query_page_content_column": "question",
    "output_files": ["../../data_v2/ret_output/DPR/nq-test-h10-bge-base"],
    "elasticsearch_url": "http://xxx.xxx.xxx.xxx:xxx"
  }
  ```

  Where `new_text_file` is the path to the document to be newly added to the index, `retrieval_model` is the retrieval model used, `index_name` is the name of the index, `index_path` is the storage path of the index, `index_add_path` is the path where the IDs of the incremental documents in the index are stored (this is particularly useful when we need to delete specific documents from the index), `page_content_column` is the column name of the text to be indexed in the document file, `index_exists` indicates whether the index already exists (if set to false, the corresponding index will be created, otherwise the existing index will be read from the path), `normalize_embeddings` is whether to normalize the output of the retrieval model, `query_files` is the path to the query file, `query_page_content_column` is the column name of the query text in the query file, `output_files` is the path to the output retrieval result file (corresponding to `query_files`), and `elasticsearch_url` is the url of ElasticSearch.

- Since we usually need to integrate multiple steps in a pipeline, corresponding to the example script `src/run_loop.sh`, we provide a global configuration file `src/test_function/test_configs/template_total_config.json`. In this global configuration file, you can configure the parameters of each stage at once. You do not need to configure all the parameters in it, just the parameters you need to modify relative to the template configuration file.

- In order to improve the efficiency of script running, the `src/run_loop.sh` script supports running multiple datasets and LLM-generated results for a retrieval-re-ranking method at the same time. To flexibly configure such experiments, we support generating new configuration files during the pipeline run in `src/run_loop.sh` through `rewrite_configs.py`. For example, when we need to run the pipeline in a loop, we need to record the config content of each round. Before each retrieval, the script will run:

  ```bash
  python ../rewrite_configs.py --total_config "${USER_CONFIG_PATH}" \
      --method "${RETRIEVAL_MODEL_NAME}" \
      --data_name "nq" \
      --loop "${LOOP_NUM}" \
      --stage "retrieval" \
      --output_dir "${CONFIG_PATH}" \
      --overrides '{"query_files": ['"${QUERY_FILE_LIST}"'], "output_files": ['"${OUTPUT_FILE_LIST}"'] , "elasticsearch_url": "'"${elasticsearch_url}"'", "normalize_embeddings": false}'
  ```

  Where `--total_config` is the path to the global configuration file, `--method` is the name of the retrieval method, `--data_name` is the name of the dataset, `--loop` is the number of the current loop, `--stage` is the stage of the current pipeline, `--output_dir` is the storage path of the newly generated configuration file, and `--overrides` is the parameters that need to be modified (also a subset of the template configuration file for each task).

- When configuring, you need to pay attention to the priority of the three types of configurations: the first level is the default configuration in each task config template, the second level is the global configuration file, and the third level is the new configuration file generated during the pipeline run. During the pipeline run, the second level configuration will override the first level configuration, and the third level configuration will override the second level configuration.
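The override order described above can be sketched as a layered dictionary merge. This is only an illustration under stated assumptions: `merge_configs` is a hypothetical helper, the configs are treated as flat dictionaries for a single stage, and the merge is shallow; how `rewrite_configs.py` actually combines the levels is not shown in this README.

```python
def merge_configs(template, global_config, overrides):
    """Combine three config levels; later levels win on conflicting keys.

    template      -- level 1: defaults from the task config template
    global_config -- level 2: values from the global configuration file
    overrides     -- level 3: values generated during the pipeline run
    """
    merged = dict(template)          # copy so the template is not mutated
    merged.update(global_config)     # level 2 overrides level 1
    merged.update(overrides)         # level 3 overrides level 2
    return merged


# Example values taken from the config shown in this section.
template = {"retrieval_model": "bge-base", "normalize_embeddings": True}
global_config = {"index_exists": True}
overrides = {"normalize_embeddings": False}
final_config = merge_configs(template, global_config, overrides)
```

Keys that appear only at a lower level (here `retrieval_model`) pass through unchanged, which is why the global file only needs the parameters that differ from the template.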

Through the following steps, you can reproduce our experiments. Before that, please read the Configuration section to understand the settings of the configuration file:

1. Dataset Preprocessing: Whether it is a query or a document, we need to convert the dataset to jsonl format. In our experiments, we use the data.wikipedia_split.psgs_w100 dataset, which can be downloaded to the `data_v2/raw_data/DPR` directory and unzipped according to the instructions in the DPR repository. We provide a simple script `data_v2/gen_dpr_hc_jsonl.py`, which can convert the dataset to jsonl format and place it in `data_v2/input_data/DPR`. The query files used in the experiment are located in `data_v2/input_data/DPR/sampled_query`.

   ```bash
   cd data_v2
   python gen_dpr_hc_jsonl.py
   ```

2. Generate Zero-Shot RAG Results: Use `src/llm_zero_generate/run_generate.sh`. By modifying the configuration in the file, you can generate zero-shot RAG results for all data and models in batches. Configure the following parameters at the beginning of the script:

   ```bash
   MODEL_NAMES=(chatglm3-6b) #chatglm3-6b qwen-14b-chat llama2-13b-chat baichuan2-13b-chat gpt-3.5-turbo
   GENERATE_BASE_AND_KEY=(
     "gpt-3.5-turbo http://XX.XX.XX.XX:XX/v1 xxx"
     "chatglm3-6b http://XX.XX.XX.XX:XX/v1 xxx"
     "qwen-14b-chat http://XX.XX.XX.XX:XX/v1 xxx"
     "llama2-13b-chat http://XX.XX.XX.XX:XX/v1 xxx"
     "baichuan2-13b-chat http://XX.XX.XX.XX:XX/v1 xxx"
   )
   DATA_NAMES=(tqa pop nq webq)
   CONTEXT_REF_NUM=1
   QUESTION_FILE_NAMES=(
     "-test-sample-200.jsonl"
     "-upr_rerank_based_on_bm25.json"
   )
   LOOP_CONFIG_PATH_NAME="../run_configs/original_retrieval_config"
   TOTAL_LOG_DIR="../run_logs/original_retrieval_log"
   QUESTION_FILE_PATH_TOTAL="../../data_v2/loop_output/DPR/original_retrieval_result"
   TOTAL_OUTPUT_DIR="../../data_v2/loop_output/DPR/original_retrieval_result"
   ```

   Where `MODEL_NAMES` is a list of model names for which results need to be generated, `GENERATE_BASE_AND_KEY` consists of the model name, api address, and key, `DATA_NAMES` is a list of dataset names, `CONTEXT_REF_NUM` is the number of context references (set to 0 in the zero-shot case), `QUESTION_FILE_NAMES` is a list of query file names (but note that the script identifies the dataset to which it belongs by the prefix of the file name, so to query `nq-test-sample-200.jsonl`, you need to include `nq` in `DATA_NAMES`, and this field only fills in `-test-sample-200.jsonl`), `LOOP_CONFIG_PATH_NAME` and `TOTAL_LOG_DIR` are the storage paths of the running config and logs, `QUESTION_FILE_PATH_TOTAL` is the query file storage path, and `TOTAL_OUTPUT_DIR` is the storage path of the generated results. After configuring, run the script:

   ```bash
   cd src/llm_zero_generate
   bash run_generate.sh
   ```

3. Build Dataset Index: Use `src/retrieval_loop/run_index_builder.sh`. By modifying the `MODEL_NAMES` and `DATA_NAMES` configuration in the file, you can build indexes for all data and models at once. You can also obtain retrieval results based on the corresponding method by configuring `query_files` and `output_files`. In our experiments, all retrieval model checkpoints are placed in the `ret_model` directory. Run:

   ```bash
   cd src/retrieval_loop
   bash run_index_builder.sh
   ```

4. Post-process the data generated by Zero-Shot RAG, filter the generated text, rename IDs, etc.: Use `src/post_process/post_process.sh`. By modifying the `MODEL_NAMES` and `QUERY_DATA_NAMES` configuration in the file, you can process all data and models of zero-shot RAG generation results in one run. For Zero-shot data, we set `LOOP_NUM` to 0, and `LOOP_CONFIG_PATH_NAME`, `TOTAL_LOG_DIR`, `TOTAL_OUTPUT_DIR` specify the paths of the script configs, logs, and output, respectively. `FROM_METHOD` indicates the generation method of the current text to be processed, which will be added as a tag to the processed document ID. `INPUT_FILE_PATH` is the path to the text file to be processed, with each directory containing the name of each dataset, and each directory containing various Zero-shot result files. In addition, make sure that `INPUT_FILE_NAME` is consistent with the actual input text name. Run:

   ```bash
   cd src/post_process
   bash post_process.sh
   ```

5. Add the content generated by Zero-Shot RAG to the index and obtain the retrieval results after adding Zero-Shot data: Use `src/run_zero-shot.sh`. By modifying the `GENERATE_MODEL_NAMES` and `QUERY_DATA_NAMES` configuration in the file, you can add the zero-shot RAG generation results of all data and models to the index in one run, and obtain the retrieval results after adding Zero-Shot data. Note that the `run_items` list indicates the retrieval-re-ranking methods that need to be run, where each element is constructed as `"item6 bm25 monot5"`, indicating that the sixth Zero-Shot RAG experiment in this run is based on the BM25+MonoT5 retrieval-re-ranking method. Run:

   ```bash
   cd src
   bash run_zero-shot.sh
   ```

6. Run the main LLM-generated Text simulation loop: Use `src/run_loop.sh`. By modifying the `GENERATE_MODEL_NAMES` and `QUERY_DATA_NAMES` configuration in the file, you can run the LLM-generated Text Simulation loop for all data and models in batches. You can control the number of loops by setting `TOTAL_LOOP_NUM`. Since it involves updating the index multiple times, only one retrieval-re-ranking method pipeline can be run at a time. If you want to change the number of contexts that the LLM can see in the RAG pipeline, you can do so by modifying `CONTEXT_REF_NUM`, which is set to 5 by default. Run:

   ```bash
   cd src
   bash run_loop.sh
   ```
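Several of the settings above are space-separated or prefix-based strings, which is easy to get wrong. The following sketch mirrors those conventions in Python for illustration only: the function names are hypothetical, and the actual shell scripts may parse these values differently.

```python
def expand_question_files(data_names, suffixes):
    """Join each dataset name with each file-name suffix.

    With DATA_NAMES=(nq) and QUESTION_FILE_NAMES=("-test-sample-200.jsonl"),
    the query file is assumed to be "nq-test-sample-200.jsonl".
    """
    return {name: [f"{name}{suffix}" for suffix in suffixes] for name in data_names}


def parse_base_and_key(entry):
    """Split a "model api_base key" entry of GENERATE_BASE_AND_KEY."""
    model, api_base, api_key = entry.split()
    return {"model": model, "api_base": api_base, "api_key": api_key}


def parse_run_item(item):
    """Split a run_items element such as "item6 bm25 monot5"."""
    tag, retriever, reranker = item.split()
    return {"tag": tag, "retriever": retriever, "reranker": reranker}
```

Each helper raises `ValueError` when an entry does not have exactly three whitespace-separated fields, which surfaces a malformed entry before a long batch run.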

For the large amount of results generated in the experiment, our framework supports various batch evaluation methods. After setting QUERY_DATA_NAMES and RESULT_NAMES in src/evaluation/run_context_eva.sh, you can choose any supported task for evaluation, including:

- `TASK="retrieval"`: Evaluate the retrieval and re-ranking results of each iteration, including Acc@5 and Acc@20.
- `TASK="QA"`: Evaluate the QA results of each iteration (EM).
- `TASK="context_answer"`: Calculate the number of documents in the contexts (default top 5 retrieval results) that contain the correct answer when each LLM answers correctly (EM=1) or incorrectly (EM=0) at the end of each iteration.
- `TASK="bleu"`: Calculate the SELF-BLEU value of the contexts (default top 5 retrieval results) at each iteration, with 2-gram and 3-gram calculated by default.
- `TASK="percentage"`: Calculate the percentage of each LLM and human-generated text in the top 5, 20, and 50 contexts at each iteration.
- `TASK="misQA"`: In the Misinformation experiment, calculate the EM of specific incorrect answers in the QA results at each iteration.
- `TASK="QA_llm_mis"` and `TASK="QA_llm_right"`: In the Misinformation experiment, calculate the situation where specific incorrect or correct answers in the QA results at each iteration are determined to be supported by the text after being judged by GPT-3.5-Turbo (refer to EM_llm in the paper).
- `TASK="filter_bleu_*"` and `TASK="filter_source_*"`: In the Filtering experiment, calculate the evaluation results of each iteration under different filtering methods, where * represents the evaluation content that has already appeared (retrieval, percentage, context_answer).

The results generated after evaluation are stored by default in the corresponding RESULT_DIR/RESULT_NAME/QUERY_DATA_NAME/results directory.
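To make the EM and Acc@k metrics above concrete, here is a minimal sketch. It assumes the answer normalization commonly used in open-domain QA (lowercasing, stripping punctuation and English articles) and substring matching for Acc@k; the function names are hypothetical and the repository's evaluation scripts may define these metrics differently.

```python
import re
import string

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text):
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold_answers):
    """Return 1 if the normalized prediction equals any normalized gold answer."""
    pred = normalize_answer(prediction)
    return int(any(pred == normalize_answer(gold) for gold in gold_answers))


def acc_at_k(ranked_contexts, gold_answers, k):
    """Return 1 if any of the top-k contexts contains a gold answer string."""
    golds = [normalize_answer(gold) for gold in gold_answers]
    top = [normalize_answer(ctx) for ctx in ranked_contexts[:k]]
    return int(any(gold and gold in ctx for ctx in top for gold in golds))
```

Averaging `acc_at_k(..., k=5)` and `acc_at_k(..., k=20)` over all queries would give Acc@5 and Acc@20 under these assumptions.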

By modifying the GENERATE_MODEL_NAMES configuration in src/run_loop.sh, you can use different LLMs at different stages. For example:

```bash
GENERATE_MODEL_NAMES_F3=(qwen-0.5b-chat qwen-1.8b-chat qwen-4b-chat)
GENERATE_MODEL_NAMES_F7=(qwen-7b-chat llama2-7b-chat baichuan2-7b-chat)
GENERATE_MODEL_NAMES_F10=(gpt-3.5-turbo qwen-14b-chat llama2-13b-chat)
```

This indicates that qwen-0.5b-chat, qwen-1.8b-chat, and qwen-4b-chat are used in the first three rounds of iteration, qwen-7b-chat, llama2-7b-chat, and baichuan2-7b-chat are used in the fourth to seventh rounds of iteration, and gpt-3.5-turbo, qwen-14b-chat, and llama2-13b-chat are used in the eighth to tenth rounds of iteration.

We can use this to simulate the impact of LLM performance enhancement over time on the RAG system. Remember to provide the corresponding information in GENERATE_BASE_AND_KEY, and modify GENERATE_TASK to update_generate.
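The stage schedule can be read as a lookup from the loop number to a model list. The sketch below illustrates that reading, assuming loops are numbered from 1 and that each `_F<n>` suffix marks the last loop in which a list is used; `models_for_loop` and `SCHEDULE` are hypothetical names, not part of `src/run_loop.sh`.

```python
# Last loop number of each stage mapped to the models used up to that loop.
SCHEDULE = {
    3: ["qwen-0.5b-chat", "qwen-1.8b-chat", "qwen-4b-chat"],
    7: ["qwen-7b-chat", "llama2-7b-chat", "baichuan2-7b-chat"],
    10: ["gpt-3.5-turbo", "qwen-14b-chat", "llama2-13b-chat"],
}


def models_for_loop(loop_num, schedule=SCHEDULE):
    """Return the model list for a 1-based loop number."""
    if loop_num < 1:
        raise ValueError("loop_num must be >= 1")
    for last_loop in sorted(schedule):
        if loop_num <= last_loop:
            return schedule[last_loop]
    raise ValueError(f"no models scheduled for loop {loop_num}")
```

With this schedule, loops 1 to 3 map to the smallest Qwen models, loops 4 to 7 to the 7B models, and loops 8 to 10 to the largest models.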

- We first use GPT-3.5-Turbo to generate 5 incorrect answers for each query, then randomly select one incorrect answer and let all LLMs used in the experiment generate supporting text for it. You can modify the `{dataset}_mis_config_answer_gpt.json` configuration file (used to generate incorrect answers) and the `{dataset}_mis_config_passage_{llm}.json` configuration file (used to generate text containing incorrect answers) in the `src/misinfo/mis_config` directory to configure each dataset, the generated content paths, and the api information.

- Run the `src/misinfo/run_gen_misinfo.sh` script to generate Misinformation text for all data and models in one run.

- Refer to Running the Code steps 4 to 6 to add the generated Misinformation text to the index and obtain the RAG results after adding Misinformation data.

- Refer to Evaluation to run the `src/evaluation/run_context_eva.sh` script to evaluate the results of the Misinformation experiment. We recommend the `"retrieval"`, `"context_answer"`, `"QA_llm_mis"`, and `"QA_llm_right"` evaluation tasks.
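The random selection of one incorrect answer per query can be sketched as follows. This is an illustration rather than the repository's code: `pick_incorrect_answer` is a hypothetical helper, and dropping candidates that coincide with a gold answer is an extra safeguard assumed here, not something this README describes.

```python
import random


def pick_incorrect_answer(candidates, gold_answers, rng):
    """Pick one generated answer that does not match any gold answer.

    candidates   -- incorrect answers generated for a query (5 in our setup)
    gold_answers -- correct answers for the same query
    rng          -- a random.Random instance, so runs can be reproduced
    """
    gold = {answer.strip().lower() for answer in gold_answers}
    wrong = [c for c in candidates if c.strip().lower() not in gold]
    if not wrong:
        raise ValueError("no usable incorrect answer among the candidates")
    return rng.choice(wrong)
```

Passing a seeded generator such as `random.Random(0)` keeps the chosen answer stable across reruns, so the supporting passages generated by different LLMs target the same incorrect answer.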

In our experiments, we conducted SELF-BLEU filtering and source filtering of the retrieved contexts separately.

- SELF-BLEU filtering: We calculate the SELF-BLEU value of the top retrieval results to ensure that the context input to the LLM maintains a high degree of diversity (i.e., a low SELF-BLEU value, default threshold is 0.4). To enable this feature, set `FILTER_METHOD_NAME=filter_bleu` in `src/run_loop.sh`.

- Source filtering: We identify text from the LLM in the top retrieval results and exclude it. We use Hello-SimpleAI/chatgpt-qa-detector-roberta for identification. Please place the checkpoint in the `ret_model` directory before the experiment starts. To enable this feature, set `FILTER_METHOD_NAME=filter_source` in `src/run_loop.sh`.

- Refer to Evaluation to run the `src/evaluation/run_context_eva.sh` script to evaluate the results of the Filtering experiment. We recommend the `"retrieval"`, `"QA"`, `"filter_bleu_*"`, and `"filter_source_*"` evaluation tasks.
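Both filters can be pictured as a pass over the ranked contexts that keeps at most `top_k` items. The sketch below is one possible reading, not the implementation in this repository: the greedy strategy is an assumption, and `is_llm_generated` and `self_bleu` are caller-supplied stand-ins for the detector model and the SELF-BLEU computation.

```python
def filter_by_source(ranked_contexts, is_llm_generated, top_k=5):
    """Keep the first top_k contexts that the detector does not flag."""
    kept = []
    for context in ranked_contexts:
        if not is_llm_generated(context):
            kept.append(context)
        if len(kept) == top_k:
            break
    return kept


def filter_by_self_bleu(ranked_contexts, self_bleu, threshold=0.4, top_k=5):
    """Greedily add contexts while the set's SELF-BLEU stays <= threshold."""
    kept = []
    for context in ranked_contexts:
        candidate = kept + [context]
        # A single context is always accepted; larger sets must stay diverse.
        if len(candidate) == 1 or self_bleu(candidate) <= threshold:
            kept = candidate
        if len(kept) == top_k:
            break
    return kept
```

Because both functions walk the list in rank order, the retained contexts preserve the original ranking, and lower-ranked documents fill the slots of those that were filtered out.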

Since our experiments involve dynamic updates of the index, it is not possible to reconstruct the index from scratch in each simulation. Instead, in each simulation, we record the IDs of the newly added text in the src/run_logs directory under the index_add_logs directory corresponding to this experiment. After the experiment is over, we delete the corresponding documents from the index using the src/post_process/delete_doc_from_index.py script.

- When you need to delete documents from the BM25 index, run:

  ```bash
  cd src/post_process
  python delete_doc_from_index.py --config_file_path delete_configs/delete_config_bm25.json
  ```

  Where `src/post_process/delete_configs/delete_config_bm25.json` is the corresponding configuration file, which contains parameters:

  ```json
  {
    "id_files": ["../run_logs/zero-shot_retrieval_log_low/index_add_logs"],
    "model_name": "bm25",
    "index_path": "../../data_v2/indexes",
    "index_name": "bm25_psgs_index",
    "elasticsearch_url": "http://xxx.xxx.xxx.xxx:xxx",
    "delete_log_path": "../run_logs/zero-shot_retrieval_log_low/index_add_logs"
  }
  ```

  Where `id_files` is the directory where the document IDs to be deleted are located, `model_name` is the index model name, `index_path` is the index storage path, `index_name` is the index name, `elasticsearch_url` is the url of ElasticSearch, and `delete_log_path` is the storage path of the document ID record. The ID files of different indexes can be mixed in the same directory, and the script will automatically read the document IDs corresponding to the index in the directory and delete the documents from the index.

- When you need to delete documents from the Faiss index, run:

  ```bash
  cd src/post_process
  python delete_doc_from_index.py --config_file_path delete_configs/delete_config_faiss.json
  ```

  Where `src/post_process/delete_configs/delete_config_faiss.json` is the corresponding configuration file, which contains parameters:

  ```json
  {
    "id_files": ["../run_logs/mis_filter_bleu_nq_webq_pop_tqa_loop_log_contriever_None_total_loop_10_20240206164013/index_add_logs"],
    "model_name": "faiss",
    "index_path": "../../data_v2/indexes",
    "index_name": "contriever_faiss_index",
    "elasticsearch_url": "http://xxx.xxx.xxx.xxx:xxx",
    "delete_log_path": "../run_logs/mis_filter_bleu_nq_webq_pop_tqa_loop_log_contriever_None_total_loop_10_20240206164013/index_add_logs"
  }
  ```

  Where `id_files` is the directory where the document IDs to be deleted are located, `model_name` is the index model name, `index_path` is the index storage path, `index_name` is the index name, `elasticsearch_url` is the url of ElasticSearch, and `delete_log_path` is the storage path of the document ID record.
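Since both delete configs share the same keys and point `id_files` and `delete_log_path` at the same `index_add_logs` directory, they can be generated per run instead of edited by hand. The helper below is a hypothetical convenience sketch: it only assembles the dictionary shown above and does not call `delete_doc_from_index.py` or touch any index.

```python
import json
from pathlib import PurePosixPath


def build_delete_config(run_log_dir, model_name, index_name, elasticsearch_url,
                        index_path="../../data_v2/indexes"):
    """Assemble a delete config for the index_add_logs of one experiment run."""
    add_logs = str(PurePosixPath(run_log_dir) / "index_add_logs")
    return {
        "id_files": [add_logs],
        "model_name": model_name,      # "bm25" or "faiss" in the examples above
        "index_path": index_path,
        "index_name": index_name,
        "elasticsearch_url": elasticsearch_url,
        "delete_log_path": add_logs,
    }


def dump_delete_config(config):
    """Serialize the config as indented JSON text, ready to write to a file."""
    return json.dumps(config, indent=2)
```

For example, `build_delete_config("../run_logs/zero-shot_retrieval_log_low", "bm25", "bm25_psgs_index", "http://xxx.xxx.xxx.xxx:xxx")` reproduces the BM25 config above; the resulting text can be saved under `delete_configs/` and passed via `--config_file_path`.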