Recently, consistency regularization has become a fundamental component of semi-supervised learning; it encourages the network's predictions on unlabeled data to be invariant to perturbations. However, its performance decreases drastically when labels are scarce, e.g.,

The corresponding code is being collated and will be released soon.

@article{pmix,

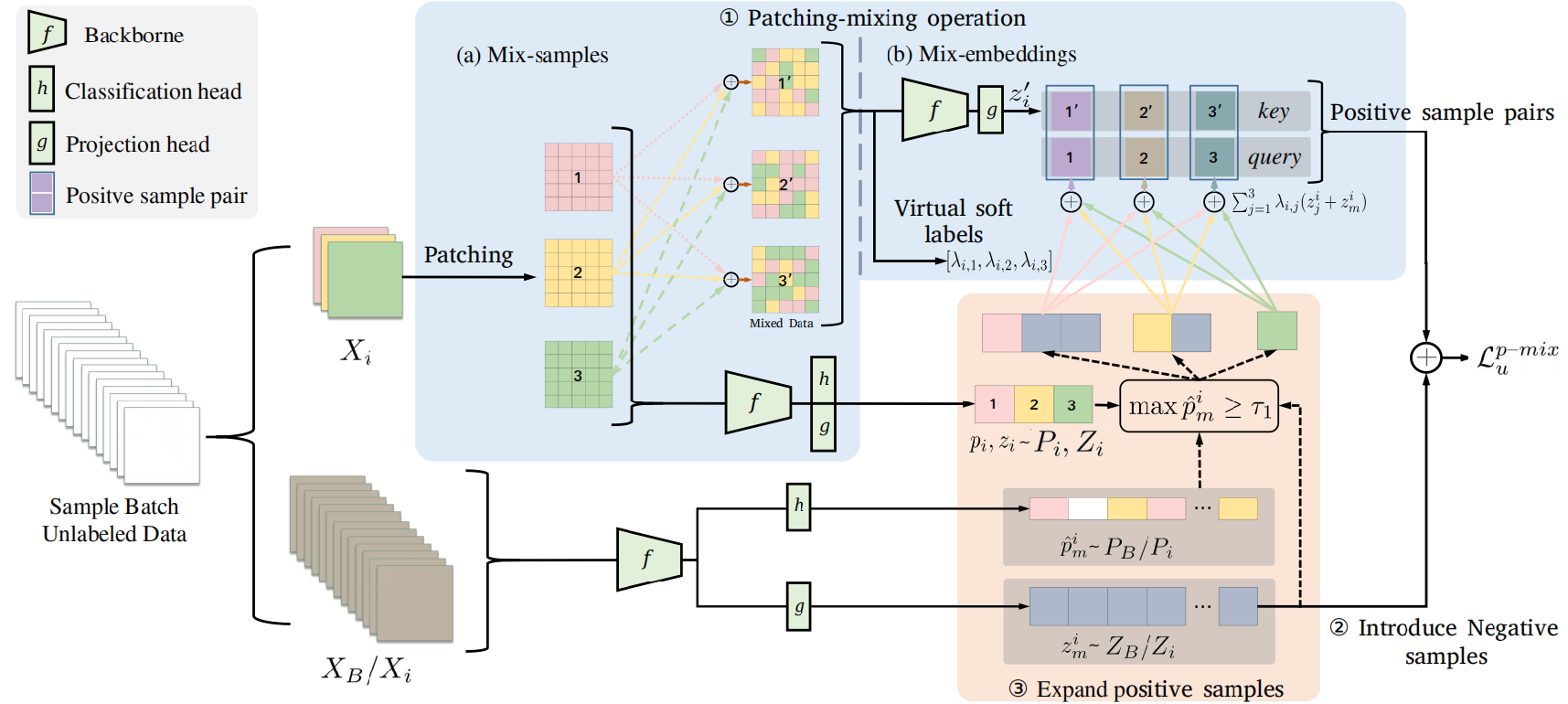

title={Patch-Mixing Contrastive Regularization for Few-Label Semi-Supervised Learning},

author={Xiaochang Hu, Xin Xu, Yujun Zeng and Xihong Yang},

journal={IEEE Transactions on Artificial Intelligence}

year={2023},

}