Hierarchical Neural Operator Transformer with Learnable Frequency-aware Loss Prior for Arbitrary-scale Super-resolution (ICML 2024)

✨Official implementation of our HiNOTE model✨

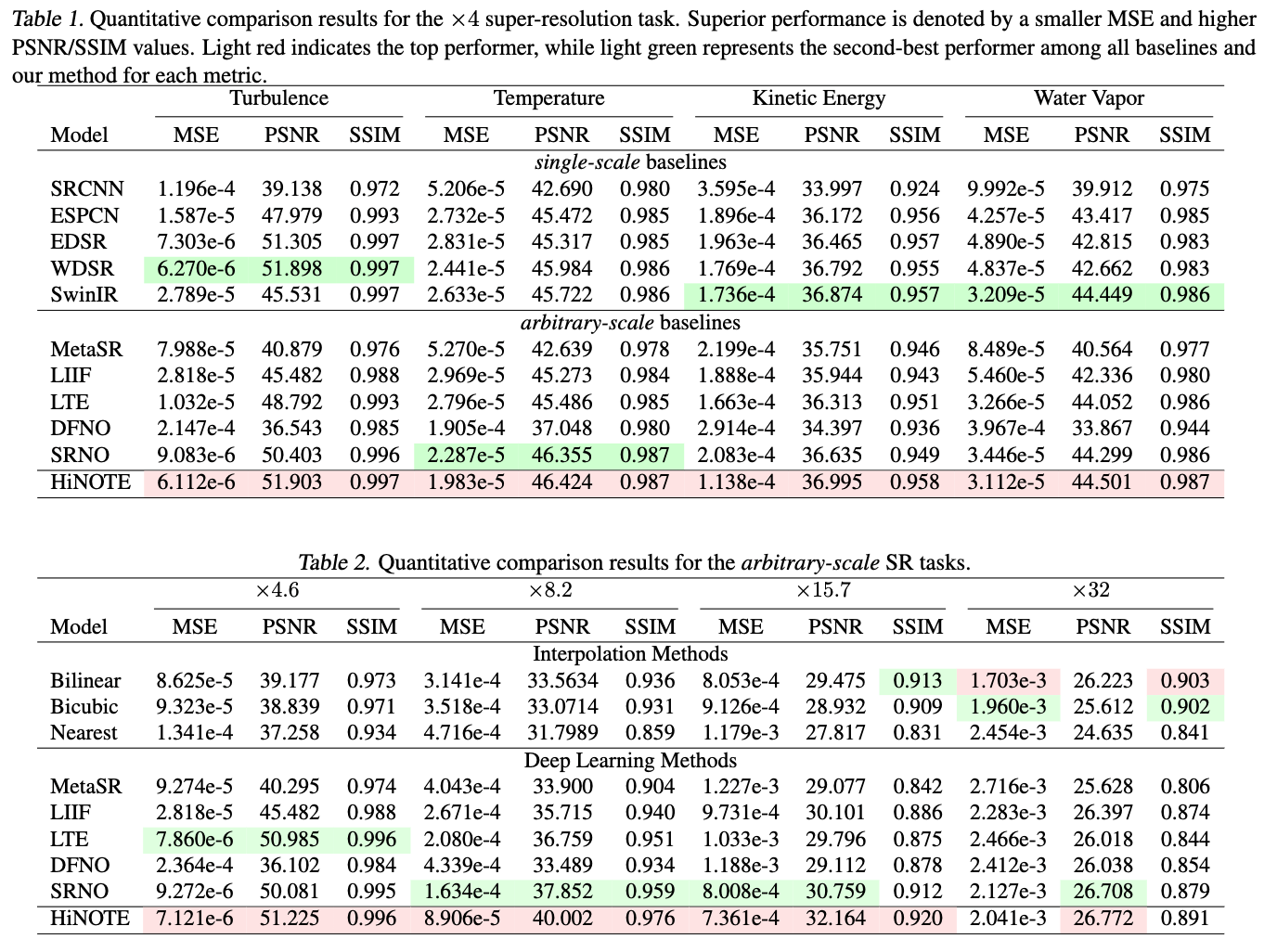

In this work, we present an arbitrary-scale super-resolution (SR) method to enhance the resolution of scientific data, which often involves complex challenges such as continuity, multi-scale physics, and the intricacies of high-frequency signals. Grounded in operator learning, the proposed method is resolution-invariant. The core of our model is a hierarchical neural operator that leverages a Galerkin-type self-attention mechanism, enabling efficient learning of mappings between function spaces. Sinc filters are used to facilitate the information transfer across different levels in the hierarchy, thereby ensuring representation equivalence in the proposed neural operator. Additionally, we introduce a learnable prior structure that is derived from the spectral resizing of the input data. This loss prior is model-agnostic and is designed to dynamically adjust the weighting of pixel contributions, thereby balancing gradients effectively across the model. We conduct extensive experiments on diverse datasets from different domains and demonstrate consistent improvements compared to strong baselines, which consist of various state-of-the-art SR methods.
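To make the efficiency claim about Galerkin-type self-attention concrete, here is a minimal NumPy sketch of the general softmax-free form used in the operator-learning literature. It is not the HiNOTE implementation: the function names are hypothetical, and learned projections, multiple heads, and the hierarchical structure are omitted.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize each row (one grid point's features) to zero mean, unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def galerkin_attention(q, k, v):
    """Softmax-free attention: Q (K~^T V~) / n, with K~ and V~ layer-normalized.

    q, k, v: arrays of shape (n, d), where n is the number of grid points.
    Hypothetical helper for illustration only.
    """
    n = q.shape[0]
    k_norm = layer_norm(k)
    v_norm = layer_norm(v)
    # Contract over the n grid points first. The result is a (d, d) matrix,
    # so the cost is O(n * d^2) rather than the O(n^2 * d) of softmax attention.
    context = k_norm.T @ v_norm / n
    return q @ context


rng = np.random.default_rng(0)
coarse = [rng.standard_normal((64, 8)) for _ in range(3)]   # 64 grid points
fine = [rng.standard_normal((256, 8)) for _ in range(3)]    # 256 grid points
print(galerkin_attention(*coarse).shape)  # (64, 8)
print(galerkin_attention(*fine).shape)    # (256, 8)
```

The same function handles both grid sizes because nothing in it depends on `n` except the averaging factor, which is one way to read the resolution-invariance property described above.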

The repository is organized as follows:

    ├── configs  # Configuration files for different models and datasets
    │   ├── callback  # Callback configuration
    │   │   └── default.yaml
    │   ├── datamodule  # Dataset-specific configurations
    │   │   ├── baryon_density.yaml
    │   │   ├── kinetic_energy.yaml
    │   │   ├── MRI.yaml
    │   │   ├── SEVIR_2018.yaml
    │   │   ├── temperature.yaml
    │   │   ├── vorticity_Re_16000.yaml
    │   │   ├── vorticity_Re_32000.yaml
    │   │   └── water_vapor.yaml
    │   ├── default.yaml  # Default configuration
    │   ├── hydra  # Hydra configurations for experiment management
    │   │   └── default.yaml
    │   ├── logger  # Configuration for logging (e.g., Weights & Biases)
    │   │   └── wandb.yaml
    │   ├── model  # Model-specific configurations
    │   │   ├── DFNO.yaml
    │   │   ├── EDSR.yaml
    │   │   ├── ESPCN.yaml
    │   │   ├── HiNOTE.yaml
    │   │   ├── LIIF.yaml
    │   │   ├── LTE.yaml
    │   │   ├── MetaSR.yaml
    │   │   ├── SRCNN.yaml
    │   │   ├── SRNO.yaml
    │   │   ├── SwinIR.yaml
    │   │   └── WDSR.yaml
    │   ├── optim  # Optimizer configurations
    │   │   └── default.yaml
    │   ├── scheduler  # Scheduler configurations
    │   │   └── default.yaml
    │   └── trainer  # Trainer configurations
    │       └── default.yaml
    ├── data  # Data generation scripts
    │   └── data_generation.py  # Script for generating and preprocessing data
    ├── data_interface.py  # Main interface for handling data input/output
    ├── dataloaders  # Custom dataloaders for various data sources
    │   ├── ASdataloader.py  # Dataloader for AS data
    │   ├── data_utils.py  # Utility functions for data loading
    │   └── SSdataloder.py  # Dataloader for SS data
    ├── model_interface.py  # Interface for managing model loading and interaction
    ├── models  # Implementations of various models
    │   ├── DFNO.py
    │   ├── EDSR.py
    │   ├── ESPCN.py
    │   ├── HiNOTE.py
    │   ├── LIIF.py
    │   ├── LTE.py
    │   ├── MetaSR.py
    │   ├── SRCNN.py
    │   ├── SRNO.py
    │   ├── SwinIR.py
    │   └── WDSR.py
    ├── run.sh  # Shell script for running the project
    ├── test.py  # Script for testing models
    ├── train.py  # Script for training models
    └── utils.py  # Utility functions
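The `configs/model` and `configs/datamodule` folders in the layout above follow the usual Hydra config-group pattern, so a sweep over models and datasets could be scripted along these lines. This is a sketch under assumptions: the `build_commands` helper is hypothetical, and whether `train.py` accepts `model=` and `datamodule=` overrides depends on how `configs/default.yaml` is composed, which this README does not state.

```python
from itertools import product

# Names taken from configs/model and configs/datamodule in the tree above.
MODELS = ["HiNOTE", "SRNO", "LIIF"]
DATASETS = ["MRI", "SEVIR_2018"]


def build_commands(models, datasets, script="train.py"):
    """Build one command per (model, dataset) pair using Hydra-style overrides.

    Assumption: the script accepts `model=` and `datamodule=` overrides;
    check configs/default.yaml and run.sh for the form actually supported.
    """
    return [
        ["python", script, f"model={m}", f"datamodule={d}"]
        for m, d in product(models, datasets)
    ]


for cmd in build_commands(MODELS, DATASETS):
    print(" ".join(cmd))
```

Each command is kept as a list of arguments so it could be handed to `subprocess.run` directly once the override names are confirmed.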

- Clone the repository:

      git clone https://github.com/Xihaier/HiNOTE.git
      cd HiNOTE

- Create and activate the conda environment:

      conda env create -f environment.yml
      conda activate HiNOTE

- Verify the installation:

  Ensure that all dependencies are correctly installed by running:

      python -c "import torch; print(torch.__version__)"
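The one-line check above only covers `torch`. A slightly broader check, sketched below with the standard library only, reports which packages cannot be found in the active environment. Apart from `torch`, the package names are assumptions inferred from the `configs/hydra` and `configs/logger/wandb.yaml` entries; `environment.yml` remains the authoritative list.

```python
import importlib.util

# torch comes from the verification command above; hydra and wandb are
# assumptions inferred from the configs/ folders, not a confirmed list.
PACKAGES = ["torch", "hydra", "wandb"]


def find_missing(packages):
    """Return the names that cannot be located in the current environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


missing = find_missing(PACKAGES)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All listed packages were found.")
```

`find_spec` only locates a package without importing it, so the check stays fast even for heavy dependencies.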

This project utilizes several datasets, each of which is available online. Here is an overview of the datasets and links to access them:

- Turbulence Data
  - Description: This dataset contains two-dimensional Kraichnan turbulence in a doubly periodic square domain.
  - Access: Available at NERSC's Data Portal.

- Weather Data
  - Description: This dataset contains ERA5 reanalysis data, high-resolution simulated surface temperature at 2 meters, kinetic energy at 10 meters above the surface, and total column water vapor.
  - Access: Available at NERSC's Data Portal.

- SEVIR Data
  - Description: This dataset encompasses various weather phenomena, including thunderstorms, convective systems, and related events.
  - Access: Available at AWS Open Data Registry.

- MRI Data
  - Description: This dataset contains Magnetic Resonance Imaging data.
  - Access: Available on GitHub - UTCSILAB's repository.

To train a model, modify the configuration files in configs/ as needed. Then, run:

    python train.py

To test a trained model, use the following command:

    python test.py

For convenience, you can also use the shell script run.sh to automate multiple training/testing runs.

    bash run.sh

If you find this repo useful, please cite our paper.

    @article{luo2024hierarchical,
      title={Hierarchical Neural Operator Transformer with Learnable Frequency-aware Loss Prior for Arbitrary-scale Super-resolution},
      author={Luo, Xihaier and Qian, Xiaoning and Yoon, Byung-Jun},
      journal={arXiv preprint arXiv:2405.12202},
      year={2024}
    }

If you have any questions or want to use the code, please contact [email protected].