Deep learning models and dataset for recognizing industrial smoke emissions. The videos are from the smoke labeling tool. The code in this repository assumes that Ubuntu 18.04 server is installed. If you find this dataset and code useful, we would greatly appreciate it if you could cite our technical report below:

Yen-Chia Hsu, Ting-Hao (Kenneth) Huang, Ting-Yao Hu, Paul Dille, Sean Prendi, Ryan Hoffman, Anastasia Tsuhlares, Randy Sargent, and Illah Nourbakhsh. 2020. RISE Video Dataset: Recognizing Industrial Smoke Emissions. arXiv preprint arXiv:2005.06111. https://arxiv.org/abs/2005.06111

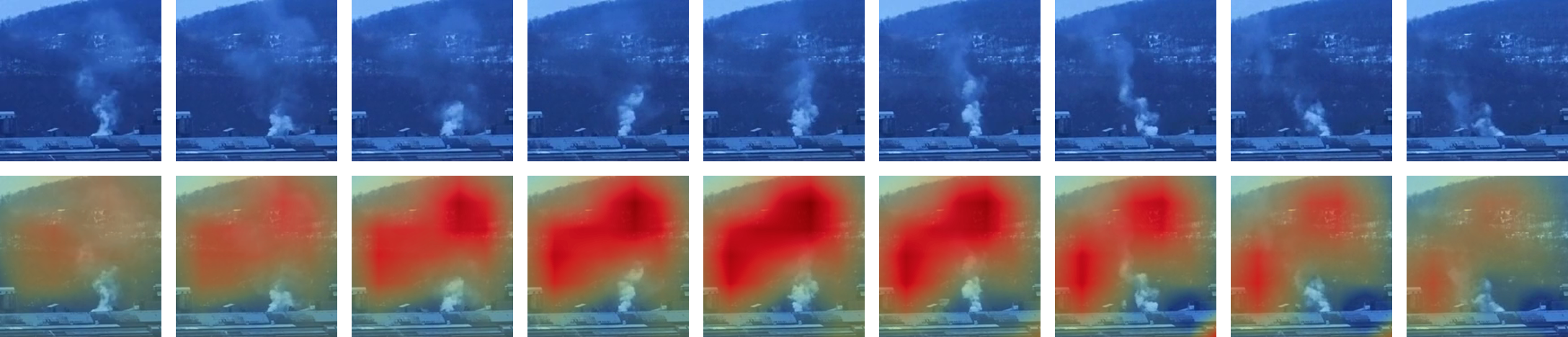

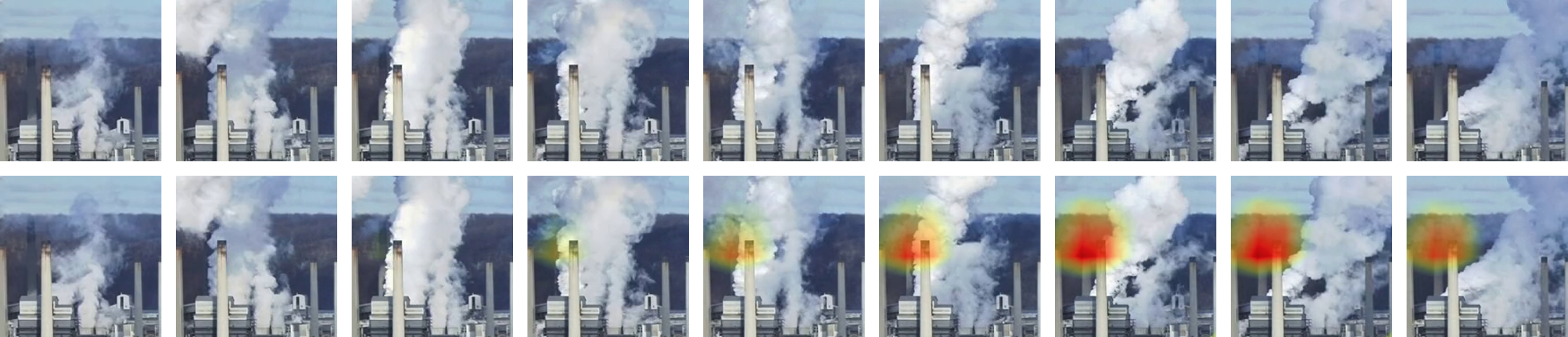

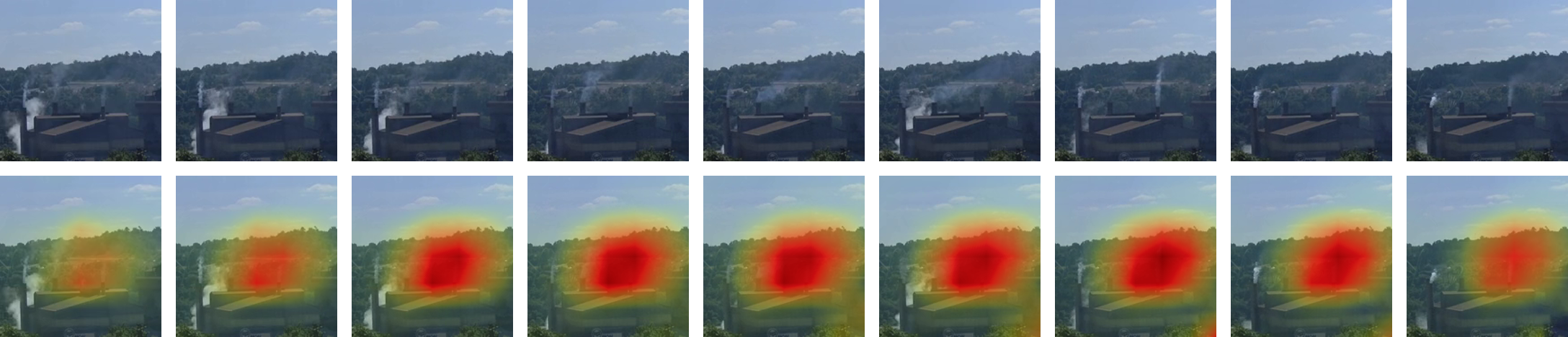

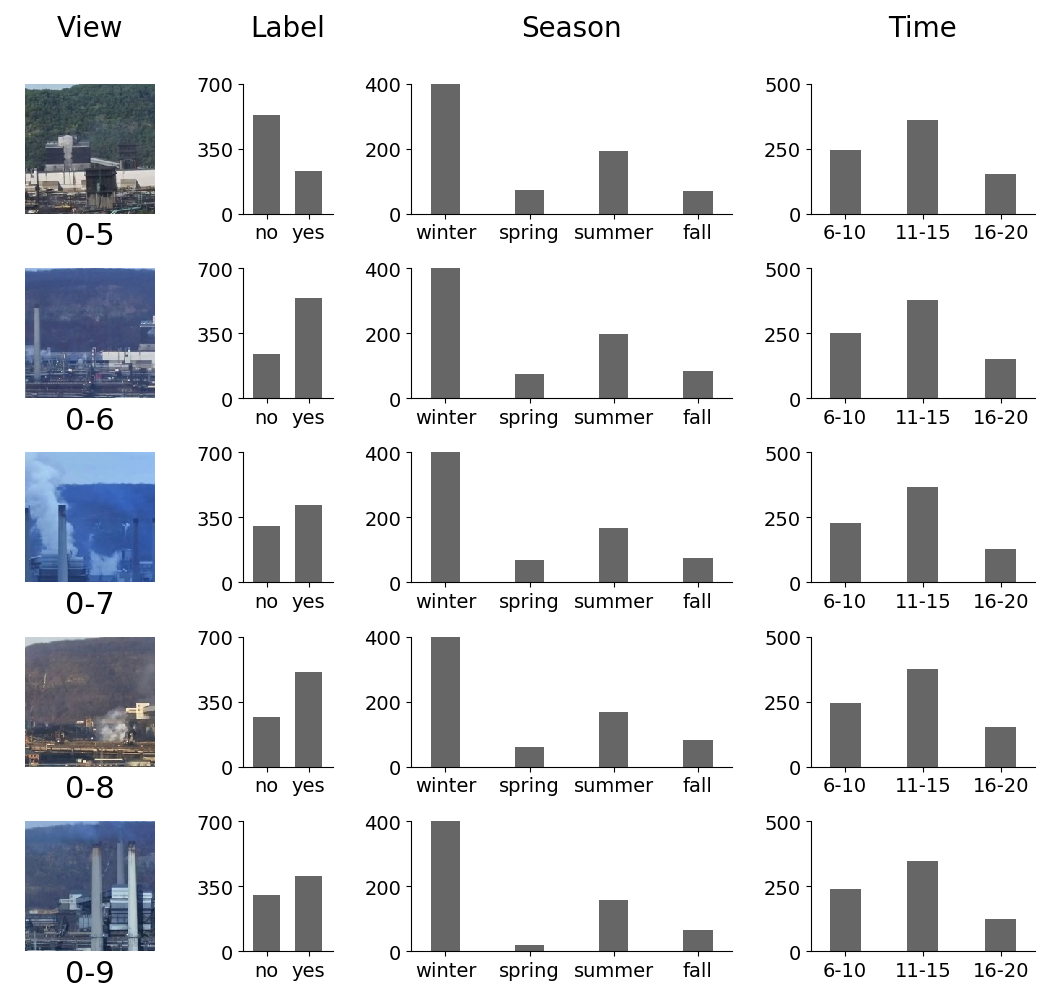

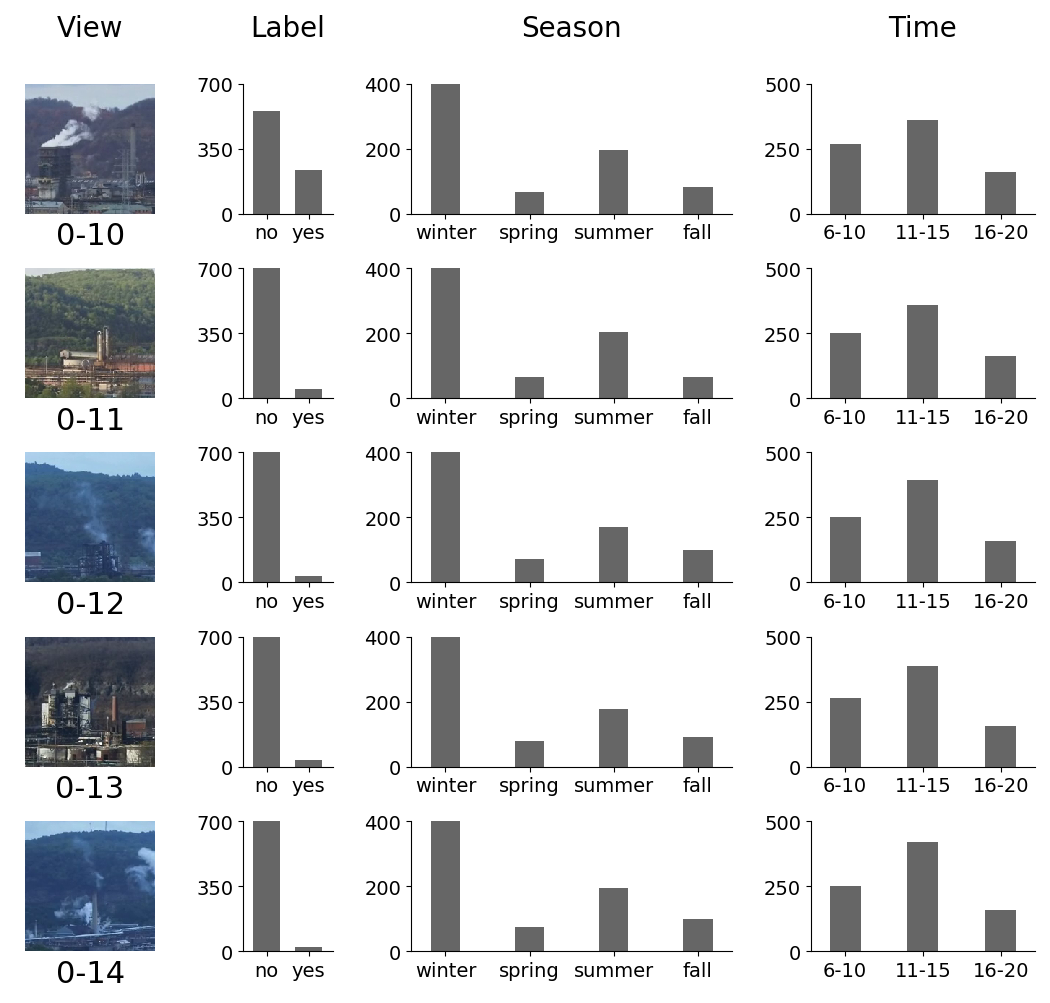

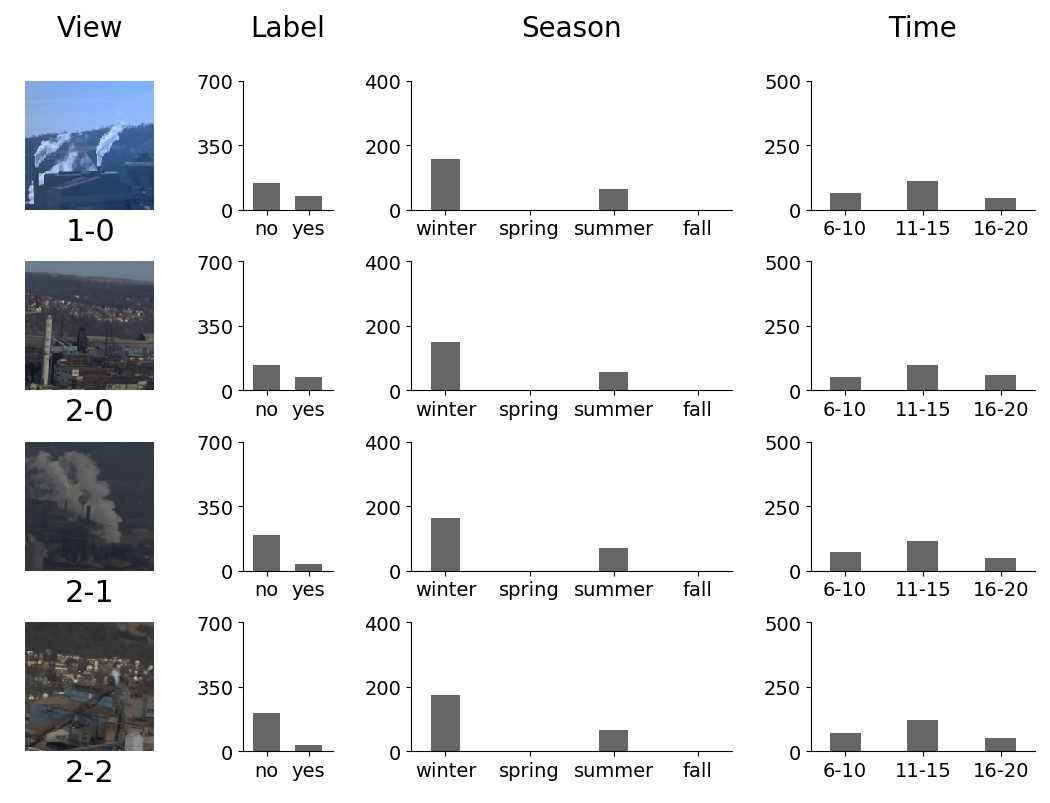

The figures above show examples of how the I3D model recognizes industrial smoke. The heatmaps (red and yellow areas overlaid on the images) show where the model thinks smoke emissions are located. The examples come from the testing set, whose camera views differ from those used for training, so the model never saw these views during training. The visualizations are generated with the Grad-CAM technique. The x-axis indicates time.

Disable the nouveau driver.

sudo vim /etc/modprobe.d/blacklist.conf

# Add the following to this file

# Blacklist nouveau driver (for nvidia driver installation)

blacklist nouveau

blacklist lbm-nouveau

options nouveau modeset=0

alias nouveau off

alias lbm-nouveau off

Regenerate the kernel initramfs.

sudo update-initramfs -u

sudo reboot now

Remove old nvidia drivers.

sudo apt-get remove --purge '^nvidia-.*'

sudo apt-get autoremove

If using a desktop version of Ubuntu (not the server version), run the following:

sudo apt-get install ubuntu-desktop # only for desktop version, not server version

Install cuda and the nvidia driver. Documentation can be found on Nvidia's website.

sudo apt install build-essential

sudo apt-get install linux-headers-$(uname -r)

wget https://developer.nvidia.com/compute/cuda/10.1/Prod/local_installers/cuda_10.1.168_418.67_linux.run

sudo sh cuda_10.1.168_418.67_linux.run

Check that the Nvidia driver is installed. The nouveau driver should no longer appear.

sudo nvidia-smi

dpkg -l | grep -i nvidia

lsmod | grep -i nvidia

lspci | grep -i nvidia

lsmod | grep -i nouveau

dpkg -l | grep -i nouveau

Add cuda runtime library.

sudo bash -c "echo /usr/local/cuda/lib64/ > /etc/ld.so.conf.d/cuda.conf"

sudo ldconfig

Add cuda environment path.

sudo vim /etc/environment

# add :/usr/local/cuda/bin (including the ":") at the end of the PATH="/[some_path]:/[some_path]" string (inside the quotes)

sudo reboot now

Check cuda installation.

cd /usr/local/cuda/samples

sudo make

cd /usr/local/cuda/samples/bin/x86_64/linux/release

./deviceQuery

Install cuDNN. Documentation can be found on Nvidia's website. Visit Nvidia's page to download cuDNN to your local machine. Then, move the file to the Ubuntu server.

rsync -av /[path_on_local]/cudnn-10.1-linux-x64-v7.6.0.64.tgz [user_name]@[server_name]:[path_on_server]

ssh [user_name]@[server_name]

cd [path_on_server]

sudo tar -xzvf cudnn-10.1-linux-x64-v7.6.0.64.tgz

sudo cp cuda/include/cudnn.h /usr/local/cuda/include

sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn.h /usr/local/cuda/lib64/libcudnn*

Install conda. This assumes that Ubuntu is installed. See the conda documentation for details. First obtain the download path of the installer from the Miniconda website. The following script installs conda for all users:

wget https://repo.continuum.io/miniconda/Miniconda3-4.7.12.1-Linux-x86_64.sh

sudo sh Miniconda3-4.7.12.1-Linux-x86_64.sh -b -p /opt/miniconda3

sudo vim /etc/bash.bashrc

# Add the following lines to this file

export PATH="/opt/miniconda3/bin:$PATH"

. /opt/miniconda3/etc/profile.d/conda.sh

source /etc/bash.bashrc

For Mac OS, I recommend installing conda by using Homebrew.

brew install --cask miniconda # older Homebrew versions used "brew cask install miniconda"

echo 'export PATH="/usr/local/Caskroom/miniconda/base/bin:$PATH"' >> ~/.bash_profile

echo '. /usr/local/Caskroom/miniconda/base/etc/profile.d/conda.sh' >> ~/.bash_profile

source ~/.bash_profile

Clone this repository and set the permissions.

git clone --recursive https://github.com/CMU-CREATE-Lab/deep-smoke-machine.git

sudo chown -R $USER deep-smoke-machine/

sudo addgroup [group_name]

sudo usermod -a -G [group_name] [user_name]

groups [user_name]

sudo chmod -R 775 deep-smoke-machine/

sudo chgrp -R [group_name] deep-smoke-machine/

Configure git to ignore permission changes.

# For only this repository

git config core.fileMode false

# Globally (for all repositories)

git config --global core.fileMode false

Create a conda environment and install packages. It is important to install pip first inside the newly created conda environment.

conda create -n deep-smoke-machine

conda activate deep-smoke-machine

conda install python=3.7

conda install pip

which pip # make sure this is the pip inside the deep-smoke-machine environment

sh deep-smoke-machine/back-end/install_packages.sh

If the environment already exists and you want to remove it before installing packages, use the following:

conda env remove -n deep-smoke-machine

Update the optical_flow submodule.

cd deep-smoke-machine/back-end/www/optical_flow/

git submodule update --init --recursive

git checkout master

Install PyTorch.

conda install pytorch torchvision -c pytorch

Install system packages for OpenCV.

sudo apt update

sudo apt install -y libsm6 libxext6 libxrender-dev

Obtain a user token from the smoke labeling tool and put the user_token.js file in the deep-smoke-machine/back-end/data/ directory. You need permission from the system administrator to download the user token. After getting the token, get the video metadata. This will create a metadata.json file under deep-smoke-machine/back-end/data/.

python get_metadata.py confirm

For others who wish to use the publicly released dataset (a snapshot of the smoke labeling tool on 2/24/2020), we include the metadata_02242020.json file under the deep-smoke-machine/back-end/data/dataset/ folder. Copy this file to deep-smoke-machine/back-end/data/ and rename it to metadata.json.

cd deep-smoke-machine/back-end/data/

cp dataset/2020-02-24/metadata_02242020.json metadata.json

Split the metadata into three sets: train, validation, and test. This will create a deep-smoke-machine/back-end/data/split/ folder that contains all splits, as indicated in our technical report. The method for splitting the dataset is explained in the "Dataset" section below.

python split_metadata.py confirm

Download all videos in the metadata file to deep-smoke-machine/back-end/data/videos/. We provide a shell script (see bg.sh) to run the python script in the background using the screen command.

python download_videos.py

# Background script (runs in the background using the "screen" command)

sh bg.sh python download_videos.py

Here are some tips for the screen command:

# List currently running screen names

sudo screen -ls

# Go into a screen

sudo screen -x [NAME_FROM_ABOVE_COMMAND] # e.g. sudo screen -x 33186.download_videos

# Inside the screen, use CTRL+C to terminate the screen

# Or use CTRL+A+D to detach the screen and send it to the background

# Terminate all screens

sudo screen -X quit

# Keep looking at the screen log

tail -f screenlog.0

Process and save all videos into rgb frames (under deep-smoke-machine/back-end/data/rgb/) and optical flow frames (under deep-smoke-machine/back-end/data/flow/). Because computing optical flow takes a very long time, by default this script will only process rgb frames. If you need the optical flow frames, change the flow_type to 1 in the process_videos.py script.

python process_videos.py

# Background script (runs in the background using the "screen" command)

sh bg.sh python process_videos.py

Extract I3D features under deep-smoke-machine/back-end/data/i3d_features_rgb/ and deep-smoke-machine/back-end/data/i3d_features_flow/. Note that you need to process the optical flow frames in the previous step before running the i3d-flow model.

python extract_features.py [method] [optional_model_path]

# Extract features from pretrained i3d

python extract_features.py i3d-rgb

python extract_features.py i3d-flow

# Extract features from a saved i3d model

python extract_features.py i3d-rgb ../data/saved_i3d/ecf7308-i3d-rgb/model/16875.pt

python extract_features.py i3d-flow ../data/saved_i3d/af00751-i3d-flow/model/30060.pt

# Background script (runs in the background using the "screen" command)

sh bg.sh python extract_features.py i3d-rgb

sh bg.sh python extract_features.py i3d-flow

Train the model with cross-validation on all dataset splits. The model will be trained on the training set and validated on the validation set. Pretrained weights are obtained from the pytorch-i3d repository. By default, the information of the trained I3D model will be placed in the deep-smoke-machine/back-end/data/saved_i3d/ folder. For the description of the models, please refer to our technical report. Note that PyTorch DistributedDataParallel (multi-GPU parallel computing) is enabled by default (see i3d_learner.py).

python train.py [method] [optional_model_path]

# Use I3D features + SVM

python train.py svm-rgb-cv-1

# Use Two-Stream Inflated 3D ConvNet

python train.py i3d-rgb-cv-1

# Background script (runs in the background using the "screen" command)

sh bg.sh python train.py i3d-rgb-cv-1

Test the performance of a model on the test set. This step will also generate summary videos for each cell in the confusion matrix (true positive, true negative, false positive, and false negative).

python test.py [method] [model_path]

# Use I3D features + SVM

python test.py svm-rgb-cv-1 ../data/saved_svm/445cc62-svm-rgb/model/model.pkl

# Use Two-Stream Inflated 3D ConvNet

python test.py i3d-rgb-cv-1 ../data/saved_i3d/ecf7308-i3d-rgb/model/16875.pt

# Background script (runs in the background using the "screen" command)

sh bg.sh python test.py i3d-rgb-cv-1 ../data/saved_i3d/ecf7308-i3d-rgb/model/16875.pt

Run Grad-CAM to visualize the areas in the videos that the model is looking at.

python grad_cam_viz.py i3d-rgb [model_path]

# Background script (runs in the background using the "screen" command)

sh bg.sh python grad_cam_viz.py i3d-rgb [model_path]

After model training and testing, the folder structure will look like the following:

    └── saved_i3d                    # this corresponds to deep-smoke-machine/back-end/data/saved_i3d/
        └── 549f8df-i3d-rgb-s1       # the name of the model, s1 means split 1
            ├── cam                  # the visualization using Grad-CAM
            ├── log                  # the log when training models
            ├── metadata             # the metadata of the dataset split
            ├── model                # the saved models
            ├── run                  # the saved information for TensorBoard
            └── viz                  # the sampled videos for each cell in the confusion matrix

If you want to see the training and testing results on TensorBoard, run the following and go to the stated URL in your browser.

cd deep-smoke-machine/back-end/data/

tensorboard --logdir=saved_i3d

Recommended training strategy:

1. Set an initial learning rate (e.g., 0.1)
2. Keep this learning rate and train the model until the training error decreases too slowly (or fluctuates) or until the validation error increases (a sign of overfitting)
3. Decrease the learning rate (e.g., by a factor of 10)
4. Load the best model weights from the ones that were trained using the previous learning rate
5. Repeat steps 2, 3, and 4 until convergence
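The strategy above can be sketched as a plain Python loop. This is only an illustration and not code from this repository: `train_stage` is a hypothetical callback that trains at one fixed learning rate, starting from the given weights, and returns the best weights and validation error it found. The fixed `num_stages` is an assumed stand-in for a real convergence check.

```python
def staged_training(train_stage, initial_lr=0.1, decay_factor=10.0, num_stages=3):
    """Run the staged learning-rate strategy with a hypothetical callback.

    train_stage(lr, start_weights) must return (best_weights, best_val_error)
    for one stage trained at a fixed learning rate.
    """
    lr = initial_lr
    best_weights, best_error = None, float("inf")
    history = []  # one (learning rate, validation error) pair per stage
    for _ in range(num_stages):
        # Train at the current rate, starting from the best weights so far
        weights, error = train_stage(lr, best_weights)
        history.append((lr, error))
        # Keep the new weights only if they beat the previous best
        if error < best_error:
            best_weights, best_error = weights, error
        # Decrease the learning rate for the next stage
        lr = lr / decay_factor
    return best_weights, best_error, history


# Toy callback (made up): pretends the validation error equals the learning rate
def fake_stage(lr, start_weights):
    return {"trained_at": lr}, lr


weights, error, history = staged_training(fake_stage)
print(len(history), "stages, best validation error:", error)
```

In practice the callback would wrap the training loop and stop a stage when the training error stalls or the validation error starts to rise.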

Our publicly released dataset (a snapshot of the smoke labeling tool on 2/24/2020) is the metadata_02242020.json file under the deep-smoke-machine/back-end/data/dataset/ folder. The json file contains an array, where each element represents the metadata for one video. Each element is a dictionary with the keys and values explained below:

- camera_id
  - ID of the camera (0 means clairton1, 1 means braddock1, and 2 means westmifflin1)
- view_id
  - ID of the cropped view from the camera
  - Each camera produces a panorama, and each view is cropped from this panorama (will be explained later in this section)
- id
  - Unique ID of the video clip
- label_state
  - State of the video label produced by the citizen science volunteers (will be explained later in this section)
- label_state_admin
  - State of the video label produced by the researchers (will be explained later in this section)
- start_time
  - Starting epoch time (in seconds) when capturing the video, corresponding to the real-world time
- url_root
  - URL root of the video; combine it with url_part to get the full URL (url_root + url_part)
- url_part
  - URL part of the video; combine it with url_root to get the full URL (url_root + url_part)
- file_name
  - File name of the video, for example 0-1-2018-12-13-6007-928-6509-1430-180-180-6614-1544720610-1544720785
  - The format of the file_name is [camera_id]-[view_id]-[year]-[month]-[day]-[bound_left]-[bound_top]-[bound_right]-[bound_bottom]-[video_height]-[video_width]-[start_frame_number]-[start_epoch_time]-[end_epoch_time]
  - bound_left, bound_top, bound_right, and bound_bottom define the bounding box of the video clip in the panorama
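As an illustration of the file_name format, the following minimal sketch splits a name into its 14 fields. The helper `parse_file_name` and the `FIELDS` list are hypothetical names introduced here, not part of the repository; the sketch assumes every field is an integer and tolerates an optional ".mp4" extension.

```python
FIELDS = [
    "camera_id", "view_id", "year", "month", "day",
    "bound_left", "bound_top", "bound_right", "bound_bottom",
    "video_height", "video_width",
    "start_frame_number", "start_epoch_time", "end_epoch_time",
]


def parse_file_name(file_name):
    """Split a video file name into a dictionary of integer fields."""
    if file_name.endswith(".mp4"):
        file_name = file_name[:-4]  # drop the extension if present
    parts = file_name.split("-")
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    return dict(zip(FIELDS, (int(p) for p in parts)))


info = parse_file_name(
    "0-1-2018-12-13-6007-928-6509-1430-180-180-6614-1544720610-1544720785"
)
print(info["camera_id"], info["view_id"])  # 0 1
# Difference between the end and start epoch times, in seconds
print(info["end_epoch_time"] - info["start_epoch_time"])  # 175
```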

Note that the url_root and url_part point to videos with a resolution of 180 by 180 pixels. We also provide a higher resolution (320 by 320) version of the videos. Simply replace "/180/" with "/320/" in the url_root, and replace "-180-180-" with "-320-320-" in the url_part. For example, see the following:

- URL for the 180 by 180 version: https://smoke.createlab.org/videos/180/2019-06-24/0-7/0-7-2019-06-24-3504-1067-4125-1688-180-180-9722-1561410615-1561410790.mp4

- URL for the 320 by 320 version: https://smoke.createlab.org/videos/320/2019-06-24/0-7/0-7-2019-06-24-3504-1067-4125-1688-320-320-9722-1561410615-1561410790.mp4
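The replacement rule can be sketched in a few lines of Python. The function name `full_url` is hypothetical, and the point where the example URL is divided into url_root and url_part is an assumption made for illustration; check the metadata file for the actual values.

```python
def full_url(entry, high_res=False):
    """Build the video URL from a metadata entry (a dictionary).

    With high_res=True, the 180 by 180 URL is rewritten to the 320 by 320 one.
    """
    url_root, url_part = entry["url_root"], entry["url_part"]
    if high_res:
        url_root = url_root.replace("/180/", "/320/")
        url_part = url_part.replace("-180-180-", "-320-320-")
    return url_root + url_part


# Example entry; where url_root ends and url_part begins is assumed here
entry = {
    "url_root": "https://smoke.createlab.org/videos/180/",
    "url_part": "2019-06-24/0-7/0-7-2019-06-24-3504-1067-4125-1688-180-180-9722-1561410615-1561410790.mp4",
}
print(full_url(entry))
print(full_url(entry, high_res=True))
```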

Each video is reviewed by at least two citizen science volunteers (or one researcher who received the smoke reading training). Our technical report describes the details of the labeling and quality control mechanism. The states of the label (label_state and label_state_admin) in metadata_02242020.json are briefly explained below.

- 23 : strong positive
  - Two volunteers both agree (or one researcher says) that the video has smoke.
- 16 : strong negative
  - Two volunteers both agree (or one researcher says) that the video does not have smoke.
- 19 : weak positive
  - Two volunteers have different answers, and the third volunteer says that the video has smoke.
- 20 : weak negative
  - Two volunteers have different answers, and the third volunteer says that the video does not have smoke.
- 5 : maybe positive
  - One volunteer says that the video has smoke.
- 4 : maybe negative
  - One volunteer says that the video does not have smoke.
- 3 : has discord
  - Two volunteers have different answers (one says yes, and another one says no).
- -1 : no data, no discord
  - No data. If label_state_admin is -1, it means that the label is produced solely by citizen science volunteers. If label_state is -1, it means that the label is produced solely by researchers. Otherwise, the label is jointly produced by both citizen science volunteers and researchers. Please refer to our technical report about these three cases.
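For quick inspection of the metadata, the state codes above can be turned into a lookup table. The sketch below is illustrative only: `describe_label` is a hypothetical helper, and treating an entry where both states are -1 as having no label source is an assumption, since that case is not described above. It does not reproduce how split_metadata.py aggregates the final label.

```python
LABEL_STATES = {
    23: "strong positive",
    16: "strong negative",
    19: "weak positive",
    20: "weak negative",
    5: "maybe positive",
    4: "maybe negative",
    3: "has discord",
    -1: "no data, no discord",
}


def describe_label(entry):
    """Describe both label states of a metadata entry and who produced them."""
    volunteers = entry["label_state"]
    researchers = entry["label_state_admin"]
    if volunteers == -1 and researchers == -1:
        source = "none"  # assumption: this case is not covered in the text
    elif researchers == -1:
        source = "volunteers"
    elif volunteers == -1:
        source = "researchers"
    else:
        source = "both"
    return {
        "volunteers": LABEL_STATES.get(volunteers, "unknown state"),
        "researchers": LABEL_STATES.get(researchers, "unknown state"),
        "source": source,
    }


print(describe_label({"label_state": 23, "label_state_admin": -1}))
```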

After running the split_metadata.py script, the "label_state" and "label_state_admin" keys in the dictionary will be aggregated into the final label, represented by the new "label" key (see the json files in the generated deep-smoke-machine/back-end/data/split/ folder). Positive (value 1) and negative (value 0) labels indicate whether the video clip has smoke emissions or not, respectively.

Also, the dataset will be divided into several splits, based on camera views or dates. The file names (without the ".json" file extension) are listed below. Splits S0, S1, S2, S3, S4, and S5 correspond to the ones indicated in the technical report.

| Split | Train | Validate | Test |
|---|---|---|---|
| S0 | metadata_train_split_0_by_camera | metadata_validation_split_0_by_camera | metadata_test_split_0_by_camera |
| S1 | metadata_train_split_1_by_camera | metadata_validation_split_1_by_camera | metadata_test_split_1_by_camera |
| S2 | metadata_train_split_2_by_camera | metadata_validation_split_2_by_camera | metadata_test_split_2_by_camera |
| S3 | metadata_train_split_by_date | metadata_validation_split_by_date | metadata_test_split_by_date |
| S4 | metadata_train_split_3_by_camera | metadata_validation_split_3_by_camera | metadata_test_split_3_by_camera |
| S5 | metadata_train_split_4_by_camera | metadata_validation_split_4_by_camera | metadata_test_split_4_by_camera |
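To check the class balance of one of the split files listed above, something like the following minimal sketch could be used. It assumes each split file is a JSON array of dictionaries carrying the "label" key described earlier; `load_split` and `count_labels` are hypothetical helpers, and the sample entries are made up.

```python
import json
from collections import Counter


def load_split(path):
    """Load one split file (assumed to be a JSON array of video metadata)."""
    with open(path) as f:
        return json.load(f)


def count_labels(entries):
    """Count positive (1) and negative (0) labels in a list of entries."""
    counts = Counter(entry["label"] for entry in entries)
    return {"positive": counts[1], "negative": counts[0]}


# Made-up entries standing in for the content of a split file
sample = [{"id": 1, "label": 1}, {"id": 2, "label": 0}, {"id": 3, "label": 0}]
print(count_labels(sample))  # {'positive': 1, 'negative': 2}

# With real data (path relative to where the repository was cloned):
# entries = load_split(
#     "deep-smoke-machine/back-end/data/split/metadata_train_split_0_by_camera.json")
# print(count_labels(entries))
```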

The following table shows the content of each split, except S3. The splitting strategy ensures that each view is present in the testing set at least once. Also, the camera views that monitor different industrial facilities (views 1-0, 2-0, 2-1, and 2-2) are always in the testing set. Examples of the camera views will be provided later in this section.

| View | S0 | S1 | S2 | S4 | S5 |
|---|---|---|---|---|---|
| 0-0 | Train | Train | Test | Train | Train |
| 0-1 | Test | Train | Train | Train | Train |
| 0-2 | Train | Test | Train | Train | Train |
| 0-3 | Train | Train | Validate | Train | Test |
| 0-4 | Validate | Train | Train | Test | Validate |
| 0-5 | Train | Validate | Train | Train | Test |
| 0-6 | Train | Train | Test | Train | Validate |
| 0-7 | Test | Train | Train | Validate | Train |
| 0-8 | Train | Train | Validate | Test | Train |
| 0-9 | Train | Test | Train | Validate | Train |
| 0-10 | Validate | Train | Train | Test | Train |
| 0-11 | Train | Validate | Train | Train | Test |
| 0-12 | Train | Train | Test | Train | Train |
| 0-13 | Test | Train | Train | Train | Train |
| 0-14 | Train | Test | Train | Train | Train |
| 1-0 | Test | Test | Test | Test | Test |
| 2-0 | Test | Test | Test | Test | Test |
| 2-1 | Test | Test | Test | Test | Test |
| 2-2 | Test | Test | Test | Test | Test |
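The table can also be transcribed into a small lookup structure, for example to list the views used for testing in a given split or to confirm that every view is tested at least once. This is a hand-copied transcription for illustration (the names `VIEW_ROLES`, `views_with_role`, and `never_tested` are hypothetical); the generated files in the split folder remain the authoritative source.

```python
SPLITS = ["S0", "S1", "S2", "S4", "S5"]

# Role of each view in S0, S1, S2, S4, S5 (copied from the table above)
VIEW_ROLES = {
    "0-0": "Train Train Test Train Train",
    "0-1": "Test Train Train Train Train",
    "0-2": "Train Test Train Train Train",
    "0-3": "Train Train Validate Train Test",
    "0-4": "Validate Train Train Test Validate",
    "0-5": "Train Validate Train Train Test",
    "0-6": "Train Train Test Train Validate",
    "0-7": "Test Train Train Validate Train",
    "0-8": "Train Train Validate Test Train",
    "0-9": "Train Test Train Validate Train",
    "0-10": "Validate Train Train Test Train",
    "0-11": "Train Validate Train Train Test",
    "0-12": "Train Train Test Train Train",
    "0-13": "Test Train Train Train Train",
    "0-14": "Train Test Train Train Train",
    "1-0": "Test Test Test Test Test",
    "2-0": "Test Test Test Test Test",
    "2-1": "Test Test Test Test Test",
    "2-2": "Test Test Test Test Test",
}


def views_with_role(split, role):
    """List the views that have the given role (e.g. "Test") in a split."""
    column = SPLITS.index(split)
    return [v for v, roles in VIEW_ROLES.items() if roles.split()[column] == role]


def never_tested():
    """List the views that are not in the testing set of any split."""
    return [v for v, roles in VIEW_ROLES.items() if "Test" not in roles.split()]


print(views_with_role("S0", "Test"))
print(never_tested())  # [] means every view is tested at least once
```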

The following shows the split of S3 by time sequence, where the earliest 18 days are used for training, the middle 2 days are used for validation, and the most recent 10 days are used for testing. You can find our camera data by date on our air pollution monitoring network.

- Training set of S3
  - 2018-05-11, 2018-06-11, 2018-06-12, 2018-06-14, 2018-07-07, 2018-08-06, 2018-08-24, 2018-09-03, 2018-09-19, 2018-10-07, 2018-11-10, 2018-11-12, 2018-12-11, 2018-12-13, 2018-12-28, 2019-01-11, 2019-01-17, 2019-01-18
- Validation set of S3
  - 2019-01-22, 2019-02-02
- Testing set of S3
  - 2019-02-03, 2019-02-04, 2019-03-14, 2019-04-01, 2019-04-07, 2019-04-09, 2019-05-15, 2019-06-24, 2019-07-26, 2019-08-11
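A chronological split of this kind can be sketched as follows. This is an illustration of the idea rather than the logic in split_metadata.py; `split_by_date` is a hypothetical helper that relies on ISO-formatted dates (YYYY-MM-DD) sorting chronologically as plain strings.

```python
def split_by_date(dates, n_train=18, n_val=2):
    """Assign the earliest dates to training, the next ones to validation,
    and all remaining (most recent) dates to testing."""
    ordered = sorted(set(dates))  # ISO date strings sort chronologically
    return {
        "train": ordered[:n_train],
        "validation": ordered[n_train:n_train + n_val],
        "test": ordered[n_train + n_val:],
    }


# Small made-up example with 5 dates: 3 for training, 1 for validation
example = ["2019-02-03", "2018-05-11", "2019-01-22", "2018-06-11", "2018-12-13"]
print(split_by_date(example, n_train=3, n_val=1))
```

Applied to the 30 dates in the dataset with the default arguments, this yields 18 training days, 2 validation days, and 10 testing days.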

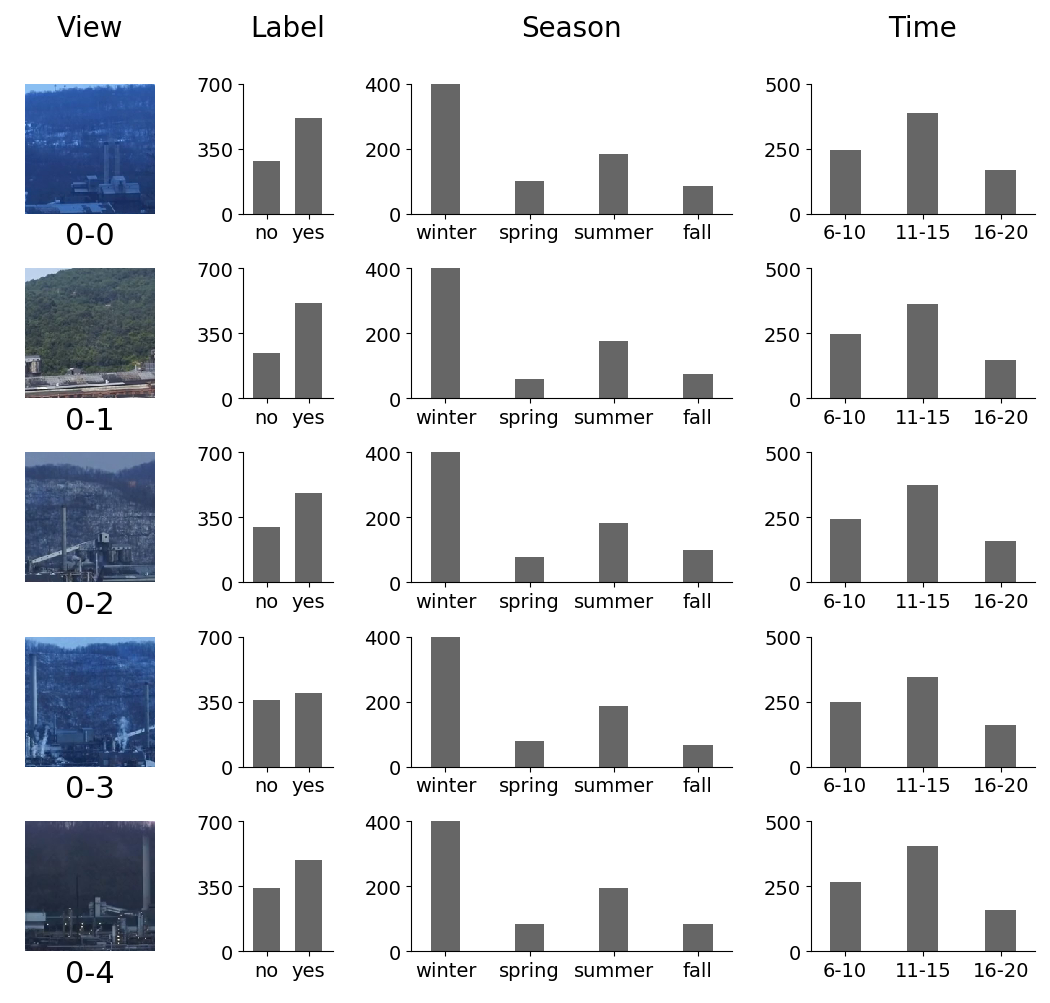

The dataset contains 12,567 clips with 19 distinct views from cameras on three sites that monitored three different industrial facilities. The clips are from 30 days that span four seasons over two years, all recorded in the daytime. The following provides examples and the distribution of labels for each camera view, in the format [camera_id]-[view_id]: