This Documentation will guide you on how to set up the automation pipeline to update dataset on Data.wa utilizing:

- GitHub Action as task scheduler

- Azure Cloud Storage as data storage solution

Prerequisites

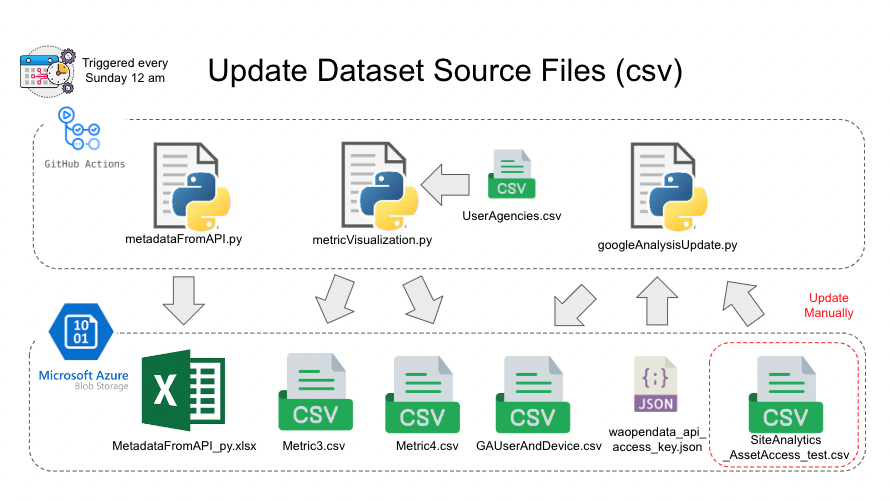

You need to have the SiteAnalytics_AssetAccess_test.csv and waopendata_api_access_key.json files under the destination Azure Blob Storage container.

- Execute metadataFromAPI.py to retrieve the latest Metadata and generate an excel file (MetadataFromAPI_py.xlsx) to Azure data storage.

- Execute metricVisualization.py, which will generate two new csv files (Metric3.csv and Metric4.csv) and also update the one in Azure.

- Execute googleAnalysisUpdate.py to fetch data from Google Analysis and generate a new csv file (GAUserAndDevice.csv) to replace the old one in Azure.

| Python Script Name | GeneratedData | Required Setting File |

|---|---|---|

| metadataFromAPI.py | MetadataFromAPI_py.xslx | N/A |

| metricVisualization.py | Metrics3.csv Metrics4.csv |

UserAgencies.csv (on GitHub) |

| googleAnalysisUpdate.py | GAUserAndDevice.csv | waopendata_api_access_key.json (on Azure Blob Storage) SiteAnalytics_AssetAccess_test.csv (on Azure Blob Storage) |

Prerequisites

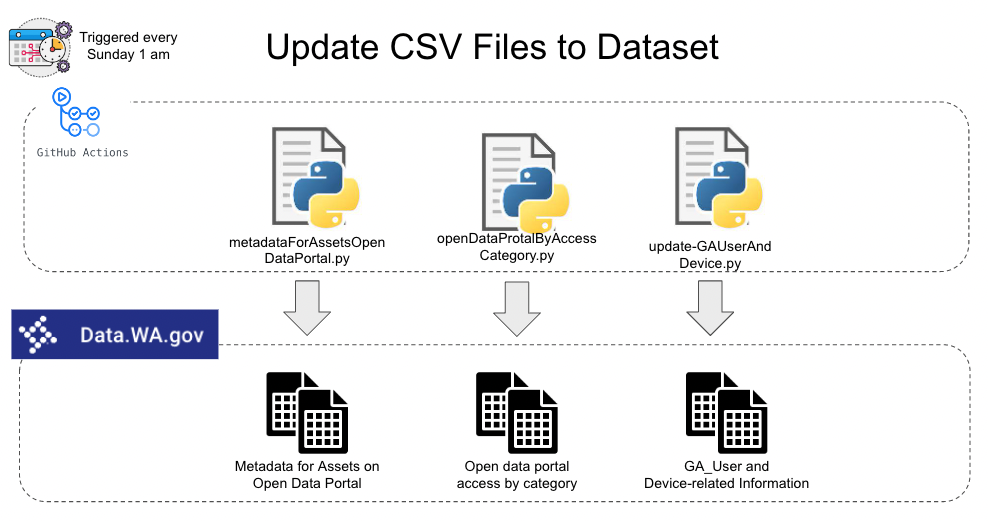

Make sure the files on Azure Blob Storage is already updated.

- Execute metadataForAssetsOpenDataPortal.py to update dataset Metadata for Assets on Open Data Portal.

- Execute openDataProtalByAccessCategory.py to update dataset Open data portal access by category.

- Execute update-GAUserAndDevice.py to update dataset GA_User and Device-related Information

| Python Script Name | File name | Corresponding Dataset | Corresponding Metrics Name |

|---|---|---|---|

| metadataForAssetsOpenDataPortal.py | Metric3.csv | Metadata for Assets on Open Data Portal | Number of assets hosted on data.wa.gov(Measure) Number of datasets with visualizations(Metric) Number of datasets with stories(Metric) |

| openDataProtalByAccessCategory.py | Metric4.csv | Open data portal access by category | Access of assets hosted on data.wa.gov & Most popular assets(Measure) |

| update-GAUserAndDevice.py | GAUserAndDevice.csv | GA_User and Device-related Information | Access of assets hosted on data.wa.gov & Most popular assets(Measure) |

| Workflow Name | YAML File Name | Corresponding Script | Task Detail |

|---|---|---|---|

| Generate and Update MetadataFromAPI_py.csv | UpdateCSVtoAzure.yml | metadataFromAPI.py | Run python script to retrieve new data and generate new MetaDataFromAPI in excel form |

| Generate and Update Metric3.csv, Metric4.csv, and GAUserAndDevice.csv | UpdateMetricsDataset.yml | metricVisualization.py googleAnalysisUpdate.py |

Run two python scripts to generate and and update 3 csv files for dataset updates |

| Update GA_User and Device-related Information Dataset | Update_GA_Device_Dataset.yml | googleAnalysisUpdate.py | Run python script to update GA_User and Device-related Information dataset |

| Update Metadata for Assets on Open Data Portal Dataset | Update_Metadata_Asset_Dataset.yml | metadataForAssetsOpenDataPortal.py | Run python script to update Metadata for Assets on Open Data Portal dataset |

| Update Open Data Protal Access By Category Dataset | Update_Open_Data_Portal_Access.yml | openDataProtalByAccessCategory.py | Run python script to update Open data portal access by category dataset |

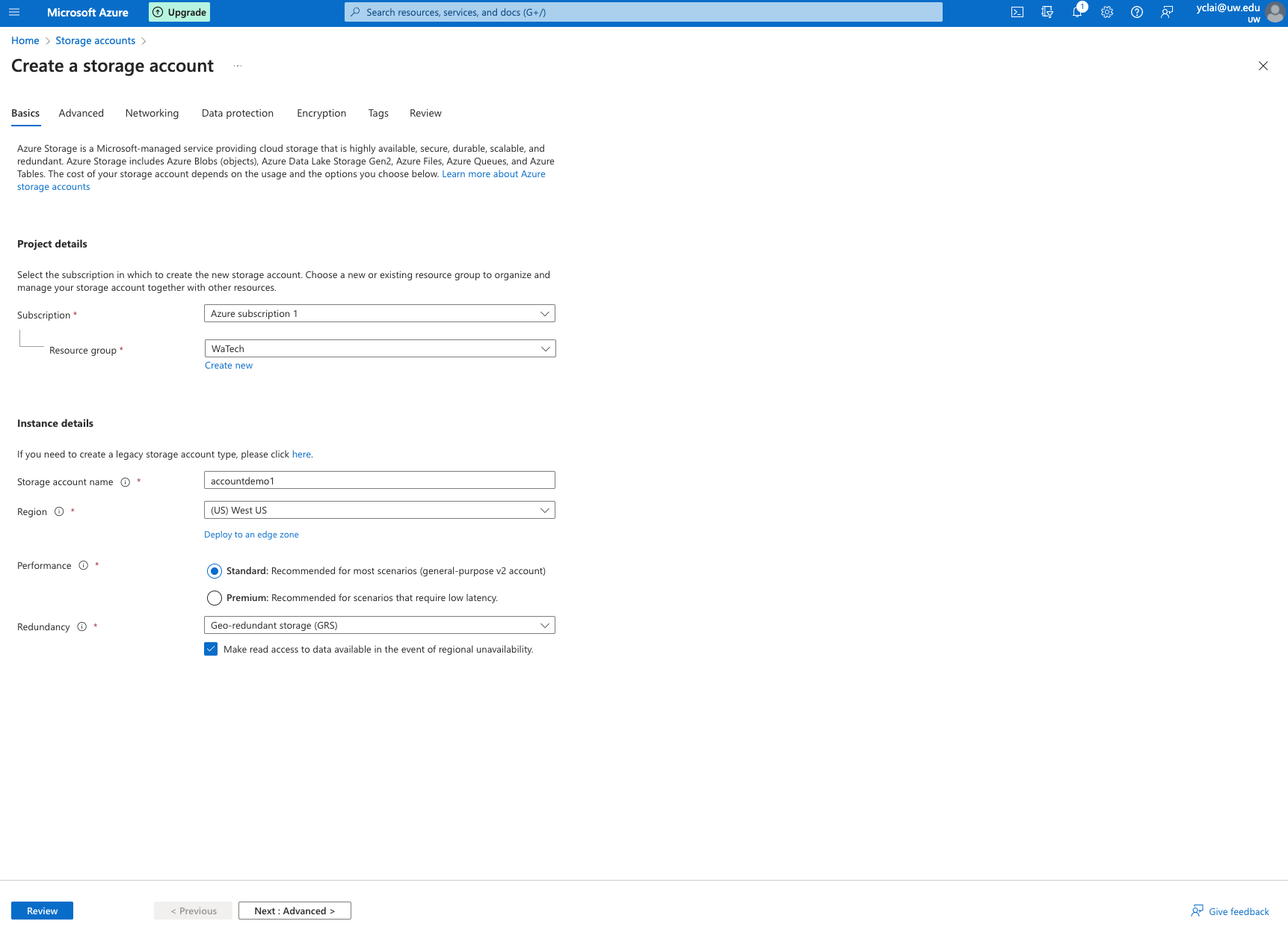

In order to create a Azure Data Storage, you will first need to have an Azure account for yourself. After you created and logged into the Azure platform Portal, here are the following steps to help you create your Azure Storage:

- Login to Azure Portal:

Go to https://portal.azure.com/ and sign in with your Microsoft Azure account.

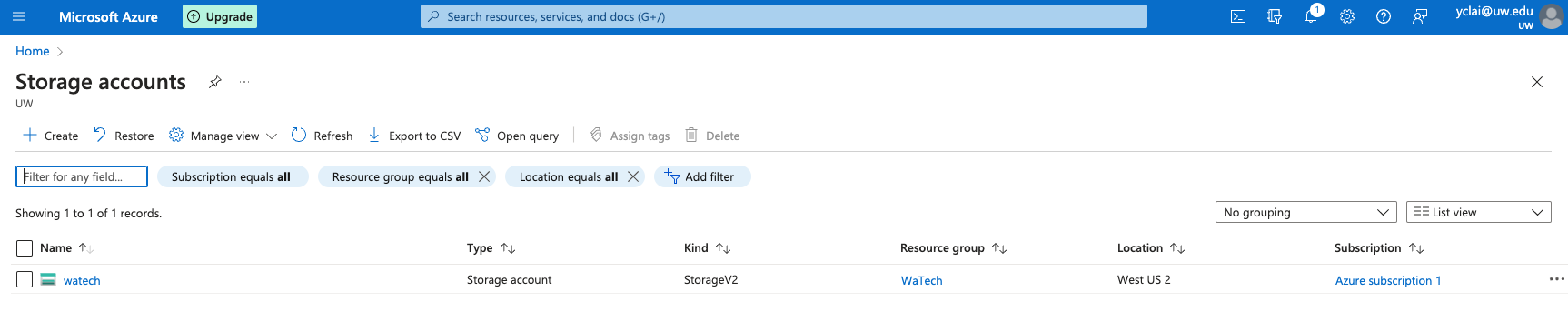

- Create a Storage Account:

Once you've logged in, click on the "Create a resource" button located at the top left of the Azure Portal. From there, you should see a search bar. Type in "Storage account" and select it from the dropdown. On the "Storage account" page, click on the "Create" button to start creating your storage account.

- Configure Your Storage Account:

Now, you'll need to fill in the details for your new storage account:

- Subscription: Choose the subscription under which you want to create the storage account.

- Resource group: You can create a new resource group or use an existing one. A resource group is a way to group together different Azure resources.

- Storage account name: Create a unique name for your storage account. This name must be unique across all existing Azure storage account names.

- Location: Choose the region where you want your storage account to be hosted.

- Performance: Choose between standard and premium. Standard storage uses magnetic drives and is best for most scenarios. Premium storage uses solid-state drives and is best for scenarios that require high transaction rates.

- Account kind: There are different kinds of storage accounts for different types of data, including BlobStorage, StorageV2, and more. StorageV2 is the most general-purpose storage account type.

- Replication: Choose how your data is replicated across Azure's infrastructure. Options include LRS (Locally redundant storage), GRS (Geo-redundant storage), RAGRS (Read-access geo-redundant storage), and ZRS (Zone-redundant storage).

If you don't want to specify the details, you can just use the default setting Azure provided. Remember to create or choose the resource group and storage account name. These two are mandetory in creating storage accounts.

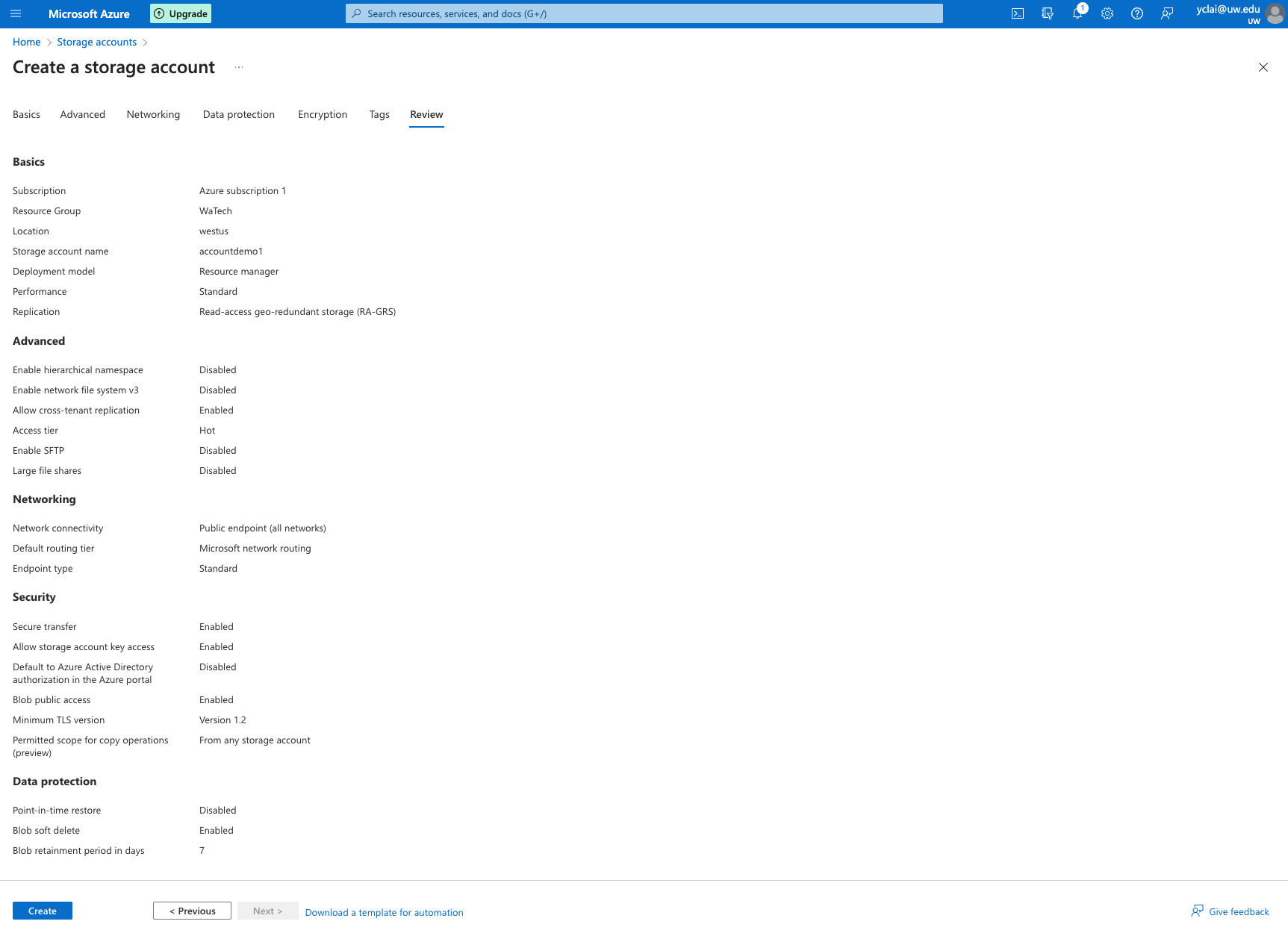

- Review and Create:

Review your settings and click "Create" to create your new storage account.

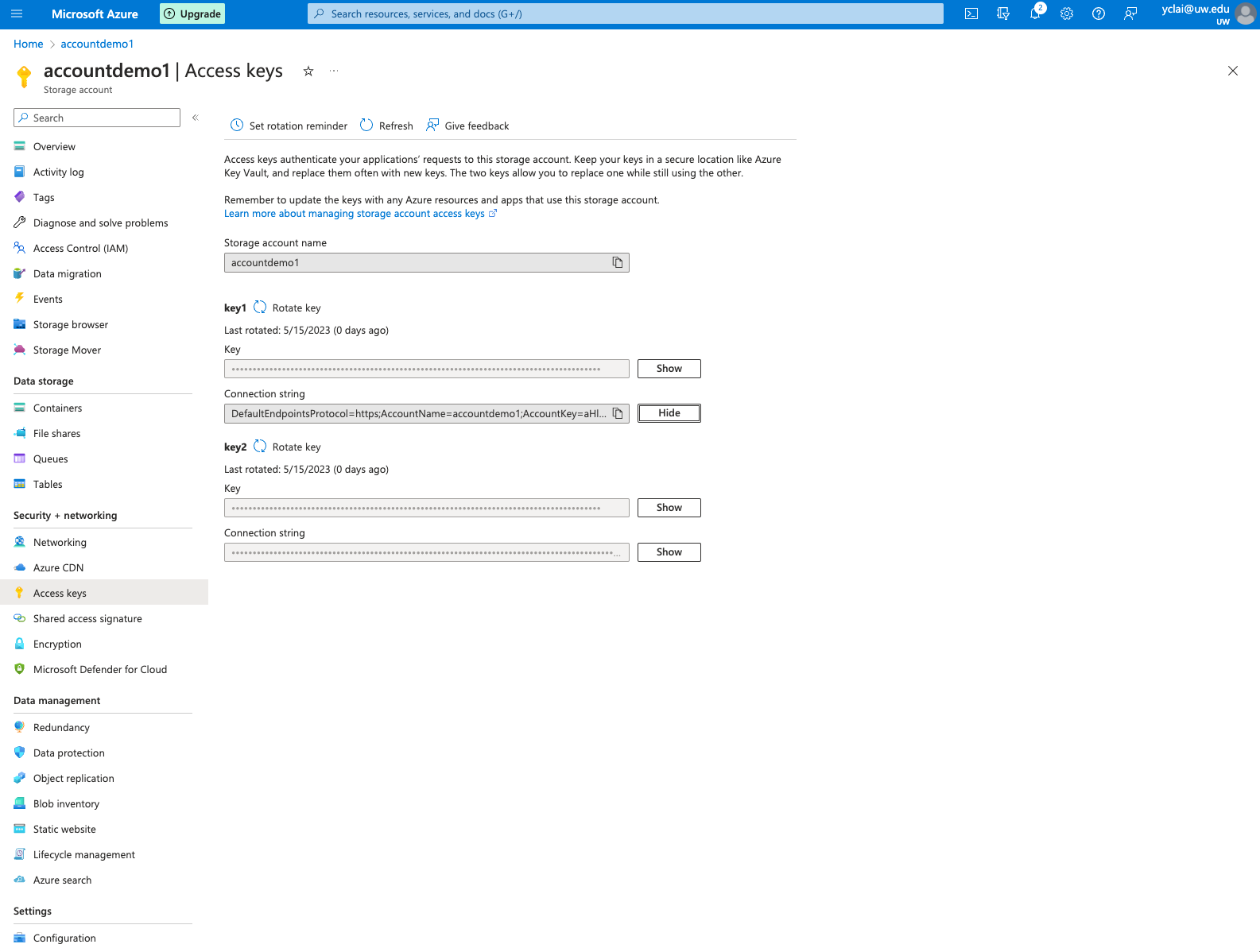

- Access Keys:

Once your storage account is created, you can find your access keys by clicking on "Access keys" under the "Security + networking" section of your storage account page. You'll need these keys to authenticate your application or service with your storage account.

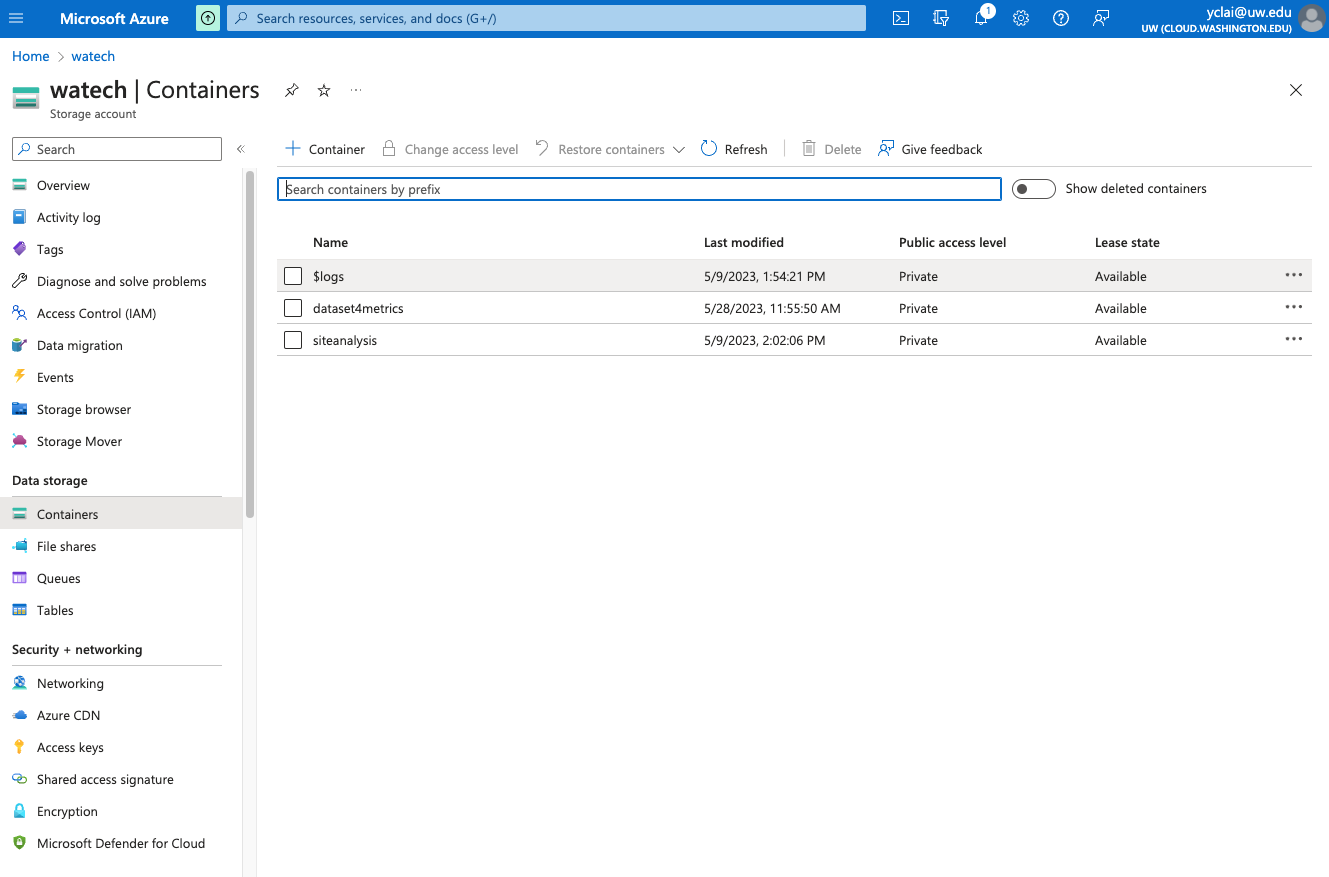

- Create a container:

To store data in blob storage, you'll need to create a container. From your storage account page, click on "Containers" under the "Blob service" section, then click "+ Container" to create a new container.

The container name here will directly influence your execution result. Be sure to use the same container name in the following step for credentials.

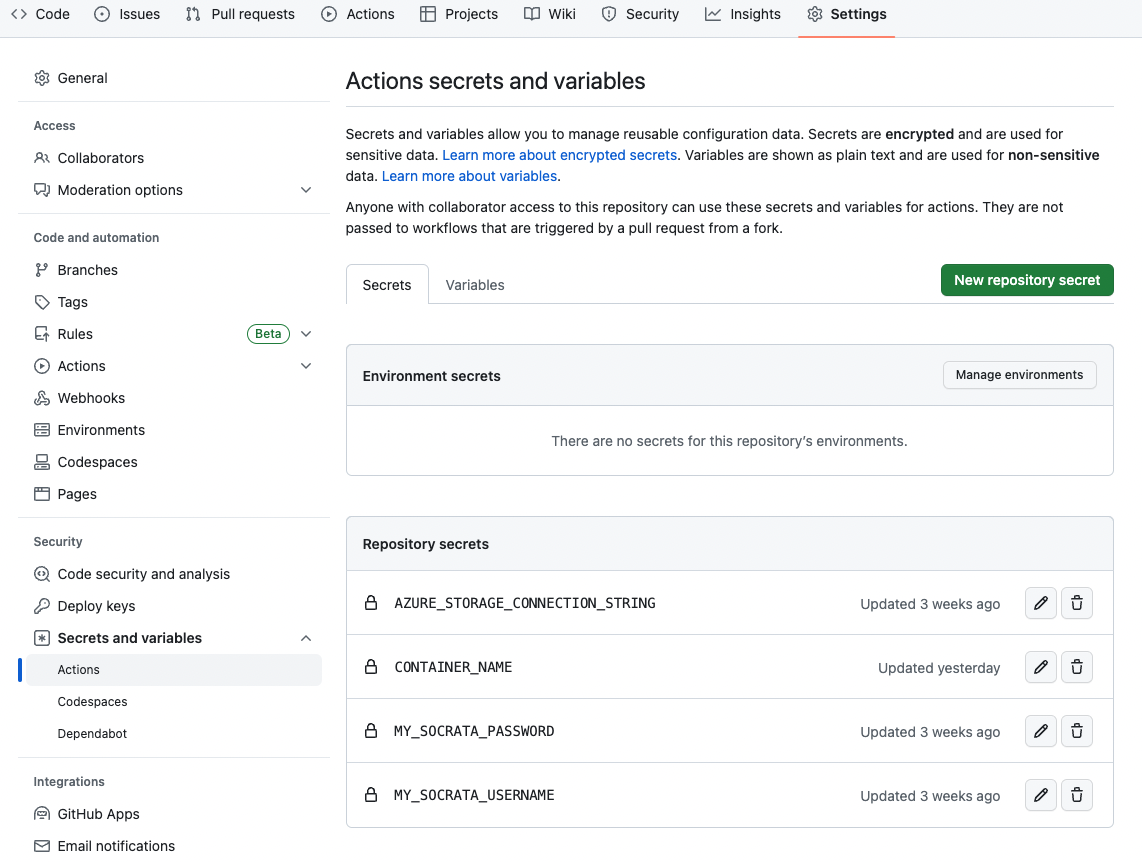

| Name | Value |

|---|---|

| MY_SOCRATA_USERNAME | the username of the service account |

| MY_SOCRATA_PASSWORD | the password of the service account |

| AZURE_STORAGE_CONNECTION_STRING | the connection string retrieved from Azure service |

| CONTAINER_NAME | the container name used on Azure Blob Storage |

If your are going to create a service account to run the scripts, make sure it has enough permission to access the target datasets on Data.wa website

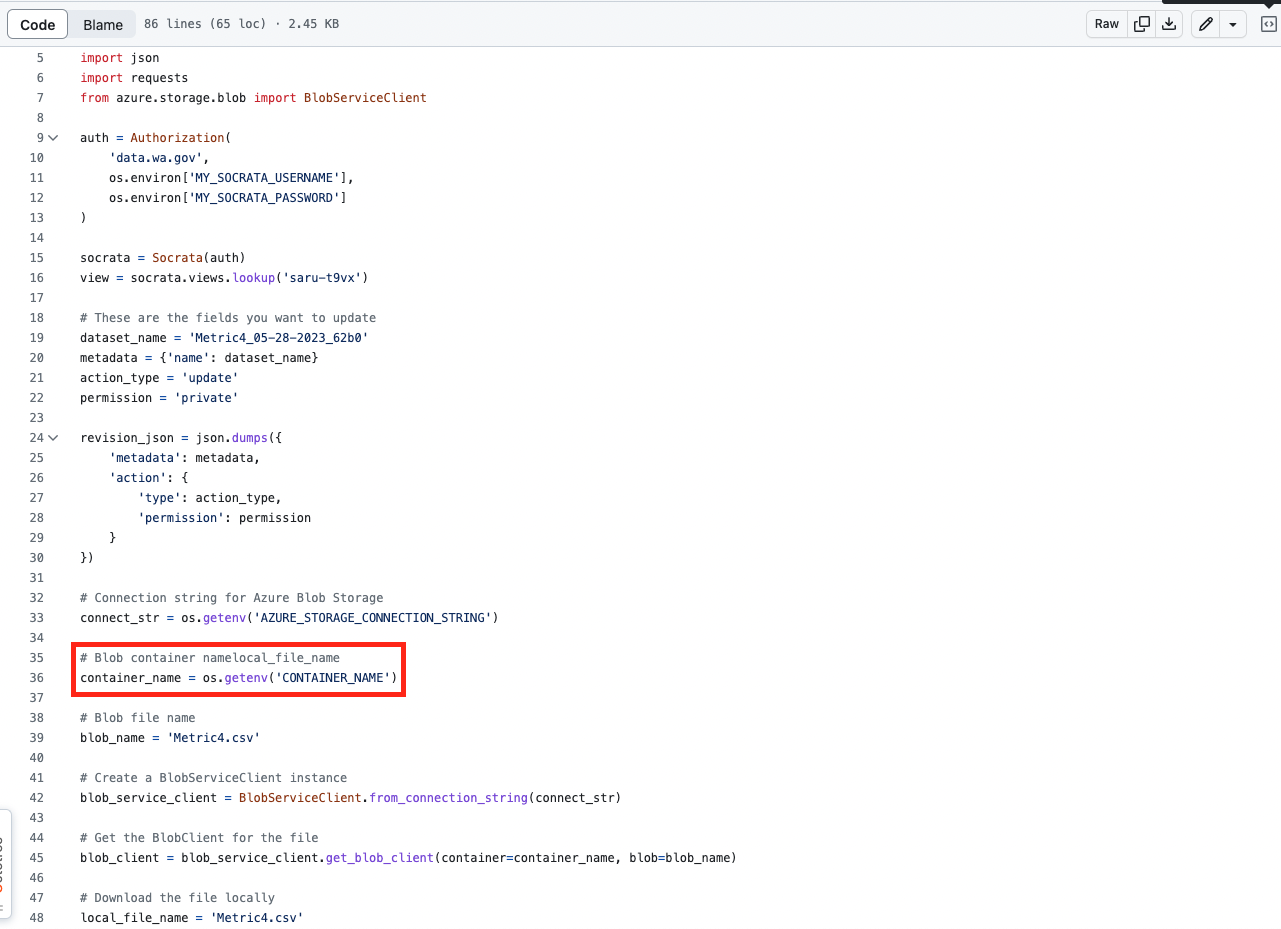

- MY_SOCRATA_USERNAME: this is the username of the service account

- MY_SOCRATA_PASSWORD: this is the password of the service account

- AZURE_STORAGE_CONNECTION_STRING: this is the access key under your Azure Storage Account

- CONTAINER_NAME: this is the container name you created and used for storage

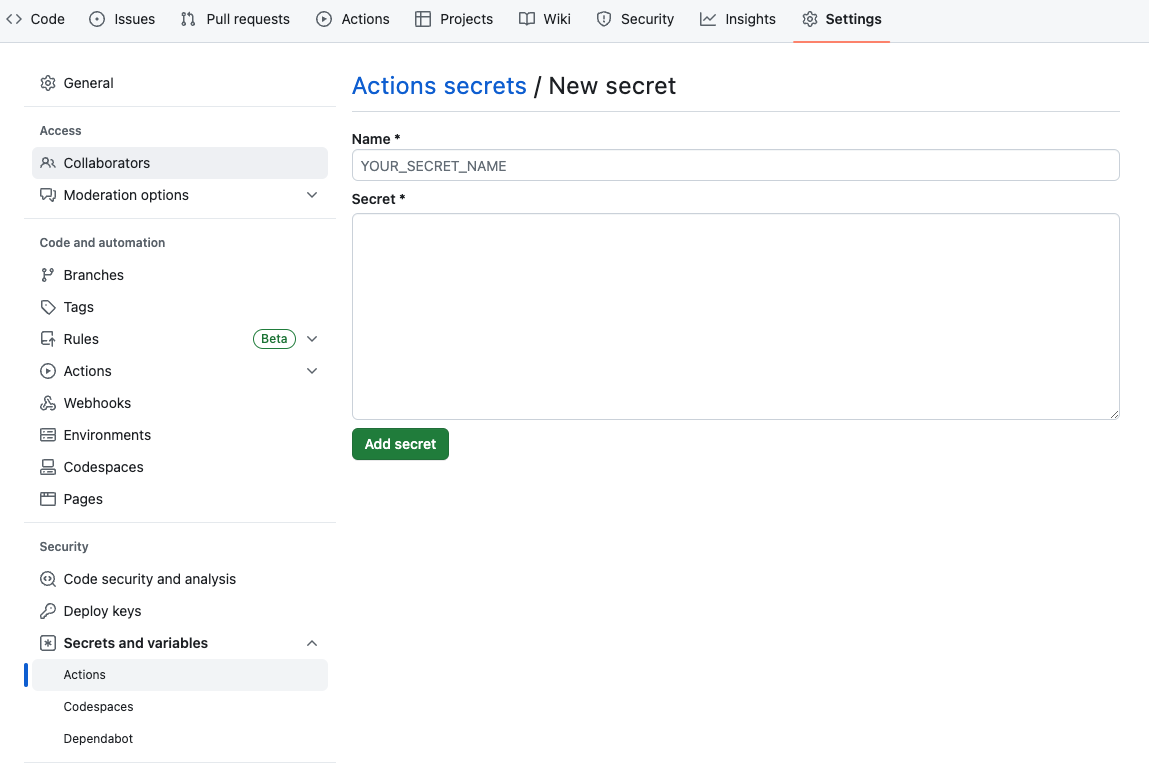

- In the GitHub Repository, click "Settings" tab

- Click "Secrets and variables" in the left sidebar

- Choose "Actions" and add or edit the above-mentioned secrets

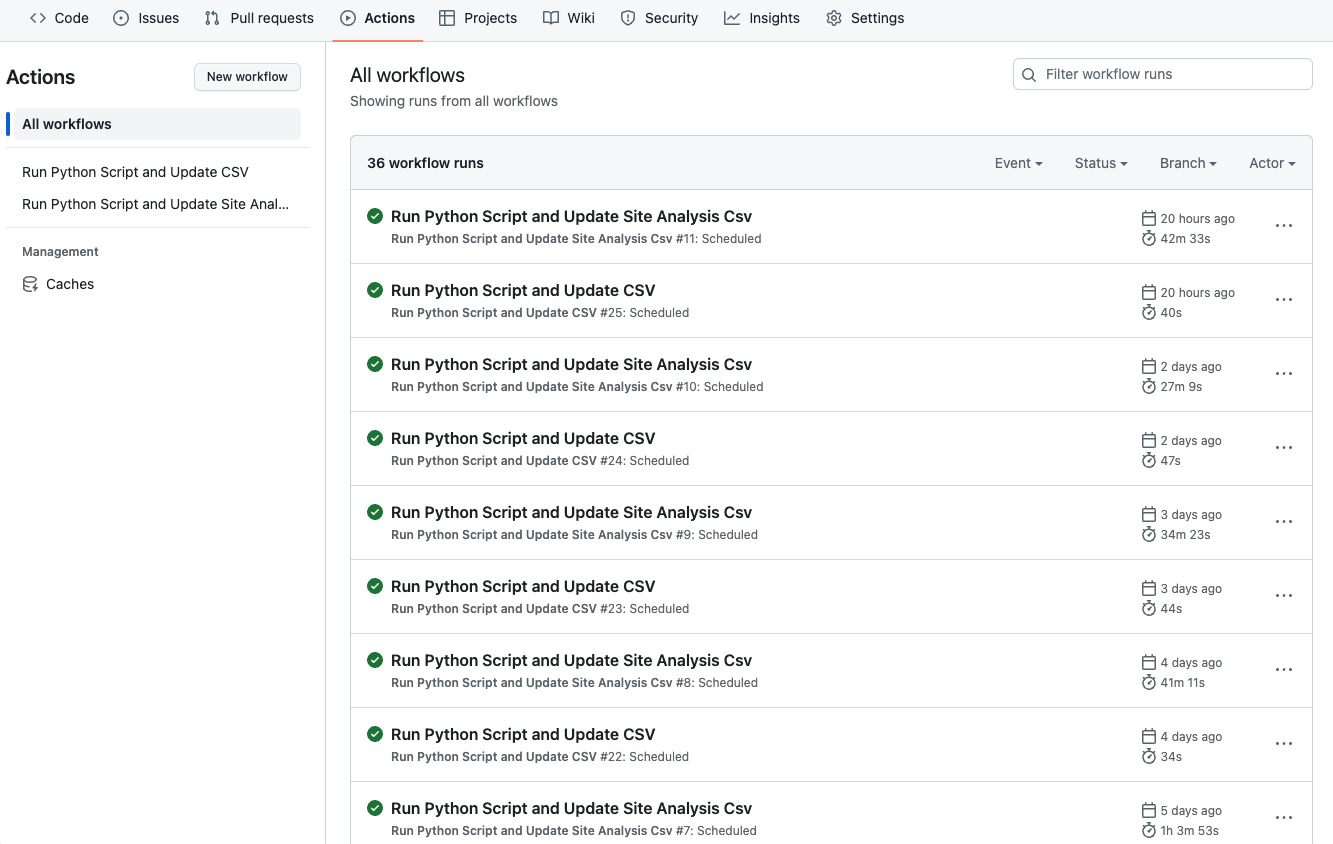

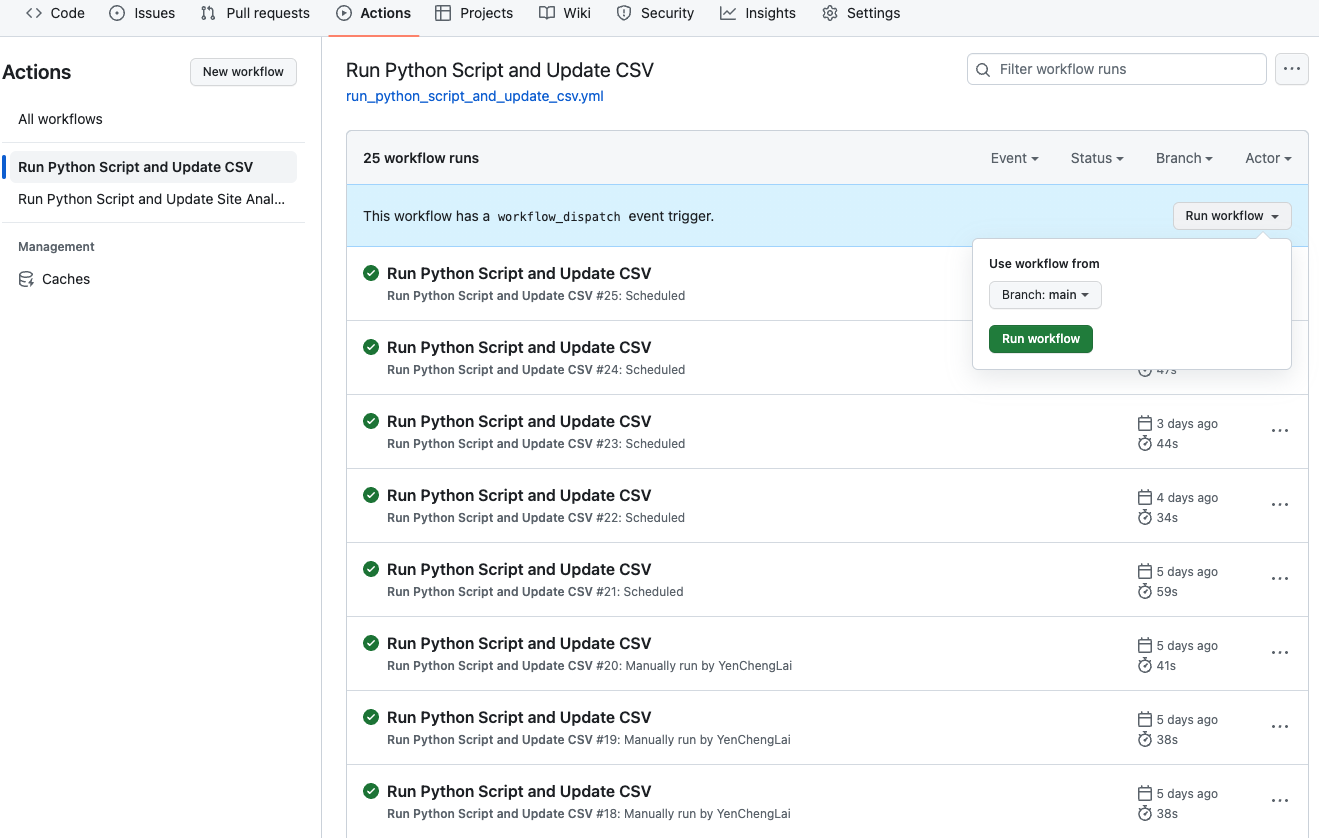

- In the GitHub Repository, click "Actions" tab. You will see all actions and execution results here.

- You can choose a specific action at the left sidebar. If the action provide a manual trigger, it will be mentioned at this page, and you can trigger it by clicking "Run workflow"

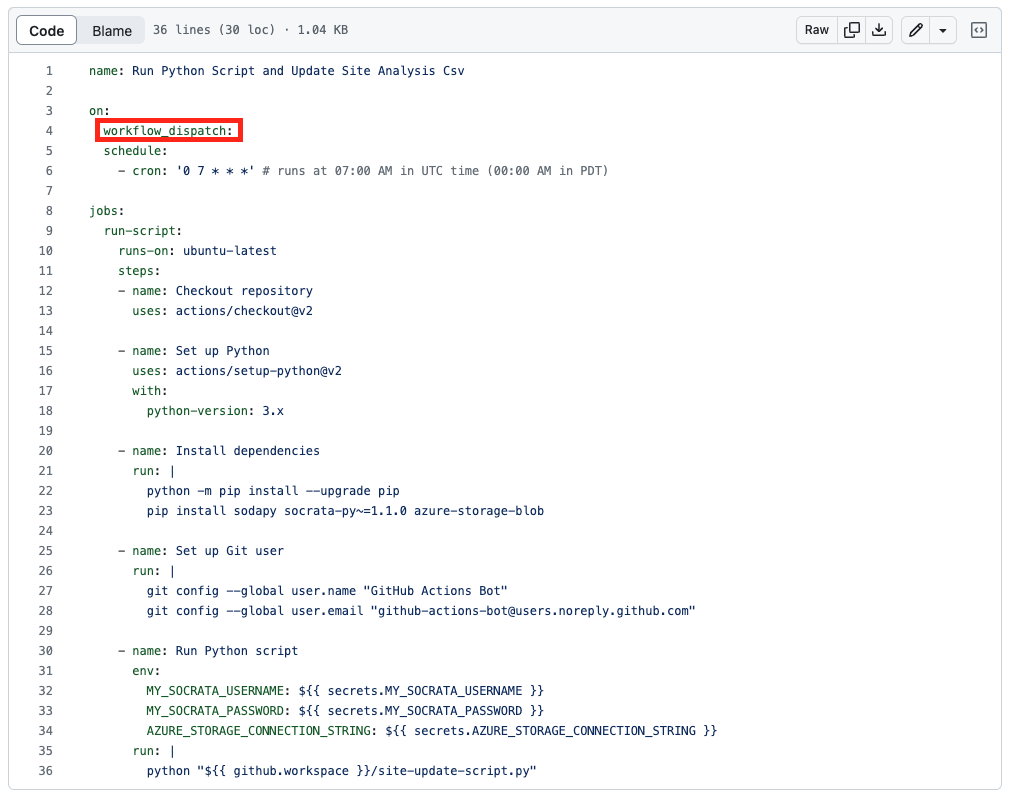

- If you want to add a manual trigger to a specific action, you can do it by adding "workflow_dispatch:" under the "on" section in the corresponding .yml file. Please keep in mind that you need to make sure the indentation, order, and colon are as stated as the below picture because it will affect the .yml compiling result.