If you like our work, please support us with a star ⭐!

🚀 Welcome to the repo of PolaFormer!

This repo contains the official PyTorch code and pre-trained models for PolaFormer.

- [2/4] 🔥 The Triton implementation of PolaFormer has been released, thanks to the fbi_la library.

- [1/22] 🔥 Our paper has been accepted by the International Conference on Learning Representations (ICLR), 2025.

Linear attention has emerged as a promising alternative to softmax-based attention, leveraging kernelized feature maps to reduce complexity from

In this paper, we propose a polarity-aware linear attention mechanism that explicitly models both same-signed and opposite-signed query-key interactions, ensuring comprehensive coverage of relational information. Furthermore, to restore the spiky properties of attention maps, we prove that a class of element-wise functions (those with positive first and second derivatives) can reduce the entropy of the attention distribution. Finally, we employ a learnable power function for rescaling, which allows strong and weak attention signals to be effectively separated.
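As a rough illustration of the two ideas in this paragraph, the sketch below uses plain Python lists rather than the repository's PyTorch modules. It splits a query and a key into their non-negative positive and negative parts, shows that the same-signed and opposite-signed terms together recover the ordinary dot product, and then checks on one example that an element-wise power with exponent greater than 1 (positive first and second derivatives on positive inputs) lowers the entropy of the normalized scores. All function names and the fixed exponent `3.0` are assumptions made for illustration; in PolaFormer the exponent is learnable.

```python
import math


def split_polarity(vec):
    """Split a vector into non-negative parts so that vec = pos - neg."""
    pos = [max(x, 0.0) for x in vec]
    neg = [max(-x, 0.0) for x in vec]
    return pos, neg


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def polarity_terms(q, k):
    """Return the (same-signed, opposite-signed) parts of the q-k interaction."""
    q_pos, q_neg = split_polarity(q)
    k_pos, k_neg = split_polarity(k)
    same = dot(q_pos, k_pos) + dot(q_neg, k_neg)
    opposite = dot(q_pos, k_neg) + dot(q_neg, k_pos)
    return same, opposite


def entropy(weights):
    """Shannon entropy of non-negative weights after normalizing them to sum to 1."""
    total = sum(weights)
    probs = [w / total for w in weights]
    return -sum(p * math.log(p) for p in probs if p > 0)


def power_rescale(weights, p):
    """Element-wise power; p is fixed here, whereas the model learns it."""
    return [w ** p for w in weights]


q = [0.5, -1.0, 2.0]
k = [1.5, 0.5, -1.0]
same, opposite = polarity_terms(q, k)
# Same-signed minus opposite-signed recovers the ordinary dot product.
assert abs((same - opposite) - dot(q, k)) < 1e-12

scores = [0.2, 0.5, 1.0, 3.0]
sharpened = power_rescale(scores, 3.0)
# The rescaled scores are more peaked, so their entropy is lower.
assert entropy(sharpened) < entropy(scores)
```

This only demonstrates the decomposition and the sharpening effect on a toy example; it is not the attention layer itself.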

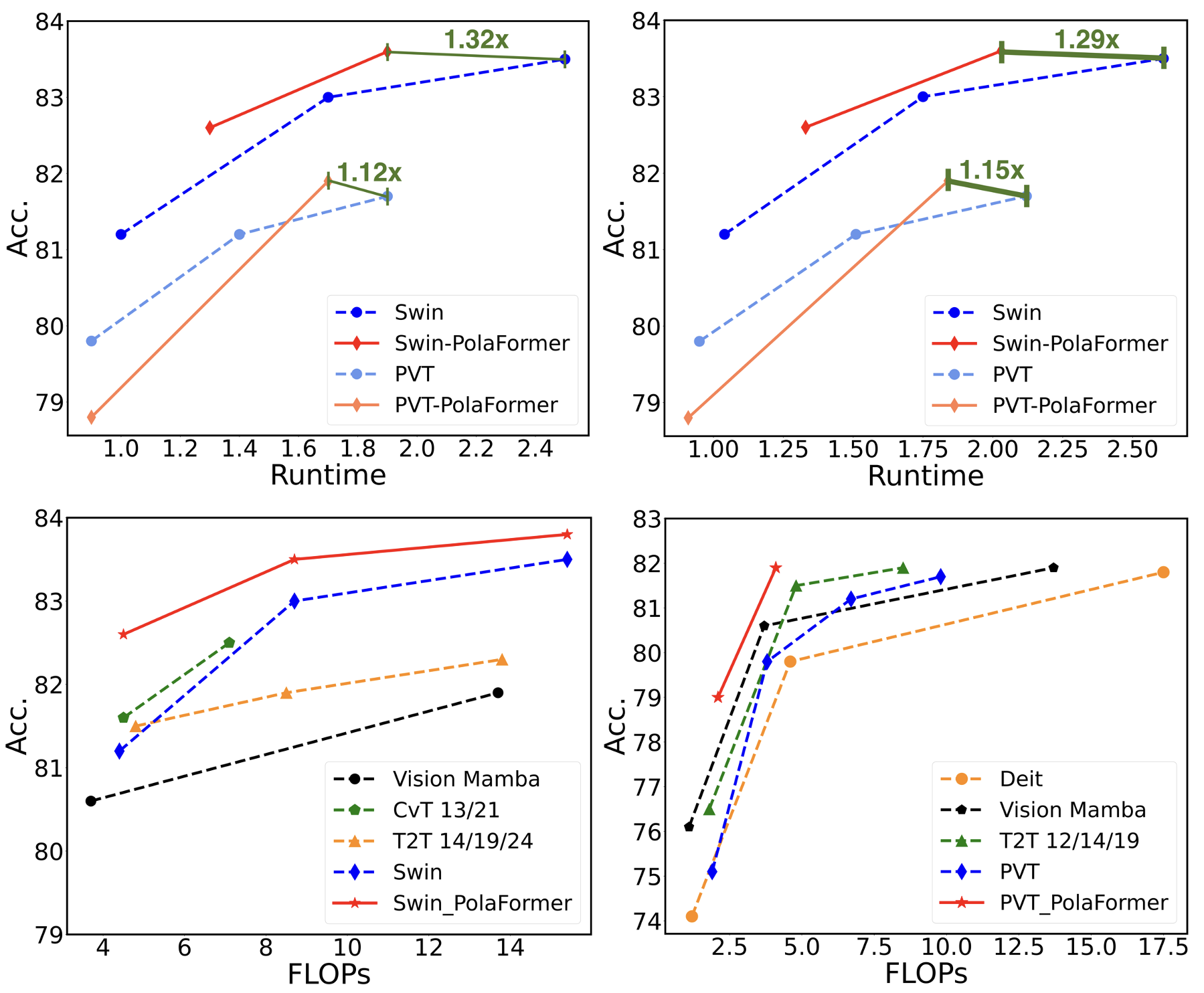

Notably, we introduce two learnable polarity-aware coefficient matrices, applied by element-wise multiplication, which are expected to learn the complementary relationship between same-signed and opposite-signed values.

- Comparison of different models on ImageNet-1K.

- Performance on Long Range Arena benchmark.

| Model | Text | ListOps | Retrieval | Pathfinder | Image | Average |
|---|---|---|---|---|---|---|
| | 73.06 | 37.35 | 80.50 | 70.53 | 42.15 | 60.72 |
| | 72.33 | 38.76 | 80.37 | 68.98 | 41.91 | 60.47 |
| | 71.93 | 37.60 | 81.47 | 69.09 | 42.77 | 60.57 |

- Python 3.9

- PyTorch == 1.11.0

- torchvision == 0.12.0

- numpy

- timm == 0.4.12

- einops

- yacs
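To confirm that an environment matches the list above, a small check along these lines may help. It is a sketch that uses only the standard library (`importlib.metadata`); the `REQUIRED` table and the `check_requirements` helper are hypothetical names, not part of this repository. Packages are looked up by their PyPI names, so PyTorch appears as `torch`.

```python
from importlib import metadata

# Versions pinned in the list above; None means any installed version is accepted.
REQUIRED = {
    "torch": "1.11.0",
    "torchvision": "0.12.0",
    "numpy": None,
    "timm": "0.4.12",
    "einops": None,
    "yacs": None,
}


def check_requirements(required=REQUIRED):
    """Return {package: (installed_version_or_None, ok)} for each requirement."""
    report = {}
    for name, wanted in required.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed is None:
            ok = False
        elif wanted is None:
            ok = True
        else:
            # Ignore local build tags such as "+cu113" when comparing.
            ok = installed.split("+")[0] == wanted
        report[name] = (installed, ok)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_requirements().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: installed={installed} [{status}]")
```

Running the script prints one line per package; any `MISMATCH` line points to a package that is missing or installed at a different version than the one pinned above.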

The ImageNet dataset should be prepared as follows:

    $ tree data
    imagenet
    ├── train
    │   ├── class1
    │   │   ├── img1.jpeg
    │   │   ├── img2.jpeg
    │   │   └── ...
    │   ├── class2
    │   │   ├── img3.jpeg
    │   │   └── ...
    │   └── ...
    └── val
        ├── class1
        │   ├── img4.jpeg
        │   ├── img5.jpeg
        │   └── ...
        ├── class2
        │   ├── img6.jpeg
        │   └── ...
        └── ...
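Before launching a run, the layout above can be sanity-checked with a few lines of standard-library Python. This is only a sketch: `check_imagenet_layout`, `summarize_split`, and the accepted file extensions are assumptions for illustration and are not part of this repository.

```python
import os

# File extensions counted as images; an assumption, adjust as needed.
IMAGE_EXTENSIONS = (".jpeg", ".jpg", ".png")


def summarize_split(split_dir):
    """Map each class folder in split_dir to the number of image files it holds."""
    counts = {}
    for entry in sorted(os.listdir(split_dir)):
        class_dir = os.path.join(split_dir, entry)
        if not os.path.isdir(class_dir):
            continue  # skip stray files next to the class folders
        counts[entry] = sum(
            1
            for name in os.listdir(class_dir)
            if name.lower().endswith(IMAGE_EXTENSIONS)
        )
    return counts


def check_imagenet_layout(root):
    """Return per-split class counts, raising if train/ or val/ is missing."""
    summary = {}
    for split in ("train", "val"):
        split_dir = os.path.join(root, split)
        if not os.path.isdir(split_dir):
            raise FileNotFoundError(f"missing split directory: {split_dir}")
        summary[split] = summarize_split(split_dir)
    return summary
```

For example, `check_imagenet_layout("data/imagenet")` returns a dictionary with a `train` and a `val` entry, each mapping class folder names to image counts, so empty or missing class folders are easy to spot.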

Based on different model architectures, we provide several pretrained models, as listed below.

| model | Reso | acc@1 | config |
|---|---|---|---|
| Pola-PVT-T | | 78.8 (+3.7) | config |
| Pola-PVT-S | | 81.9 (+2.1) | config |
| Pola-Swin-T | | 82.6 (+1.4) | config |
| Pola-Swin-S | | 83.6 (+0.6) | config |
| Pola-Swin-B | | 83.8 (+0.3) | config |

Evaluate one model on ImageNet:

    python -m torch.distributed.launch --nproc_per_node=8 main.py --cfg <path-to-config-file> --data-path <imagenet-path> --output <output-path> --eval --resume <path-to-pretrained-weights>

- To train our model on ImageNet from scratch, see pretrain.sh and run:

      bash pretrain.sh

This code is developed on top of Swin Transformer and FLatten Transformer.

If you find this repo helpful, please consider citing us.

    @inproceedings{
    meng2025polaformer,
    title={PolaFormer: Polarity-aware Linear Attention for Vision Transformers},
    author={Weikang Meng and Yadan Luo and Xin Li and Dongmei Jiang and Zheng Zhang},
    booktitle={The Thirteenth International Conference on Learning Representations},
    year={2025},
    url={https://openreview.net/forum?id=kN6MFmKUSK}
    }

If you have any questions, please feel free to contact the authors.

Weikang Meng: [email protected]