This is a forked tutorial guide; the main code will not be updated.

This forked GitHub project is intended for folks who have little to no command-line knowledge and want to run Nerfstudio to create gaussian splats. If you have used Instant NGP, Nerfstudio, or other similar command-line based radiance field projects, you have most likely already installed some or all of the dependencies required for this project.

I created two walkthrough videos to complement the tutorial (coming soon). You can watch them independently or with this project page as reference. Please follow my YT channel for additional updates. Now let's get gaussian splatting with Nerfstudio!

The section below is from the original GitHub page. Jump down to "Start Here" for the actual tutorial.

It’s as simple as plug and play with nerfstudio!

Nerfstudio provides a simple API that allows for a simplified end-to-end process of creating, training, and testing NeRFs. The library supports a more interpretable implementation of NeRFs by modularizing each component. With more modular NeRFs, we hope to create a more user-friendly experience in exploring the technology.

This is a contributor-friendly repo with the goal of building a community where users can more easily build upon each other's contributions. Nerfstudio initially launched as an open-source project by Berkeley students in KAIR lab at Berkeley AI Research (BAIR) in October 2022 as a part of a research project (paper). It is currently developed by Berkeley students and community contributors.

We are committed to providing learning resources to help you understand the basics of (if you're just getting started), and keep up-to-date with (if you're a seasoned veteran) all things NeRF. As researchers, we know just how hard it is to get onboarded with this next-gen technology. So we're here to help with tutorials, documentation, and more!

Have feature requests? Want to add your brand-spankin'-new NeRF model? Have a new dataset? We welcome contributions! Please do not hesitate to reach out to the nerfstudio team with any questions via Discord.

Have feedback? We'd love for you to fill out our Nerfstudio Feedback Form if you want to let us know who you are, why you are interested in Nerfstudio, or provide any feedback!

We hope nerfstudio enables you to build faster 🔨 learn together 📚 and contribute to our NeRF community 💖.

You must have an NVIDIA video card with CUDA installed on the system. This library has been tested with version 11.8 of CUDA. You can find more information about installing CUDA here

Install Git.

Install Visual Studio 2022. This must be done before installing CUDA. The necessary components are included in the Desktop Development with C++ workload (also called C++ Build Tools in the BuildTools edition).

Nerfstudio requires python >= 3.8. We recommend using conda to manage dependencies. Make sure to install Conda before proceeding.

You will need to set up an environment on your PC to install dependencies and code. This next section will be completed primarily in command prompt. If you are new to command prompt, I suggest watching the installation video on my YouTube channel.

conda create --name nerfstudio -y python=3.8

conda activate nerfstudio

pip install --upgrade pip

Install PyTorch with CUDA (this repo has been tested with CUDA 11.7 and CUDA 11.8) and tiny-cuda-nn.

cuda-toolkit is required for building tiny-cuda-nn.

You can check which version of CUDA Toolkit you have installed by entering nvcc --version into command prompt. Use the instructions below that match your version of CUDA Toolkit to install PyTorch.
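The version check above can also be scripted. The following is a minimal sketch, not part of Nerfstudio: it assumes `nvcc` prints a line containing `release <major>.<minor>`, and the helper names `parse_cuda_release` and `detect_cuda_release` are hypothetical. The index URLs are copied from the pip commands in this guide.

```python
import re
import shutil
import subprocess
from typing import Optional

# PyTorch wheel index URLs, copied from the pip commands in this guide.
TORCH_INDEX_BY_CUDA = {
    "11.7": "https://download.pytorch.org/whl/cu117",
    "11.8": "https://download.pytorch.org/whl/cu118",
}


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Return the 'major.minor' release found in `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def detect_cuda_release() -> Optional[str]:
    """Run `nvcc --version` if nvcc is on PATH and parse its output."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = detect_cuda_release()
    if release is None:
        print("nvcc was not found on PATH; install the CUDA Toolkit first.")
    elif release in TORCH_INDEX_BY_CUDA:
        print(f"CUDA {release} detected; use index {TORCH_INDEX_BY_CUDA[release]}")
    else:
        print(f"CUDA {release} detected; this guide only covers 11.7 and 11.8.")
```

If the script reports a version other than 11.7 or 11.8, the commands in this guide may not apply as written.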

For CUDA 11.7:

pip install torch==2.0.1+cu117 torchvision==0.15.2+cu117 --extra-index-url https://download.pytorch.org/whl/cu117

conda install -c "nvidia/label/cuda-11.7.1" cuda-toolkit

pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

For CUDA 11.8:

pip install torch==2.0.1+cu118 torchvision==0.15.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

conda install -c "nvidia/label/cuda-11.8.0" cuda-toolkit

pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

This method is the easiest!

pip install nerfstudio

Installing from source is optional. Use these commands if you want the latest development version.

git clone https://github.com/nerfstudio-project/nerfstudio.git

cd nerfstudio

pip install --upgrade pip setuptools

pip install -e .

Success! You have installed Nerfstudio!

From time to time you will want to update your version of Nerfstudio, for example to upgrade to version 1.0.0 to access gaussian splatting. This can be done with a few simple steps.

Use this version if you installed Nerfstudio via pip:

pip install --upgrade nerfstudio

Use this version if you installed Nerfstudio via source code:

cd nerfstudio

git pull

pip install -e .

The following will teach you how to train a gaussian splat scene with default settings. This is trained to 30,000 steps.

Open command prompt and start your Nerfstudio conda environment:

conda activate nerfstudio

Navigate to the nerfstudio folder:

cd nerfstudio

In this tutorial, we will use the open dataset provided by the Nerfstudio team. The following command will download an image set that is already prepared for training.

ns-download-data nerfstudio --capture-name=poster

This downloads a folder of data into: /nerfstudio/data/nerfstudio/poster. The result should look like this:

If you want to use your own data, check out the Using Custom Data section.

Next, you will initiate training of the gaussian splat scene.

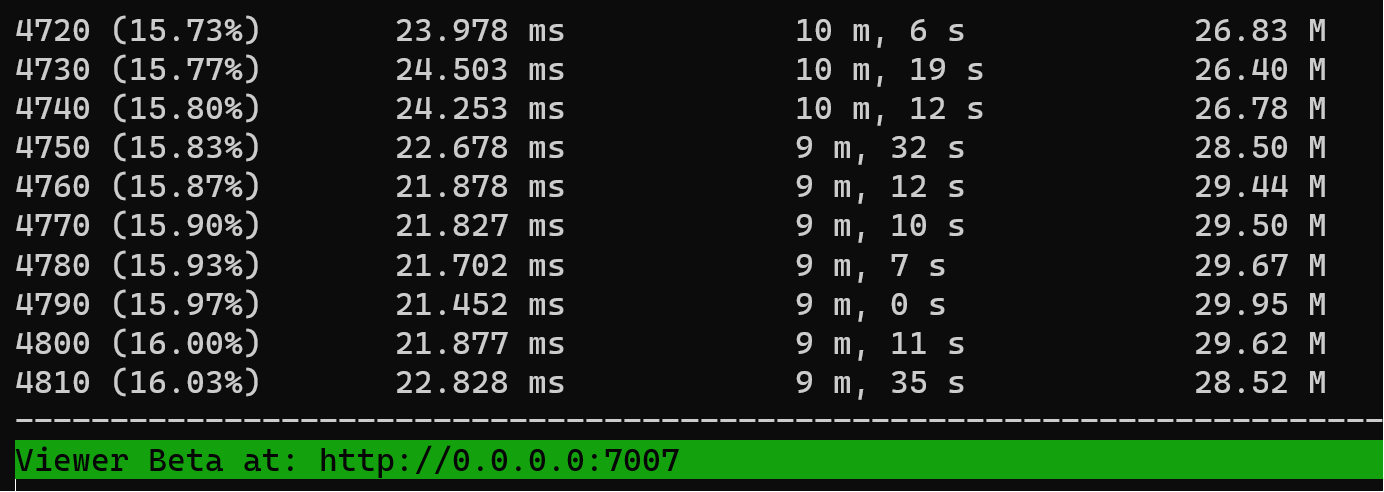

ns-train splatfacto --data data/nerfstudio/poster

If everything works, you should see training progress like the following:

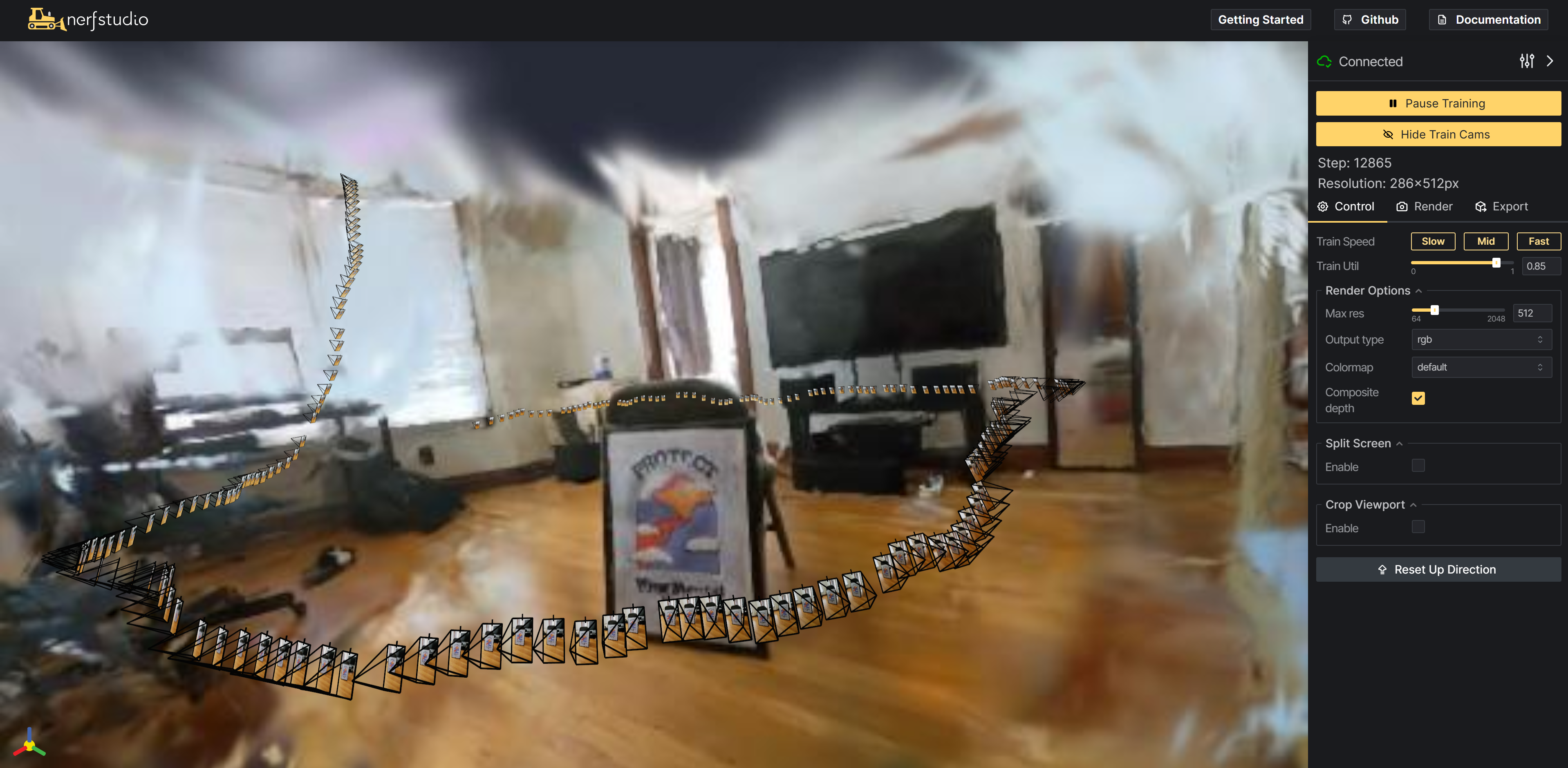

Navigating to the link at the end of the terminal will load the webviewer. If you are running on a remote machine, you will need to port forward the websocket port (defaults to 7007).

Note: At the time of writing this guide, there is a bug where some people will see 0.0.0.0:7007 for the link. Replace the 0.0.0.0 with your IPv4 address. Here are instructions to find your IP address.
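If you would rather not edit the link by hand, the substitution can be scripted. This is a minimal sketch under stated assumptions: `local_ipv4` and `fix_viewer_url` are hypothetical helper names, and the address lookup is a best-effort trick that falls back to 127.0.0.1 when no network route is available.

```python
import socket
from urllib.parse import urlsplit, urlunsplit


def local_ipv4() -> str:
    """Best-effort lookup of this machine's LAN IPv4 address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Connecting a UDP socket sends no packets; it only asks the OS
        # which local address it would use to reach the given host.
        sock.connect(("8.8.8.8", 80))
        return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"
    finally:
        sock.close()


def fix_viewer_url(url: str, host: str) -> str:
    """Replace a 0.0.0.0 host in the viewer URL with the given address."""
    parts = urlsplit(url)
    if parts.hostname != "0.0.0.0":
        return url  # Nothing to fix.
    netloc = host if parts.port is None else f"{host}:{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


if __name__ == "__main__":
    # 7007 is the default viewer port mentioned in this guide.
    print(fix_viewer_url("http://0.0.0.0:7007", local_ipv4()))
```

Paste the link printed in your terminal in place of the example URL; links that do not use 0.0.0.0 are returned unchanged.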

Note: The webviewer will slow down the performance of the model training.

It is possible to resume training a checkpoint by running

ns-train splatfacto --data data/nerfstudio/poster --load-dir {outputs/.../nerfstudio_models}

You can launch any pre-trained model checkpoint into the viewer without initiating further training by running

ns-viewer --load-config {outputs/.../config.yml}

This is recommended if you want to load a project that you fully trained from the command line and are coming back to later to visualize and create video renders.
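Finding the right config.yml inside the outputs folder can be tedious after several training runs. The sketch below is one way to locate the most recent one; it assumes only that each run writes a file named config.yml somewhere under an `outputs` folder, and `latest_config` is a hypothetical helper name.

```python
from pathlib import Path
from typing import Optional


def latest_config(outputs_dir: str = "outputs") -> Optional[Path]:
    """Return the most recently modified config.yml under outputs_dir, or None."""
    root = Path(outputs_dir)
    if not root.is_dir():
        return None
    # Search every subfolder, since the exact run layout is not assumed here.
    configs = list(root.rglob("config.yml"))
    if not configs:
        return None
    return max(configs, key=lambda path: path.stat().st_mtime)


if __name__ == "__main__":
    config = latest_config()
    if config is None:
        print("No config.yml found under outputs; train a model first.")
    else:
        # Print the viewer command from this guide with the path filled in.
        print(f"ns-viewer --load-config {config}")
```

Run it from the folder that contains `outputs`, then copy the printed command into command prompt.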

Once you have a gaussian splatting scene trained, you can either render out a video or export the gaussian splats ply file to view in other platforms such as Unity and UE5.

First we must create a path for the camera to follow. This can be done in the viewer under the "RENDER" tab. Orient your 3D view to the location where you wish the video to start, then press "Add Keyframe". This will set the first camera key frame. Continue to new viewpoints adding additional cameras to create the camera path. Nerfstudio provides other parameters to further refine your camera path. You can press "Preview Render" and then "Play" to preview your camera sequence.

Once satisfied, input a render name and then press "Generate Command", which will display a modal that contains the command needed to render the video. Make sure you copy and paste this command into a notes doc, as it's easy to lose once you close the viewer.

Kill the training job by pressing Ctrl+C in the command prompt window.

Next, run the command you copied from the viewer to generate the video. A video will be rendered and it will display the location path.

Other video export options are available. Learn more by running

ns-render --help

Currently, exporting gaussian splats must be done from the command line. The export section of the viewer does not function correctly with gaussian splats.

You can export the splat ply by running

ns-export gaussian-splat --load-config {outputs/.../config.yml} --output-dir {path/to/directory}

Learn about the export options by running

ns-export gaussian-splat --help

The gaussian splatting training model contains many parameters that can be changed. Use the --help flag to see the full list of configuration options.

ns-train splatfacto --help

Using an existing dataset is great, but you likely want to use your own data! At this point, Nerfstudio's gaussian splatting pipeline only supports images and video via COLMAP.

| Data | Capture Device | Requirements | ns-process-data Speed |
|---|---|---|---|
| 📷 Images | Any | COLMAP | 🐢 |
| 📹 Video | Any | COLMAP | 🐢 |
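As a rough illustration of how custom data is prepared, the sketch below builds an `ns-process-data` command for either row of the table. The `images` and `video` subcommands and the `--data` and `--output-dir` flags come from Nerfstudio's own documentation rather than from this guide, so confirm them with `ns-process-data --help` before relying on them. `build_process_command` and the example paths are hypothetical.

```python
import shlex
from pathlib import Path
from typing import List


def build_process_command(source: str, output_dir: str) -> List[str]:
    """Build an ns-process-data command for a folder of images or a video file."""
    # A directory is treated as an image set; anything else as a video file.
    kind = "images" if Path(source).is_dir() else "video"
    return ["ns-process-data", kind, "--data", source, "--output-dir", output_dir]


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    command = build_process_command("my_capture.mp4", "data/my_capture")
    print(shlex.join(command))
```

Once processing finishes, the output folder can be passed to `ns-train splatfacto --data` in place of the poster dataset.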

And that's it for creating gaussian-splats with nerfstudio.

If you're interested in learning more on how to create your own pipelines, develop with the viewer, run benchmarks, and more, please check out some of the quicklinks below or visit our documentation directly.

| Section | Description |
|---|---|
| Documentation | Full API documentation and tutorials |
| Viewer | Home page for our web viewer |
| 🎒 Educational | |
| Model Descriptions | Description of all the models supported by nerfstudio and explanations of component parts. |
| Component Descriptions | Interactive notebooks that explain notable/commonly used modules in various models. |
| 🏃 Tutorials | |
| Getting Started | A more in-depth guide on how to get started with nerfstudio from installation to contributing. |
| Using the Viewer | A quick demo video on how to navigate the viewer. |
| Using Record3D | Demo video on how to run nerfstudio without using COLMAP. |
| 💻 For Developers | |
| Creating pipelines | Learn how to easily build new neural rendering pipelines by using and/or implementing new modules. |
| Creating datasets | Have a new dataset? Learn how to run it with nerfstudio. |
| Contributing | Walk-through for how you can start contributing now. |
| 💖 Community | |
| Discord | Join our community to discuss more. We would love to hear from you! |
| Twitter | Follow us on Twitter @nerfstudioteam to see cool updates and announcements |
| Feedback Form | We welcome any feedback! This is our chance to learn what you all are using Nerfstudio for. |