Autoformer: Decomposition Transformers with Auto-Correlation for Long-Term Series Forecasting

Time series forecasting is a critical need in real-world applications. Inspired by classic time series analysis and stochastic process theory, we propose Autoformer as a general series forecasting model [paper]. Autoformer goes beyond the Transformer family and achieves a series-wise connection for the first time.

In long-term forecasting, Autoformer achieves state-of-the-art (SOTA) results, with a 38% relative improvement on six benchmarks covering five practical applications: energy, traffic, economics, weather, and disease.

1. Deep decomposition architecture

We renovate the Transformer as a deep decomposition architecture, which can progressively decompose the trend and seasonal components during the forecasting process.

Figure 1. Overall architecture of Autoformer.

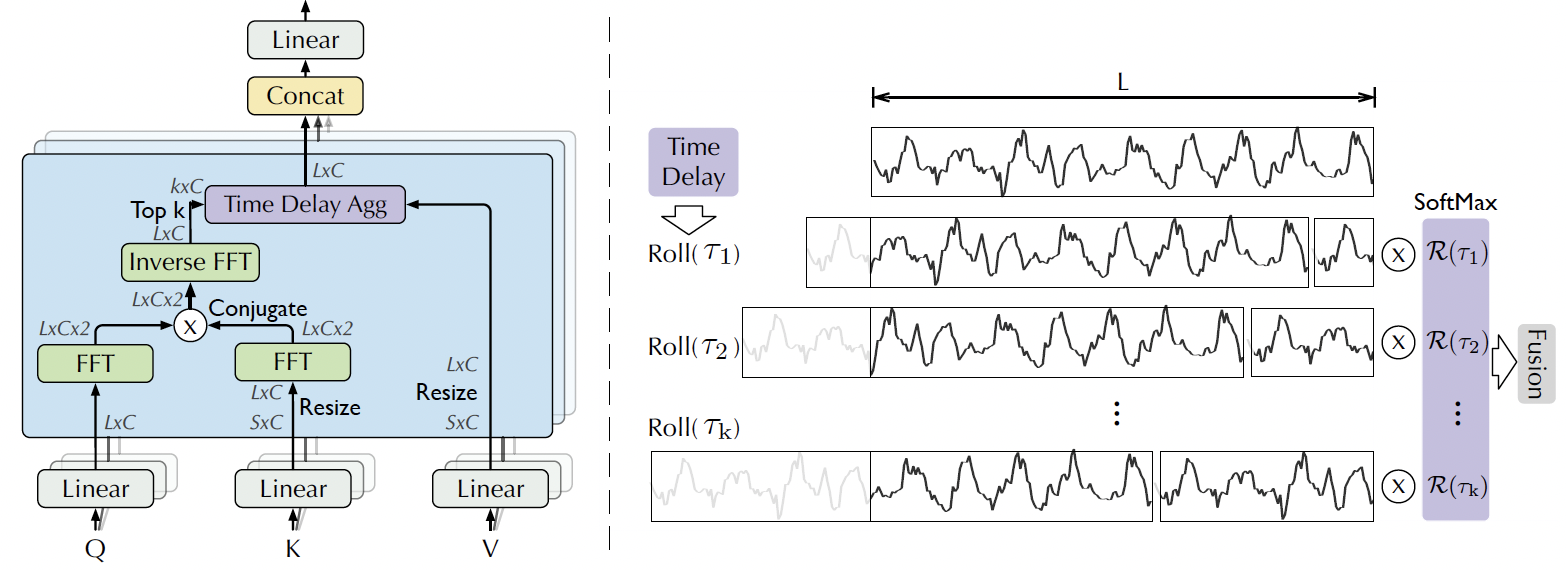
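As a rough illustration of the decomposition idea in this section, the sketch below splits a 1-D series into a trend part (a moving average) and a seasonal part (the residual). It is a NumPy simplification, not the repo's PyTorch module: the function name `series_decomp`, the kernel size of 25, and the edge-replication padding are assumptions made for this example.

```python
import numpy as np


def series_decomp(x, kernel_size=25):
    """Split a 1-D series into (seasonal, trend) using a moving average.

    Hypothetical helper for illustration; kernel_size is an assumed value.
    """
    x = np.asarray(x, dtype=float)
    # Replicate the end points so the moving average keeps the input length.
    front = np.repeat(x[:1], (kernel_size - 1) // 2)
    back = np.repeat(x[-1:], kernel_size // 2)
    padded = np.concatenate([front, x, back])
    # Moving average = convolution with a uniform kernel.
    kernel = np.ones(kernel_size) / kernel_size
    trend = np.convolve(padded, kernel, mode="valid")
    # Whatever the smooth trend does not explain is treated as seasonal.
    seasonal = x - trend
    return seasonal, trend


# Usage: a slow upward drift plus a daily-style cycle of period 24.
t = np.arange(96)
series = 0.05 * t + np.sin(2 * np.pi * t / 24)
seasonal, trend = series_decomp(series, kernel_size=25)
```

In a deep decomposition model, a block like this would be applied repeatedly inside the network so that the trend is accumulated step by step while the seasonal part is refined further.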

2. Series-wise Auto-Correlation mechanism

Inspired by stochastic process theory, we design the Auto-Correlation mechanism, which discovers period-based dependencies and aggregates information at the series level. This gives the model inherent log-linear complexity. This series-wise connection contrasts clearly with the previous self-attention family.

Figure 2. Auto-Correlation mechanism.
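The following is a minimal single-series sketch of the mechanism described above, assuming the queries, keys, and values are all the same 1-D series. It computes the autocorrelation with the FFT (Wiener-Khinchin theorem), which is where the O(L log L) cost comes from, keeps roughly c·log L of the most correlated lags, and sums rolled copies of the series weighted by a softmax over their correlation scores. The function name `auto_correlation` and the factor `c` are illustrative assumptions; the actual implementation operates on batched multi-head tensors in PyTorch.

```python
import numpy as np


def auto_correlation(x, c=1.0):
    """Toy series-wise Auto-Correlation on one 1-D series (illustrative only)."""
    x = np.asarray(x, dtype=float)
    length = x.shape[0]

    # Wiener-Khinchin: the circular autocorrelation is the inverse FFT
    # of the power spectrum. Cost is O(L log L).
    spectrum = np.fft.rfft(x)
    corr = np.fft.irfft(spectrum * np.conj(spectrum), n=length) / length

    # Keep the k most correlated lags, with k on the order of c * log(L).
    k = max(1, int(c * np.log(length)))
    lags = np.argsort(corr)[-k:][::-1]

    # Softmax over the selected correlation scores.
    scores = corr[lags]
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()

    # Time-delay aggregation: shift the series by each lag and mix.
    out = sum(w * np.roll(x, -int(lag)) for w, lag in zip(weights, lags))
    return out, lags, corr


# Usage: a pure sinusoid with period 8 over 64 steps.
t = np.arange(64)
signal = np.sin(2 * np.pi * t / 8)
aggregated, top_lags, corr = auto_correlation(signal)
```

Because query and key are the same series here, lag 0 always has the highest score; in a full model the queries and keys come from different projections, so the selected lags reflect the learned period-based dependencies.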

- Install Python 3.6, PyTorch 1.9.0.

- Download data. You can obtain all six benchmarks from Tsinghua Cloud or Google Drive. All the datasets are pre-processed and ready to use.

- Train the model. We provide the experiment scripts for all benchmarks under the folder ./scripts. You can reproduce the experiment results by running:

bash ./scripts/ETT_script/Autoformer_ETTm1.sh

bash ./scripts/ECL_script/Autoformer.sh

bash ./scripts/Exchange_script/Autoformer.sh

bash ./scripts/Traffic_script/Autoformer.sh

bash ./scripts/Weather_script/Autoformer.sh

bash ./scripts/ILI_script/Autoformer.sh

- Specially designed implementation

  - Speedup Auto-Correlation: We built the Auto-Correlation mechanism as a batch-normalization-style block to make it more memory-access friendly. See the paper for details.

  - Without the position embedding: Since the series-wise connection inherently preserves the sequential information, Autoformer does not need a position embedding, unlike Transformers.

We experiment on six benchmarks, covering five mainstream applications. We compare our model with ten baselines, including Informer, N-BEATS, etc. Generally, in the long-term forecasting setting, Autoformer achieves SOTA, with a 38% relative improvement over previous baselines.

We will keep adding series forecasting models to expand this repo:

- Autoformer

- Informer

- Transformer

- LogTrans

- Reformer

- N-BEATS

If you find this repo useful, please cite our paper.

@inproceedings{wu2021autoformer,
  title={Autoformer: Decomposition Transformers with {Auto-Correlation} for Long-Term Series Forecasting},
  author={Haixu Wu and Jiehui Xu and Jianmin Wang and Mingsheng Long},
  booktitle={Advances in Neural Information Processing Systems},
  year={2021}
}

If you have any questions or want to use the code, please contact [email protected].

We greatly appreciate the following GitHub repos for their valuable code base or datasets:

https://github.com/zhouhaoyi/Informer2020