Dockerized chat, based on my previous project SocketIOChatDemo, an example web application that uses the socket.io library.

This repository is an example of a dockerized application with multiple services, such as a database and a backend. I personally use this project in demos of different technologies like Kubernetes or Docker.

This project tries to follow a microservices architecture: both the basic and the advanced setup consist of multiple services that run in separate containers.

In order to provide a realistic demo, this application has the following features:

- Database Persistence: all messages and users are stored persistently in a database.

- Session Management: whenever the name is entered, a session is created and managed using the NodeJS module Express Session. Additionally, in order to keep sessions persistent while scaling our services, sessions are stored in the database using MongoStore.

- Websocket Support: being a chat application, it needs some kind of real-time communication between the clients and the server. For this, SocketIO is used as the WebSocket library. When scaling services that use WebSockets there are 2 things to take care of:
  - Session affinity: the load balancer must keep routing a client to the same replica so that the WebSocket handshake can be established between the two.
  - Message replication: once the handshake is established, messages need to be replicated through all replicas using a queue that communicates all of them.


- Storage Management: users can upload a profile photo, which implies handling file uploads and storage management. When scaling services, storage management becomes more complicated because all replicas need to access the same filesystem. This is solved in 2 ways:
  - Local volume: when using docker-compose in a local environment, this can be solved by creating a shared Docker volume between replicas.
  - Distributed filesystem: when deploying the application in a real-life environment, replicas will be distributed, so sharing a local volume becomes impossible. For this, distributed filesystems are the best solution; in this example GlusterFS is used.
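The session affinity requirement from the WebSocket item above can be illustrated with a small, dependency-free sketch. It is not how this project or any particular load balancer implements affinity (real proxies usually rely on a cookie that names the replica); it only shows the property that matters: the same session always lands on the same replica. The replica names and the `pickReplica` helper are hypothetical.

```javascript
'use strict';
const crypto = require('crypto');

// Hypothetical replica names; a real load balancer discovers these itself.
const replicas = ['app_1', 'app_2', 'app_3'];

// Map a session ID to one replica, always the same one for the same ID.
function pickReplica(sessionId, replicaList) {
  const digest = crypto.createHash('sha256').update(sessionId).digest();
  // Interpret the first 4 bytes of the hash as an unsigned integer.
  const index = digest.readUInt32BE(0) % replicaList.length;
  return replicaList[index];
}

const first = pickReplica('session-abc', replicas);
const second = pickReplica('session-abc', replicas);
console.log(first === second); // true
```

Note that a plain modulo mapping reshuffles most sessions when the number of replicas changes, which is one reason cookie-based affinity is a common choice in practice.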

This is a basic demo of the chat working:

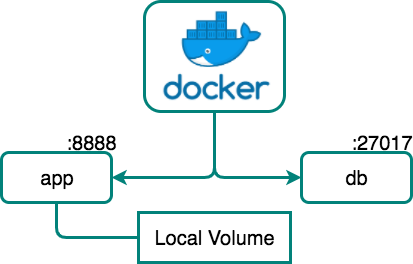

The basic setup adds to the SocketIOChatDemo a database where all messages will be stored. This setup assumes that no service will be replicated. The resulting system has the following modules and is described in docker-compose.yaml.

- Web (app): NodeJS server containing all the business logic and the features mentioned above. It uses the official NodeJS image as base image.

- Database (db): MongoDB database. It uses the official MongoDB image with an additional startup script that sets up users in order to have a secured database (using the MONGO_DB_APP_PASSWORD, MONGO_DB_APP_USERNAME and MONGO_DB_APP_DATABASE environment variables).
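As a rough illustration of how the web service could consume the credentials listed above, the following sketch builds a MongoDB connection URI from those environment variables. The `buildMongoUri` helper, the default host name `db` and the port 27017 are assumptions made for this example, not something taken from the project's code.

```javascript
'use strict';

// Build a MongoDB connection URI from the environment variables named above.
// The host name "db" and port 27017 are assumptions for this sketch.
function buildMongoUri(env, host = 'db', port = 27017) {
  // Credentials are percent-encoded so special characters stay valid in a URI.
  const user = encodeURIComponent(env.MONGO_DB_APP_USERNAME);
  const password = encodeURIComponent(env.MONGO_DB_APP_PASSWORD);
  const database = env.MONGO_DB_APP_DATABASE;
  return `mongodb://${user}:${password}@${host}:${port}/${database}`;
}

// Example values only; real credentials come from the container environment.
const uri = buildMongoUri({
  MONGO_DB_APP_USERNAME: 'chat',
  MONGO_DB_APP_PASSWORD: 'p@ss',
  MONGO_DB_APP_DATABASE: 'chatdb',
});
console.log(uri); // mongodb://chat:p%40ss@db:27017/chatdb
```

In a running container the function would typically be called with `process.env` instead of a literal object.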

$ git clone https://github.com/ageapps/docker-chat

$ cd docker-chat

$ docker-compose up

# connect in your browser to <host IP>:8888

# run mongo service

$ docker run -v "$(pwd)"/database:/data --name mongo_chat_db -d mongo mongod --smallfiles

# run docker-chat image

$ docker run -d --name node_chat_server -v "$(pwd)"/database:/data --link mongo_chat_db:db -p 8080:4000 ageapps/docker-chat

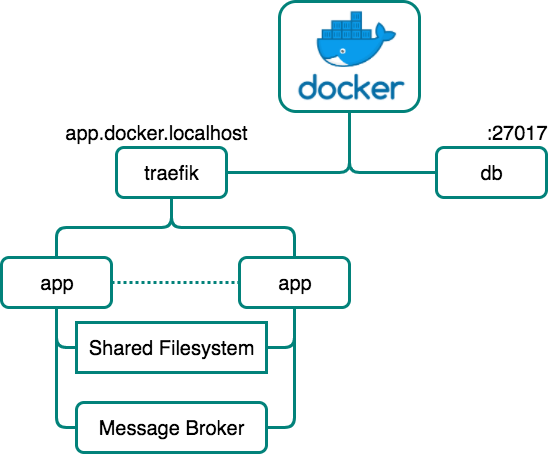

# connect in your browser to <host IP>:8888

The advanced setup tries to set up the application in a real deployment scenario. This deployment is distributed and aims for a highly available system, so the architecture and setup are more complex than in the basic one. For the advanced setup, the environment variable SCALABLE needs to be set to true.

Two deployment options are used to deploy the container-based system as a distributed system:

- Using docker-compose: any service can be scaled easily, and scaling is natively supported by Docker.

- Using Kubernetes: K8s is currently one of the most used container orchestration tools, due to its versatility and high performance.

- Web (app): NodeJS server containing all the business logic and the features mentioned above. It uses the official NodeJS image as base image.

- Database (db): MongoDB database. It uses the official MongoDB image with an additional startup script that sets up users in order to have a secured database (using the MONGO_DB_APP_PASSWORD, MONGO_DB_APP_USERNAME and MONGO_DB_APP_DATABASE environment variables).

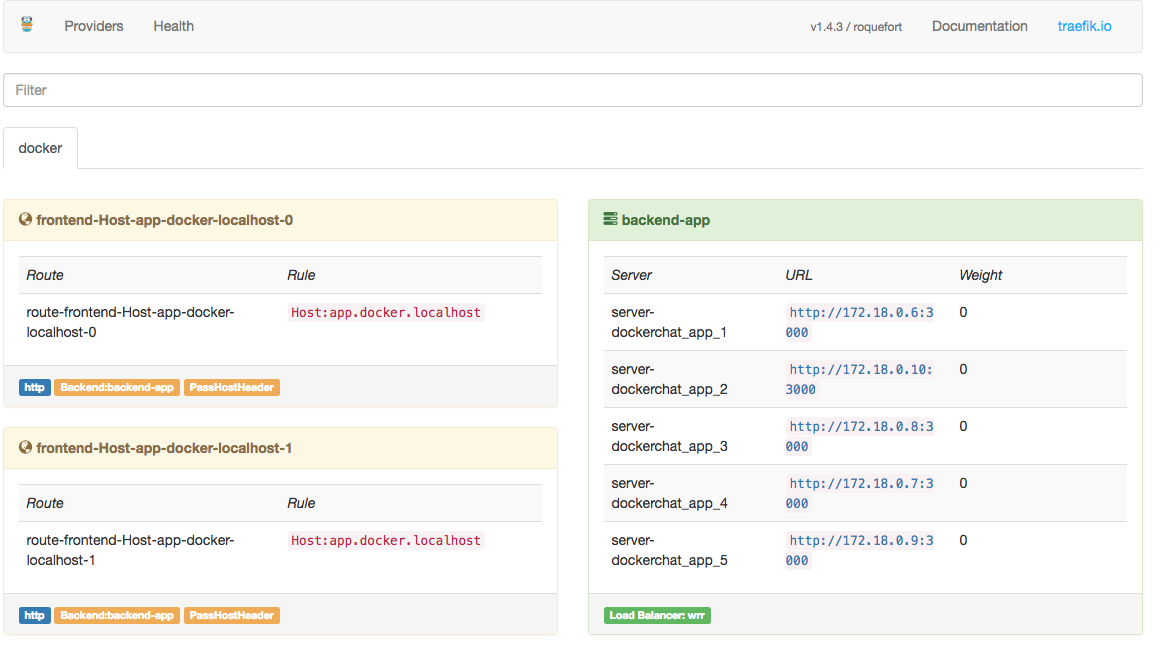

- Load Balancer (proxy): traefik proxy. This service is only used when deploying with docker-compose. The load balancer is needed to handle service replication and balance traffic between the replicas, as well as to provide session affinity for WebSocket support. It uses the official traefik image and is the access point to the system. A management interface is available on localhost, port 8080.

- Message Broker (redis/rabbit/nats): this service is needed in order to scale WebSockets. The application supports 3 message brokers, which are attached to the SocketIO library as adapters:

  - Redis: Redis's publish/subscribe service can be used as the message broker, through the Redis Adapter. To connect to the Redis service, set the REDIS_HOST environment variable. NOTE: Redis has an extra limitation: all messages used by the publish/subscribe service have to be strings, so the PARSE_MSG environment variable has to be added.

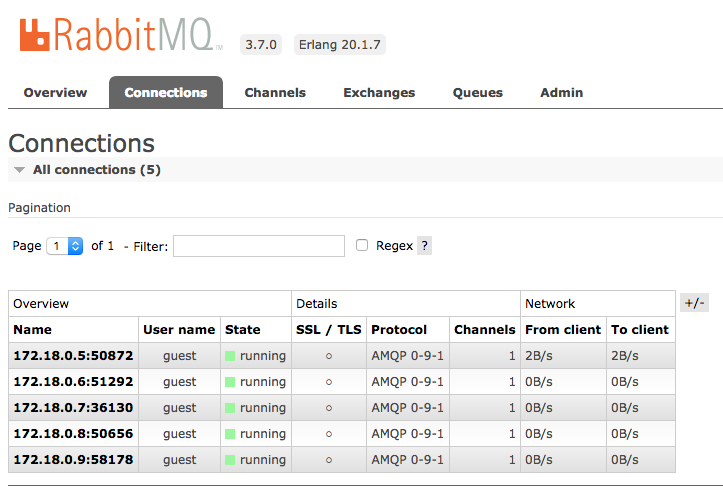

  - RabbitMQ: RabbitMQ can be used as the message broker, through the Rabbit Adapter. To connect to the RabbitMQ service, set the RABBIT_HOST environment variable. RabbitMQ has a management web interface, which is accessible on localhost, port 15672.

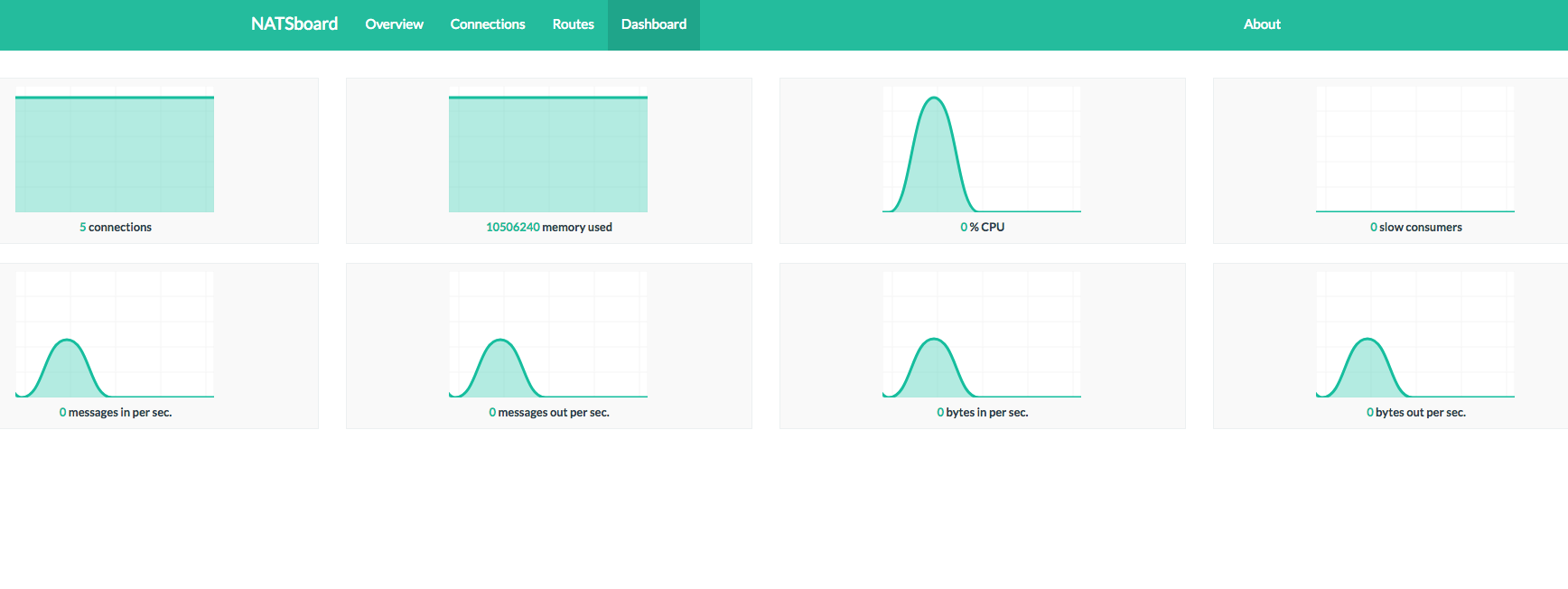

  - NATS: NATS can be used as the message broker, through the NATS Adapter. To connect to the NATS service, set the NATS_HOST environment variable. There is a NATS dashboard, which can be accessed on localhost, port 3000.
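To make the role of the message broker more concrete, here is a minimal sketch of broker selection and message replication. It uses an in-process `EventEmitter` as a stand-in for a real publish/subscribe broker, so it does not use the actual SocketIO adapters. The `selectBroker` and `createReplica` helpers, the precedence order between brokers and the value `'true'` for PARSE_MSG are all assumptions made for illustration.

```javascript
'use strict';
const { EventEmitter } = require('events');

// Decide which broker is configured from the environment variables above.
// The precedence order (Redis, RabbitMQ, NATS) is an assumption of this sketch.
function selectBroker(env) {
  if (env.REDIS_HOST) return { type: 'redis', host: env.REDIS_HOST };
  if (env.RABBIT_HOST) return { type: 'rabbit', host: env.RABBIT_HOST };
  if (env.NATS_HOST) return { type: 'nats', host: env.NATS_HOST };
  return null; // no broker configured: single-replica setup
}

// In-process stand-in for a publish/subscribe broker.
const bus = new EventEmitter();

// A replica records every message it receives from the shared bus.
// With parseMsg enabled, payloads travel as JSON strings.
function createReplica(name, parseMsg) {
  const delivered = [];
  bus.on('chat', (payload) => {
    delivered.push(parseMsg ? JSON.parse(payload) : payload);
  });
  return {
    name,
    delivered,
    publish(message) {
      bus.emit('chat', parseMsg ? JSON.stringify(message) : message);
    },
  };
}

// Example environment; the 'true' value format is an assumption.
const env = { REDIS_HOST: 'redis', PARSE_MSG: 'true' };
const broker = selectBroker(env);
const a = createReplica('app_1', env.PARSE_MSG === 'true');
const b = createReplica('app_2', env.PARSE_MSG === 'true');

// A message published by one replica reaches every replica.
a.publish({ user: 'ana', text: 'hello' });
console.log(broker.type, b.delivered[0].text); // redis hello
```

The point of the sketch is that a client connected to `app_2` still sees a message that entered the system through `app_1`, which is what the adapters provide across containers.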

Deploying the system with docker-compose requires an additional configuration step. The traefik proxy needs a DNS name in order to provide session affinity. The DNS name chosen is app.docker.localhost, and it has to be added to the /etc/hosts file:

...

127.0.0.1 app.docker.localhost

...

Download the source code:

$ git clone https://github.com/ageapps/docker-chat

$ cd docker-chat

Deploy with any of the proposed configurations:

# Redis configuration

$ docker-compose -f docker-compose.redis.yaml up

# Rabbit configuration

$ docker-compose -f docker-compose.rabbit.yaml up

# Nats configuration

$ docker-compose -f docker-compose.nats.yaml up

# connect in your browser to app.docker.localhost

# connect in your browser to <host IP>:8888 for the configuration panel

Scale the app service:

# Redis configuration

$ docker-compose -f docker-compose.redis.yaml scale app=5

# Rabbit configuration

$ docker-compose -f docker-compose.rabbit.yaml scale app=5

# Nats configuration

$ docker-compose -f docker-compose.nats.yaml scale app=5

Once kubectl is set up and connected to your cluster, follow these steps to deploy the application:

There are global configurations needed for deployment, like database credentials.

$ kubectl apply -f k8s/global-config.yaml

The remaining manifests cover the rest of the services used in the system.

Before deploying any service, a message broker has to be chosen. Once this is done, configure it in the k8s/docker-chat/docker-chat.yaml file by uncommenting the configuration for that message broker.

###### MESSAGE BROKERS ########
# # REDIS
# - name: REDIS_HOST
#   valueFrom:
#     configMapKeyRef:
#       name: global-config
#       key: app.redis_host
# RABBIT
- name: RABBIT_HOST
  valueFrom:
    configMapKeyRef:
      name: global-config
      key: app.rabbit_host
# # NATS
# - name: NATS_HOST
#   valueFrom:
#     configMapKeyRef:
#       name: global-config
#       key: app.nats_host

$ kubectl apply -f k8s/mongo

# Depending on chosen broker

$ kubectl apply -f k8s/rabbit

$ kubectl apply -f k8s/redis

$ kubectl apply -f k8s/nats

# Main service

$ kubectl apply -f k8s/docker-chat

As mentioned in the services section, since WebSockets are used, the load balancer service of our system needs session affinity. An example using the NGINX Ingress Controller is provided in /k8s/ingress. You can find more information about the ingress controller, and other examples, in the ingress documentation.

After following these instructions, the ingress has to be configured.

$ kubectl apply -f k8s/ingress/app-ingress.yaml

Assuming a GlusterFS cluster is already deployed (here is a great example of how to do it), let's mount it as a volume in our containers. There are some considerations to take care of in order to use the GlusterFS feature. First, the cluster IP addresses should be added to the glusterfs/glusterfs.yaml file:

kind: Endpoints
apiVersion: v1
metadata:
  name: glusterfs
subsets:
- addresses:
  - ip: XXXXX
  ports:
  - port: 1
- addresses:
  - ip: XXXXX
  ports:
  - port: 1

Additionally, the commented lines related to the GlusterFS volume mount need to be uncommented:

spec:
  # volumes:
  # - name: glusterfs-content
  #   glusterfs:
  #     endpoints: glusterfs-cluster
  #     path: kube-vol
  #     readOnly: true
  containers:
  - name: "docker-chat"
    image: "ageapps/docker-chat:app"
    imagePullPolicy: Always
    ports:
    - containerPort: 3000
    command: ["bash", "-c", "nodemon ./bin/www"]
    # volumeMounts:
    # - name: glusterfs-content
    #   mountPath: /uploads

Finally, update all services and deploy the needed ones. You can inspect the content inside the cluster by deploying the browser service.

$ kubectl apply -f k8s/glusterfs

# Main service

$ kubectl apply -f k8s/docker-chat

# Browser service

$ kubectl apply -f k8s/browser

- Docker: Software containerization platform.

- SocketIOChatDemo: Chat web application.

- NodeJS: Server environment.

- MongoDB: NoSQL database system.

- mongoose: MongoDB object modeling for node.js.

- docker-build: Automated build of Docker images.

- docker-compose: Automated configuration and run of multi-container Docker applications.

- Kubernetes: Open-source system for automating deployment, scaling, and management of containerized applications.