
This example belongs to the official Azure MLOps repo. The objective of this scenario is to build your own YoloV3 training workflow with MLOps tasks. This sample shows you how to operationalize your machine learning development cycle with Azure Machine Learning Service as the compute target, using TensorFlow 2.0 and the YoloV3 architecture, and leveraging Azure DevOps Pipelines as the orchestrator for the whole flow.

By running this project, you will have the opportunity to work with Azure workloads, such as:

| Technology | Objective/Reason |
|---|---|
| Azure DevOps | The platform to help you implement DevOps practices on your scenario |
| Azure Machine Learning Service | Manage Machine Learning models with the power of Azure |
| Tensorflow 2.0 | Use its power for training models |
| YoloV3 | Deep Learning Architecture model for Object Detection |

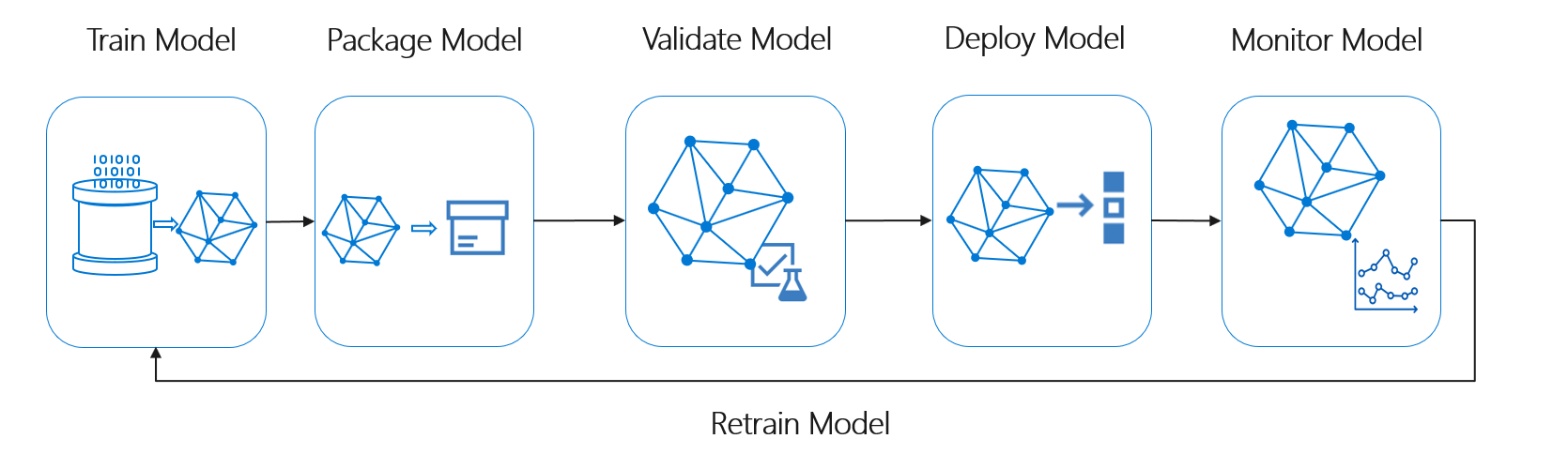

MLOps empowers data scientists and app developers to help bring ML models to production. MLOps enables you to track / version / audit / certify / re-use every asset in your ML lifecycle and provides orchestration services to streamline managing this lifecycle.

Check out the recent TwiML podcast on MLOps here

Azure ML contains a number of asset management and orchestration services to help you manage the lifecycle of your model training & deployment workflows.

With Azure ML + Azure DevOps you can effectively and cohesively manage your datasets, experiments, models, and ML-infused applications.

- Azure DevOps Machine Learning extension

- Azure ML CLI

- Create event driven workflows using Azure Machine Learning and Azure Event Grid for scenarios such as triggering retraining pipelines

- Set up model training & deployment with Azure DevOps

If you are using the Machine Learning DevOps extension, you can access model name and version info using these variables:

- Model Name: `Release.Artifacts.{alias}.DefinitionName` contains the model name.

- Model Version: `Release.Artifacts.{alias}.BuildNumber` contains the model version, where `{alias}` is the source alias set when adding the release artifact.
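As a minimal sketch of how a release script might read these two values: Azure Pipelines exposes variables to scripts as environment variables, with the name upper-cased and each `.` replaced by `_`. The helper name `model_info_from_release` and the alias `mymodel` are hypothetical, and the sketch assumes the alias contains only letters, digits and underscores.

```python
import os
from typing import Mapping, Tuple


def model_info_from_release(
    alias: str, env: Mapping[str, str] = os.environ
) -> Tuple[str, str]:
    """Return (model_name, model_version) for a release artifact alias.

    Hypothetical helper: it only applies the documented name mapping
    (upper-case, "." -> "_") to the two release variables.
    """
    prefix = f"Release.Artifacts.{alias}".upper().replace(".", "_")
    name = env[f"{prefix}_DEFINITIONNAME"]
    version = env[f"{prefix}_BUILDNUMBER"]
    return name, version


if __name__ == "__main__":
    # Simulated environment; in a real release job these come from the agent.
    fake_env = {
        "RELEASE_ARTIFACTS_MYMODEL_DEFINITIONNAME": "yolov3",
        "RELEASE_ARTIFACTS_MYMODEL_BUILDNUMBER": "7",
    }
    print(model_info_from_release("mymodel", fake_env))
```

Passing the environment as a parameter keeps the helper easy to test outside a release job.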

An example repo which exercises our recommended flow can be found here

- Data scientists work in topic branches off of master.

- When code is pushed to the Git repo, trigger a CI (continuous integration) pipeline.

- First run: Provision infra-as-code (ML workspace, compute targets, datastores).

- For new code: Every time new code is committed to the repo, run unit tests, data quality checks, train model.

We recommend the following steps in your CI process:

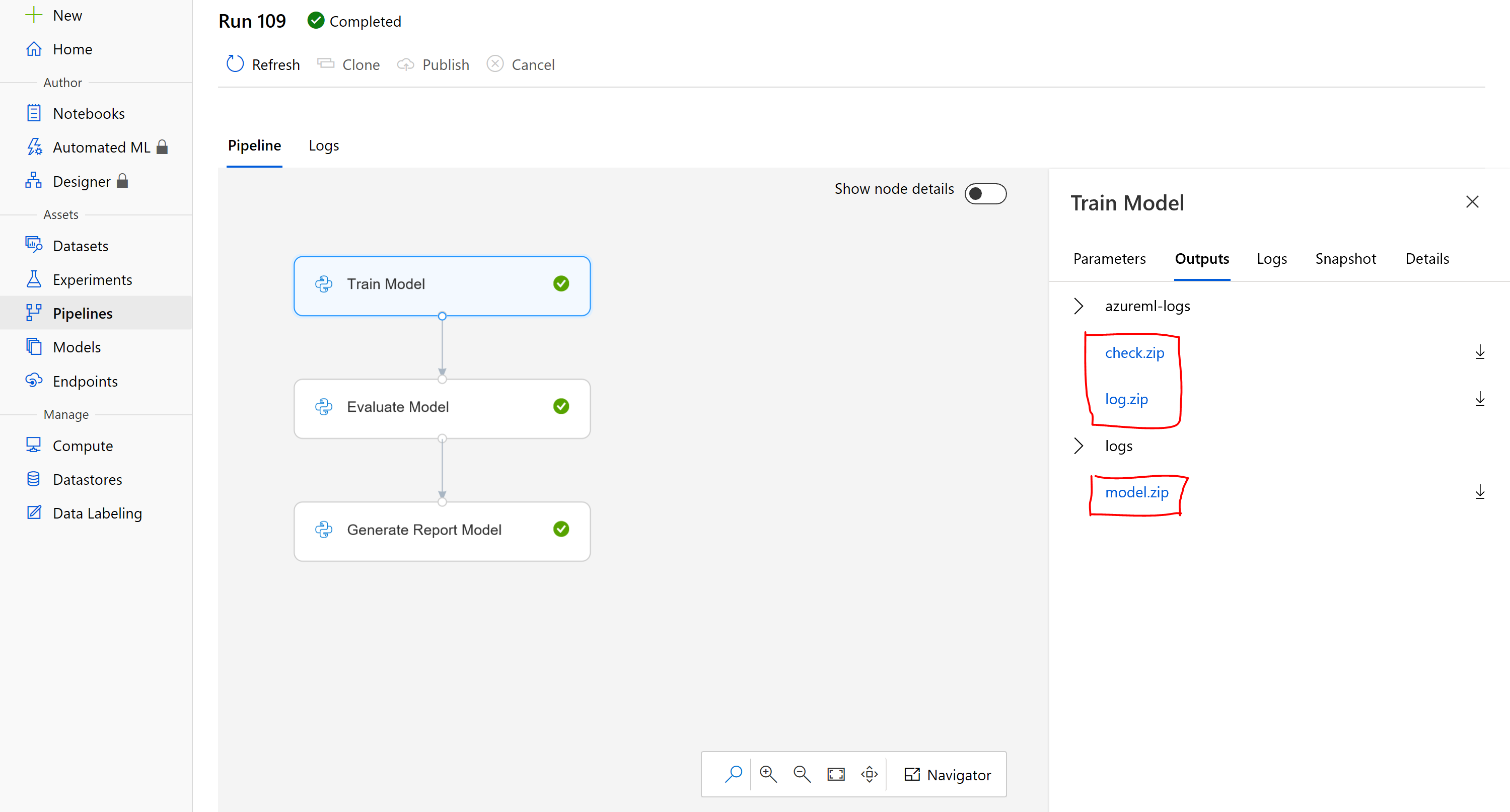

- Train Model - run training code / algo & output a model file which is stored in the run history.

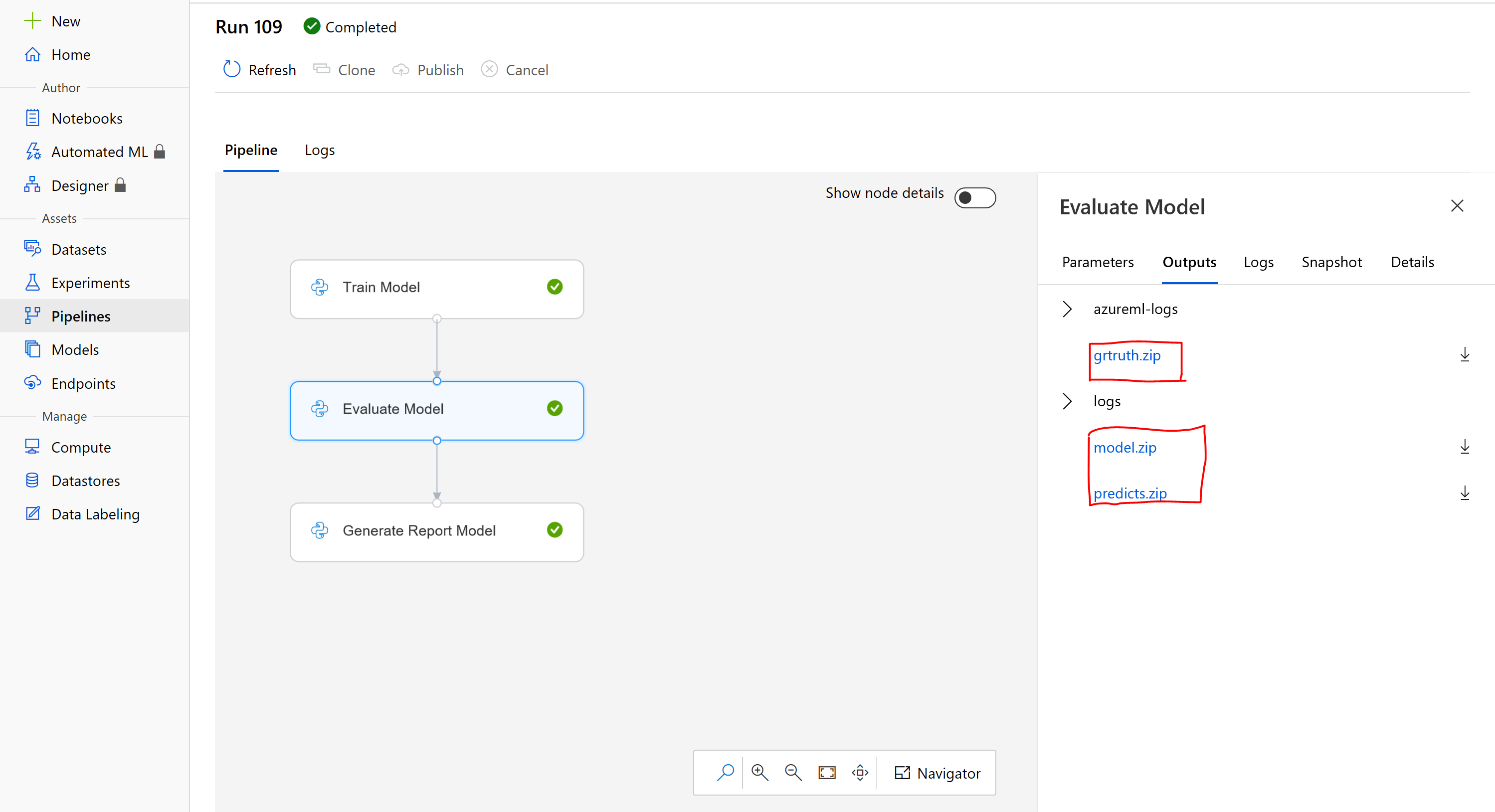

- Evaluate Model - compare the performance of newly trained model with the model in production. If the new model performs better than the production model, the following steps are executed. If not, they will be skipped.

- Register Model - take the best model and register it with the Azure ML Model registry. This allows us to version control it.
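The evaluate-then-register gate described above can be sketched as a small comparison function. This is an illustration under assumptions, not the repo's implementation: the function name `should_register`, the use of a single scalar metric, and the example values are all hypothetical.

```python
from typing import Optional


def should_register(
    new_metric: float,
    production_metric: Optional[float],
    higher_is_better: bool = True,
) -> bool:
    """Decide whether the newly trained model should be registered.

    production_metric is None when no model is in production yet,
    in which case the new model is always registered.
    """
    if production_metric is None:
        return True
    if higher_is_better:
        return new_metric > production_metric
    return new_metric < production_metric


if __name__ == "__main__":
    # Hypothetical scores, e.g. a detection accuracy metric.
    if should_register(new_metric=0.62, production_metric=0.58):
        print("New model is better: continue with registration")
    else:
        print("New model is not better: skip the remaining steps")
```

A strict comparison means a model that merely ties with production is not registered, which avoids creating new versions without a measurable gain.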

.

├── .pipelines # Continuous integration

├── code # Source directory

├── docs # Docs and readme info

├── environment_setup

├── .gitignore

└── README.md

- Active Azure subscription

- At least contributor access to Azure subscription

- Permissions on the Azure DevOps project, at least as contributor.

- Conda set up

To create the virtual environment, Anaconda must be installed on your computer. It can be downloaded from this link.

This project uses two virtual environments: one with all the packages for the person module, and another with the packages for the PPE module.

The requirements.txt file, which contains all the required dependencies, is used to create the virtual environment.

To create the environment, first you will need to create a conda environment:

Go to code\ppe\experiment\ml_service\pipelines\environment_ppe.yml

conda create --name <environment_name>

Once the environment is created, to activate it:

conda activate <environment_name>

To deactivate the environment:

conda deactivate

This pipeline updates the Docker image whenever the Dockerfile changes and creates an artifact with the IoT manifest, in which the ACR username, password and Docker image name are filled in automatically with the values specified in the DevOps Library.

This pipeline will automatically create the resource group and the services needed in the subscription that will be specified in the cloud_environment.json file located in the environment_setup/arm_templates folder.

This pipeline is triggered automatically when a change is made to the ARM template.

MLOps will help you understand how to build the continuous integration and continuous delivery pipelines for an ML/AI project. We will use Azure DevOps for the build and release/deployment pipelines, along with Azure ML services for the model retraining pipeline, model management and operationalization.

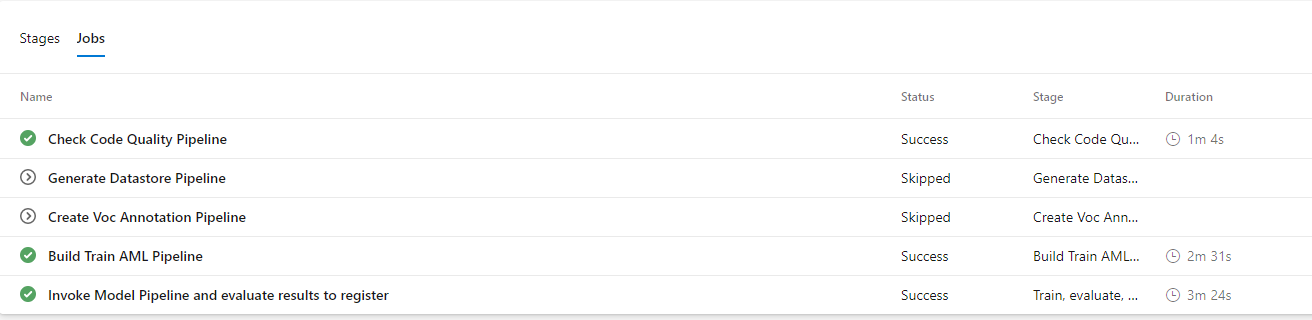

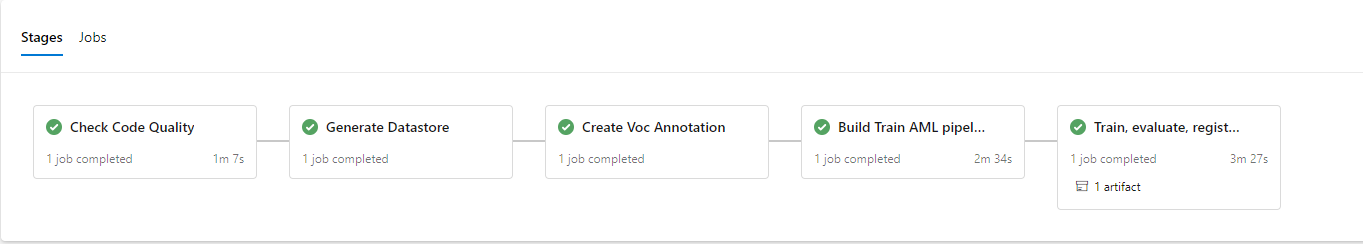

This template contains code and pipeline definitions for a machine learning project, demonstrating how to automate an end-to-end ML/AI workflow. The build pipelines include DevOps tasks for quality checks, generating/updating the datastore in the AML resource, generating Pascal VOC annotations, model training on different compute targets, model version management, model evaluation/model selection, and model deployment embedded in an IoT module (Edge).
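To make the Pascal VOC annotation step more concrete, here is a minimal sketch that builds one annotation file with the Python standard library. It follows the commonly used Pascal VOC XML layout (`annotation`, `size`, `object`, `bndbox`); the function name `build_voc_annotation`, the file name and the `helmet` label are hypothetical and do not come from this repo.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

# (label, xmin, ymin, xmax, ymax) in pixel coordinates
Box = Tuple[str, int, int, int, int]


def build_voc_annotation(
    filename: str, width: int, height: int, boxes: Iterable[Box], depth: int = 3
) -> str:
    """Return a Pascal VOC style XML annotation as a string."""
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename

    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)

    for label, xmin, ymin, xmax, ymax in boxes:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = label
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in (
            ("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)
        ):
            ET.SubElement(bndbox, tag).text = str(value)

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    xml_text = build_voc_annotation(
        "frame_0001.jpg", 640, 480, [("helmet", 40, 30, 120, 110)]
    )
    print(xml_text)
```

Each image gets one XML file, and each labelled object in it becomes one `object` element with its bounding box.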

There are two continuous integration (CI) pipelines: one where all the infrastructure is set up (CI-IaC), and another more specific to AI projects (CI-MLOps).

Any of these pipelines can be triggered manually. To do so, go to the Azure DevOps portal, click Pipelines > Pipelines, select the desired pipeline and click Run pipeline.

During continuous integration an artifact is created that will later be released during continuous deployment.

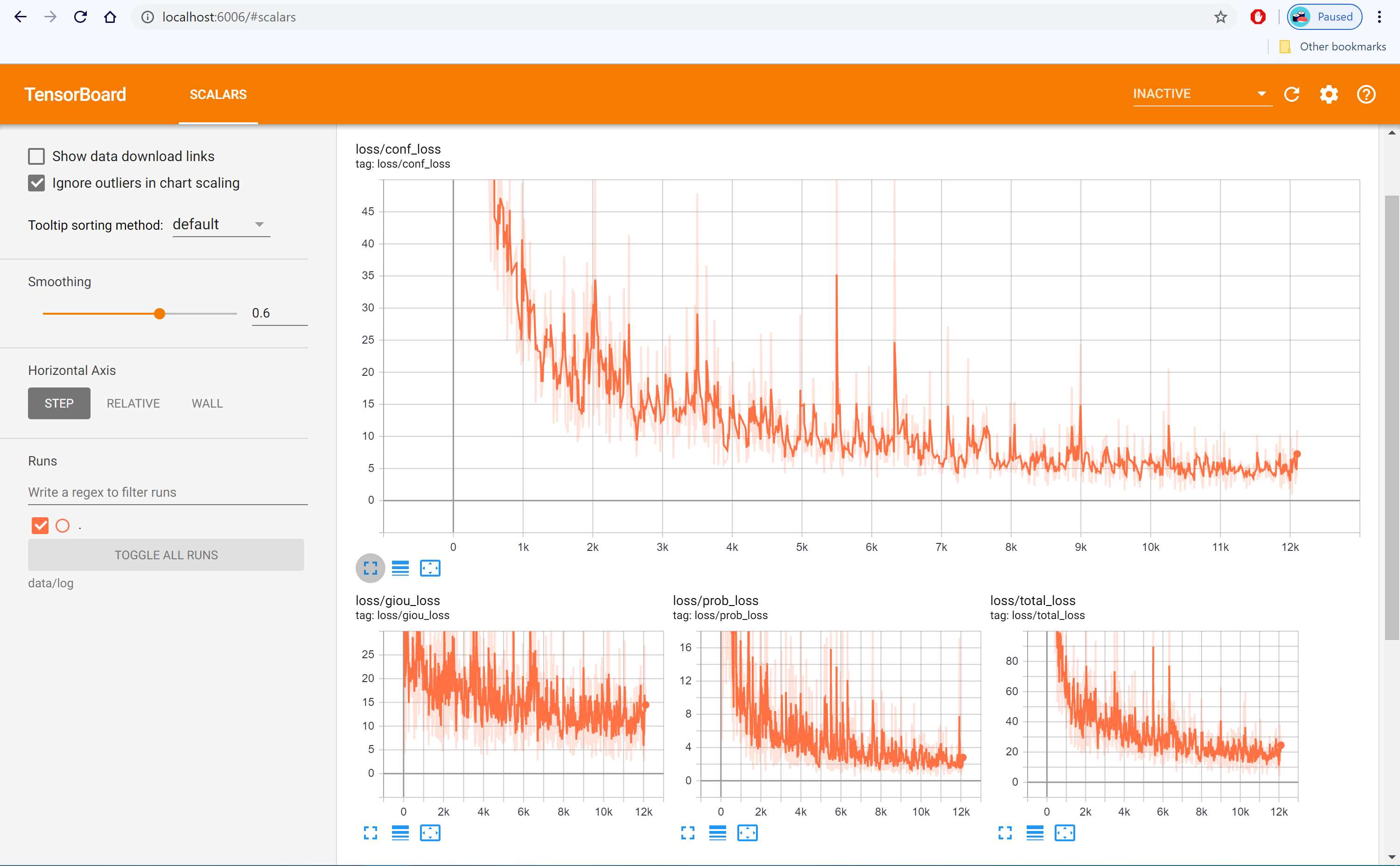

- model.zip -> Zip with saved_model.pb and its variables files

- log.zip -> Zip with TensorFlow run logs. Download it and use TensorBoard to view the progress of your training locally:

  - Run `tensorboard --logdir=data/log`

  - Go to http://localhost:6006/

- checkpoints -> Zip with weights of the Tensorflow model

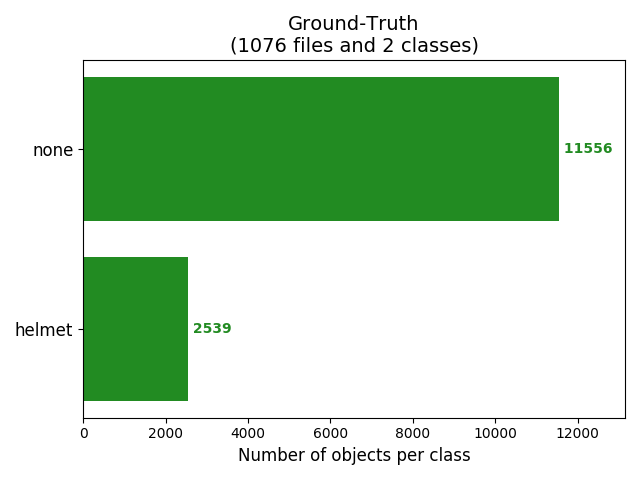

- grtruth.zip -> Zip with ground truth detections

- predicted.zip -> Zip with predicted detections

- model.zip -> Zip with saved_model.pb with variables files
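Ground-truth and predicted detections like the ones in the artifacts above are typically compared box by box using intersection over union (IoU). The sketch below shows that calculation only; it is an assumption about how such detections could be scored, not the evaluation code used by this pipeline, and the `(xmin, ymin, xmax, ymax)` box format is likewise assumed.

```python
from typing import Tuple

# (xmin, ymin, xmax, ymax) in pixel coordinates
Box = Tuple[float, float, float, float]


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap, clamped to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    ground_truth = (0.0, 0.0, 10.0, 10.0)
    predicted = (5.0, 0.0, 15.0, 10.0)
    print(f"IoU = {iou(ground_truth, predicted):.3f}")
```

A prediction is usually counted as correct when its IoU with a ground-truth box of the same class exceeds a chosen threshold; the threshold value is a project decision.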

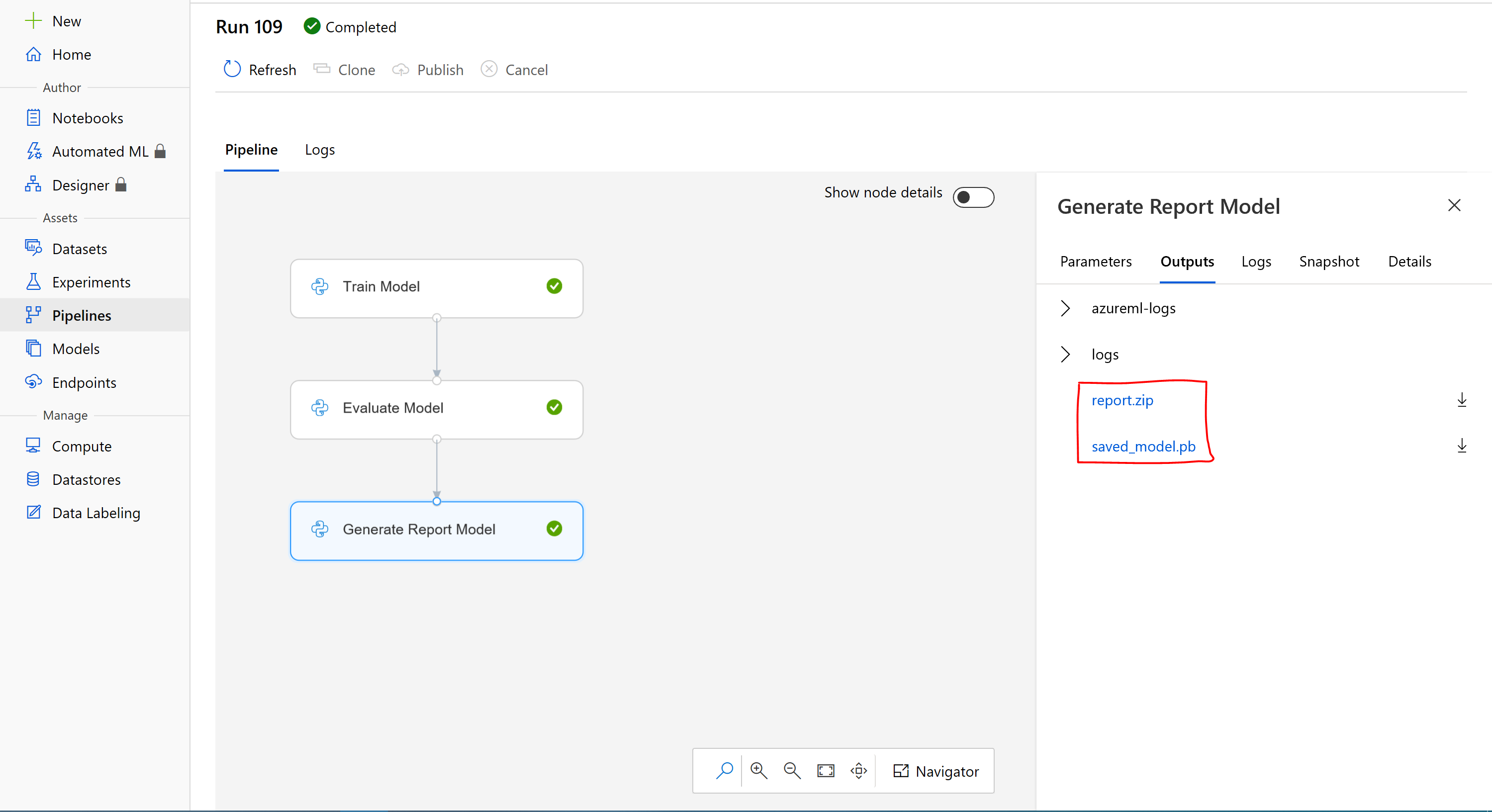

- saved_model.pb -> Tensorflow model

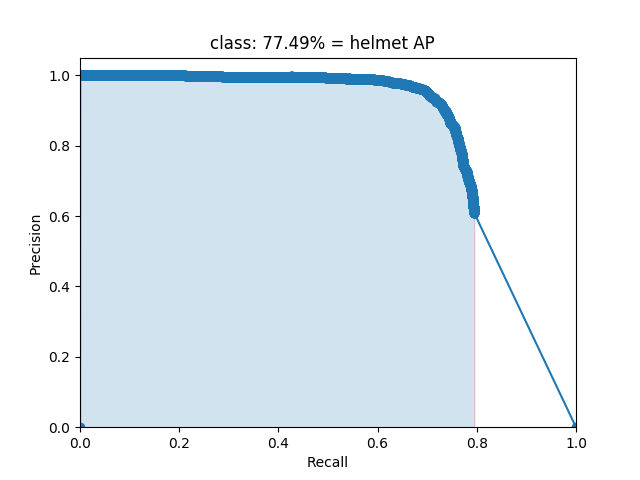

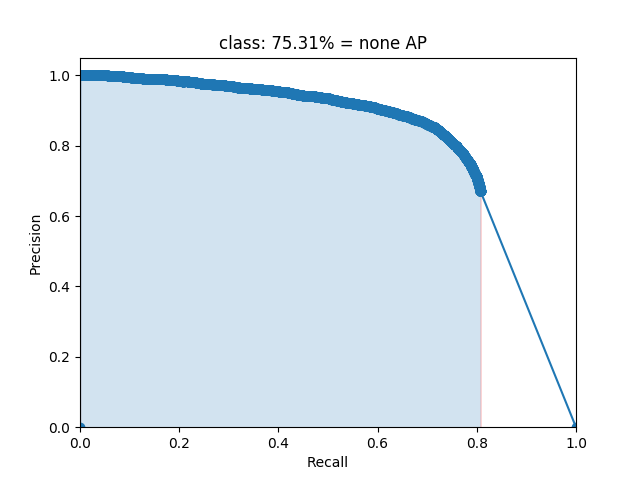

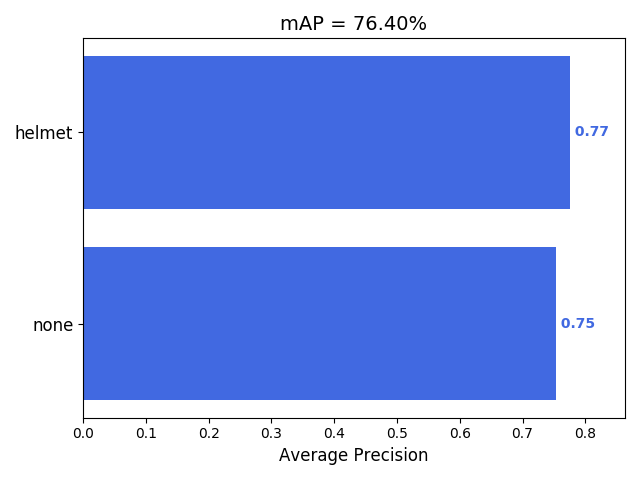

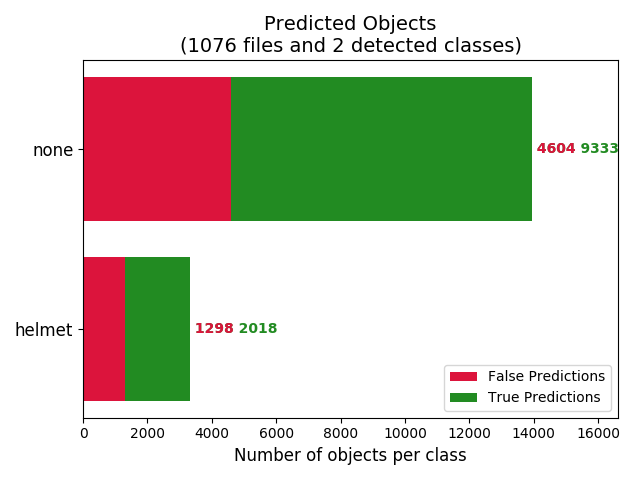

- report.zip -> Zip with metrics results and plots