DeBERTa-ELL is a natural language processing (NLP) project that fine-tunes DeBERTa (Decoding-enhanced BERT with Disentangled Attention) to automatically score the English proficiency of high school English Language Learners (ELLs) from their essays. The project aims to give educators and researchers in second language acquisition and assessment a reliable, efficient, and scalable way to assess student writing.

Key features:

- Uses the DeBERTa-v3-base model as the text encoder
- Scores six aspects of language proficiency:
  - Cohesion
  - Syntax
  - Vocabulary
  - Phraseology
  - Grammar
  - Conventions
- Uses multi-label stratified k-fold cross-validation for robust model evaluation
- Supports both training and inference modes
- Includes data preprocessing and augmentation techniques
- Provides detailed logging and model checkpointing
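Multi-label stratification tries to keep the distribution of every label roughly equal across folds. Because the six ELL scores are ordinal values rather than binary labels, one common approach (assumed here, not confirmed from the repository) is to treat each (aspect, score) pair, such as `("grammar", 3.5)`, as its own binary label. The sketch below implements the iterative stratification procedure of Sechidis et al. (2011) in plain Python; the names `TARGETS`, `to_label_sets`, and `iterative_stratification` are hypothetical and not taken from the project's code.

```python
import random
from collections import defaultdict

# The six analytic scores assessed by the model (hypothetical constant name).
TARGETS = ["cohesion", "syntax", "vocabulary", "phraseology", "grammar", "conventions"]


def to_label_sets(rows):
    """Turn each essay's six scores into binary labels such as ("grammar", 3.5)."""
    return [{(t, row[t]) for t in TARGETS} for row in rows]


def iterative_stratification(labels, n_splits=4, seed=42):
    """Return one fold id per sample, spreading every label evenly across folds.

    labels: list of sets; labels[i] holds the hashable labels of sample i.
    """
    rng = random.Random(seed)
    n = len(labels)
    # How many more samples each fold should receive (equal-sized folds).
    fold_need = [n / n_splits] * n_splits
    # How many more samples carrying each label each fold should receive.
    totals = defaultdict(int)
    for sample_labels in labels:
        for lab in sample_labels:
            totals[lab] += 1
    label_need = {lab: [c / n_splits] * n_splits for lab, c in totals.items()}

    folds = [-1] * n
    remaining = set(range(n))
    while True:
        # Group the still-unassigned samples by label.
        by_label = defaultdict(list)
        for i in sorted(remaining):
            for lab in labels[i]:
                by_label[lab].append(i)
        if not by_label:
            break  # only unlabeled samples (if any) are left
        # Handle the rarest remaining label first; it is the hardest to balance.
        lab = min(by_label, key=lambda lb: (len(by_label[lb]), str(lb)))
        for i in by_label[lab]:
            need = label_need[lab]
            # Prefer folds that most need this label, then folds that need the most samples.
            best = max(need)
            cands = [j for j in range(n_splits) if need[j] == best]
            top = max(fold_need[j] for j in cands)
            cands = [j for j in cands if fold_need[j] == top]
            j = rng.choice(cands)  # break any remaining tie at random
            folds[i] = j
            remaining.discard(i)
            fold_need[j] -= 1
            for other in labels[i]:
                label_need[other][j] -= 1

    # Unlabeled samples fill whichever folds are still short.
    for i in sorted(remaining):
        j = max(range(n_splits), key=lambda f: fold_need[f])
        folds[i] = j
        fold_need[j] -= 1
    return folds
```

In a training script, the returned list could be stored as a `fold` column, with fold `k` held out for validation while the remaining folds are used for training. Libraries such as `iterative-stratification` expose the same idea through `MultilabelStratifiedKFold`; which implementation the project actually uses is not stated here.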

Requirements:

- Python 3.10+
- PyTorch 2.3+
- Transformers 4.37+

For the complete list of dependencies, see `requirements.txt`.

- Clone this repository:

  ```bash
  git clone https://github.com/arnavs04/deberta-ell.git
  cd deberta-ell
  ```

- Create a virtual environment (optional but recommended):

  ```bash
  python -m venv venv
  source venv/bin/activate  # On Windows, use `venv\Scripts\activate`
  ```

- Install the required packages:

  ```bash
  pip install -r requirements.txt
  ```

The training data is already included in the `data/feedback-prize-english-language-learning/` directory.

To train the model, run:

```bash
python train.py
```

You can modify the hyperparameters in `configs.py`.

To run inference on new data, run:

```bash
python inference.py
```

The inference hyperparameters are also set in `configs.py`.

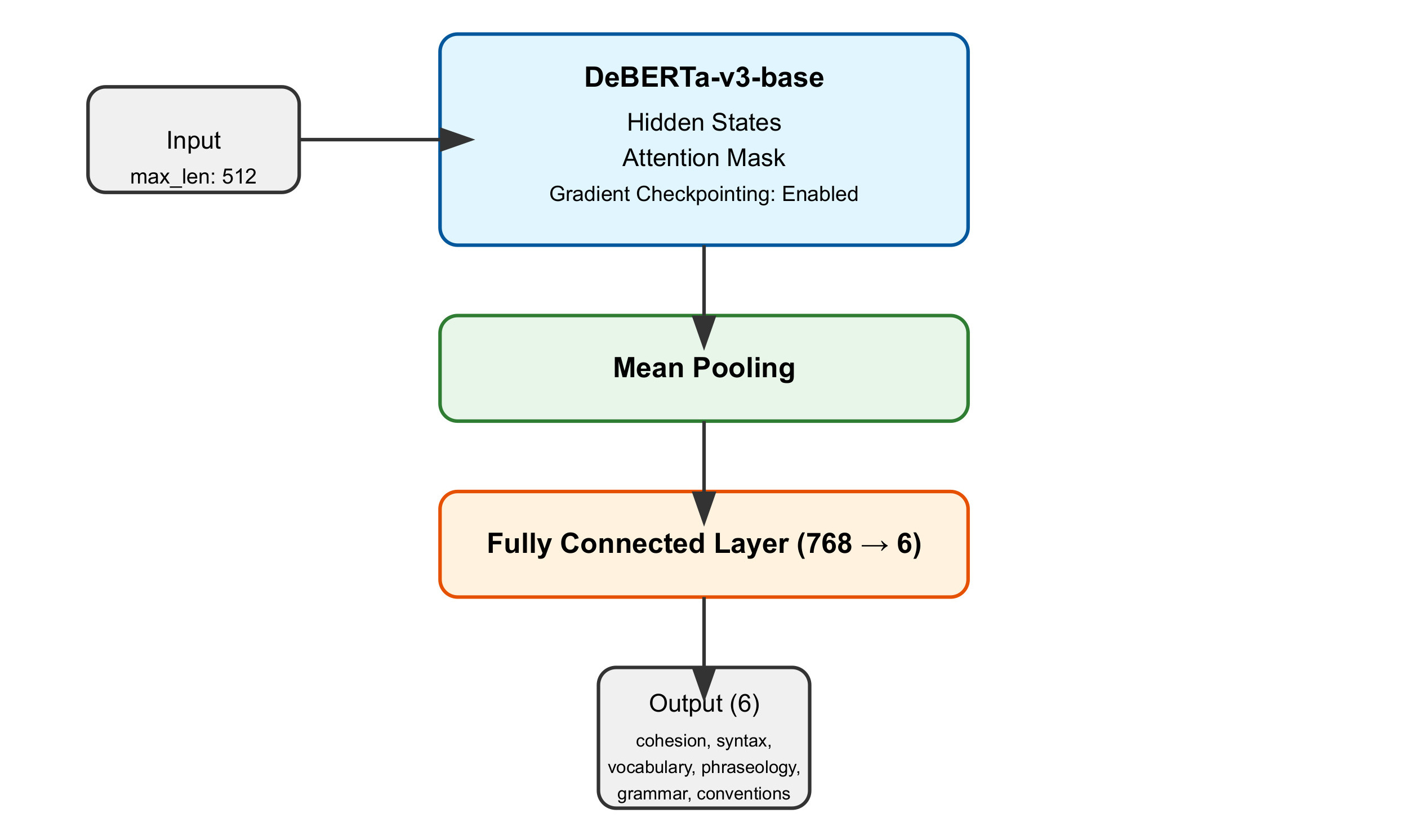

This project uses the DeBERTa-v3-base model as the backbone for essay analysis. The model is fine-tuned on the task of multi-aspect proficiency assessment, with a custom head for multi-label regression.
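The description above only says the model uses "a custom head for multi-label regression." One common design for this kind of head, shown here as a hypothetical sketch, averages the backbone's token embeddings over non-padding positions (masked mean pooling) and maps the pooled vector to six scores with a single linear layer. The class name `MeanPoolingRegressionHead` and the choice of mean pooling are assumptions, not details confirmed from the repository.

```python
import torch
from torch import nn


class MeanPoolingRegressionHead(nn.Module):
    """Hypothetical regression head: masked mean pooling followed by a linear layer."""

    def __init__(self, hidden_size: int, num_targets: int = 6):
        super().__init__()
        # One output per proficiency aspect (cohesion, syntax, ..., conventions).
        self.fc = nn.Linear(hidden_size, num_targets)

    def forward(self, last_hidden_state: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
        # last_hidden_state: (batch, seq_len, hidden); attention_mask: (batch, seq_len)
        mask = attention_mask.unsqueeze(-1).to(last_hidden_state.dtype)
        summed = (last_hidden_state * mask).sum(dim=1)  # padding tokens contribute zero
        counts = mask.sum(dim=1).clamp(min=1e-9)        # number of real tokens per essay
        pooled = summed / counts                        # average over real tokens only
        return self.fc(pooled)                          # (batch, num_targets)
```

With Hugging Face Transformers, the backbone could be loaded with `AutoModel.from_pretrained("microsoft/deberta-v3-base")`, and its `last_hidden_state` passed to the head together with the tokenizer's `attention_mask`; DeBERTa-v3-base has a hidden size of 768. Masking before averaging keeps the prediction independent of how much padding an essay receives in a batch.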

Smooth L1 loss was used as the training and validation loss, and the Mean Columnwise Root Mean Squared Error (MCRMSE), the average of the per-aspect RMSEs, was used as the final evaluation metric (lower is better). The per-fold results are summarized below:

| Fold | MCRMSE |
|---|---|
| 0 | 0.4493 |
| 1 | 0.4576 |
| 2 | 0.4663 |
| 3 | 0.4529 |
| Overall | 0.4566 |
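For reference, MCRMSE takes the RMSE of each target column separately and then averages those values across the columns. The plain-Python sketch below computes it, along with the Smooth L1 loss in the form PyTorch uses (quadratic for absolute errors below `beta`, linear above). It assumes PyTorch's default `beta=1.0` and mean reduction; whether the project changes either setting is not stated. The function names `mcrmse` and `smooth_l1` are illustrative.

```python
import math


def mcrmse(y_true, y_pred):
    """Mean columnwise RMSE. Both arguments: list of rows, one column per target."""
    n_rows = len(y_true)
    n_cols = len(y_true[0])
    col_rmse = []
    for j in range(n_cols):
        # Mean squared error of column j, then its square root.
        mse = sum((t[j] - p[j]) ** 2 for t, p in zip(y_true, y_pred)) / n_rows
        col_rmse.append(math.sqrt(mse))
    return sum(col_rmse) / n_cols


def smooth_l1(y_true, y_pred, beta=1.0):
    """Smooth L1 loss averaged over every entry (mean reduction)."""
    total, count = 0.0, 0
    for t_row, p_row in zip(y_true, y_pred):
        for t, p in zip(t_row, p_row):
            d = abs(p - t)
            # Quadratic near zero, linear for large errors.
            total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
            count += 1
    return total / count


y_true = [[3.0, 3.5], [2.0, 4.0]]
y_pred = [[3.5, 3.5], [2.0, 3.0]]
print(mcrmse(y_true, y_pred))     # (sqrt(0.125) + sqrt(0.5)) / 2
print(smooth_l1(y_true, y_pred))  # (0.125 + 0 + 0 + 0.5) / 4
```

Because every column gets equal weight, a model that is accurate on five aspects but poor on one is still penalized noticeably.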

Contributions to DeBERTa-ELL are welcome! Feel free to open issues, fork the repository, and submit pull requests.

If you use this code for your research, please cite our project:

```bibtex
@software{DeBERTa_ELL2024,
  author = {Arnav Samal},
  title  = {DeBERTa-ELL: Automated Proficiency Assessment for English Language Learners},
  year   = {2024},
  url    = {https://github.com/arnavs04/deberta-ell.git}
}
```

This project is licensed under the MIT License - see the LICENSE file for details.

For any questions, please open an issue on the GitHub repository.