Official PyTorch Implementation for GracoNet (GRAph COmpletion NETwork).

Learning Object Placement via Dual-path Graph Completion [arXiv]

Siyuan Zhou, Liu Liu, Li Niu, Liqing Zhang

Accepted by ECCV 2022.

Object placement methods can be divided into generative methods (e.g., TERSE, PlaceNet, GracoNet) and discriminative methods (e.g., FOPA, TopNet). Discriminative methods are more effective and flexible.

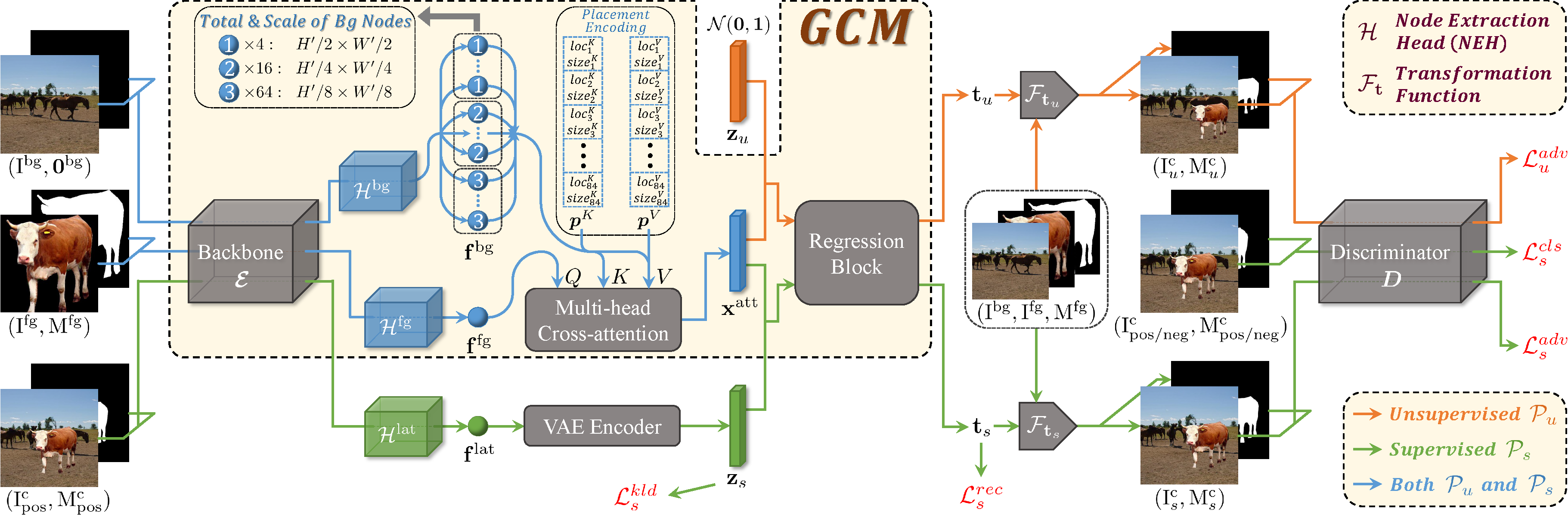

GracoNet is a generative method. We treat object placement as a graph completion problem and propose a novel graph completion module (GCM). The background scene is represented by a graph with multiple nodes at different spatial locations and with various receptive fields. The foreground object is encoded as a special node that should be inserted at a reasonable place in this graph. We also design a dual-path framework on top of GCM to fully exploit annotated composite images, which generates plausible and diverse object placements. GracoNet achieves 0.847 accuracy on the OPA dataset.
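To make the graph view above more concrete, here is a minimal sketch of the data layout only. It is not the GCM from the paper: the `Node` fields, the grid sizes `(1, 2, 4)`, and the helper names are illustrative assumptions, and no learning is involved.

```python
from collections import namedtuple

# kind: "background" or "foreground"; cx, cy: node centre in [0, 1];
# extent: fraction of the image side the node covers (a stand-in for receptive field).
# All field names are hypothetical and chosen for illustration.
Node = namedtuple("Node", ["kind", "cx", "cy", "extent"])


def background_nodes(grid_sizes=(1, 2, 4)):
    """Tile the background with square grids; coarser grids cover larger regions."""
    nodes = []
    for g in grid_sizes:
        for row in range(g):
            for col in range(g):
                nodes.append(Node("background", (col + 0.5) / g, (row + 0.5) / g, 1.0 / g))
    return nodes


def build_graph(grid_sizes=(1, 2, 4)):
    """Background nodes plus one foreground node whose placement is still unknown."""
    nodes = background_nodes(grid_sizes)
    # The location and size are left empty: they are what a placement model would predict.
    nodes.append(Node("foreground", None, None, None))
    return nodes


graph = build_graph()
print(len(graph))  # 1 + 4 + 16 background nodes + 1 foreground node = 22
```

In this toy layout, "completing" the graph means filling in the empty fields of the foreground node given the background nodes.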

- Sep 29, 2022

Switched to the latest version of the official OPA dataset (an older version was used previously).

We provide models for TERSE [arXiv], PlaceNet [arXiv], and our GracoNet [arXiv]:

| | method | acc. | FID | LPIPS | url of model & logs | file name | size |
|---|---|---|---|---|---|---|---|
| 0 | TERSE | 0.679 | 46.94 | 0 | bcmi cloud or baidu disk (code: js71) | terse.zip | 51M |
| 1 | PlaceNet | 0.683 | 36.69 | 0.160 | bcmi cloud or baidu disk (code: y0gh) | placenet.zip | 86M |
| 2 | GracoNet | 0.847 | 27.75 | 0.206 | bcmi cloud or baidu disk (code: 8rqm) | graconet.zip | 185M |
| 3 | IOPRE | 0.895 | 21.59 | 0.214 | - | - | - |
| 4 | FOPA | 0.932 | 19.76 | - | - | - | - |

We plan to include more models in the future.

We provide instructions on how to install dependencies via conda. First, clone the repository locally:

git clone https://github.com/bcmi/GracoNet-Object-Placement.git

Then, create a virtual environment:

conda create -n graconet python=3.6

conda activate graconet

Install PyTorch 1.9.1 (requires CUDA >= 10.2):

conda install pytorch==1.9.1 torchvision==0.10.1 torchaudio==0.9.1 cudatoolkit=10.2 -c pytorch

Install necessary packages:

pip install -r requirements.txt

Install pycocotools for accuracy evaluation:

pip install -U 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'

Build faster-rcnn for accuracy evaluation (requires GCC 5 or later):

cd faster-rcnn/lib

python setup.py build develop

cd ../..
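After the installation steps above, a quick check that the main packages are visible to the interpreter can save a failed run later. The following is a small sketch, not part of the repository: the function name `check_environment` and the default package list are assumptions, and it only tests whether each package can be located, not whether CUDA works or whether faster-rcnn was built correctly.

```python
import importlib.util
import sys


def check_environment(packages=("torch", "torchvision", "pycocotools")):
    """Return {package_name: True/False} depending on whether the package can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print("python", sys.version.split()[0])
    for name, found in check_environment().items():
        print("{}: {}".format(name, "found" if found else "MISSING"))
```

Run it inside the activated `graconet` environment; any `MISSING` line points at the installation step to repeat.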

Download and extract the OPA dataset from the official link: google drive or baidu disk (code: a982). We expect the directory structure to be the following:

<PATH_TO_OPA>

background/ # background images

foreground/ # foreground images with masks

composite/ # composite images with masks

train_set.csv # train annotation

test_set.csv # test annotation
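The expected layout above can be verified before preprocessing with a few lines of Python. This is a minimal sketch under the assumption that the five entries listed above are the only ones required; the helper name `missing_opa_entries` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Entries taken from the directory listing above.
EXPECTED_DIRS = ("background", "foreground", "composite")
EXPECTED_FILES = ("train_set.csv", "test_set.csv")


def missing_opa_entries(data_root):
    """List the expected OPA entries that are absent under data_root."""
    root = Path(data_root)
    missing = [d + "/" for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing
```

Calling `missing_opa_entries("<PATH_TO_OPA>")` with your own path returns an empty list when the dataset is laid out as expected.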

Then, preprocess the data:

python tool/preprocess.py --data_root <PATH_TO_OPA>

You will see some new files and directories:

<PATH_TO_OPA>

com_pic_testpos299/ # test set positive composite images (resized to 299)

train_data.csv # transformed train annotation

train_data_pos.csv # train annotation for positive samples

test_data.csv # transformed test annotation

test_data_pos.csv # test annotation for positive samples

test_data_pos_unique.csv # test annotation for positive samples with different fg/bg pairs

To train GracoNet on a single 24GB GPU with batch size 32 for 11 epochs, run:

python main.py --data_root <PATH_TO_OPA> --expid <YOUR_EXPERIMENT_NAME>

If you want to reproduce the baseline models, just replace main.py with main_terse.py / main_placenet.py for training.

To see the change of losses dynamically, use TensorBoard:

tensorboard --logdir result/<YOUR_EXPERIMENT_NAME>/tblog --port <YOUR_SPECIFIED_PORT>

To predict composite images from a trained GracoNet model, run:

python infer.py --data_root <PATH_TO_OPA> --expid <YOUR_EXPERIMENT_NAME> --epoch <EPOCH_TO_EVALUATE> --eval_type eval

python infer.py --data_root <PATH_TO_OPA> --expid <YOUR_EXPERIMENT_NAME> --epoch <EPOCH_TO_EVALUATE> --eval_type evaluni --repeat 10

If you want to infer the baseline models, just replace infer.py with infer_terse.py / infer_placenet.py.
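Since the baselines only differ in the script name, the training and inference commands above can be generated from one place. The sketch below only builds the argument lists and does not run anything. The helper names and the method keys (`"graconet"`, `"terse"`, `"placenet"`) are assumptions, and it assumes the baseline scripts accept the same flags as `main.py` and `infer.py`, as the replacement instructions above suggest.

```python
import shlex

# Script names as given in this README; the dictionary keys are illustrative.
TRAIN_SCRIPTS = {"graconet": "main.py", "terse": "main_terse.py", "placenet": "main_placenet.py"}
INFER_SCRIPTS = {"graconet": "infer.py", "terse": "infer_terse.py", "placenet": "infer_placenet.py"}


def train_command(method, data_root, expid):
    """Argument list for training the chosen method."""
    return ["python", TRAIN_SCRIPTS[method], "--data_root", data_root, "--expid", expid]


def infer_commands(method, data_root, expid, epoch, repeat=10):
    """Argument lists for the two inference modes (eval and evaluni)."""
    base = ["python", INFER_SCRIPTS[method], "--data_root", data_root,
            "--expid", expid, "--epoch", str(epoch)]
    return [
        base + ["--eval_type", "eval"],
        base + ["--eval_type", "evaluni", "--repeat", str(repeat)],
    ]


if __name__ == "__main__":
    commands = [train_command("terse", "/data/OPA", "terse_run")]
    commands += infer_commands("terse", "/data/OPA", "terse_run", 11)
    for cmd in commands:
        # Print shell-ready lines; pass cmd to subprocess.run(cmd, check=True) to execute.
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

The paths and experiment name in the demo are placeholders; run the resulting commands from the repository root.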

You can also use our provided models directly. For example, to run inference with our best GracoNet model, 1) download graconet.zip from the model zoo, 2) place it under result and uncompress it:

mv path/to/your/downloaded/graconet.zip result/graconet.zip

cd result

unzip graconet.zip

cd ..

and 3) run:

python infer.py --data_root <PATH_TO_OPA> --expid graconet --epoch 11 --eval_type eval

python infer.py --data_root <PATH_TO_OPA> --expid graconet --epoch 11 --eval_type evaluni --repeat 10

The procedure for running inference with our provided baseline models is similar. Remember to use --epoch 11 for TERSE and --epoch 9 for PlaceNet.
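The unpack step and the per-model epoch can also be handled from Python, which helps when scripting all three provided models. This is a sketch, not repository code: `unpack_model` is a hypothetical helper, and it assumes that each archive's file name (without `.zip`) is also the experiment name to pass to `--expid`. This README confirms that only for `graconet`.

```python
import zipfile
from pathlib import Path

# Epochs stated in this README for the provided models.
PROVIDED_EPOCHS = {"graconet": 11, "terse": 11, "placenet": 9}


def unpack_model(zip_path, result_dir="result"):
    """Extract a downloaded model archive into result_dir.

    Returns (expid, epoch), where expid is assumed to equal the archive name.
    """
    name = Path(zip_path).stem  # "graconet.zip" -> "graconet"
    if name not in PROVIDED_EPOCHS:
        raise ValueError("unknown model archive: {}".format(zip_path))
    Path(result_dir).mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(str(zip_path)) as archive:
        archive.extractall(str(result_dir))
    return name, PROVIDED_EPOCHS[name]
```

The returned pair can then be used as the `--expid` and `--epoch` arguments of the matching inference script.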

We extend SimOPA as a binary classifier to distinguish between reasonable and unreasonable object placements. To evaluate accuracy via the classifier, please 1) download the faster-rcnn model pretrained on Visual Genome from google drive (provided by Faster-RCNN-VG) to faster-rcnn/models/faster_rcnn_res101_vg.pth, 2) download the pretrained binary classifier model from bcmi cloud or baidu disk (code: 0qty) to BINARY_CLASSIFIER_PATH, and 3) run:

sh script/eval_acc.sh <YOUR_EXPERIMENT_NAME> <EPOCH_TO_EVALUATE> <BINARY_CLASSIFIER_PATH>

To evaluate FID score, run:

sh script/eval_fid.sh <YOUR_EXPERIMENT_NAME> <EPOCH_TO_EVALUATE> <PATH_TO_OPA/com_pic_testpos299>

To evaluate LPIPS score, run:

sh script/eval_lpips.sh <YOUR_EXPERIMENT_NAME> <EPOCH_TO_EVALUATE>

To collect evaluation results of different metrics, run:

python tool/summarize.py --expid <YOUR_EXPERIMENT_NAME> --eval_type eval

python tool/summarize.py --expid <YOUR_EXPERIMENT_NAME> --eval_type evaluni

You can find the summarized results at result/<YOUR_EXPERIMENT_NAME>/***_resall.txt.
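The evaluation steps above can be collected into a single ordered list, which is convenient when evaluating several epochs. The sketch below only assembles the commands exactly as written in this README; `evaluation_commands` is a hypothetical helper, and running the steps in the listed order is an assumption.

```python
import os


def evaluation_commands(expid, epoch, classifier_path, data_root):
    """Argument lists for accuracy, FID, LPIPS, and the two summary steps."""
    epoch = str(epoch)
    # FID is computed against the resized positive test composites created by preprocessing.
    fid_reference = os.path.join(data_root, "com_pic_testpos299")
    return [
        ["sh", "script/eval_acc.sh", expid, epoch, classifier_path],
        ["sh", "script/eval_fid.sh", expid, epoch, fid_reference],
        ["sh", "script/eval_lpips.sh", expid, epoch],
        ["python", "tool/summarize.py", "--expid", expid, "--eval_type", "eval"],
        ["python", "tool/summarize.py", "--expid", expid, "--eval_type", "evaluni"],
    ]
```

Each entry can be passed to `subprocess.run(cmd, check=True)` from the repository root so that a failing step stops the sequence.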

Some of the evaluation code in this repo is borrowed and modified from Faster-RCNN-VG, OPA, FID-Pytorch, and Perceptual Similarity. We thank the authors for their great work.