Official code for the paper:

"Optimal Positive Generation via Latent Transformation for Contrastive Learning"

Yinqi Li, Hong Chang, Bingpeng Ma, Shiguang Shan, Xilin Chen

NeurIPS 2022

- Linux

- Python 3.8

- PyTorch 1.7.1

- PyTorch Pretrained GANs 0.0.1

Run the following command to train the navigator in pretrained BigBiGAN's latent space.

cd cop_gen

CUDA_VISIBLE_DEVICES=0,1,2,3 python train_navigator_bigbigan_optimal.py --simclr_aug --batch_size BATCH_SIZE

where:

- simclr_aug is set to use SimCLR data-space augmentation by default.

- BATCH_SIZE is set to 176 in the paper, which is the largest value that can be achieved on 4 NVIDIA 2080 Ti GPUs.

The figure below shows the training losses of the navigator and the mutual information estimator.

By default, we decide when to stop training (that is, which navigator checkpoint to use) by simply inspecting the quality of the generated positive pairs, which are saved at cop_gen/walk_weights_bigbigan/ckpts/name_of_experiment/images.

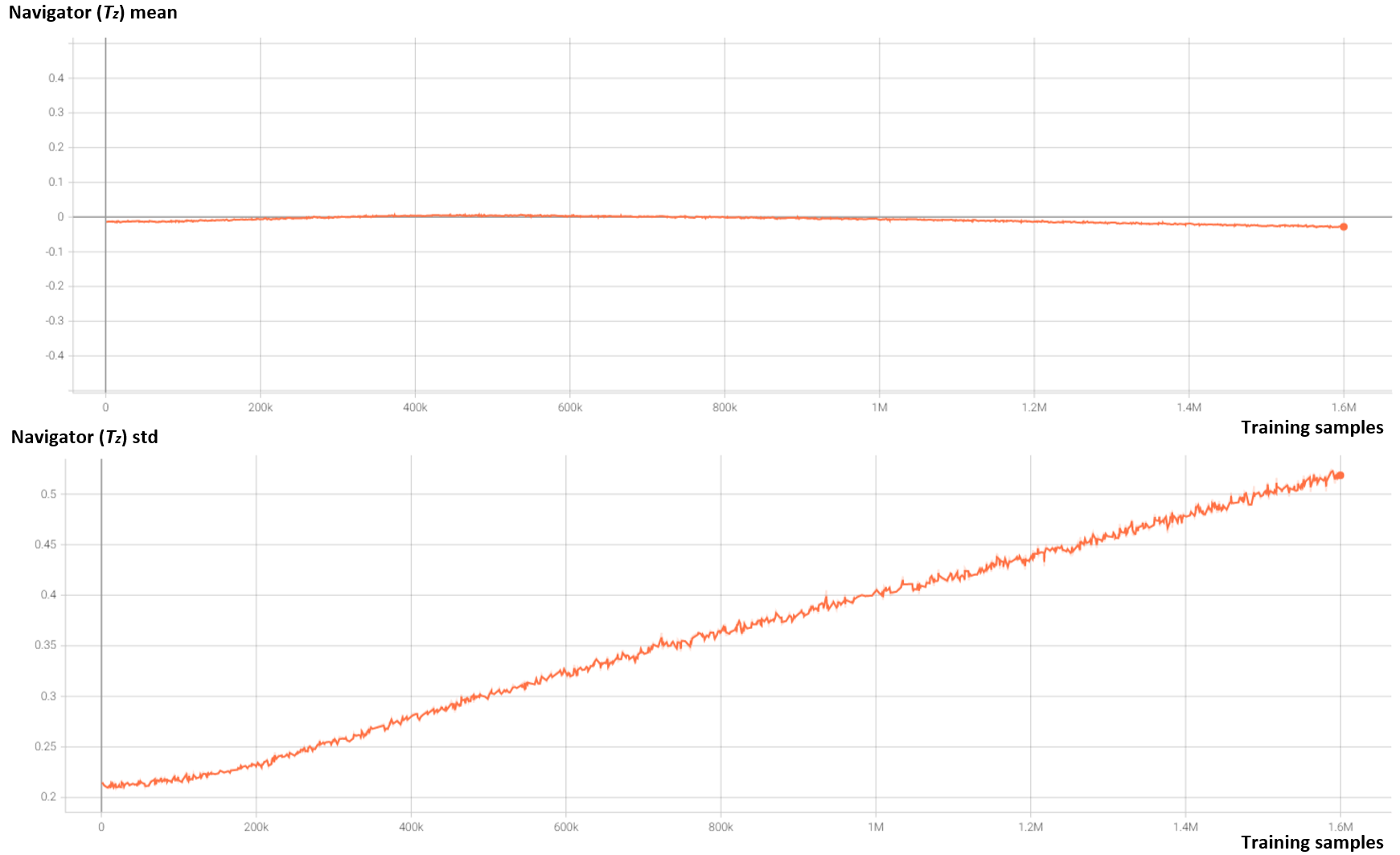
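To browse the generated positive pairs saved during training, the files in the images directory mentioned above can be listed with a few lines of Python. This is a minimal sketch: the helper name list_sample_images is hypothetical and not part of this repository, and it makes no assumption about the image file format.

```python
from pathlib import Path


def list_sample_images(experiment_name, root="cop_gen/walk_weights_bigbigan/ckpts"):
    """Return the files under <root>/<experiment_name>/images, sorted by name.

    Hypothetical helper for illustration only; it is not part of the repository.
    """
    image_dir = Path(root) / experiment_name / "images"
    if not image_dir.is_dir():
        # Nothing has been saved yet, or the experiment name is wrong.
        return []
    return sorted(p for p in image_dir.iterdir() if p.is_file())


if __name__ == "__main__":
    # Replace name_of_experiment with the name of your own run.
    for path in list_sample_images("name_of_experiment"):
        print(path)
```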

We also monitor the mean and std of the navigator during training and compare them with the prior values investigated in the GenRep Gaussian method. This serves as an auxiliary signal for termination, as shown in the figure below.

Since the final performance is affected by the choice of navigator checkpoint, we here provide our trained navigator checkpoint for the following experiments, to reproduce quantitative and qualitative results in the paper.
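The auxiliary monitoring signal described above (mean and std of the navigator compared with a reference value) can be sketched in plain Python. This is only an illustration under stated assumptions: it assumes the statistics are measured on the element-wise offsets between an anchor latent and its transformed latent, the function names are hypothetical, and reference_std is a placeholder you must supply yourself, since this README does not state the GenRep value.

```python
import math
import statistics


def offset_stats(z, z_pos):
    """Mean and (population) std of the element-wise offsets z_pos - z.

    Assumption: z and z_pos are equal-length sequences of floats holding an
    anchor latent and the latent produced by the navigator.
    """
    offsets = [b - a for a, b in zip(z, z_pos)]
    return statistics.fmean(offsets), statistics.pstdev(offsets)


def near_reference(std, reference_std, rel_tol=0.1):
    """True if std is within rel_tol (relative) of the reference value."""
    return math.isclose(std, reference_std, rel_tol=rel_tol)


if __name__ == "__main__":
    z = [0.0, 0.0, 0.0, 0.0]
    z_pos = [1.0, -1.0, 1.0, -1.0]
    mean, std = offset_stats(z, z_pos)
    # reference_std=1.0 is an arbitrary placeholder, not a value from the paper.
    print(mean, std, near_reference(std, reference_std=1.0))
```

In practice such statistics would be logged per training step next to the losses, and checkpoints whose std stays close to the reference become candidates for closer visual inspection.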

After training the navigator, we use it together with the pretrained GAN to generate the contrastive pretraining dataset offline.

cd cop_gen

CUDA_VISIBLE_DEVICES=0 python generate_dataset_bigbigan_optimal.py --dataset DATASET_TYPE --out_dir OUT_DIR --walker_path /path/to/pretrained/navigator

where:

- DATASET_TYPE is set to 1k (100) for an ImageNet-1K (ImageNet-100) scale pretraining dataset.

- OUT_DIR is the path where the generated dataset is saved.
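When the generation step is scripted, it can help to validate DATASET_TYPE before launching a long job. The sketch below only assembles the argument list for the command shown above; build_generate_cmd and VALID_DATASET_TYPES are hypothetical names, and the flags are exactly the three from that command.

```python
import shlex

# The two dataset scales described above.
VALID_DATASET_TYPES = ("1k", "100")


def build_generate_cmd(dataset_type, out_dir, walker_path):
    """Build the argument list for generate_dataset_bigbigan_optimal.py.

    Hypothetical wrapper for illustration; it only validates the dataset type
    and does not launch anything.
    """
    if dataset_type not in VALID_DATASET_TYPES:
        raise ValueError(
            f"dataset_type must be one of {VALID_DATASET_TYPES}, got {dataset_type!r}"
        )
    return [
        "python", "generate_dataset_bigbigan_optimal.py",
        "--dataset", dataset_type,
        "--out_dir", out_dir,
        "--walker_path", walker_path,
    ]


if __name__ == "__main__":
    cmd = build_generate_cmd("100", "OUT_DIR", "/path/to/pretrained/navigator")
    print(shlex.join(cmd))
```

The returned list can be passed to subprocess.run(cmd, check=True, cwd="cop_gen") to launch the job from the cop_gen directory.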

Run the following command to perform contrastive learning on the generated dataset.

CUDA_VISIBLE_DEVICES=0,1 python main_unified.py \

--method=SimCLR \

--dataset=bigbigan_sweet \

--data_folder=/path/to/generated/dataset \

--save_folder=/path/to/save/contrastive/encoders \

--batch_size=BATCH_SIZE \

--epochs=100 \

--learning_rate=LR \

--cosine

where:

- BATCH_SIZE is set to 224 in the paper, which is the largest value that can be achieved on 2 NVIDIA 2080 Ti GPUs.

- LR is set to 0.03 * BATCH_SIZE / 256 = 0.02625 in the paper, following GenRep.
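The learning rate above scales linearly with the batch size relative to a reference batch size of 256. If you train on different hardware and change BATCH_SIZE, a small helper like the one below recomputes LR by the same rule. The name scaled_lr is hypothetical and only illustrates the arithmetic.

```python
def scaled_lr(base_lr, batch_size, reference_batch_size=256):
    """Linear scaling rule: base_lr * batch_size / reference_batch_size."""
    if batch_size <= 0 or reference_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * batch_size / reference_batch_size


if __name__ == "__main__":
    # Contrastive pretraining in this README: base LR 0.03, batch size 224.
    print(scaled_lr(0.03, 224))
    # Linear evaluation in this README: base LR 2, batch size 224.
    print(scaled_lr(2, 224))
```

The same helper covers the linear-classifier stage further down, where the base value is 2 instead of 0.03.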

We provide our trained contrastive encoder on Google Drive for quick evaluation of downstream performance.

Run the following command to train a linear classifier on top of the trained encoder.

CUDA_VISIBLE_DEVICES=0,1 python main_linear.py \

--ckpt=/path/to/trained/contrastive/encoder \

--data_folder=/path/to/ImageNet1k \

--dataset=bigbigan_sweet \

--save_folder=/path/to/save/linear/models \

--batch_size=BATCH_SIZE \

--epochs=60 \

--learning_rate=LR \

--cosine

where:

- BATCH_SIZE is set to 224 to align with the pretraining stage.

- LR is set to 2 * BATCH_SIZE / 256 = 1.75 in the paper, following GenRep.

Follow the steps below to evaluate the pretrained contrastive encoder on Pascal VOC.

- Install detectron2

- Convert the pretrained encoder to detectron2's format:

cd transfer_detection

python convert_ckpt.py /path/to/trained/encoder /path/to/converted/ckpt

- Put the VOC2007 and VOC2012 datasets in the ./transfer_detection/datasets directory.

- Run training:

cd transfer_detection

CUDA_VISIBLE_DEVICES=0,1 python train_net.py \

--num-gpus 2 \

--config-file ./config/pascal_voc_R_50_C4_transfer.yaml \

MODEL.WEIGHTS /path/to/converted/ckpt \

OUTPUT_DIR /path/to/outputs \

SOLVER.IMS_PER_BATCH 4 \

SOLVER.BASE_LR 0.005 \

INPUT.MAX_SIZE_TRAIN 1024 \

INPUT.MAX_SIZE_TEST 1024 \

SOLVER.MAX_ITER 96000 \

SOLVER.STEPS 72000,88000 \

SOLVER.WARMUP_ITERS 400 \

DATALOADER.NUM_WORKERS 8

Follow the steps below to evaluate the pretrained contrastive encoder on transfer classification and semi-supervised learning tasks.

- Install vissl; this creates a vissl folder under your /home/user directory.

- Convert the pretrained encoder to vissl's format:

cd transfer_classification

python convert_ckpt.py /path/to/trained/encoder /path/to/converted/ckpt

- Prepare the datasets using the scripts and guidelines from vissl/extra_scripts.

- Run training:

# This is an example for linear transfer classification on Caltech-101,

# based on the documentation: https://vissl.readthedocs.io/en/v0.1.6/evaluations/linear_benchmark.html

cd vissl

CUDA_VISIBLE_DEVICES=0 python tools/run_distributed_engines.py \

config=benchmark/linear_image_classification/caltech101/eval_resnet_8gpu_transfer_caltech101_linear \

config.MODEL.WEIGHTS_INIT.STATE_DICT_KEY_NAME=model \

config.MODEL.WEIGHTS_INIT.APPEND_PREFIX="trunk.base_model._feature_blocks." \

config.MODEL.WEIGHTS_INIT.PARAMS_FILE=/path/to/converted/ckpt \

config.CHECKPOINT.DIR=/path/to/outputs \

config.DATA.TRAIN.DATA_PATHS=["datasets/caltech101/train"] \

config.DATA.TEST.DATA_PATHS=["datasets/caltech101/test"] \

config.MODEL.SYNC_BN_CONFIG.GROUP_SIZE=-1 \

config.DISTRIBUTED.NUM_PROC_PER_NODE=1 \

config.DATA.TRAIN.BATCHSIZE_PER_REPLICA=256 \

config.DATA.TEST.BATCHSIZE_PER_REPLICA=256 \

config.DATA.NUM_DATALOADER_WORKERS=8

# This is an example for semi-supervised learning on 1% labeled ImageNet,

# based on the documentation: https://vissl.readthedocs.io/en/v0.1.6/evaluations/semi_supervised.html

cd vissl

CUDA_VISIBLE_DEVICES=0 python tools/run_distributed_engines.py \

config=benchmark/semi_supervised/imagenet1k/eval_resnet_8gpu_transfer_in1k_semi_sup_fulltune_per01 \

+config/benchmark/semi_supervised/imagenet1k/dataset=simclr_in1k_per01 \

config.MODEL.WEIGHTS_INIT.STATE_DICT_KEY_NAME=model \

config.MODEL.WEIGHTS_INIT.APPEND_PREFIX="trunk._feature_blocks." \

config.MODEL.WEIGHTS_INIT.PARAMS_FILE=/path/to/converted/ckpt \

config.CHECKPOINT.DIR=/path/to/outputs \

config.DATA.TRAIN.DATA_PATHS=["datasets/imagenet1k_semisupv_1percent/train_images.npy"] \

config.DATA.TRAIN.LABEL_PATHS=["datasets/imagenet1k_semisupv_1percent/train_labels.npy"] \

config.DATA.TEST.DATA_SOURCES=[disk_filelist] \

config.DATA.TEST.LABEL_SOURCES=[disk_filelist] \

config.DATA.TEST.DATA_PATHS=["datasets/imagenet1k_npy/val_images.npy"] \

config.DATA.TEST.LABEL_PATHS=["datasets/imagenet1k_npy/val_labels.npy"] \

config.MODEL.SYNC_BN_CONFIG.GROUP_SIZE=-1 \

config.DISTRIBUTED.NUM_PROC_PER_NODE=1 \

config.DATA.TRAIN.BATCHSIZE_PER_REPLICA=256 \

config.DATA.TEST.BATCHSIZE_PER_REPLICA=256 \

config.DATA.NUM_DATALOADER_WORKERS=8

This code is based on the implementation of GenRep. We also thank the authors of the PyTorch Pretrained GANs and PyTorch Pretrained BigGAN repos for releasing the pretrained generative models, and the authors of the Detectron2 and VISSL repos for implementing the downstream tasks.

If you find this code useful, please consider citing our paper:

@inproceedings{li2022optimal,

title={Optimal Positive Generation via Latent Transformation for Contrastive Learning},

author={Yinqi Li and Hong Chang and Bingpeng Ma and Shiguang Shan and Xilin Chen},

booktitle={Advances in Neural Information Processing Systems},

year={2022}

}