Hawk is a web interface for the Pacemaker High Availability stack. The goal of the project is to create a complete interface to the HA cluster, including the configuration, management and monitoring of cluster resources.

Hawk runs on every node in the cluster, so you can point your web browser at any node to access it.

Hawk is always accessed via HTTPS, and requires users to log in prior to providing access to the cluster. The same user privilege rules apply as for Pacemaker itself: You need to log in as a user in the haclient group. The easiest thing to do is to assign a password to the hacluster user, and then to log in using that account. Note that you will need to configure this user account on every node that you will use Hawk on.
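As a quick check before logging in, the following sketch tests whether an account belongs to a group. The function name `user_in_group` is made up for this example; only the hacluster and haclient names come from the text above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if user $1 is a member of group $2.
user_in_group() {
    # id -nG prints the user's group names separated by spaces;
    # grep -x -F matches one whole line literally.
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qxF -- "$2"
}

if user_in_group hacluster haclient; then
    echo "hacluster is in haclient (set a password with: passwd hacluster)"
else
    echo "hacluster is missing or not in the haclient group on this node"
fi
```

Run it on every node, since the account has to be set up on each of them.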

For more fine-grained control over access to the cluster, you can create multiple user accounts and configure Access Control Lists (ACL) for those users. These access control rules are available directly from the Hawk user interface.

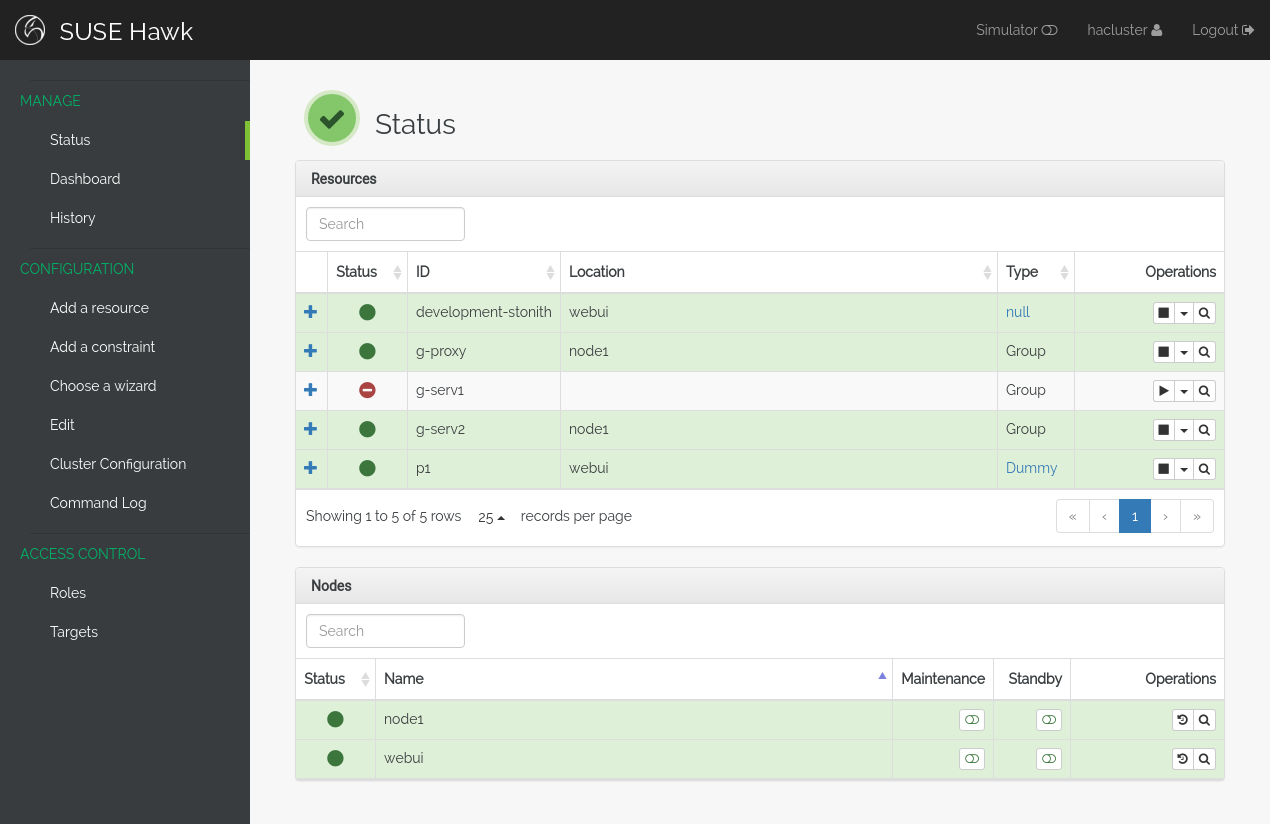

Once logged in, you will see a status view displaying the current state of the cluster. All the configured cluster resources are shown together with their status, as well as a general state of the cluster and a list of recent cluster events (if any).

The navigation menu on the left hand side provides access to the additional features of Hawk, such as the history explorer, the multi-cluster dashboard and configuration management. On the top right of the screen you can enable or disable the simulator, configure user preferences and log out of the cluster.

Resource management operations (start, stop, online, standby, etc.) can be performed using the menu of operations next to the resource in the status view.

Note that this list of features is not complete, and is intended only as a rough guide to the Hawk user interface.

From the status view of Hawk, you can control the state of individual resources or resource groups: start, stop, promote or demote them. You can also migrate resources to and away from specific nodes, clean up resources after failure and show a list of recent events for the resource.

On the status page you can also manage nodes including setting node attributes and displaying recent events related to the specific node.

Additionally, if there are any tickets configured (requires the use of geo clustering via booth [1]), these are also displayed in the status view and can be managed in a similar fashion to resources.

The Dashboard can be used to monitor the local cluster, displaying a blinkenlights-style overview of all resources as well as any recent failures that may have occurred. It is also possible to configure access to remote clusters, so that multiple clusters can be monitored from a single interface. This can be useful as a HUD in an operations center, or when using geo clustering.

Hawk can also run in an offline mode, where you run Hawk on a non-cluster machine which monitors one or more remote clusters.

The history explorer is a tool for collecting and downloading cluster reports, which include logs and other information for a certain timeframe. The history explorer is also useful for analysing such cluster reports. You can either upload a previously generated cluster report for analysis, or generate one on the fly.

Once uploaded, you can scroll through all of the cluster events that took place in the time frame covered by the report. For each event, you can see the current cluster configuration, logs from all cluster nodes and a transition graph showing exactly what happened and why.

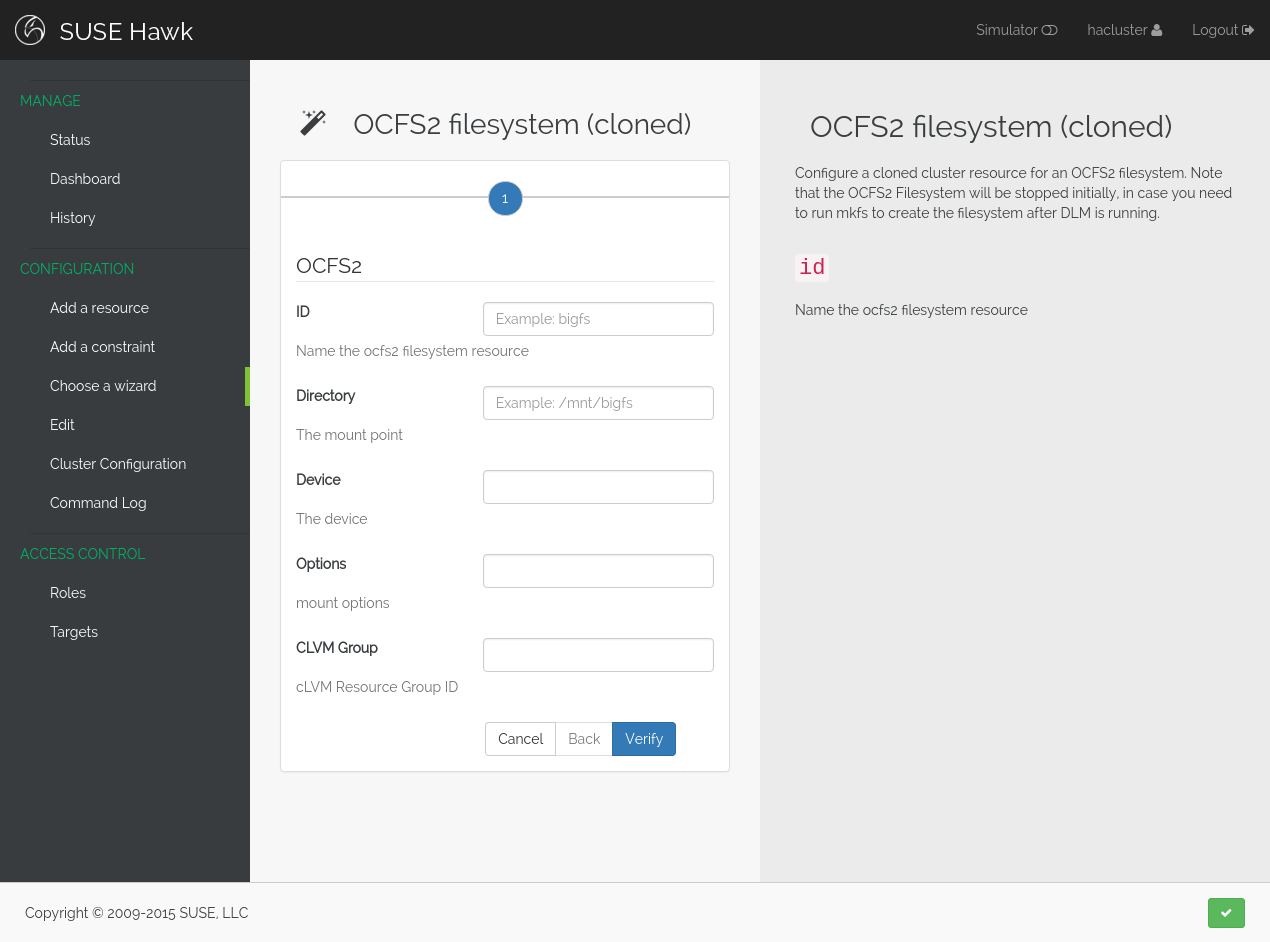

Hawk makes it easy to configure resources, groups of resources, constraints and tags. You can also configure resource templates to be reused later, and cloned resources that are active on multiple nodes at once.

Cluster wizards are useful for creating more complicated configurations in a single process. The wizards vary in complexity from simply configuring a single virtual IP address to configuring multiple resources together with constraints, in multiple steps and including package installation, configuration and setup.

From the web interface you can view the current cluster configuration in the crm shell syntax or as XML. You can also generate a graph view of the resources and constraints configured in the cluster.

To make the transition between using the web interface and the command line interface easier, Hawk provides a command log showing a list of recent commands executed by the web interface. A user who is learning to configure a Pacemaker cluster can start by using the web interface, and learn how to use the command line in the process.

Pacemaker supports fine-grained access control to the configuration based on user roles. These roles can be viewed and configured directly from the web interface. Using the ACL rules, you can for example create unprivileged user accounts that are able to log in and view the state of the cluster, but cannot edit resources.
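For comparison with what the web interface configures, here is a rough sketch of a read-only role written in crm shell syntax. It assumes a crmsh and Pacemaker version that supports the `role` and `acl_target` keywords (older releases use a different ACL syntax). The names `monitor` and `alice` are placeholders, and `alice` would also have to exist as a system user in the haclient group.

```shell
#!/bin/sh
# Write a small ACL configuration in crm shell syntax to a temporary file.
# "monitor" (role) and "alice" (user) are placeholder names.
acl_file=$(mktemp)
cat > "$acl_file" <<'EOF'
property enable-acl=true
role monitor \
    read xpath:"/cib"
acl_target alice monitor
EOF

echo "ACL snippet written to $acl_file"
# On a cluster node, it could then be loaded with crmsh:
#   crm configure load update "$acl_file"
```

The load command is left commented out so that the snippet can be inspected before anything is applied to a live cluster.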

Hawk features a cluster simulation mode. Once enabled, any changes to the cluster are not applied directly. Instead, events such as resource failure or node failure can be simulated, and the user can see what the resulting cluster response would be. This can be very useful when configuring constraints, to ensure that the rules work as intended.

Hawk is a Ruby on Rails app which runs using the Puma web server (http://puma.io/).

There is a special build mode which vendors all the rubygems used by Hawk, to create a package which bundles all of the gems. See the included RPM spec file in rpm/hawk.spec for details.

For details on the rubygems used by hawk, see the gemfile in hawk/Gemfile.

The following packages are needed to build Hawk. The exact versions specified here may not be accurate. Note that Hawk also requires the rubygems listed in hawk/Gemfile.

- ruby version 1.9.3 or higher
- libpacemaker-devel
- pacemaker-libs-devel
- glib2-devel
- libxml2-devel >= 2.6.21
- libxslt-devel
- openssl-devel
- pam-devel

The following packages are needed to run Hawk. The exact versions specified here may not be accurate. Note that Hawk also requires the rubygems listed in hawk/Gemfile.

- crmsh
- graphviz
- graphviz-gd
- dejavu
- pacemaker >= 1.1.8
- iproute2

Some dependencies may differ depending on the distribution:

- rubypick (Fedora)
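Package names vary between distributions, so it can be easier to check for the command-line tools that some of the packages above provide. The sketch below does that; `missing_tools` is a made-up helper name, and the mapping of commands to packages in the comment is the usual one rather than something specified by Hawk.

```shell
#!/bin/sh
# Hypothetical helper: print each named command that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# crm (crmsh), dot (graphviz), ip (iproute2), crm_mon (pacemaker)
missing=$(missing_tools crm dot ip crm_mon)
if [ -n "$missing" ]; then
    echo "missing tools:" $missing
else
    echo "all checked tools are present"
fi
```

This only checks that the commands exist; it does not verify the version requirements.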

Hawk is included with SLE HA 11 SP1, openSUSE 11.4, and later SUSE releases. Recent versions are also available for download from OBS.

Just install the RPM, then run:

# chkconfig hawk on

# service hawk start

If you have a SUSE- or Fedora-based system, you can build an RPM easily from the source tree. Just clone this git repo, and run "make rpm".

Once built, install the RPM on your cluster nodes and run:

# chkconfig hawk on

# service hawk start

If the above RPM build doesn’t work for you, you can build and install straight from the source tree, but read the Makefile first to ensure you’ll be happy with the outcome!

# make

# sudo make install

The above will install in /srv/www/hawk. To install somewhere else (e.g.: /var/www/hawk) and/or to use a Red Hat-style init script, try:

# make WWW_BASE=/var/www INIT_STYLE=redhat

# sudo make WWW_BASE=/var/www INIT_STYLE=redhat install

Grab the SRPM from OBS and build it. For example, if you’re using Fedora 19, try the one in http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/Fedora_19/src/ .

The Hawk init script will automatically generate a self-signed SSL certificate, in /etc/hawk/hawk.pem. If you want to use your own certificate, replace hawk.key and hawk.pem with your own key and certificate.
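If you want to try out a replacement before installing it, the following sketch creates a self-signed pair in a scratch directory with the openssl command-line tool and checks that the key and certificate belong together. The subject name, key size and lifetime are placeholder choices, and the assumption that hawk.key lives next to hawk.pem in /etc/hawk should be checked against your installation.

```shell
#!/bin/sh
# Sketch: create a self-signed key/certificate pair in a scratch directory.
# The subject CN and the 365-day lifetime are placeholder choices.
workdir=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$workdir/hawk.key" -out "$workdir/hawk.pem" \
    -days 365 -subj "/CN=localhost" 2>/dev/null

# Compare the public key derived from the private key with the one
# embedded in the certificate; they must be identical.
key_pub=$(openssl pkey -in "$workdir/hawk.key" -pubout)
cert_pub=$(openssl x509 -in "$workdir/hawk.pem" -noout -pubkey)
if [ "$key_pub" = "$cert_pub" ]; then
    echo "key and certificate match: $workdir"
    # Then, as root on each node (assumed location):
    #   cp "$workdir/hawk.key" "$workdir/hawk.pem" /etc/hawk/
else
    echo "key and certificate do not match" >&2
fi
```

The same comparison is useful for a certificate issued by a real CA, where a mismatched key is a common cause of startup failures.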

To hack on Hawk, we recommend using the vagrant setup. A Vagrantfile is included; you may need to change some values in it to get access to the correct files, as the current locations are restricted to SUSE employees.

To prepare for running our vagrant setup, follow these steps:

- Install the vagrant package from http://www.vagrantup.com/downloads.html; the minimal version requirement is >= 1.7.0 in order to work properly with openSUSE/SLED workstation setups.
- Install virtualbox; we assume you know how to do that on your OS. If you prefer libvirt you can use that as well.

Out of the box, vagrant is configured to synchronize the working folder to /vagrant in the virtual machines using NFS. For this to work properly, the vagrant-bindfs plugin is necessary. Install it using the following command:

# vagrant plugin install vagrant-bindfs

- If you plan to use libvirt as provider, make sure you have the libvirt plugin installed:

# vagrant plugin install vagrant-libvirt

- You still need to fetch the git submodules to finish your development setup:

# git submodule update --init --recursive

This is all you need to prepare initially to set up the vagrant environment. Now you can simply start the virtual machine with vagrant up and start an ssh session with vagrant ssh webui, based on virtualbox. To start the virtual machines on libvirt you have to append --provider=libvirt to the above commands, e.g. vagrant up --provider=libvirt. If you want to access the source within the virtual machine you have to switch to the /vagrant directory.

You can access the Hawk web interface based on the git source through http://localhost:3000 now. If you want to access the version installed through packages you can reach it through https://localhost:7630.

Occasionally it can happen that the source based web interface has not started properly and is not responding. In that case restart it with:

vagrant@webui:~> sudo systemctl restart hawk-development.service
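To tell whether a restart is needed, a simple probe of both addresses can help. This sketch assumes curl is installed on the machine you run it from; `probe` is a made-up helper name, and any HTTP response at all (including an error page) counts as "up" here.

```shell
#!/bin/sh
# Hypothetical helper: report whether a URL answers within five seconds.
# -k accepts self-signed certificates such as the one Hawk generates.
probe() {
    if curl -k -s -o /dev/null --max-time 5 "$1"; then
        echo "up"
    else
        echo "down"
    fi
}

echo "development instance: $(probe http://localhost:3000/)"
echo "packaged instance:    $(probe https://localhost:7630/)"
```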

If you need to change something in hawk_chkpwd, hawk_invoke or hawk_monitor, you need to provision the machine again with the command vagrant provision to get these tools compiled and copied to the correct places in /usr/sbin, with setuid-root and group haclient set again. You should end up with something like:

    # ls /usr/sbin/hawk_* -l
    -rwsr-x--- 1 root haclient 9884 2011-04-14 22:56 /usr/sbin/hawk_chkpwd
    -rwsr-x--- 1 root haclient 9928 2011-04-14 22:56 /usr/sbin/hawk_invoke
    -rwxr-xr-x 1 root root     9992 2011-04-14 22:56 /usr/sbin/hawk_monitor
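The same check can be scripted. The sketch below prints the octal mode, owner and group of the two setuid helpers; it relies on the GNU form of stat (`-c`), and `helper_perms` is a made-up function name. The mode string -rwsr-x--- in the listing corresponds to octal 4750.

```shell
#!/bin/sh
# Hypothetical helper: print "mode owner group" for a file (GNU stat syntax).
helper_perms() {
    stat -c '%a %U %G' "$1"
}

for helper in /usr/sbin/hawk_chkpwd /usr/sbin/hawk_invoke; do
    if [ -e "$helper" ]; then
        # Expected for both setuid helpers: 4750 root haclient
        echo "$helper: $(helper_perms "$helper")"
    else
        echo "$helper: not installed"
    fi
done
```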

hawk_chkpwd is almost identical to unix2_chkpwd, except it restricts access to users in the haclient group, and doesn’t inject any delay when invoked by the hacluster user (which is the user the Hawk web server instance runs as).

hawk_invoke allows the hacluster user to run a small assortment of Pacemaker CLI tools as another user in order to support Pacemaker’s ACL feature. It is used by Hawk when performing various management tasks.

hawk_monitor is not installed setuid-root. It exists to be polled by the web browser, to facilitate near-realtime updates of the cluster status display. It is not used when running Hawk via WEBrick.

If the development hawk instance isn’t running, it can be started using this command:

webui:/vagrant/hawk # sudo -u vagrant script/rails s

Hawk is developed on GitHub. Please file any issues or submit patches via the GitHub interface at https://github.com/ClusterLabs/hawk/issues .

Please direct comments, feedback, questions etc. to the Pacemaker mailing list at http://clusterlabs.org/mailman/listinfo/users .