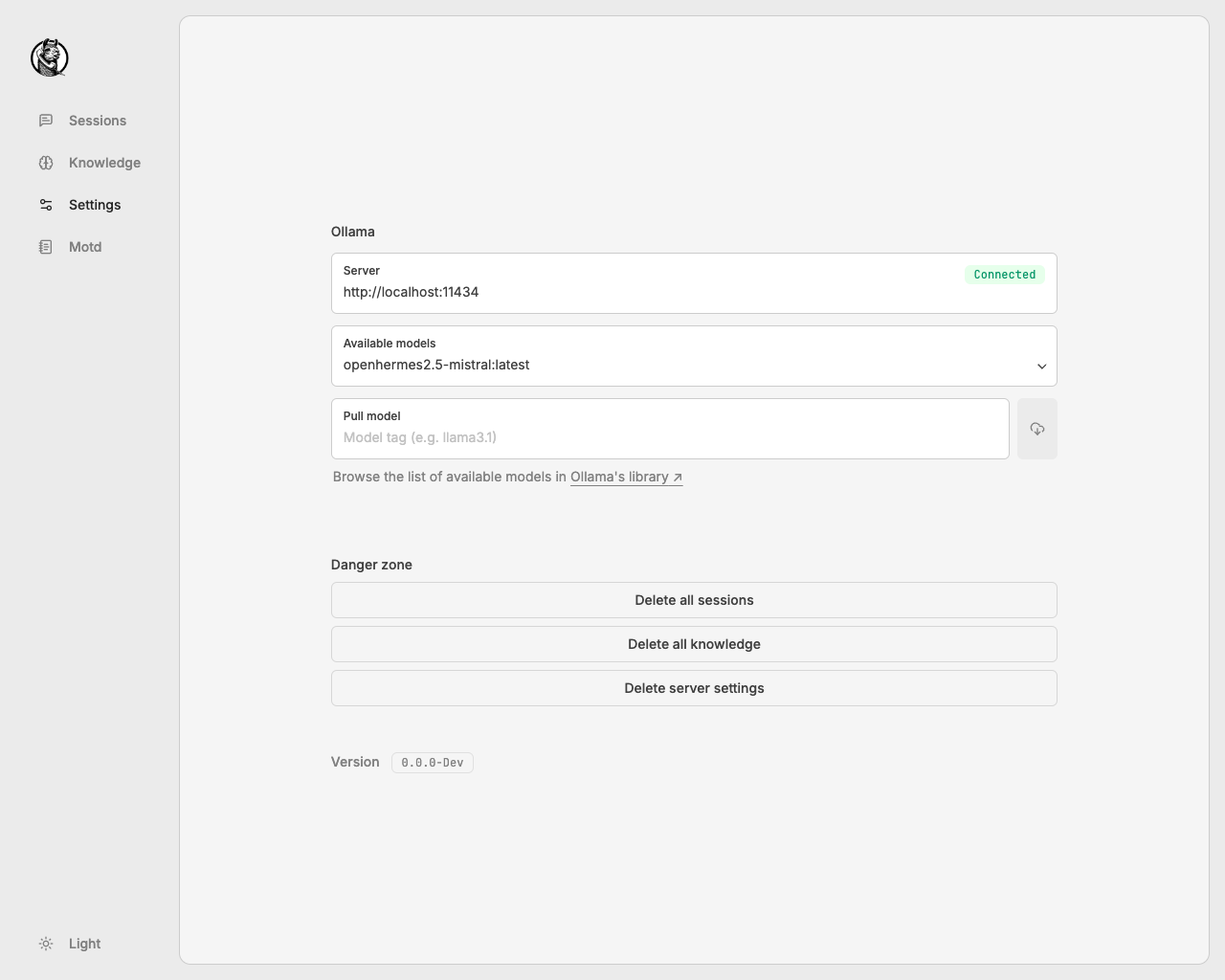

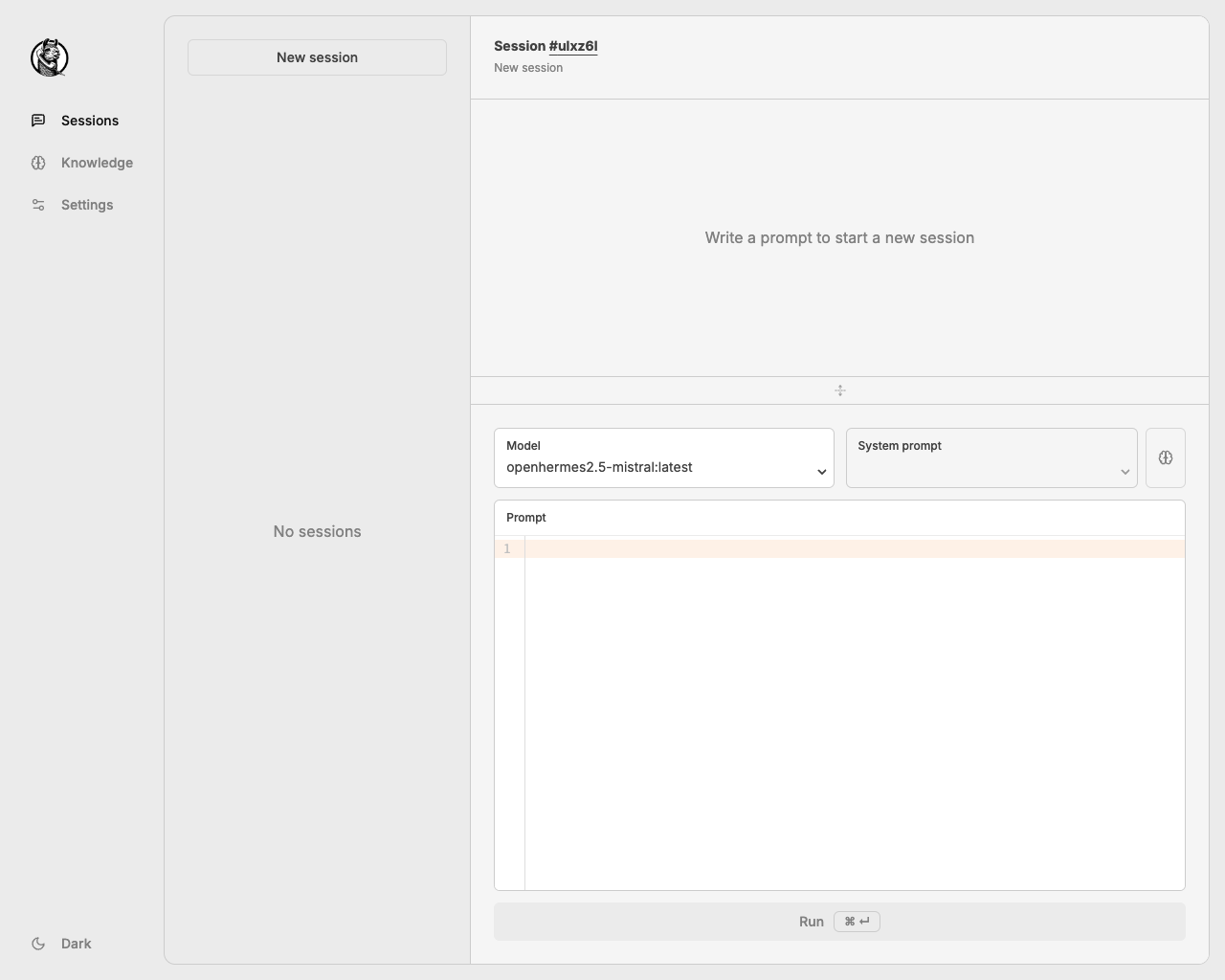

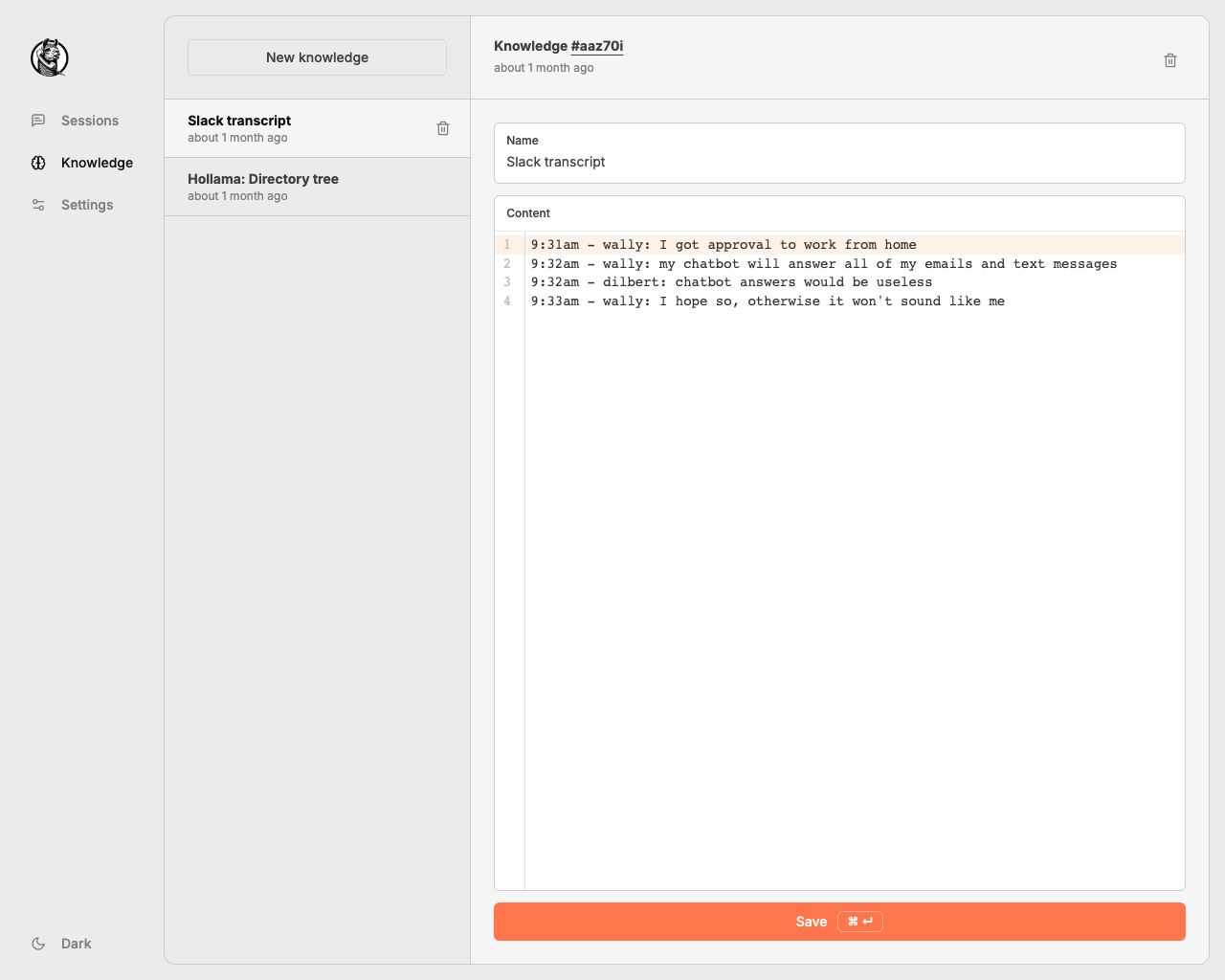

A minimal web-UI for talking to Ollama servers.

- Large prompt fields

- Markdown rendering with syntax highlighting

- Code editor features

- Customizable system prompts

- Copy code snippets, messages or entire sessions

- Retry completions

- Stores data locally in your browser

- Responsive layout

- Light & dark themes

- Download Ollama models directly from the UI

- ⚡️ Live demo

- No sign-up required

- 🖥️ Download for macOS, Windows & Linux

- 🐳 Self-hosting with Docker

- 🐞 Contribute


To host your own Hollama server, install Docker and run the command below in your favorite terminal:

```shell
docker run --rm -d -p 4173:4173 ghcr.io/fmaclen/hollama:latest
```

Then visit http://localhost:4173.
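If you script this setup, you may want to wait until the container is actually serving before you open the browser. Hollama does not ship anything like this. The following is a minimal sketch that assumes `curl` is installed, and the helper name `wait_for_url` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper (not part of Hollama): poll a URL until it answers
# with a successful HTTP status, or give up after a number of attempts.
wait_for_url() {
  url="$1"
  tries="${2:-30}"   # default: up to 30 attempts, one second apart
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -s: silent, -f: treat HTTP error statuses as failures, -o: discard the body
    if curl -sf -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example, run `wait_for_url http://localhost:4173 && echo "Hollama is up"` right after the `docker run` command. The helper returns a non-zero status if the UI never responds, so you can chain it with `&&` in scripts.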

If you are using the publicly hosted version, or if your Docker server runs on a different device from your Ollama server, you'll need to add the domain that Hollama is served from to Ollama's `OLLAMA_ORIGINS` environment variable. Learn more in Ollama's docs.

```shell
OLLAMA_ORIGINS=https://hollama.fernando.is ollama serve
```
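To check that Ollama accepts requests from Hollama's origin, you can send a request with an `Origin` header and inspect the response headers. The sketch below makes three assumptions: Ollama is listening on its default address, `http://localhost:11434`; an allowed origin is reflected in an `Access-Control-Allow-Origin` response header; and the helper name `origin_allowed` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper (not part of Hollama or Ollama): read HTTP response
# headers on stdin and succeed if they allow the origin given as $1.
origin_allowed() {
  origin="$1"
  # HTTP header lines end in CR LF, so strip the CRs first. Header names are
  # case-insensitive, so match with grep -i. Then drop the "Name: " prefix.
  allowed=$(tr -d '\r' | grep -i '^access-control-allow-origin:' \
    | head -n 1 | sed 's/^[^:]*:[[:space:]]*//')
  # Accept either an exact match or the "*" wildcard.
  [ "$allowed" = "$origin" ] || [ "$allowed" = "*" ]
}
```

For example, `curl -s -o /dev/null -D - -H "Origin: https://hollama.fernando.is" http://localhost:11434/api/tags | origin_allowed https://hollama.fernando.is && echo "origin allowed"`. Here `-D -` prints the response headers to stdout and `-o /dev/null` discards the body. If the origin is rejected, the header is missing and the helper returns a non-zero status.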