This is a purely experimental chatbot built on LangChain, Chainlit, and LLMs!

Prerequisites:

- To use LLMs via OpenAI remotely, an OpenAI API key is required.

- To use LLMs via Groq remotely, a Groq API key is required.

- To use Ollama-wrapped LLMs on your own local computer, download and install Ollama.
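Before launching, it can help to confirm that the keys you need are visible to Python. The snippet below is a minimal sketch and not part of this repo: `missing_api_keys` is a hypothetical helper, and the variable names `OPENAI_API_KEY` and `GROQ_API_KEY` are assumptions, so check `.env.example` for the names this project actually expects.

```python
import os

# Assumed variable names; adjust them to match your .env file.
REQUIRED_KEYS = {
    "openai": "OPENAI_API_KEY",
    "groq": "GROQ_API_KEY",
}


def missing_api_keys(providers, env=None):
    """Return the variable names that are unset or empty for the given providers."""
    env = os.environ if env is None else env
    missing = []
    for provider in providers:
        name = REQUIRED_KEYS[provider]
        # Treat whitespace-only values as missing too.
        if not env.get(name, "").strip():
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Only the remote providers are checked here.
    for name in missing_api_keys(["openai", "groq"]):
        print(f"{name} is not set")
```

Pass only the providers you plan to use, for example `missing_api_keys(["groq"])` if you never call OpenAI.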

Installation:

- Clone this repo.

- Create a Python virtual environment via venv, conda, or another environment manager.

- Install the required Python packages: `pip install -r requirements.txt`

- To use LLMs provided remotely by OpenAI or Groq, copy the `.env.example` file, rename the copy to `.env`, and set your own OpenAI and/or Groq API keys. The `LANGCHAIN_API_KEY` is not required for the current functionality. The code in `main.py` currently uses GPT-3.5 and GPT-4 on OpenAI and Mixtral-8x7b, Gemma-7b and Llama3-8b on Groq, so modify `main.py` according to your needs.

- To use Ollama-wrapped LLMs on your own local computer, first download and install Ollama, then run `ollama pull <model-name>` in a terminal to pull the model you need. The code in `main.py` currently includes Llama3, Gemma, Phi-3, CodeGemma and Deepseek-coder, so modify `main.py` according to your needs. When using an Ollama LLM locally, make sure the Ollama app is running. Otherwise, you may see an error like `aiohttp.client_exceptions.ClientConnectorError: Cannot connect to host localhost: xxxxx ssl:default [Connect call failed ...]`. To run LLMs locally, your computer should be powerful enough, with at least 8 CPU cores and 16GB RAM.
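Since a stopped Ollama app shows up as a connection error, a quick reachability check before starting the chatbot can save some debugging. This is a minimal sketch using only the standard library; `is_port_open` is a hypothetical helper, and the host and port are whatever your own Ollama instance listens on (the port shown in your error message).

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (e.g. connection refused) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `is_port_open("localhost", port)` returning `False` suggests the Ollama app is not running. The check only confirms that something accepts TCP connections on that port, not that the requested model has been pulled.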

Launch the chatbot:

In a terminal, run the following commands:

`conda activate <virtual env you created>`

`chainlit run main.py --watch`

See other useful chainlit commands and options via `chainlit --help`:

    (langchian-groq-chainlit) ~/ chainlit --help
    Usage: chainlit [OPTIONS] COMMAND [ARGS]...

    Options:
      --version  Show the version and exit.
      --help     Show this message and exit.

    Commands:
      create-secret
      hello
      init
      lint-translations
      run

Options of the command `chainlit run`:

    (langchian-groq-chainlit) ~/ chainlit run --help
    Usage: chainlit run [OPTIONS] TARGET

    Options:
      -w, --watch     Reload the app when the module changes
      -h, --headless  Will prevent to auto open the app in the browser
      -d, --debug     Set the log level to debug
      -c, --ci        Flag to run in CI mode
      --no-cache      Useful to disable third parties cache, such as langchain.
      --host TEXT     Specify a different host to run the server on
      --port TEXT     Specify a different port to run the server on
      --help          Show this message and exit.

You can use `--port` to specify a port; the default is 8000.
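If you launch the app from a script rather than typing the command by hand, the options listed above can be assembled programmatically. The following is a minimal sketch: `build_chainlit_command` is a hypothetical helper that is not part of this repo or of Chainlit, and port 8001 is just an arbitrary example value.

```python
import shlex


def build_chainlit_command(target="main.py", watch=False, headless=False,
                           port=None, host=None):
    """Assemble the argument list for `chainlit run` from a few of its options."""
    cmd = ["chainlit", "run", target]
    if watch:
        cmd.append("--watch")
    if headless:
        cmd.append("--headless")
    if host is not None:
        cmd += ["--host", str(host)]
    if port is not None:
        cmd += ["--port", str(port)]
    return cmd


if __name__ == "__main__":
    # Print the command instead of running it, so it can be inspected first.
    print(shlex.join(build_chainlit_command(watch=True, port=8001)))
```

The returned list can be passed directly to `subprocess.run` once the virtual environment is active. Returning a list rather than a single string avoids shell quoting issues.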

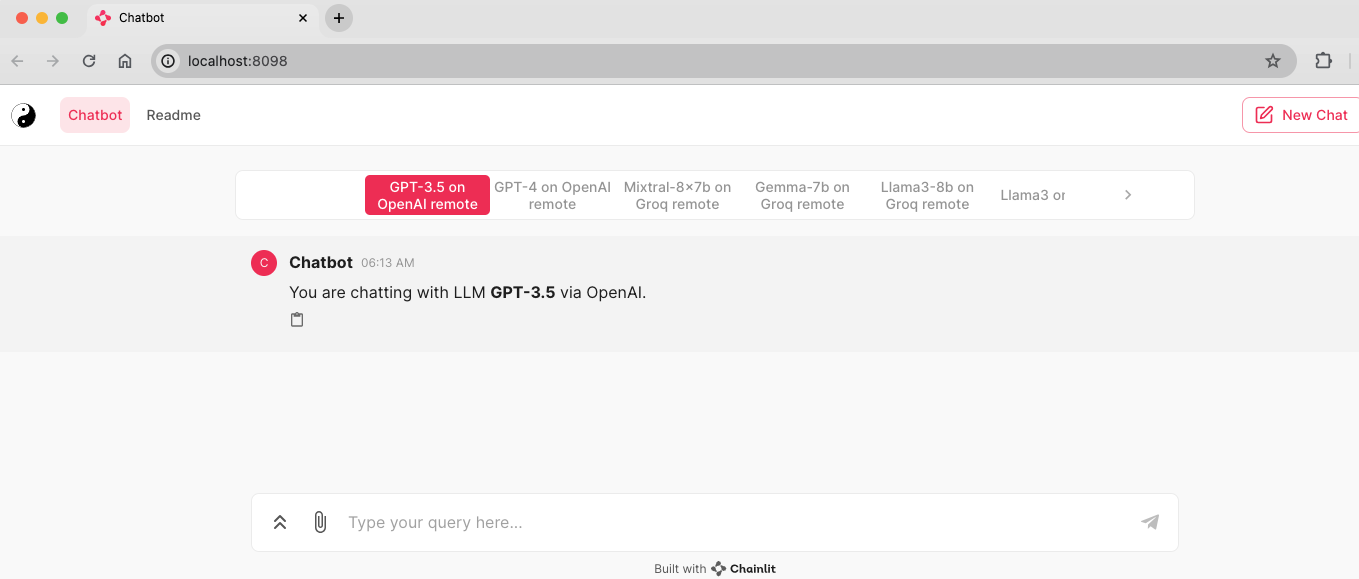

If everything going well you should see a web browser window or tab popped out like below

Now you can chat with the chatbot! Enjoy! Any questions/comments/suggestions would be appreciated very much!