Lithops is a Python multi-cloud distributed computing framework. It allows you to run unmodified local Python code at massive scale on the main serverless computing platforms. Lithops delivers the user’s code to the cloud without requiring knowledge of how it is deployed and run. Moreover, its multicloud-agnostic architecture ensures portability across cloud providers, overcoming vendor lock-in.

Lithops is especially suited for highly parallel programs with little or no need for communication between processes, but it also supports parallel applications that need to share state among processes. Examples of applications that run with Lithops include Monte Carlo simulations, deep learning and machine learning processes, metabolomics computations, and geospatial analytics, to name a few.


Install Lithops from the PyPI repository:

$ pip install lithops


Execute a Hello World test function:

$ lithops test

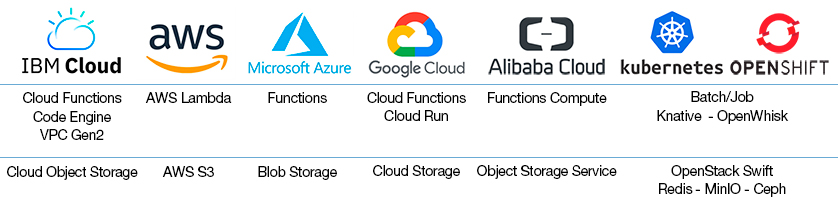

Lithops provides an extensible backend architecture (compute and storage) that is designed to work with different cloud providers and on-premise backends. This means you can write code in Python and run it unmodified on IBM Cloud, AWS, Azure, Google Cloud, and Alibaba Aliyun. Moreover, it supports running jobs on vanilla Kubernetes, or through a Kubernetes serverless framework such as Knative or OpenWhisk.

Follow these instructions to configure your compute and storage backends.
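Besides the configuration file, a Lithops configuration can be passed to an executor as a Python dictionary. The sketch below assumes the Lithops 3.x key layout (`lithops.backend` and `lithops.storage`) and selects the `localhost` compute and storage backends, which run functions as local processes and need no cloud credentials. A cloud backend (for example `aws_lambda` with `aws_s3`) would also need that provider's credentials section, omitted here. `run_hello` is a hypothetical helper for illustration; check the configuration docs for the exact keys supported by your Lithops version.

```python
# Minimal Lithops configuration as a dict (assumes Lithops 3.x key names).
config = {
    'lithops': {
        'backend': 'localhost',  # compute backend: run functions as local processes
        'storage': 'localhost',  # storage backend: keep intermediate data on local disk
    },
}


def hello(name):
    """The function to run; Lithops serializes it and ships it to the backend."""
    return f'Hello {name}!'


def run_hello(config, name='World'):
    """Hypothetical helper: run `hello` once on the backend described by `config`."""
    from lithops import FunctionExecutor  # imported lazily; needs `pip install lithops`

    with FunctionExecutor(config=config) as fexec:
        future = fexec.call_async(hello, name)  # schedule a single invocation
        return future.result()  # block until the result is available
```

With Lithops installed, `run_hello(config)` should return `'Hello World!'`; pointing `config` at a different backend leaves `hello` itself unchanged.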

Lithops ships with two high-level Compute APIs (the Futures API and the Multiprocessing API) and two high-level Storage APIs (the Storage API and the Storage OS API).
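One of the compute APIs, the Multiprocessing API, mirrors Python's `multiprocessing` module, so porting an existing `Pool.map` program is mostly a matter of changing the import. The sketch below assumes `lithops.multiprocessing.Pool` is importable once Lithops is installed. Purely so that the example also runs without Lithops, it falls back to the standard library's `ThreadPool`, which exposes the same `Pool` interface but runs locally in threads; that fallback is not part of Lithops. `double` and `run` are hypothetical names.

```python
try:
    # With Lithops installed, each task runs as a function on the configured backend.
    from lithops.multiprocessing import Pool
except ImportError:
    # Local fallback (not Lithops): same Pool interface, executed in threads.
    from multiprocessing.pool import ThreadPool as Pool


def double(i):
    """An independent task with no shared state, the kind of work Lithops parallelizes well."""
    return i * 2


def run(data):
    """Hypothetical helper: apply `double` to every element of `data` in parallel."""
    with Pool() as pool:
        return pool.map(double, data)  # results come back in input order


results = run(range(8))
print(results)
```

The application code (`double` and the `Pool.map` call) is identical in both branches; only the import decides whether the work runs locally or on the cloud backend.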

You can find more usage examples in the examples folder.

Lithops supports three execution modes: localhost, serverless, and standalone. The execution mode determines where and how your functions are executed.
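Each mode has a dedicated executor class in the `lithops` package (`LocalhostExecutor`, `ServerlessExecutor`, and `StandaloneExecutor`, alongside the generic `FunctionExecutor`). The sketch below selects one by mode name; `EXECUTOR_CLASSES`, `executor_name`, and `map_squares` are hypothetical helpers, and it assumes the serverless and standalone executors read their backend settings from your Lithops configuration.

```python
# Hypothetical mapping from execution mode to the Lithops executor class name.
EXECUTOR_CLASSES = {
    'localhost': 'LocalhostExecutor',    # functions run as processes on this machine
    'serverless': 'ServerlessExecutor',  # functions run on a FaaS backend (e.g. AWS Lambda)
    'standalone': 'StandaloneExecutor',  # functions run on virtual machines (e.g. AWS EC2)
}


def executor_name(mode):
    """Return the executor class name for `mode`, rejecting unknown modes early."""
    try:
        return EXECUTOR_CLASSES[mode]
    except KeyError:
        raise ValueError(f'unknown execution mode: {mode!r}') from None


def square(x):
    return x * x


def map_squares(mode, data):
    """Hypothetical helper: square every element of `data` in the given mode."""
    import lithops  # imported lazily so the helpers above work without Lithops

    executor_cls = getattr(lithops, executor_name(mode))
    with executor_cls() as fexec:
        futures = fexec.map(square, data)  # one function invocation per element
        return fexec.get_result(futures)  # wait for all invocations and collect results
```

For example, `map_squares('localhost', [1, 2, 3])` should return `[1, 4, 9]` without any cloud account, while `'serverless'` and `'standalone'` additionally require the corresponding backend to be configured. The function being mapped stays the same in every mode.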

For documentation on using Lithops, see the User guide.

If you are interested in contributing, see CONTRIBUTING.md and DEVELOPMENT.md.

- Serverless Without Constraints

- Lithops, a Multi-cloud Serverless Programming Framework

- CNCF Webinar - Toward Hybrid Cloud Serverless Transparency with Lithops Framework

- Using Serverless to Run Your Python Code on 1000 Cores by Changing Two Lines of Code

- Decoding dark molecular matter in spatial metabolomics with IBM Cloud Functions

- Your easy move to serverless computing and radically simplified data processing - Strata Data Conference, NY 2019

- Ants, serverless computing, and simplified data processing

- Speed up data pre-processing with Lithops in deep learning

- Predicting the future with Monte Carlo simulations over IBM Cloud Functions

- Process large data sets at massive scale with Lithops over IBM Cloud Functions

- Industrial project in Technion on Lithops

- Towards Multicloud Access Transparency in Serverless Computing - IEEE Software 2021

- Primula: a Practical Shuffle/Sort Operator for Serverless Computing - ACM/IFIP International Middleware Conference 2020. See presentation here

- Bringing scaling transparency to Proteomics applications with serverless computing - 6th International Workshop on Serverless Computing (WoSC6) 2020. See presentation here

- Serverless data analytics in the IBM Cloud - ACM/IFIP International Middleware Conference 2018

This project has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No 825184.