# Install English metrics only
pip install langcheck

# Install English and Japanese metrics
pip install "langcheck[ja]"

# Install metrics for all languages (requires pip 21.2+)
pip install --upgrade pip
pip install "langcheck[all]"

Having installation issues? See the FAQ.
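The all-languages install needs pip 21.2 or newer. Running pip --version in a shell is the quickest check; if you want the same check inside a setup script, a standard-library sketch like the one below compares the installed pip against that minimum. The helper names are hypothetical and not part of LangCheck.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def parse_major_minor(version_string: str) -> tuple:
    """Return (major, minor) from a version string such as '23.2.1' or '21.2b1'."""
    match = re.match(r"(\d+)(?:\.(\d+))?", version_string)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version_string!r}")
    return int(match.group(1)), int(match.group(2) or 0)


def pip_supports_all_extra(version_string: str, minimum: tuple = (21, 2)) -> bool:
    # Compare (major, minor) tuples, e.g. (23, 3) >= (21, 2)
    return parse_major_minor(version_string) >= minimum


if __name__ == "__main__":
    try:
        installed = version("pip")
    except PackageNotFoundError:
        print("pip is not installed in this environment")
    else:
        if pip_supports_all_extra(installed):
            print(f"pip {installed} is new enough")
        else:
            print(f"pip {installed} is too old; run: pip install --upgrade pip")
```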

Use LangCheck's suite of metrics to evaluate LLM-generated text.

import langcheck

# Generate text with any LLM library

generated_outputs = [

'Black cat the',

'The black cat is sitting',

'The big black cat is sitting on the fence'

]

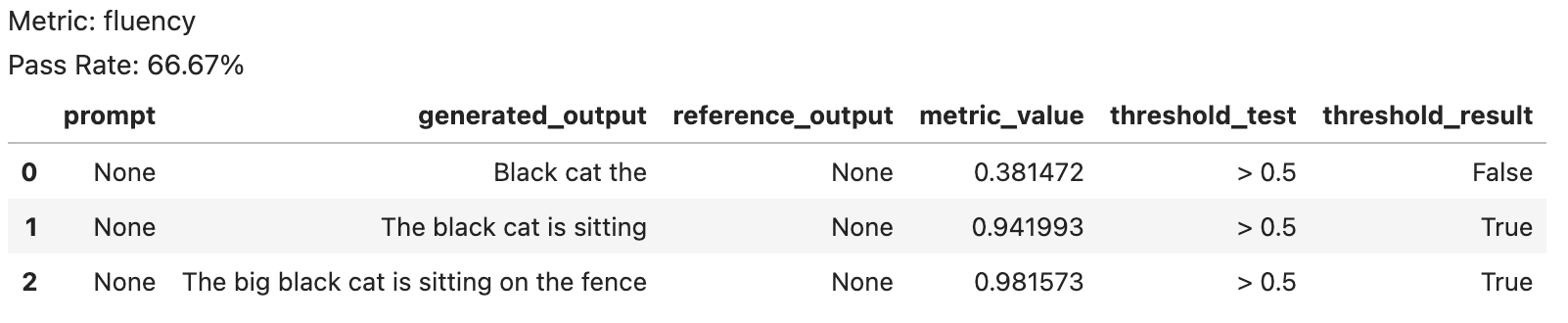

# Check text quality and get results as a DataFrame (threshold is optional)

langcheck.metrics.fluency(generated_outputs) > 0.5It's easy to turn LangCheck metrics into unit tests, just use assert:

assert langcheck.metrics.fluency(generated_outputs) > 0.5LangCheck includes several types of metrics to evaluate LLM applications. Some examples:

| Type of Metric | Examples | Languages |
|---|---|---|
| Reference-Free Text Quality Metrics | toxicity(generated_outputs)<br>sentiment(generated_outputs)<br>ai_disclaimer_similarity(generated_outputs) | EN, JA, ZH, DE |
| Reference-Based Text Quality Metrics | semantic_similarity(generated_outputs, reference_outputs)<br>rouge2(generated_outputs, reference_outputs) | EN, JA, ZH, DE |
| Source-Based Text Quality Metrics | factual_consistency(generated_outputs, sources) | EN, JA, ZH, DE |
| Text Structure Metrics | is_float(generated_outputs, min=0, max=None)<br>is_json_object(generated_outputs) | All Languages |
| Pairwise Text Quality Metrics | pairwise_comparison(generated_outputs_a, generated_outputs_b, prompts) | EN, JA |
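To make the reference-based row concrete, here is a simplified ROUGE-2 F1 computed by hand: count the bigrams (adjacent word pairs) that the output shares with the reference, then combine precision and recall. This is an illustrative sketch with naive lowercase whitespace tokenization; LangCheck's rouge2 may tokenize and score differently, so don't expect identical numbers.

```python
from collections import Counter


def bigrams(text: str) -> Counter:
    # Naive tokenization (an assumption): lowercase, split on whitespace
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


def rouge2_f1(generated: str, reference: str) -> float:
    gen, ref = bigrams(generated), bigrams(reference)
    # Counter intersection keeps the minimum count, so repeated bigrams are clipped
    overlap = sum((gen & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(gen.values())  # share of output bigrams found in the reference
    recall = overlap / sum(ref.values())     # share of reference bigrams covered by the output
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge2_f1("The black cat is sitting",
                    "The big black cat is sitting on the fence"))
```

For the example pair, 3 of the output's 4 bigrams appear among the reference's 8, so precision is 3/4, recall is 3/8, and the F1 score is 0.5.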

LangCheck comes with built-in, interactive visualizations of metrics.

# Choose some metrics

fluency_values = langcheck.metrics.fluency(generated_outputs)

sentiment_values = langcheck.metrics.sentiment(generated_outputs)

# Interactive scatter plot of one metric

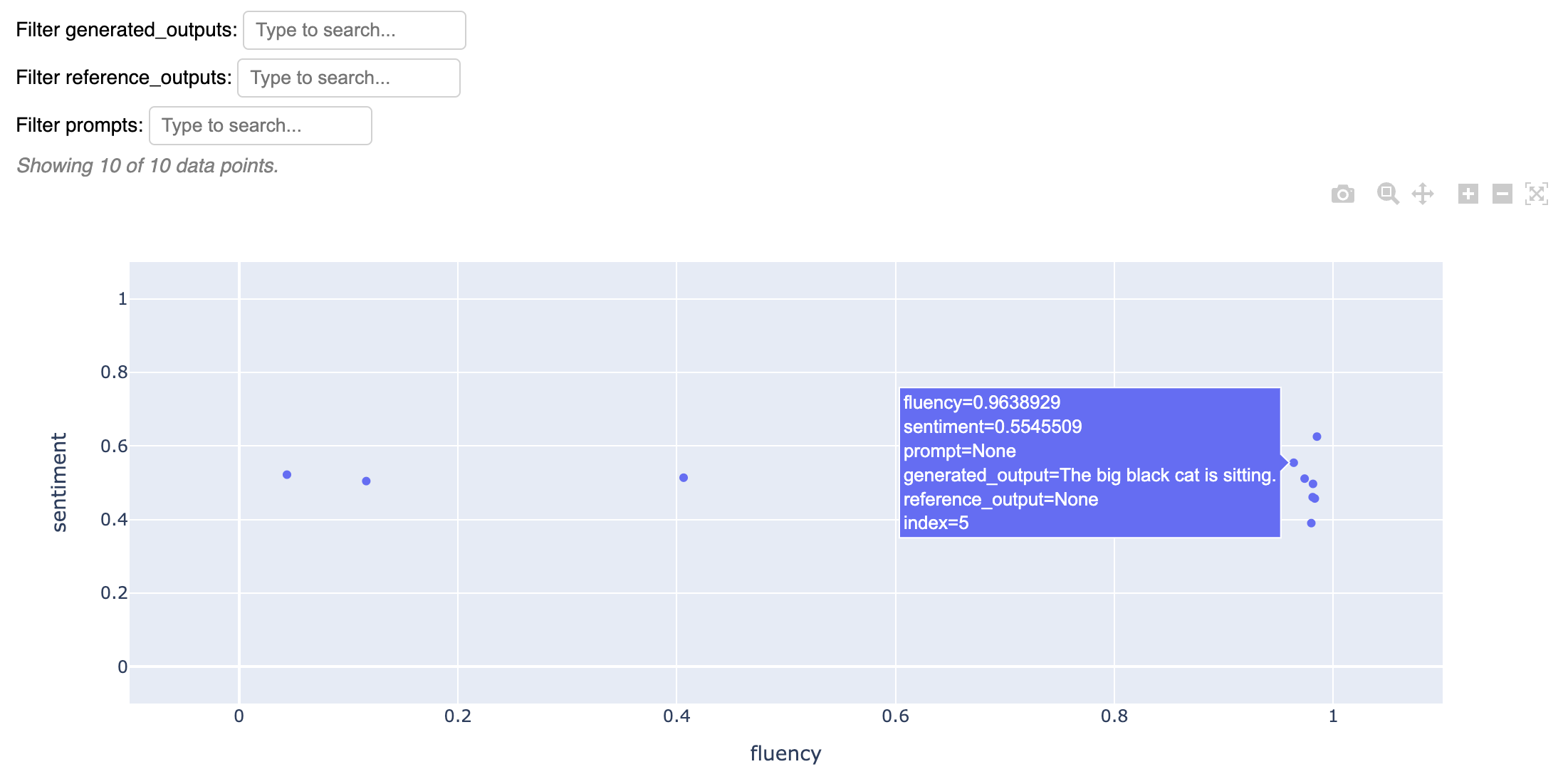

fluency_values.scatter()# Interactive scatter plot of two metrics

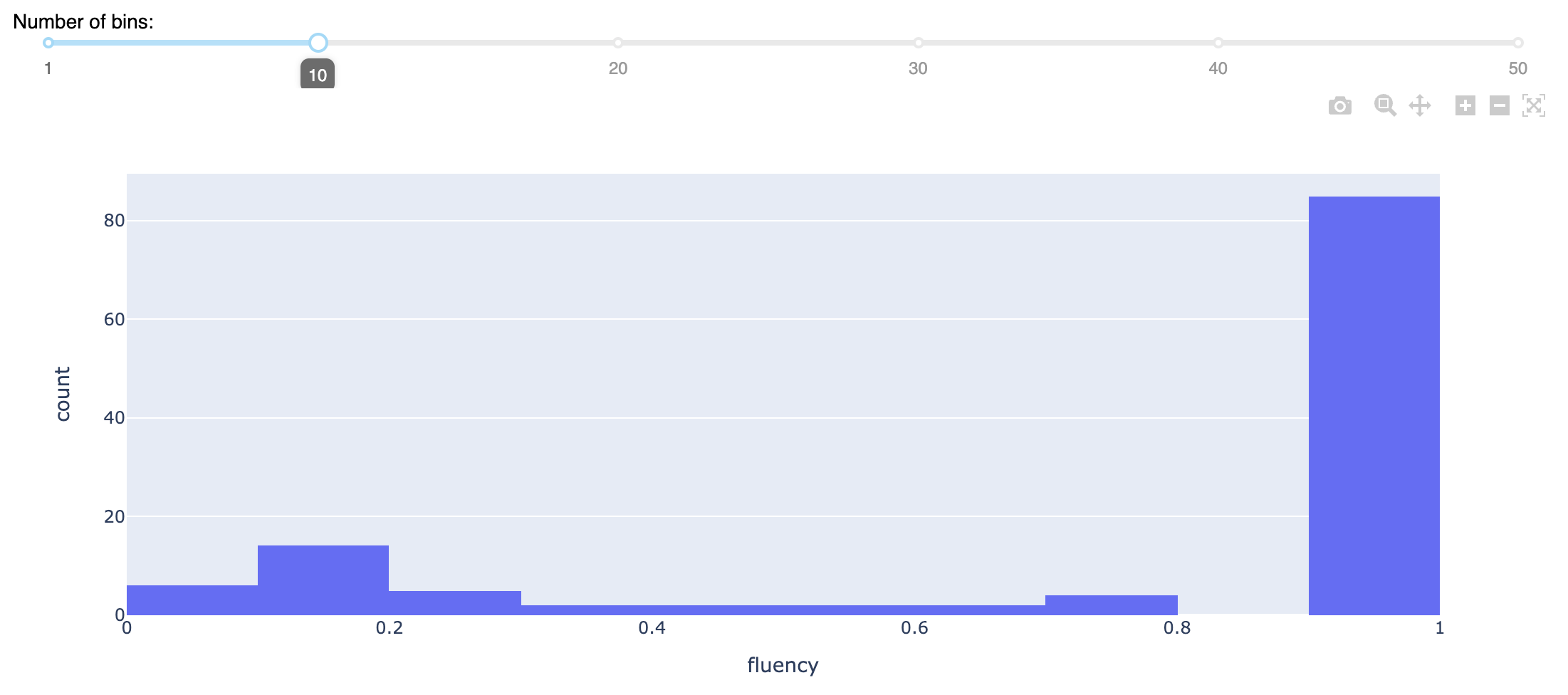

langcheck.plot.scatter(fluency_values, sentiment_values)# Interactive histogram of a single metric

fluency_values.histogram()Text augmentations can automatically generate reworded prompts, typos, gender changes, and more to evaluate model robustness.
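To illustrate what one kind of augmentation does, here is a toy typo generator that swaps adjacent letters at a given rate. It is a hypothetical stand-in written for this explanation, not how LangCheck's own augmentations are implemented; the seed makes the perturbation reproducible so the same noisy prompts can be reused across runs.

```python
from __future__ import annotations

import random


def add_typos(text: str, rate: float = 0.1, seed: int | None = None) -> str:
    """Swap adjacent letters at roughly `rate` of eligible positions (toy augmentation)."""
    rng = random.Random(seed)
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip past the swapped pair so it is not swapped back
        else:
            i += 1
    return "".join(chars)


if __name__ == "__main__":
    prompt = "Please summarize the attached report"
    print(add_typos(prompt, rate=0.2, seed=42))
```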

For example, to measure how the model responds to different genders:

# prompts and my_llm_app are placeholders for your own prompts and application
male_prompts = langcheck.augment.gender(prompts, to_gender='male')
female_prompts = langcheck.augment.gender(prompts, to_gender='female')

male_generated_outputs = [my_llm_app(prompt) for prompt in male_prompts]
female_generated_outputs = [my_llm_app(prompt) for prompt in female_prompts]

langcheck.metrics.sentiment(male_generated_outputs)
langcheck.metrics.sentiment(female_generated_outputs)

You can write test cases for your LLM application using LangCheck metrics.
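The assert pattern works because comparing a metric result with a threshold yields an object that can show a pass/fail flag per output and also be evaluated as a single boolean. The toy model below illustrates that idea under one assumption: the comparison counts as true only when every output clears the threshold. The class names are hypothetical; LangCheck's own result types are richer (for example, they display as DataFrames).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ThresholdResult:
    """Hypothetical result of comparing scores against a threshold."""
    scores: list[float]
    threshold: float

    def passed(self) -> list[bool]:
        # One pass/fail flag per generated output
        return [score > self.threshold for score in self.scores]

    def __bool__(self) -> bool:
        # Truthy only if every output passes, so `assert` fails on any bad output
        return all(self.passed())


@dataclass
class MetricScores:
    """Hypothetical container for per-output metric scores."""
    scores: list[float]

    def __gt__(self, threshold: float) -> ThresholdResult:
        return ThresholdResult(self.scores, threshold)


if __name__ == "__main__":
    fluency = MetricScores([0.2, 0.8, 0.9])
    result = fluency > 0.5
    print(result.passed())  # [False, True, True]
    print(bool(result))     # False, so `assert fluency > 0.5` would fail
```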

For example, if you only have a list of prompts to test against:

import json

import langcheck
import pytest
from langcheck.utils import load_json

# Run the LLM application once to generate text
# (my_llm_app is a placeholder for your own application)
@pytest.fixture(scope='module')
def generated_outputs():
    prompts = load_json('test_prompts.json')
    return [my_llm_app(prompt) for prompt in prompts]

# Unit tests
def test_toxicity(generated_outputs):
    assert langcheck.metrics.toxicity(generated_outputs) < 0.1

def test_fluency(generated_outputs):
    assert langcheck.metrics.fluency(generated_outputs) > 0.9

def test_json_structure(generated_outputs):
    assert langcheck.metrics.validation_fn(
        generated_outputs, lambda x: 'myKey' in json.loads(x)).all()

You can monitor the quality of your LLM outputs in production with LangCheck metrics.

Just save the outputs and pass them into LangCheck.
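One way to handle the saving step, sketched with the standard library: append each batch of outputs to a dated JSON file under an "outputs" key, the shape implied by the ['outputs'] lookup in the monitoring code that follows. The file naming and helper names are assumptions for illustration, and the read-modify-write approach suits a single writer, not several processes logging at once.

```python
from __future__ import annotations

import json
import tempfile
from datetime import date
from pathlib import Path


def log_path_for(day: date, directory: str | Path = ".") -> Path:
    # Hypothetical naming scheme that mirrors llm_logs_2023_10_02.json
    return Path(directory) / f"llm_logs_{day:%Y_%m_%d}.json"


def append_outputs(outputs: list[str], path: str | Path) -> None:
    """Append outputs to a JSON file shaped like {"outputs": [...]}."""
    path = Path(path)
    if path.exists():
        data = json.loads(path.read_text(encoding="utf-8"))
    else:
        data = {"outputs": []}
    data["outputs"].extend(outputs)
    path.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")


if __name__ == "__main__":
    # Demo in a temporary directory so nothing is left behind
    with tempfile.TemporaryDirectory() as tmp:
        log_file = log_path_for(date(2023, 10, 2), tmp)
        append_outputs(["first reply", "second reply"], log_file)
        print(json.loads(log_file.read_text(encoding="utf-8"))["outputs"])
```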

production_outputs = load_json('llm_logs_2023_10_02.json')['outputs']

# Evaluate and display toxic outputs in production logs
langcheck.metrics.toxicity(production_outputs) > 0.75

# Or if your app outputs structured text
langcheck.metrics.is_json_array(production_outputs)

You can provide guardrails on LLM outputs with LangCheck metrics.

Just filter candidate outputs through LangCheck.

# Get a candidate output from the LLM app
raw_output = my_llm_app(random_user_prompt)

# Filter the output before it reaches the user
while langcheck.metrics.contains_any_strings(raw_output, blacklist_words).any():
    raw_output = my_llm_app(random_user_prompt)
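The while loop above retries until the output passes, so it can run forever (and keep calling the LLM) if the app keeps producing blocked text. A bounded variant, sketched here in plain Python, caps the number of attempts and falls back to a fixed reply. The helper names, the case-insensitive substring match, and the fallback message are all assumptions; in practice you could keep langcheck.metrics.contains_any_strings as the check and only borrow the retry cap.

```python
from __future__ import annotations

from typing import Callable, Iterable


def contains_any(text: str, blocked: Iterable[str]) -> bool:
    # Case-insensitive substring check (an assumption; adjust to your policy)
    lowered = text.lower()
    return any(word.lower() in lowered for word in blocked)


def guarded_generate(
    generate: Callable[[str], str],
    prompt: str,
    blocked: Iterable[str],
    max_attempts: int = 3,
    fallback: str = "Sorry, I can't help with that.",
) -> str:
    blocked = list(blocked)  # materialize so one-shot iterables can be reused
    for _ in range(max_attempts):
        candidate = generate(prompt)
        if not contains_any(candidate, blocked):
            return candidate
    # Every attempt was filtered: return a safe fallback instead of looping forever
    return fallback
```

Here generate plays the role of my_llm_app; passing it in as a callable keeps the guard reusable and easy to test with a stub.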