Real-time transcription using faster-whisper

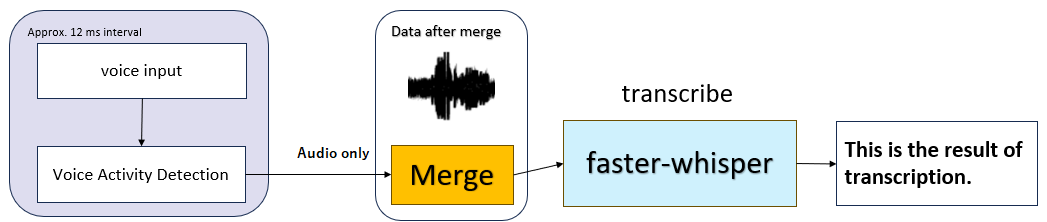

Audio is captured from a microphone with sounddevice. Silero VAD (Voice Activity Detection) detects the silent parts, and the speech between them is treated as a single piece of voice data. That audio data is then converted to text with faster-whisper.
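The grouping step can be illustrated with a minimal sketch. It assumes the VAD yields one speech probability per audio frame; the function name `group_utterances` and the parameters `threshold` and `max_silence_frames` are hypothetical and are not taken from this project's code.

```python
from typing import Iterable, List, Tuple


def group_utterances(
    speech_probs: Iterable[float],
    threshold: float = 0.5,        # assumed cut-off between speech and silence
    max_silence_frames: int = 3,   # assumed number of silent frames that ends an utterance
) -> List[Tuple[int, int]]:
    """Return (start_frame, end_frame_exclusive) spans, one per utterance."""
    probs = list(speech_probs)
    spans: List[Tuple[int, int]] = []
    start = None   # index of the first speech frame of the current utterance
    silence = 0    # consecutive silent frames seen since the last speech frame

    for i, p in enumerate(probs):
        if p >= threshold:
            if start is None:
                start = i
            silence = 0
        elif start is not None:
            silence += 1
            if silence >= max_silence_frames:
                # Close the utterance just after its last speech frame.
                spans.append((start, i - silence + 1))
                start = None
                silence = 0

    if start is not None:
        # The stream ended while an utterance was still open.
        spans.append((start, len(probs) - silence))
    return spans


probs = [0.1, 0.9, 0.8, 0.2, 0.9, 0.1, 0.1, 0.1, 0.7, 0.1]
print(group_utterances(probs))  # [(1, 5), (8, 9)]
```

A short pause (one silent frame here) stays inside the utterance, while a longer pause closes it, so each span can be sent to the transcriber as one unit.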

The HTML-based GUI lets you check the transcription results and configure faster-whisper in detail.

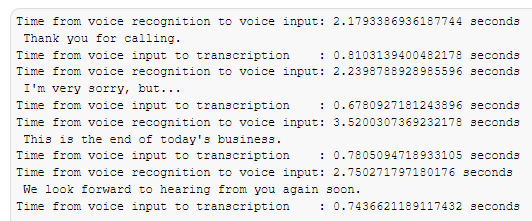

If the sentences are well separated, the transcription takes less than a second.

Large-v2 model

Executed with CUDA 11.7 on an NVIDIA GeForce RTX 3060 12GB.
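For reference, loading the large-v2 model on CUDA through the faster-whisper Python API might look like the sketch below. `WhisperModel` and its `transcribe` method belong to faster-whisper; the helper names `load_model` and `transcribe_file`, and the choice of `float16` as the compute type, are assumptions for illustration and may differ from what this app does internally.

```python
def load_model(model_size_or_path="large-v2", device="cuda", compute_type="float16"):
    """Create a faster-whisper model (compute_type is an assumed setting)."""
    # Imported lazily so this module can be read without faster-whisper installed.
    from faster_whisper import WhisperModel

    return WhisperModel(model_size_or_path, device=device, compute_type=compute_type)


def transcribe_file(model, path):
    """Return a list of (start_seconds, end_seconds, text) tuples for one audio file."""
    # transcribe() returns a lazy iterator of segments plus an info object;
    # iterating over the segments is what actually runs the transcription.
    segments, _info = model.transcribe(path, word_timestamps=True)
    return [(seg.start, seg.end, seg.text.strip()) for seg in segments]
```

Typical use would be `transcribe_file(load_model(), "sample.wav")`, where `sample.wav` is a placeholder file name.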

- `pip install .`

- `python -m speech_to_text`

- Select "App Settings" and configure the settings.

- Select "Model Settings" and configure the settings.

- Select "Transcribe Settings" and configure the settings.

- Select "VAD Settings" and configure the settings.

- Start Transcription

If you use the OpenAI API for text proofreading, set OPENAI_API_KEY as an environment variable.
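As a quick check that the variable is visible to Python before starting the app, something like the following sketch could be used. The helper name `get_openai_api_key` is hypothetical; only the variable name `OPENAI_API_KEY` comes from this README.

```python
import os


def get_openai_api_key(env=None):
    """Return the API key from the environment, or None if it is unset or blank."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    return key or None


if get_openai_api_key() is None:
    print("OPENAI_API_KEY is not set; text proofreading will not be available.")
```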

- If you select local_model in "Model size or path", the model with the same name in the local folder will be referenced.

- Add generation of audio files from input sound.

- Add synchronization of audio files with transcription.

Audio and text highlighting are linked.

- Add transcription from audio files (WAV format only).

- Add sending of transcription results from a WebSocket server to a WebSocket client.

Example of use: displaying subtitles in live streaming.

- Add generation of SRT files from transcription results.

- Add support for mp3, ogg, and other audio files.

Depends on Soundfile support.

- Add setting to include non-speech data in buffer.

While this will increase memory usage, it will improve transcription accuracy.

- Add non-speech threshold setting.

- Add text proofreading option via OpenAI API.

Transcription results can be proofread.

- Add feature where audio and word highlighting are synchronized.

This applies only if Word Timestamps is true.

- Save and load previous settings.

- Use Silero VAD

- Allow local parameters to be set from the GUI.
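To illustrate the SRT export listed above, here is a minimal sketch of turning timed segments into SRT text. It assumes each segment is a `(start_seconds, end_seconds, text)` tuple; the function names `format_timestamp` and `to_srt` are hypothetical and do not describe this project's actual implementation.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = int(round(seconds * 1000))
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Build SRT text from an iterable of (start, end, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each cue: sequence number, time range, then the subtitle text.
        blocks.append(
            f"{index}\n"
            f"{format_timestamp(start)} --> {format_timestamp(end)}\n"
            f"{text.strip()}\n"
        )
    # Cues are separated by a blank line.
    return "\n".join(blocks)


print(to_srt([(0.0, 1.25, " Hello "), (1.25, 2.0, "world")]))
```

The result can be written to a `.srt` file with UTF-8 encoding and opened in most video players.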