- Team name: Science AIO

- My roles: Leader, Researcher, Developer

```bash
conda create -n py38 python=3.8
conda activate py38
pip install git+https://github.com/openai/CLIP.git
pip install -r requirements.txt
```
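After installing, a quick sanity check is to confirm the interpreter version and that the key packages can be found. This is a minimal sketch: it assumes the OpenAI CLIP package installs a module named `clip`, and that `faiss` and `torch` are provided by `requirements.txt` and CLIP's own dependencies. Adjust `REQUIRED_MODULES` if your requirements differ.

```python
import importlib.util
import sys

# Modules the app is assumed to need. "clip" comes from the OpenAI CLIP repo;
# "faiss" and "torch" are assumed to come from requirements.txt / CLIP's deps.
REQUIRED_MODULES = ("clip", "faiss", "torch")


def check_environment(modules=REQUIRED_MODULES, min_python=(3, 8)):
    """Return (python_ok, {module_name: is_importable})."""
    python_ok = sys.version_info[:2] >= min_python
    # find_spec locates a top-level module without actually importing it.
    found = {name: importlib.util.find_spec(name) is not None for name in modules}
    return python_ok, found


if __name__ == "__main__":
    ok, found = check_environment()
    print(f"Python {sys.version.split()[0]} (>= 3.8: {ok})")
    for name, present in found.items():
        print(f"  {name}: {'found' if present else 'MISSING'}")
```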

- Download all the images here. We applied several image compression methods to reduce the full image set to about 6 GB.

- `account.txt`: account information used for login and submission.

- `dataframe_Lxx.csv`: detection database.
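Since the detection database is split across several `dataframe_Lxx.csv` files, a small loader that reads them all at once can be handy. This is a sketch using only the standard library; the glob pattern and the added `source_file` key are illustrative assumptions, and no particular column layout is assumed beyond each file having a header row.

```python
import csv
import glob
import os


def load_detection_tables(folder, pattern="dataframe_L*.csv"):
    """Read every CSV matching `pattern` in `folder` into one list of dicts.

    Each row keeps its original columns (from the file's header row) plus a
    hypothetical "source_file" key recording which file it came from.
    """
    rows = []
    for path in sorted(glob.glob(os.path.join(folder, pattern))):
        with open(path, newline="", encoding="utf-8") as f:
            for row in csv.DictReader(f):
                row["source_file"] = os.path.basename(path)
                rows.append(row)
    return rows


if __name__ == "__main__":
    detections = load_detection_tables(".")
    print(f"Loaded {len(detections)} rows")
```

Files are read in sorted name order, so rows from `dataframe_L01.csv` come before those from `dataframe_L02.csv`.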

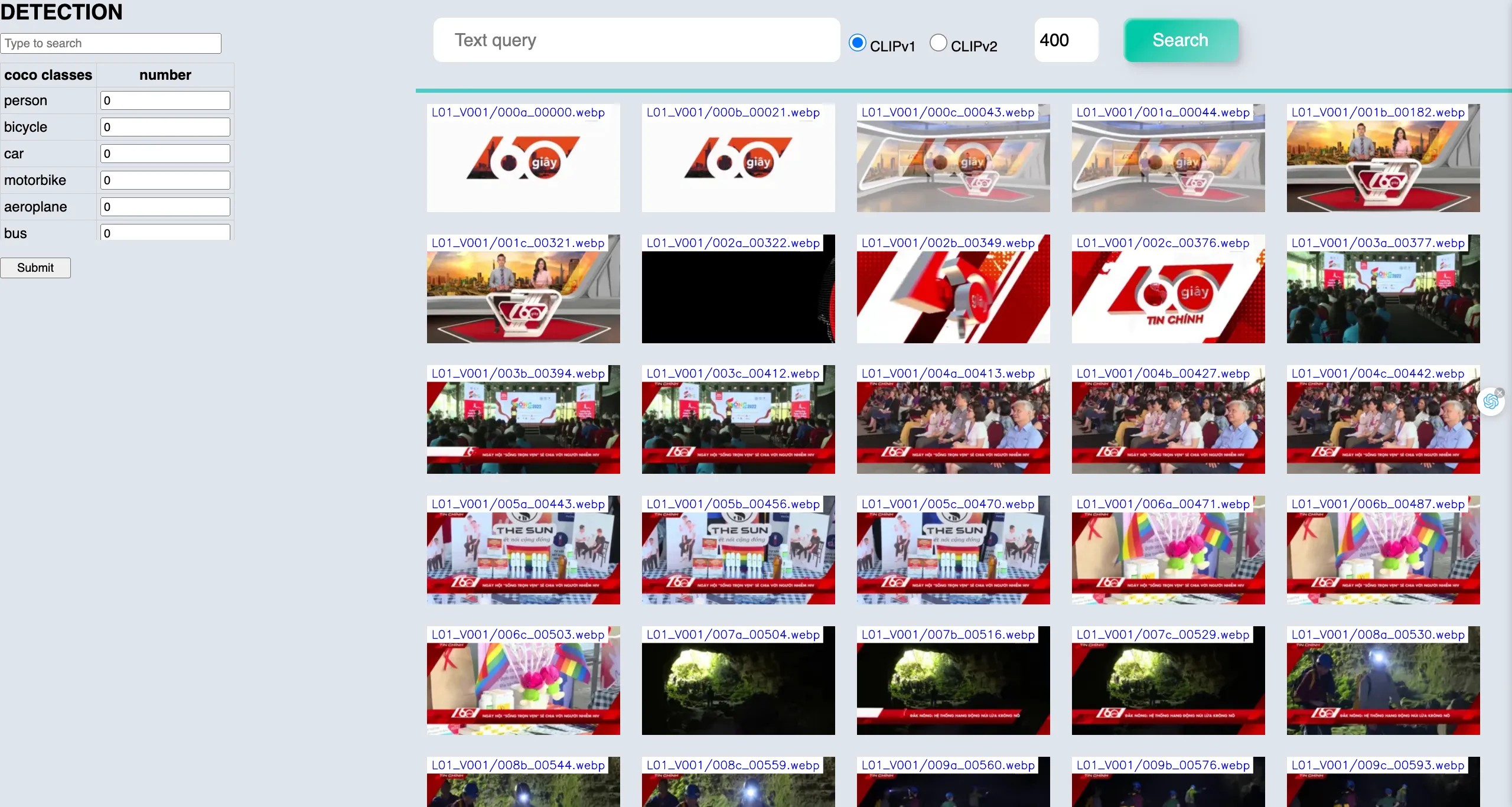

You can choose one of the following three versions for testing.

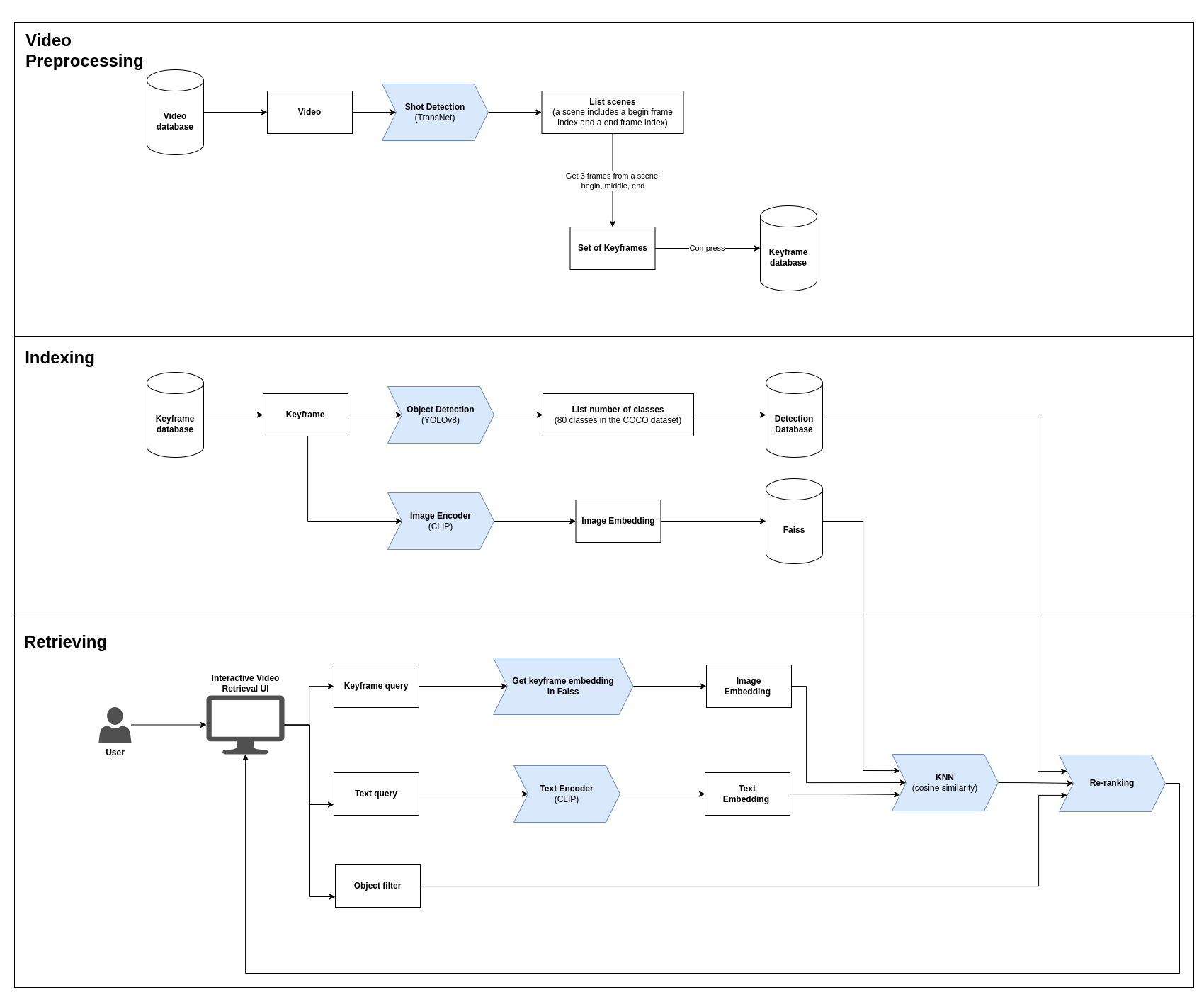

| Version | Description | JSON | FAISS bin v1 | FAISS bin v2 |
|---|---|---|---|---|
| full | 3 frames per scene (begin, middle, end) | `full_path_v1.json` | `full_faiss_v1.bin` | `full_faiss_v2.bin` |
| standard | 2 frames per scene (begin, end) | `full_path_v3.json` | `full_faiss_v3.bin` | `full_faiss_v4.bin` |
| lightweight | 1 frame per scene (middle) | `full_path_v5.json` | `full_faiss_v5.bin` | `full_faiss_v6.bin` |
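To switch between versions from a script, the table above can be encoded as a lookup. This is a sketch: the file names are copied from the table, while `VERSION_FILES`, `resolve_version`, and the `data_dir` folder are hypothetical names; how `app.py` itself selects a version is not shown here.

```python
import os
from typing import NamedTuple


class VersionFiles(NamedTuple):
    json_path: str
    faiss_v1: str
    faiss_v2: str


# File names from the versions table; data_dir (below) is a hypothetical
# folder where the downloaded files are stored.
VERSION_FILES = {
    "full": VersionFiles("full_path_v1.json", "full_faiss_v1.bin", "full_faiss_v2.bin"),
    "standard": VersionFiles("full_path_v3.json", "full_faiss_v3.bin", "full_faiss_v4.bin"),
    "lightweight": VersionFiles("full_path_v5.json", "full_faiss_v5.bin", "full_faiss_v6.bin"),
}


def resolve_version(version, data_dir="."):
    """Return full paths for the JSON file and both FAISS bins of `version`."""
    try:
        files = VERSION_FILES[version]
    except KeyError:
        raise ValueError(
            f"unknown version {version!r}; choose from {sorted(VERSION_FILES)}"
        ) from None
    return VersionFiles(*(os.path.join(data_dir, name) for name in files))
```

For example, `resolve_version("standard", "data").faiss_v2` gives `data/full_faiss_v4.bin` on Linux or macOS.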

```bash
python app.py
```

Open this URL in your browser: http://0.0.0.0:5001/home?index=0
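If the page does not load, it can help to check whether the server is actually answering. A minimal standard-library sketch; it assumes `app.py` listens on all interfaces (as the `0.0.0.0` address suggests), so the app is reachable via `127.0.0.1`, and it only checks for a successful HTTP status, not the page content. `is_app_up` is a hypothetical helper name.

```python
import http.client
import urllib.request


def is_app_up(url="http://127.0.0.1:5001/home?index=0", timeout=3.0):
    """Return True if `url` answers with a 2xx/3xx status, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # OSError covers connection refused, timeouts, and urllib's
        # URLError/HTTPError (a 4xx/5xx reply); HTTPException covers a
        # malformed response from something that is not an HTTP server.
        return False


if __name__ == "__main__":
    print("app is up" if is_app_up() else "app is not reachable")
```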

Note: I use 2 versions of CLIP to increase the diversity of displayed results.
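One way to picture the two-model setup: each CLIP model embeds the text query into its own vector space, each FAISS bin is searched separately, and the two ranked lists are merged. The sketch below rests on assumptions not stated above: that the bins hold L2-normalized embeddings searched by inner product (what `faiss.IndexFlatIP` computes, i.e. cosine similarity), that both bins index the same frame list (one JSON per version in the table), and that results are merged by round-robin interleaving with de-duplication. Plain Python lists stand in for real embeddings, and the function names are hypothetical.

```python
import math
from itertools import zip_longest


def normalize(vec):
    """Scale a vector to unit L2 norm so inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def search_flat_ip(index_vectors, query, k):
    """Brute-force top-k by inner product, the search a flat IP index performs.

    Returns (score, row_id) pairs, best first.
    """
    q = normalize(query)
    scores = [(sum(a * b for a, b in zip(normalize(v), q)), i)
              for i, v in enumerate(index_vectors)]
    scores.sort(key=lambda pair: pair[0], reverse=True)
    return scores[:k]


def merge_round_robin(ranked_a, ranked_b, k):
    """Interleave two ranked id lists (model A first), skipping repeated ids."""
    merged, seen = [], set()
    for pair in zip_longest(ranked_a, ranked_b):
        for item in pair:
            if item is not None and item not in seen:
                seen.add(item)
                merged.append(item)
                if len(merged) == k:
                    return merged
    return merged


if __name__ == "__main__":
    # Toy 2-D "embeddings"; row ids 0..3 stand for the same frames in both indexes.
    index_model_a = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
    index_model_b = [[0.0, 1.0], [1.0, 0.1], [0.6, 0.8], [0.0, -1.0]]
    hits_a = [i for _, i in search_flat_ip(index_model_a, [1.0, 0.2], k=3)]
    hits_b = [i for _, i in search_flat_ip(index_model_b, [0.9, 0.3], k=3)]
    print(merge_round_robin(hits_a, hits_b, k=4))
```

Interleaving lets each model contribute its top hits early in the list, which is one simple way to get the result diversity described in the note; score-based fusion is an alternative when the two models' similarity scales are comparable.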

- Faiss: Facebook AI Similarity Search (Docs)

- Learning Transferable Visual Models From Natural Language Supervision - 2021 (Paper - GitHub - Blog)

- How to Try CLIP: OpenAI's Zero-Shot Image Classifier (Blog)

- Learning to Prompt for Vision-Language Models - CoOp - 2022 (Paper)

- Towards Robust Prompts on Vision-Language Models - 2023 (Paper)

- Prompt Engineering: The Magic Words to using OpenAI's CLIP - 2021 (Blog)