Documentation Authors: Marcin Grzejszczak

Spring, Spring Boot and Spring Cloud are tools that allow developers speed up the time of creating new business features. It’s common knowledge however that the feature is only valuable if it’s in production. That’s why companies spend a lot of time and resources on building their own deployment pipelines.

This project tries to solve the following problems:

-

Creation of a common deployment pipeline

-

Propagation of good testing & deployment practices

-

Speed up the time required to deploy a feature to production

A common way of running, configuring and deploying applications lowers support costs and time needed by new developers to blend in when they change projects.

In the following section we will describe in more depth the rationale behind the presented opinionated pipeline. We will go through each deployment step and describe it in details.

.

├── common

├── concourse

├── docs

└── jenkinsIn the common folder you can find all the Bash scripts containing the pipeline logic. These

scripts are reused by both Concourse and Jenkins pipelines.

In the concourse folder you can find all the necessary scripts and setup to run Concourse demo.

In the docs section you have the whole documentation of the project.

In the jenkins folder you can find all the necessary scripts and setup to run Jenkins demo.

This repository can be treated as a template for your pipeline. We provide some opinionated implementation that you can alter to suit your needs. The best approach to use it to build your production projects would be to download the Spring Cloud Pipelines repository as ZIP, then init a Git project there and modify it as you wish.

$ # pass the branch (e.g. master) or a particular tag (e.g. v1.0.0.RELEASE)

$ SC_PIPELINES_RELEASE=...

$ curl -LOk https://github.com/spring-cloud/spring-cloud-pipelines/archive/${SC_PIPELINES_RELEASE}.zip

$ unzip ${SC_PIPELINES_RELEASE}.zip

$ cd spring-cloud-pipelines-${SC_PIPELINES_RELEASE}

$ git init

$ # modify the pipelines to suit your needs

$ git add .

$ git commit -m "Initial commit"

$ git remote add origin ${YOUR_REPOSITORY_URL}

$ git push origin master|

Note

|

Why aren’t you simply cloning the repo? This is meant to be a seed for building new, versioned pipelines for you. You don’t want to have all of our history dragged along with you, don’t you? |

You can use Spring Cloud Pipelines to generate pipelines for all projects in your system. You can scan all your repositories (e.g. call the Stash / Github API and retrieve the list of repos) and then…

-

For Jenkins, call the seed job and pass the

REPOSparameter that would contain the list of repositories -

For Concourse, you’d have to call

flyand set pipeline for every single repo

You can use Spring Cloud Pipelines in such a way that each project contains its own pipeline definition in its code. Spring Cloud Pipelines clones the code with the pipeline definitions (the bash scripts) so the only piece of logic that could be there in your application’s repository would be how the pipeline should look like.

-

For Jenkins, you’d have to either set up the

Jenkinsfileor the jobs using Jenkins Job DSL plugin in your repo. Then in Jenkins whenever you set up a new pipeline for a repo then you reference the pipeline definition in that repo. -

For Concourse, each project contains its own pipeline steps and it’s up to the project to set up the pipeline.

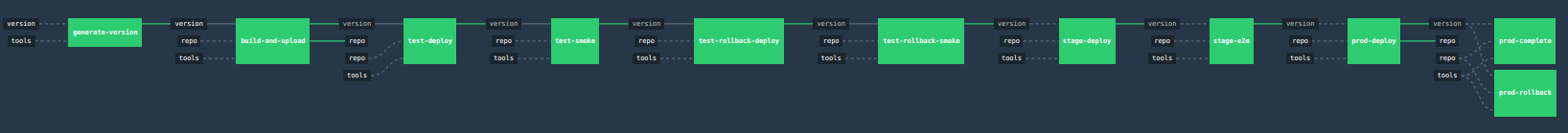

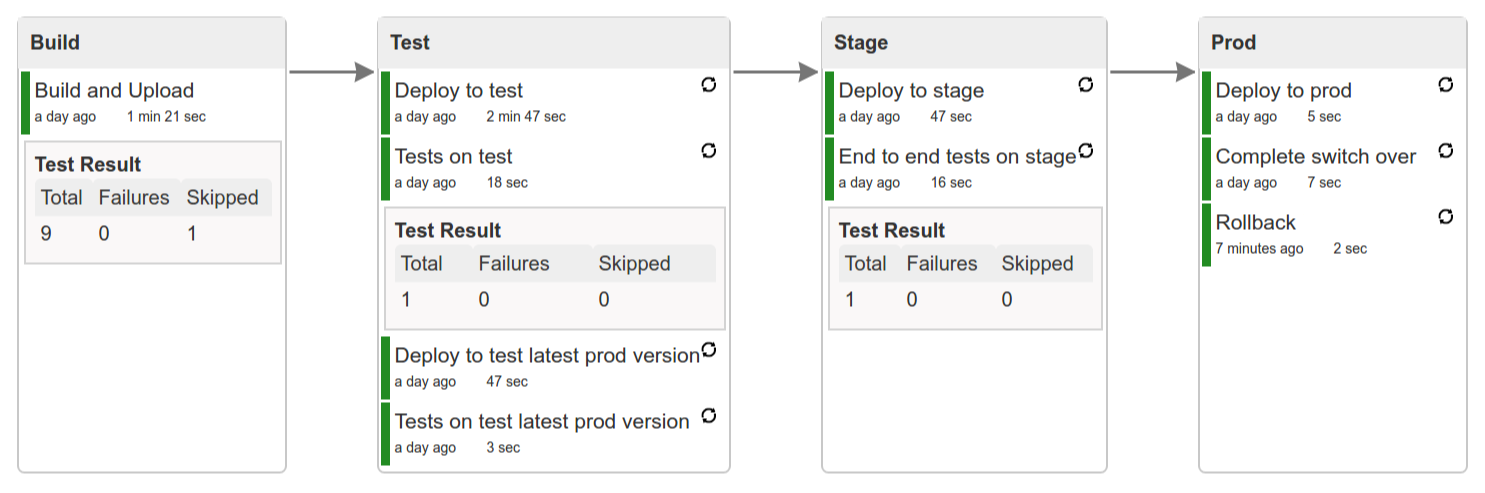

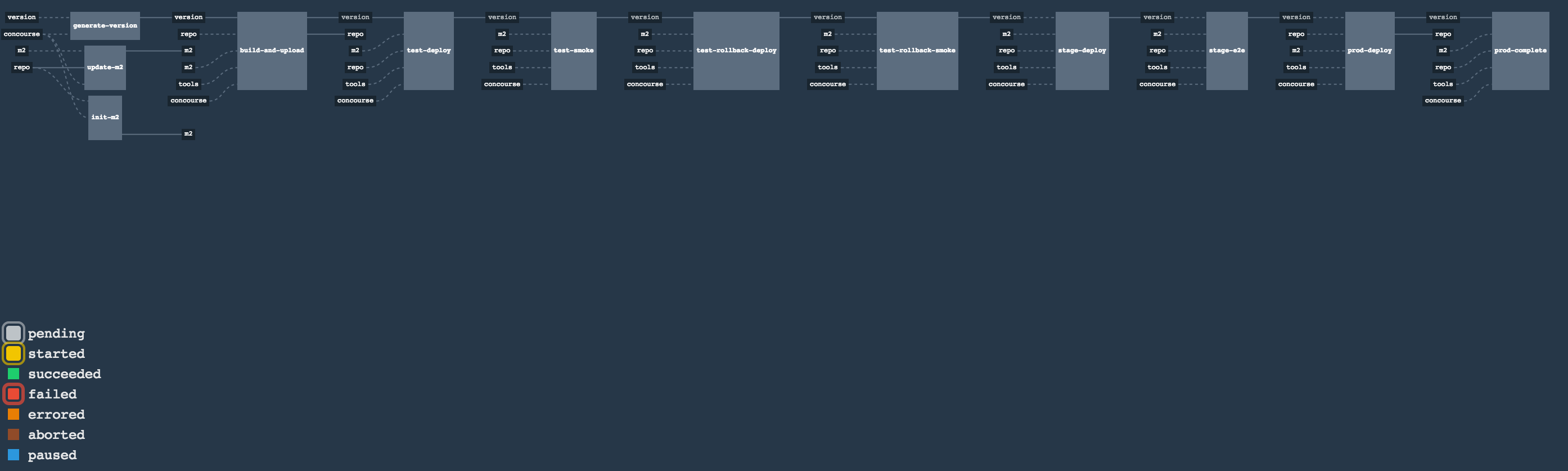

Let’s take a look at the flow of the opinionated pipeline

We’ll first describe the overall concept behind the flow and then we’ll split it into pieces and describe every piece independently.

So we’re on the same page let’s define some common vocabulary. We discern 4 typical environments in terms of running the pipeline.

-

build environment is a machine where the building of the application takes place. It’s a CI / CD tool worker.

-

test is an environment where you can deploy an application to test it. It doesn’t resemble production, we can’t be sure of it’s state (which application is deployed there and in which version). It can be used by multiple teams at the same time.

-

stage is an environment that does resemble production. Most likely applications are deployed there in versions that correspond to those deployed to production. Typically databases there are filled up with (obfuscated) production data. Most often this environment is a single, shared one between many teams. In other words in order to run some performance, user acceptance tests you have to block and wait until the environment is free.

-

prod is a production environment where we want our tested applications to be deployed for our customers.

Unit tests - tests that are executed on the application during the build phase. No integrations with databases / HTTP server stubs etc. take place. Generally speaking your application should have plenty of these to have fast feedback if your features are working fine.

Integration tests - tests that are executed on the built application during the build phase. Integrations with in memory databases / HTTP server stubs take place. According to the test pyramid, in most cases you should have not too many of these kind of tests.

Smoke tests - tests that are executed on a deployed application. The concept of these tests is to check the crucial parts of your application are working properly. If you have 100 features in your application but you gain most money from e.g. 5 features then you could write smoke tests for those 5 features. As you can see we’re talking about smoke tests of an application, not of the whole system. In our understanding inside the opinionated pipeline, these tests are executed against an application that is surrounded with stubs.

End to end tests - tests that are executed on a system composing of multiple applications. The idea of these tests is to check if the tested feature works when the whole system is set up. Due to the fact that it takes a lot of time, effort, resources to maintain such an environment and that often those tests are unreliable (due to many different moving pieces like network database etc.) you should have a handful of those tests. Only for critical parts of your business. Since only production is the key verifier of whether your feature works, some companies don’t even want to do those and move directly to deployment to production. When your system contains KPI monitoring and alerting you can quickly react when your deployed application is not behaving properly.

Performance testing - tests executed on an application or set of applications to check if your system can handle big load of input. In case of our opinionated pipeline these tests could be executed either on test (against stubbed environment) or stage (against the whole system)

Before we go into details of the flow let’s take a look at the following example.

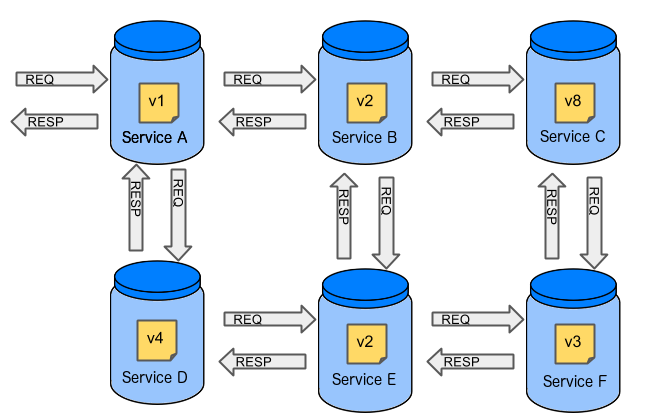

When having only a handful of applications, performing end to end testing is beneficial. From the operations perspective it’s maintainable for a finite number of deployed instances. From the developers perspective it’s nice to verify the whole flow in the system for a feature.

In case of microservices the scale starts to be a problem:

The questions arise:

-

Should I queue deployments of microservices on one testing environment or should I have an environment per microservice?

-

If I queue deployments people will have to wait for hours to have their tests ran - that can be a problem

-

-

To remove that issue I can have an environment per microservice

-

Who will pay the bills (imagine 100 microservices - each having each own environment).

-

Who will support each of those environments?

-

Should we spawn a new environment each time we execute a new pipeline and then wrap it up or should we have them up and running for the whole day?

-

-

In which versions should I deploy the dependent microservices - development or production versions?

-

If I have development versions then I can test my application against a feature that is not yet on production. That can lead to exceptions on production

-

If I test against production versions then I’ll never be able to test against a feature under development anytime before deployment to production.

-

One of the possibilities of tackling these problems is to… not do end to end tests.

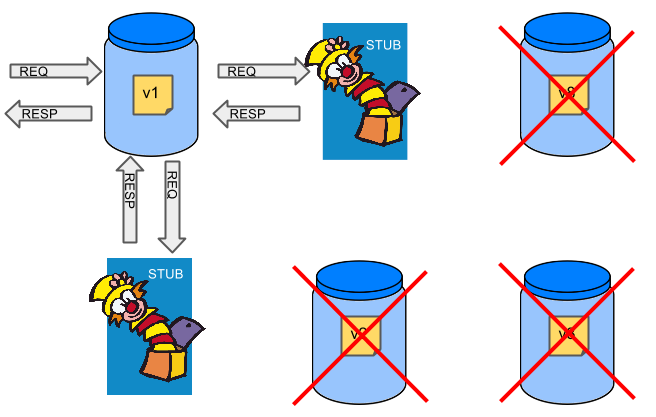

If we stub out all the dependencies of our application then most of the problems presented above disappear. There is no need to start and setup infrastructure required by the dependant microservices. That way the testing setup looks like this:

Such an approach to testing and deployment gives the following benefits (thanks to the usage of Spring Cloud Contract):

-

No need to deploy dependant services

-

The stubs used for the tests ran on a deployed microservice are the same as those used during integration tests

-

Those stubs have been tested against the application that produces them (check Spring Cloud Contract for more information)

-

We don’t have many slow tests running on a deployed application - thus the pipeline gets executed much faster

-

We don’t have to queue deployments - we’re testing in isolation thus pipelines don’t interfere with each other

-

We don’t have to spawn virtual machines each time for deployment purposes

It brings however the following challenges:

-

No end to end tests before production - you don’t have the full certainty that a feature is working

-

First time the applications will talk in a real way will be on production

Like every solution it has its benefits and drawbacks. The opinionated pipeline allows you to configure whether you want to follow this flow or not.

The general view behind this deployment pipeline is to:

-

test the application in isolation

-

test the backwards compatibility of the application in order to roll it back if necessary

-

allow testing of the packaged app in a deployed environment

-

allow user acceptance tests / performance tests in a deployed environment

-

allow deployment to production

Obviously the pipeline could have been split to more steps but it seems that all of the aforementioned actions comprise nicely in our opinionated proposal.

Spring Cloud Pipelines uses Bash scripts extensively. Below you can find the list of software that needs to be installed on a CI server worker for the build to pass.

|

Tip

|

In the demo setup all of these libraries are already installed. |

apt-get -y install \

bash \

git \

tar \

zip \

curl \

ruby \

wget \

unzip \

python \

jq|

Important

|

In the Jenkins case you will also need bats and shellcheck. They are not

presented in the list since the installed versions by Linux distributions might be old.

That’s why this project’s Gradle tasks will download latest versions of both libraries

for you.

|

Each application can contain a file called sc-pipelines.yml with the following structure:

lowercaseEnvironmentName1:

services:

- type: service1Type

name: service1Name

coordinates: value

- type: service2Type

name: service2Name

key: value

lowercaseEnvironmentName2:

services:

- type: service3Type

name: service3Name

coordinates: value

- type: service4Type

name: service4Name

key: valueFor a given environment we declare a list of infrastructure services that we want to have deployed. Services have

-

type(example:eureka,mysql,rabbitmq,stubrunner) - this value gets then applied to thedeployServiceBash function -

[KUBERNETES] for

mysqlyou can pass the database name via thedatabaseproperty -

name- name of the service to get deployed -

coordinates- coordinate that allows you to fetch the binary of the service. Examples: It can be a maven coordinategroupid:artifactid:version, docker imageorganization/nameOfImage, etc. -

arbitrary key value pairs - you can customize the services as you wish

The stubrunner type can also have the useClasspath flag turned on to true

or false.

Example:

test:

services:

- type: rabbitmq

name: rabbitmq-github-webhook

- type: mysql

name: mysql-github-webhook

- type: eureka

name: eureka-github-webhook

coordinates: com.example.eureka:github-eureka:0.0.1.M1

- type: stubrunner

name: stubrunner-github-webhook

coordinates: com.example.eureka:github-analytics-stub-runner-boot-classpath-stubs:0.0.1.M1

useClasspath: true

stage:

services:

- type: rabbitmq

name: rabbitmq-github

- type: mysql

name: mysql-github

- type: eureka

name: github-eureka

coordinates: com.example.eureka:github-eureka:0.0.1.M1When the deployment to test or deployment to stage occurs, Spring Cloud Pipelines will:

-

for

testenvironment, delete existing services and redeploy the ones from the list -

for

stageenvironment, if the service is not available it will get deployed. Otherwise nothing will happen

For the demo purposes we’re providing Docker Compose setup with Artifactory and Concourse / Jenkins tools. Regardless of the picked CD application for the pipeline to pass one needs either

-

a Cloud Foundry instance (for example Pivotal Web Services or PCF Dev)

-

a Kubernetes cluster (for example Minikube)

-

the infrastructure applications deployed to the JAR hosting application (for the demo we’re providing Artifactory).

-

Eurekafor Service Discovery -

Stub Runner Bootfor running Spring Cloud Contract stubs.

|

Tip

|

In the demos we’re showing you how to first build the github-webhook project. That’s because

the github-analytics needs the stubs of github-webhook to pass the tests. Below you’ll find

references to github-analytics project since it contains more interesting pieces as far as testing

is concerned.

|

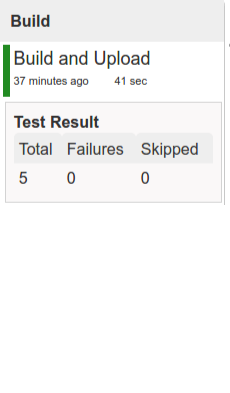

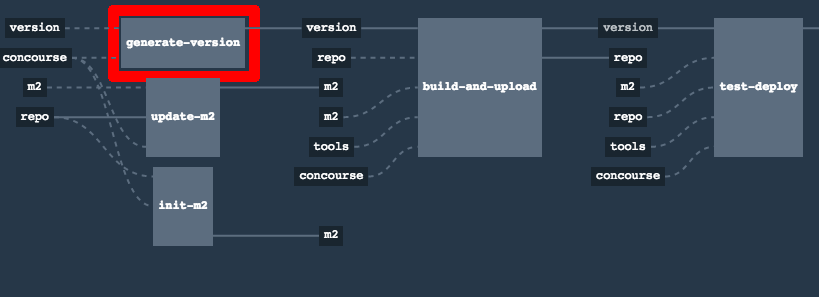

In this step we’re generating a version of the pipeline, next we’re running unit, integration and contract tests. Finally we’re:

-

publishing a fat jar of the application

-

publishing a Spring Cloud Contract jar containing stubs of the application

-

for Kubernetes - uploading a Docker image of the application

During this phase we’re executing a Maven build using Maven Wrapper or a Gradle build using Gradle Wrapper

, with unit and integration tests. We’re also tagging the repository with dev/${version} format. That way in each

subsequent step of the pipeline we’re able to retrieve the tagged version. Also we know

exactly which version of the pipeline corresponds to which Git hash.

Once the artifact got built we’re running API compatibility check.

-

we’re searching for the latest production deployment

-

we’re retrieving the contracts that were used by that deployment

-

from the contracts we’re generating API tests to see if the current implementation is fulfilling the HTTP / messaging contracts that the current production deployment has defined (we’re checking backward compatibility of the API)

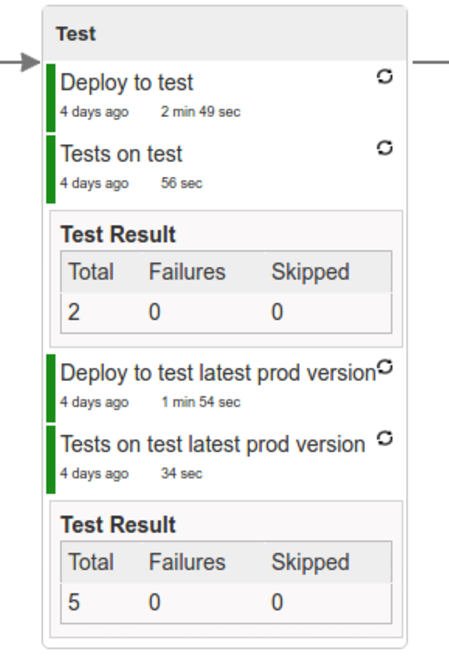

Here we’re

-

starting a RabbitMQ service in PaaS

-

deploying

Eurekainfrastructure application to PaaS -

downloading the fat jar from Nexus and we’re uploading it to PaaS. We want the application to run in isolation (be surrounded by stubs).

|

Tip

|

Currently due to port constraints in Cloud Foundry

we cannot run multiple stubbed HTTP services in the cloud so to fix this issue we’re running

the application with smoke Spring profile on which you can stub out all HTTP calls to return

a mocked response

|

-

if the application is using a database then it gets upgraded at this point via Flyway, Liquibase or any other tool once the application gets started

-

from the project’s Maven or Gradle build we’re extracting

stubrunner.idsproperty that contains all thegroupId:artifactId:version:classifiernotation of dependant projects for which the stubs should be downloaded. -

then we’re uploading

Stub Runner Bootand pass the extractedstubrunner.idsto it. That way we’ll have a running application in Cloud Foundry that will download all the necessary stubs of our application -

from the checked out code we’re running the tests available under the

smokeprofile. In the case ofGitHub Analyticsapplication we’re triggering a message from theGitHub Webhookapplication’s stub, that is sent via RabbitMQ to GitHub Analytics. Then we’re checking if message count has increased. -

once the tests pass we’re searching for the last production release. Once the application is deployed to production we’re tagging it with

prod/${version}tag. If there is no such tag (there was no production release) there will be no rollback tests executed. If there was a production release the tests will get executed. -

assuming that there was a production release we’re checking out the code corresponding to that release (we’re checking out the tag), we’re downloading the appropriate artifact (either JAR for Cloud Foundry or Docker image for Kubernetes) and we’re uploading it to PaaS. IMPORTANT the old artifact is running against the NEW version of the database.

-

we’re running the old

smoketests against the freshly deployed application surrounded by stubs. If those tests pass then we have a high probability that the application is backwards compatible -

the default behaviour is that after all of those steps the user can manually click to deploy the application to a stage environment

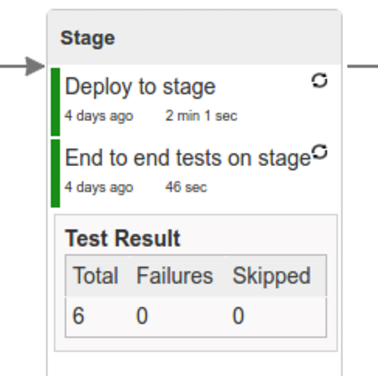

Here we’re

-

starting a RabbitMQ service in PaaS

-

deploying

Eurekainfrastructure application to PaaS -

downloading the artifact (either JAR for Cloud Foundry or Docker image for Kubernetes) from and we’re uploading it to PaaS.

Next we have a manual step in which:

-

from the checked out code we’re running the tests available under the

e2eprofile. In the case ofGitHub Analyticsapplication we’re sending a HTTP message to GitHub Analytic’s endpoint. Then we’re checking if the received message count has increased.

The step is manual by default due to the fact that stage environment is often shared between teams and some preparations on databases / infrastructure have to take place before running the tests. Ideally these step should be fully automatic.

The step to deploy to production is manual but ideally it should be automatic.

|

Important

|

This step does deployment to production. On production you would assume

that you have the infrastructure running. That’s why before you run this step you

must execute a script that will provision the services on the production environment.

For Cloud Foundry just call tools/cf-helper.sh setup-prod-infra and

for Kubernetes tools/k8s-helper.sh setup-prod-infra

|

Here we’re

-

tagging the Git repo with

prod/${version}tag -

downloading the application artifact (either JAR for Cloud Foundry or Docker image for Kubernetes)

-

we’re doing Blue Green deployment:

-

for Cloud Foundry

-

we’re renaming the current instance of the app e.g.

fooServicetofooService-venerable -

we’re deploying the new instance of the app under the

fooServicename -

now two instances of the same application are running on production

-

-

for Kubernetes

-

we’re deploying a service with the name of the app e.g.

fooService -

we’re doing a deployment with the name of the app with version suffix (with the name escaped to fulfill the DNS name requirements) e.g.

fooService-1-0-0-M1-123-456-VERSION -

all deployments of the same application have the same label

nameequal to app name e.g.fooService -

the service is routing the traffic basing on the

namelabel selector -

now two instances of the same application are running on production

-

-

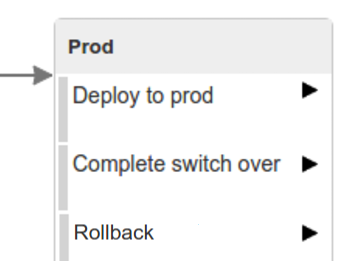

in the

Complete switch overwhich is a manual step-

we’re deleting the old instance

-

remember to run this step only after you have confirmed that both instances are working fine!

-

now two instances of the same application are running on production

-

-

in the

Rollback to bluewhich is a manual step-

we’re routing all the traffic to the old instance

-

in CF we do that by ensuring that blue is running and stopping green

-

in K8S we do that by scaling the number of instances of green to 0

-

this step will set the state of your system to such where most likely some manual intervention should take place (to restart some applications, redeploy them, etc.)

-

In this section we will go through the assumptions we’ve made in the project structure and project properties.

We’ve taken the following opinionated decisions for a Cloud Foundry based project:

-

application built using Maven or Gradle wrappers

-

application deployment to Cloud Foundry

-

For Maven (example project):

-

usage of Maven Wrapper

-

settings.xmlis parametrized to pass the credentials to push code to Artifactory-

M2_SETTINGS_REPO_ID- server id for Artifactory / Nexus deployment -

M2_SETTINGS_REPO_USERNAME- username for Artifactory / Nexus deployment -

M2_SETTINGS_REPO_PASSWORD- password for Artifactory / Nexus deployment

-

-

artifacts deployment by

./mvnw clean deploy -

stubrunner.idsproperty to retrieve list of collaborators for which stubs should be downloaded -

repo.with.binariesproperty - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory) -

distribution.management.release.idproperty - (Injected by the pipeline) ID of the distribution management. Corresponds to server id insettings.xml -

distribution.management.release.urlproperty - (Injected by the pipeline) Will contain the URL to the repo containing binaries (e.g. Artifactory) -

running API compatibility tests via the

apicompatibilityMaven profile -

latest.production.versionproperty - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags) -

running smoke tests on a deployed app via the

smokeMaven profile -

running end to end tests on a deployed app via the

e2eMaven profile

-

-

For Gradle (example project check the

gradle/pipeline.gradlefile):-

usage of Gradlew Wrapper

-

deploytask for artifacts deployment -

REPO_WITH_BINARIESenv var - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory) -

M2_SETTINGS_REPO_USERNAMEenv var - Username used to send the binary to the repo containing binaries (e.g. Artifactory) -

M2_SETTINGS_REPO_PASSWORDenv var - Password used to send the binary to the repo containing binaries (e.g. Artifactory) -

running API compatibility tests via the

apiCompatibilitytask -

latestProductionVersionproperty - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags) -

running smoke tests on a deployed app via the

smoketask -

running end to end tests on a deployed app via the

e2etask -

groupIdtask to retrieve group id -

artifactIdtask to retrieve artifact id -

currentVersiontask to retrieve the current version -

stubIdstask to retrieve list of collaborators for which stubs should be downloaded

-

We’ve taken the following opinionated decisions for a Cloud Foundry based project:

-

application built using Maven or Gradle wrappers

-

application deployment to Kubernetes

-

The produced Java Docker image needs to allow passing of system properties via

SYSTEM_PROPSenv variable -

For Maven (example project):

-

usage of Maven Wrapper

-

settings.xmlis parametrized to pass the credentials to push code to Artifactory and Docker repository-

M2_SETTINGS_REPO_ID- server id for Artifactory / Nexus deployment -

M2_SETTINGS_REPO_USERNAME- username for Artifactory / Nexus deployment -

M2_SETTINGS_REPO_PASSWORD- password for Artifactory / Nexus deployment -

DOCKER_SERVER_ID- server id for Docker image pushing -

DOCKER_USERNAME- username for Docker image pushing -

DOCKER_PASSWORD- password for Docker image pushing -

DOCKER_EMAIL- email for Artifactory / Nexus deployment

-

-

DOCKER_REGISTRY_URLenv var - (Overridable - defaults to DockerHub) URL of the Docker registry -

DOCKER_REGISTRY_ORGANIZATION- env var containing the organization where your Docker repo lays -

artifacts and Docker image deployment by

./mvnw clean deploy -

stubrunner.idsproperty to retrieve list of collaborators for which stubs should be downloaded -

repo.with.binariesproperty - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory) -

distribution.management.release.idproperty - (Injected by the pipeline) ID of the distribution management. Corresponds to server id insettings.xml -

distribution.management.release.urlproperty - (Injected by the pipeline) Will contain the URL to the repo containing binaries (e.g. Artifactory) -

deployment.ymlcontains the Kubernetes deployment descriptor -

service.ymlcontains the Kubernetes service descriptor -

running API compatibility tests via the

apicompatibilityMaven profile -

latest.production.versionproperty - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags) -

running smoke tests on a deployed app via the

smokeMaven profile -

running end to end tests on a deployed app via the

e2eMaven profile

-

-

For Gradle (example project check the

gradle/pipeline.gradlefile):-

usage of Gradlew Wrapper

-

deploytask for artifacts deployment -

REPO_WITH_BINARIESenv var - (Injected by the pipeline) will contain the URL to the repo containing binaries (e.g. Artifactory) -

M2_SETTINGS_REPO_USERNAMEenv var - Username used to send the binary to the repo containing binaries (e.g. Artifactory) -

M2_SETTINGS_REPO_PASSWORDenv var - Password used to send the binary to the repo containing binaries (e.g. Artifactory) -

DOCKER_REGISTRY_URLenv var - (Overridable - defaults to DockerHub) URL of the Docker registry -

DOCKER_USERNAMEenv var - Username used to send the the Docker image -

DOCKER_PASSWORDenv var - Password used to send the the Docker image -

DOCKER_EMAILenv var - Email used to send the the Docker image -

DOCKER_REGISTRY_ORGANIZATION- env var containing the organization where your Docker repo lays -

deployment.ymlcontains the Kubernetes deployment descriptor -

service.ymlcontains the Kubernetes service descriptor -

running API compatibility tests via the

apiCompatibilitytask -

latestProductionVersionproperty - (Injected by the pipeline) will contain the latest production version for the repo (retrieved from Git tags) -

running smoke tests on a deployed app via the

smoketask -

running end to end tests on a deployed app via the

e2etask -

groupIdtask to retrieve group id -

artifactIdtask to retrieve artifact id -

currentVersiontask to retrieve the current version -

stubIdstask to retrieve list of collaborators for which stubs should be downloaded

-

|

Important

|

In this chapter we assume that you perform deployment of your application to Cloud Foundry PaaS |

The Spring Cloud Pipelines repository contains opinionated Concourse pipeline definition. Those jobs will form an empty pipeline and a sample, opinionated one that you can use in your company.

All in all there are the following projects taking part in the whole microservice setup for this demo.

-

Github Analytics - the app that has a REST endpoint and uses messaging. Our business application.

-

Github Webhook - project that emits messages that are used by Github Analytics. Our business application.

-

Eureka - simple Eureka Server. This is an infrastructure application.

-

Github Analytics Stub Runner Boot - Stub Runner Boot server to be used for tests with Github Analytics. Uses Eureka and Messaging. This is an infrastructure application.

If you want to just run the demo as far as possible using PCF Dev and Docker Compose

There are 4 apps that are composing the pipeline

You need to fork only these. That’s because only then will your user be able to tag and push the tag to repo.

Concourse + Artifactory can be run locally. To do that just execute the

start.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/concourse

./setup_docker_compose.sh

./start.sh 192.168.99.100The setup_docker_compose.sh script should be executed once only to allow

generation of keys.

The 192.168.99.100 param is an example of an external URL of Concourse

(equal to Docker-Machine ip in this example).

Then Concourse will be running on port 8080 and Artifactory 8081.

When Artifactory is running, just execute the tools/deploy-infra.sh script from this repo.

git clone https://github.com/spring-cloud/spring-cloud-pipelines

cd spring-cloud-pipelines/

./tools/deploy-infra.shAs a result both eureka and stub runner repos will be cloned, built

and uploaded to Artifactory.

|

Tip

|

You can skip this step if you have CF installed and don’t want to use PCF Dev The only thing you have to do is to set up spaces. |

|

Warning

|

It’s more than likely that you’ll run out of resources when you reach stage step. Don’t worry! Keep calm and clear some apps from PCF Dev and continue. |

You have to download and start PCF Dev. A link how to do it is available here.

The default credentials when using PCF Dev are:

username: user

password: pass

email: user

org: pcfdev-org

space: pcfdev-space

api: api.local.pcfdev.ioYou can start the PCF Dev like this:

cf dev startYou’ll have to create 3 separate spaces (email admin, pass admin)

cf login -a https://api.local.pcfdev.io --skip-ssl-validation -u admin -p admin -o pcfdev-org

cf create-space pcfdev-test

cf set-space-role user pcfdev-org pcfdev-test SpaceDeveloper

cf create-space pcfdev-stage

cf set-space-role user pcfdev-org pcfdev-stage SpaceDeveloper

cf create-space pcfdev-prod

cf set-space-role user pcfdev-org pcfdev-prod SpaceDeveloperYou can also execute the ./tools/cf-helper.sh setup-spaces to do this.

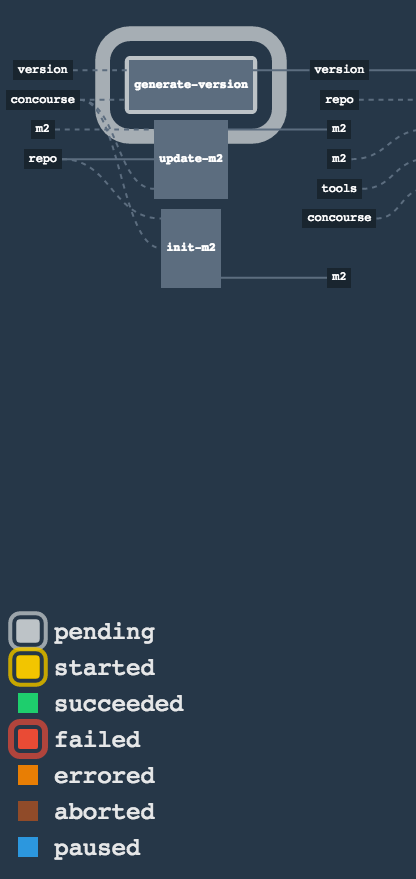

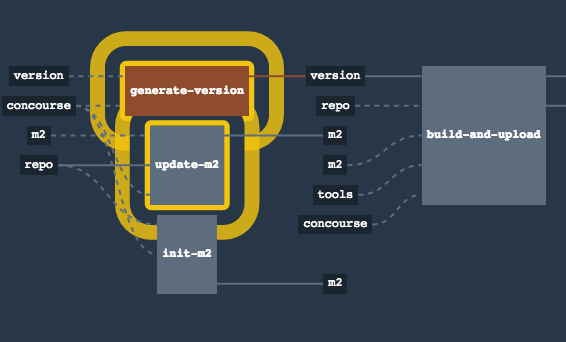

If you go to Concourse website you should see sth like this:

You can click one of the icons (depending on your OS) to download fly, which is the Concourse CLI. Once you’ve downloaded that (and maybe added to your PATH) you can run:

fly --versionIf fly is properly installed then it should print out the version.

The repo comes with credentials-sample-cf.yml which is set up with sample data (most credentials) are set to be applicable for PCF Dev. Copy this file to a new file credentials.yml (the file is added to .gitignore so don’t worry that you’ll push it with your passwords) and edit it as you wish. For our demo just setup:

-

app-url- url pointing to your forkedgithub-webhookrepo -

github-private-key- your private key to clone / tag GitHub repos -

repo-with-binaries- the IP is set to the defaults for Docker Machine. You should update it to point to your setup

If you don’t have a Docker Machine just execute ./whats_my_ip.sh script to

get an external IP that you can pass to your repo-with-binaries instead of the default

Docker Machine IP.

Below you can see what environment variables are required by the scripts. To the right hand side you can see the default values for PCF Dev that we set in the credentials-sample-cf.yml.

| Property Name | Property Description | Default value |

|---|---|---|

PAAS_TEST_API_URL |

The URL to the CF Api for TEST env |

api.local.pcfdev.io |

PAAS_STAGE_API_URL |

The URL to the CF Api for STAGE env |

api.local.pcfdev.io |

PAAS_PROD_API_URL |

The URL to the CF Api for PROD env |

api.local.pcfdev.io |

PAAS_TEST_ORG |

Name of the org for the test env |

pcfdev-org |

PAAS_TEST_SPACE |

Name of the space for the test env |

pcfdev-space |

PAAS_STAGE_ORG |

Name of the org for the stage env |

pcfdev-org |

PAAS_STAGE_SPACE |

Name of the space for the stage env |

pcfdev-space |

PAAS_PROD_ORG |

Name of the org for the prod env |

pcfdev-org |

PAAS_PROD_SPACE |

Name of the space for the prod env |

pcfdev-space |

REPO_WITH_BINARIES |

URL to repo with the deployed jars |

|

M2_SETTINGS_REPO_ID |

The id of server from Maven settings.xml |

artifactory-local |

PAAS_HOSTNAME_UUID |

Additional suffix for the route. In a shared environment the default routes can be already taken |

|

APP_MEMORY_LIMIT |

How much memory should be used by the infra apps (Eureka, Stub Runner etc.) |

256m |

JAVA_BUILDPACK_URL |

The URL to the Java buildpack to be used by CF |

|

BUILD_OPTIONS |

Additional options you would like to pass to the Maven / Gradle build |

Log in (e.g. for Concourse running at 192.168.99.100 - if you don’t provide any value then localhost is assumed). If you execute this script (it assumes that either fly is on your PATH or it’s in the same folder as the script is):

./login.sh 192.168.99.100Next run the command to create the pipeline.

./set_pipeline.shThen you’ll create a github-webhook pipeline under the docker alias, using the provided credentials.yml file.

You can override these values in exactly that order (e.g. ./set-pipeline.sh some-project another-target some-other-credentials.yml)

Not really. This is an opinionated pipeline that’s why we took some

opinionated decisions. Check out the documentation to see

what those decisions are.

Sure! It’s open-source! The important thing is that the core part of the logic is written in Bash scripts. That way, in the majority of cases, you could change only the bash scripts without changing the whole pipeline. You can check out the scripts here.

Furthermore, if you only want to customize a particular function under common/src/main/bash, you can provide your own

function under common/src/main/bash/<some custom identifier> where <some custom identifier> is equal to the value of

the CUSTOM_SCRIPT_IDENTIFIER environment variable. It defaults to custom.

When deploying the app to stage or prod you can get an exception Insufficient resources. The way to

solve it is to kill some apps from test / stage env. To achieve that just call

cf target -o pcfdev-org -s pcfdev-test

cf stop github-webhook

cf stop github-eureka

cf stop stubrunnerYou can also execute ./tools/cf-helper.sh kill-all-apps that will remove

all demo-related apps deployed to PCF Dev.

You must have pushed some tags and have removed the Artifactory volume that contained them. To fix this, just remove the tags

git tag -l | xargs -n 1 git push --delete originYes! Assuming that pipeline name is github-webhook and job name is build-and-upload you can running

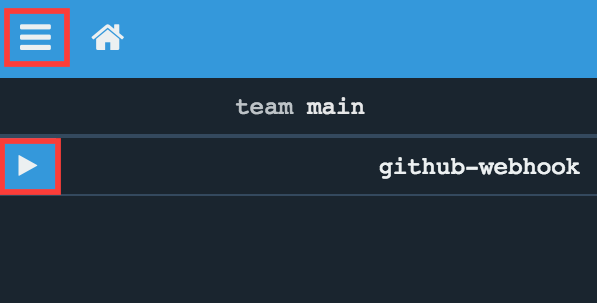

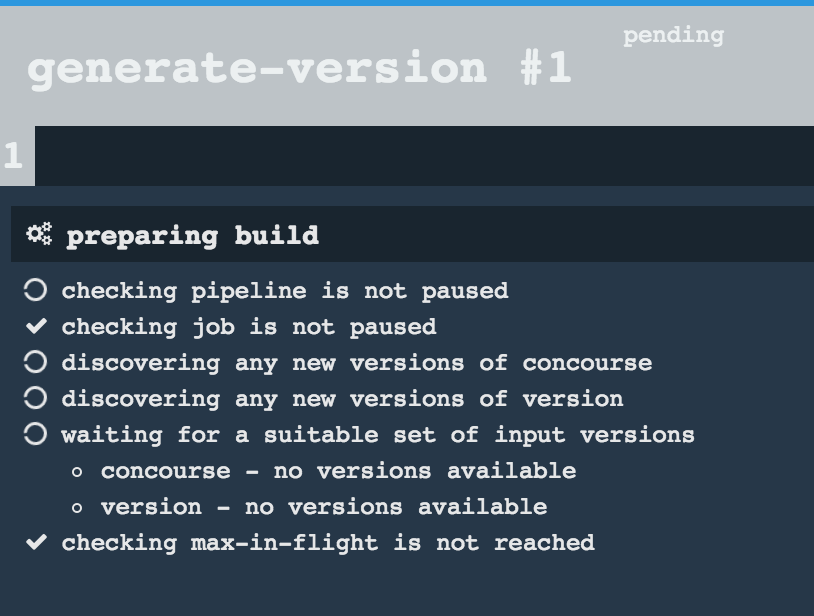

fly watch --job github-webhook/build-and-upload -t dockerDon’t worry… most likely you’ve just forgotten to click the play button to

unpause the pipeline. Click to the top left, expand the list of pipelines and click

the play button next to github-webhook.

Another problem that might occur is that you need to have the version branch.

Concourse will wait for the version branch to appear in your repo. So in order for

the pipeline to start ensure that when doing some git operations you haven’t

forgotten to create / copy the version branch too.

If you play around with Jenkins / Concourse you might end up with the routes occupied

Using route github-webhook-test.local.pcfdev.io

Binding github-webhook-test.local.pcfdev.io to github-webhook...

FAILED

The route github-webhook-test.local.pcfdev.io is already in use.Just delete the routes

yes | cf delete-route local.pcfdev.io -n github-webhook-test

yes | cf delete-route local.pcfdev.io -n github-eureka-test

yes | cf delete-route local.pcfdev.io -n stubrunner-test

yes | cf delete-route local.pcfdev.io -n github-webhook-stage

yes | cf delete-route local.pcfdev.io -n github-eureka-stage

yes | cf delete-route local.pcfdev.io -n github-webhook-prod

yes | cf delete-route local.pcfdev.io -n github-eureka-prodYou can also execute the ./tools/cf-helper.sh delete-routes

Most likely you’ve forgotten to update your local settings.xml with the Artifactory’s

setup. Check out this section of the docs and update your settings.xml.

When I click on it it looks like this:

resource script '/opt/resource/check []' failed: exit status 128

stderr:

Identity added: /tmp/git-resource-private-key (/tmp/git-resource-private-key)

Cloning into '/tmp/git-resource-repo-cache'...

warning: Could not find remote branch version to clone.

fatal: Remote branch version not found in upstream originThat means that your repo doesn’t have the version branch. Please

set it up.

Below you can find the answers to most frequently asked questions.

You can check the Jenkins logs and you’ll see

WARNING: Skipped parameter `PIPELINE_VERSION` as it is undefined on `jenkins-pipeline-sample-build`.

Set `-Dhudson.model.ParametersAction.keepUndefinedParameters`=true to allow undefined parameters

to be injected as environment variables or

`-Dhudson.model.ParametersAction.safeParameters=[comma-separated list]`

to whitelist specific parameter names, even though it represents a security breachTo fix it you have to do exactly what the warning suggests… Also ensure that the Groovy token macro processing

checkbox is set.

You can see that the Jenkins version is properly set but in the build version is still snapshot and

the echo "${PIPELINE_VERSION}" doesn’t print anything.

You can check the Jenkins logs and you’ll see

WARNING: Skipped parameter `PIPELINE_VERSION` as it is undefined on `jenkins-pipeline-sample-build`.

Set `-Dhudson.model.ParametersAction.keepUndefinedParameters`=true to allow undefined parameters

to be injected as environment variables or

`-Dhudson.model.ParametersAction.safeParameters=[comma-separated list]`

to whitelist specific parameter names, even though it represents a security breachTo fix it you have to do exactly what the warning suggests…

Docker compose, docker compose, docker compose… The problem is that for some reason, only in Docker, the execution of Java hangs. But it hangs randomly and only the first time you try to execute the pipeline.

The solution to this is to run the pipeline again. If once it suddenly, magically passes then it will pass for any subsequent build.

Another thing that you can try is to run it with plain Docker. Maybe that will help.

Sure! you can pass REPOS variable with comma separated list of

project_name$project_url format. If you don’t provide the PROJECT_NAME the

repo name will be extracted and used as the name of the project.

E.g. for REPOS equal to:

will result in the creation of pipelines with root names github-analytics and github-webhook.

E.g. for REPOS equal to:

foo$https://github.com/spring-cloud-samples/github-analytics,bar$https://github.com/spring-cloud-samples/atom-feed

will result in the creation of pipelines with root names foo for github-analytics

and bar for github-webhook.

Not really. This is an opinionated pipeline that’s why we took some

opinionated decisions like:

-

usage of Spring Cloud, Spring Cloud Contract Stub Runner and Spring Cloud Eureka

-

application deployment to Cloud Foundry

-

For Maven:

-

usage of Maven Wrapper

-

artifacts deployment by

./mvnw clean deploy -

stubrunner.idsproperty to retrieve list of collaborators for which stubs should be downloaded -

running smoke tests on a deployed app via the

smokeMaven profile -

running end to end tests on a deployed app via the

e2eMaven profile

-

-

For Gradle (in the

github-analyticsapplication check thegradle/pipeline.gradlefile):-

usage of Gradlew Wrapper

-

deploytask for artifacts deployment -

running smoke tests on a deployed app via the

smoketask -

running end to end tests on a deployed app via the

e2etask -

groupIdtask to retrieve group id -

artifactIdtask to retrieve artifact id -

currentVersiontask to retrieve the current version -

stubIdstask to retrieve list of collaborators for which stubs should be downloaded

-

This is the initial approach that can be easily changed in the future.

Sure! It’s open-source! The important thing is that the core part of the logic is written in Bash scripts. That way, in the majority of cases, you could change only the bash scripts without changing the whole pipeline.

You must have pushed some tags and have removed the Artifactory volume that contained them. To fix this, just remove the tags

git tag -l | xargs -n 1 git push --delete origin-

by default we assume that you have jdk with id

jdk8configured -

if you want a different one just override

JDK_VERSIONenv var and point to the proper one

|

Tip

|

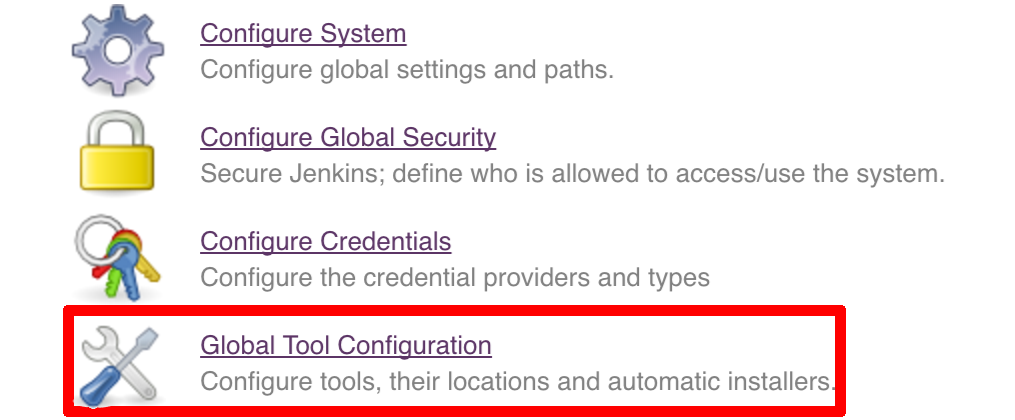

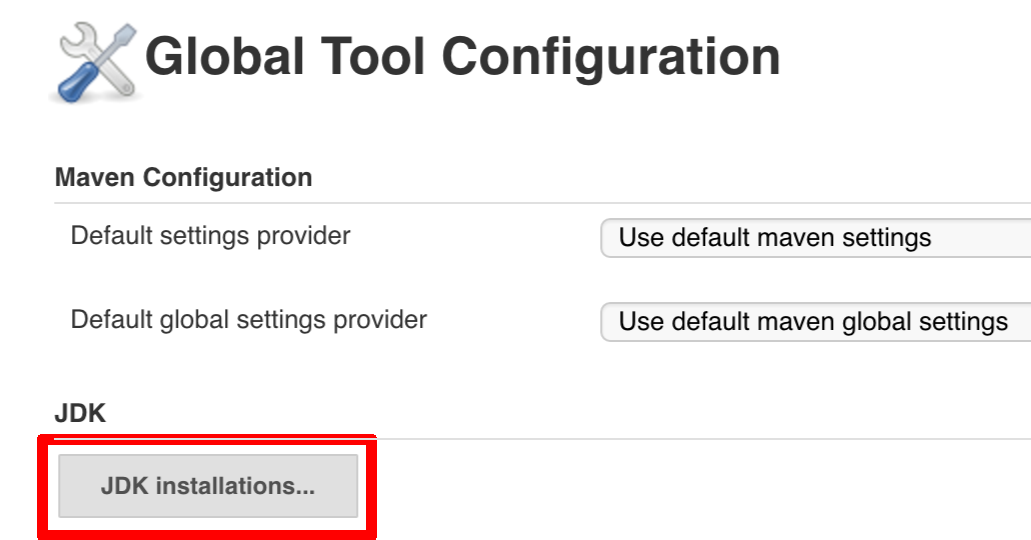

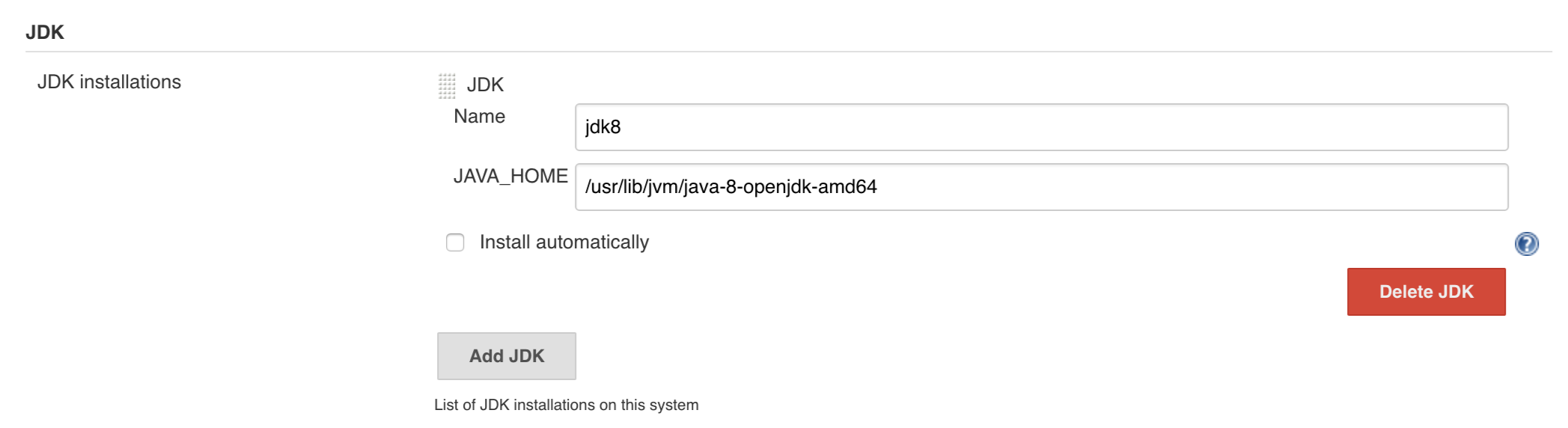

The docker image comes in with Java installed at /usr/lib/jvm/java-8-openjdk-amd64.

You can go to Global Tools and create a JDK with jdk8 id and JAVA_HOME

pointing to /usr/lib/jvm/java-8-openjdk-amd64

|

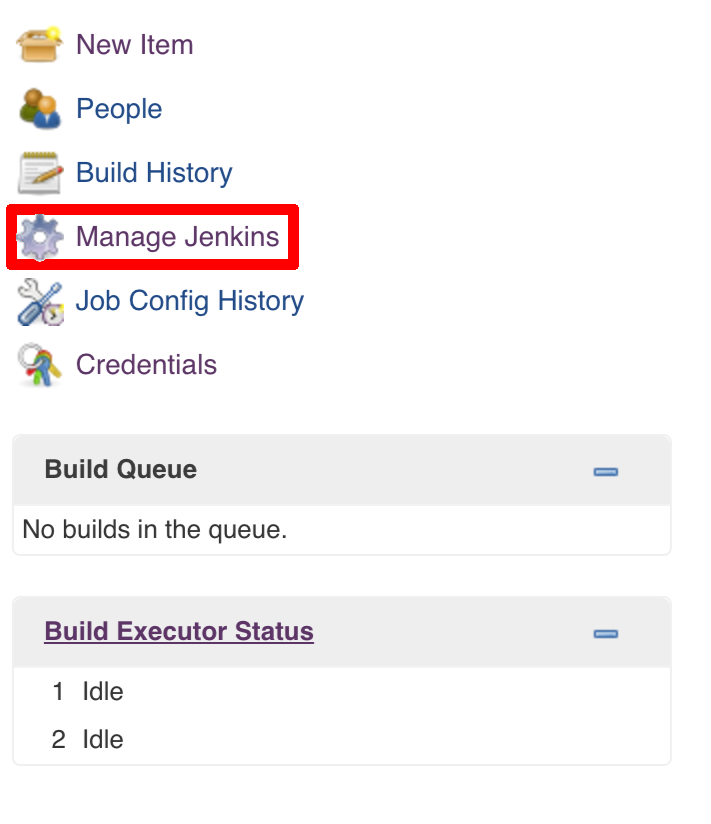

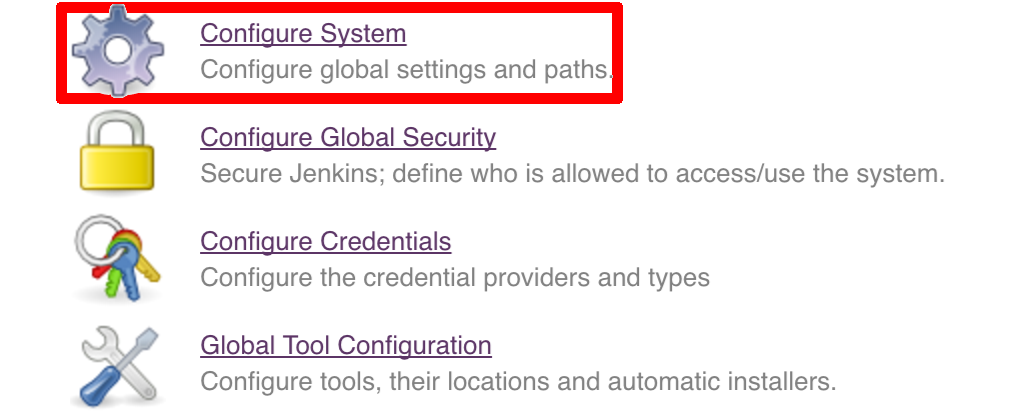

To change the default one just follow these steps:

And that’s it!

With scripted that but if you needed to this manually then this is how to do it:

No problem, just set the property / env var to true

-

AUTO_DEPLOY_TO_STAGEto automatically deploy to stage -

AUTO_DEPLOY_TO_PRODto automatically deploy to prod

No problem, just set the API_COMPATIBILITY_STEP_REQUIRED env variable

to false and rerun the seed (you can pick it from the seed

job’s properties too).

When you get sth like this:

19:01:44 stderr: remote: Invalid username or password.

19:01:44 fatal: Authentication failed for 'https://github.com/marcingrzejszczak/github-webhook/'

19:01:44

19:01:44 at org.jenkinsci.plugins.gitclient.CliGitAPIImpl.launchCommandIn(CliGitAPIImpl.java:1740)

19:01:44 at org.jenkinsci.plugins.gitclient.CliGitAPIImpl.launchCommandWithCredentials(CliGitAPIImpl.java:1476)

19:01:44 at org.jenkinsci.plugins.gitclient.CliGitAPIImpl.access$300(CliGitAPIImpl.java:63)

19:01:44 at org.jenkinsci.plugins.gitclient.CliGitAPIImpl$8.execute(CliGitAPIImpl.java:1816)

19:01:44 at hudson.plugins.git.GitPublisher.perform(GitPublisher.java:295)

19:01:44 at hudson.tasks.BuildStepMonitor$3.perform(BuildStepMonitor.java:45)

19:01:44 at hudson.model.AbstractBuild$AbstractBuildExecution.perform(AbstractBuild.java:779)

19:01:44 at hudson.model.AbstractBuild$AbstractBuildExecution.performAllBuildSteps(AbstractBuild.java:720)

19:01:44 at hudson.model.Build$BuildExecution.post2(Build.java:185)

19:01:44 at hudson.model.AbstractBuild$AbstractBuildExecution.post(AbstractBuild.java:665)

19:01:44 at hudson.model.Run.execute(Run.java:1745)

19:01:44 at hudson.model.FreeStyleBuild.run(FreeStyleBuild.java:43)

19:01:44 at hudson.model.ResourceController.execute(ResourceController.java:98)

19:01:44 at hudson.model.Executor.run(Executor.java:404)most likely you’ve passed a wrong password. Check the credentials section on how to update your credentials.

Most likely you’ve forgotten to update your local settings.xml with the Artifactory’s

setup. Check out this section of the docs and update your settings.xml.

In some cases it may be required that when performing a release that the artifacts be signed

before pushing them to the repository.

To do this you will need to import your GPG keys into the Docker image running Jenkins.

This can be done by placing a file called public.key containing your public key

and a file called private.key containing your private key in the seed directory.

These keys will be imported by the init.groovy script that is run when Jenkins starts.

The seed job checks if an env variable GIT_USE_SSH_KEY is set to true. If that’s the case

then env variable GIT_SSH_CREDENTIAL_ID will be chosen as the one that contains the

id of the credential that contains SSH private key. By default GIT_CREDENTIAL_ID will be picked

as the one that contains username and password to connect to git.

You can set these values in the seed job by filling out the form / toggling a checkbox.

There can be a number of reason but remember that for stage we assume that a sequence of manual steps need to be performed. We don’t redeploy any existing services cause most likely you deliberately have set it up in that way or the other. If in the logs of your application you can see that you can’t connect to a service, first ensure that the service is forwarding traffic to a pod. Next if that’s not the case please delete the service and re-run the step in the pipeline. That way Spring Cloud Pipelines will redeploy the service and the underlying pods.

[jenkins-cf-resources]] When deploying the app to stage or prod you can get an exception Insufficient resources. The way to

solve it is to kill some apps from test / stage env. To achieve that just call

cf target -o pcfdev-org -s pcfdev-test

cf stop github-webhook

cf stop github-eureka

cf stop stubrunnerYou can also execute ./tools/cf-helper.sh kill-all-apps that will remove all demo-related apps

deployed to PCF Dev.

If you receive a similar exception:

20:26:18 API endpoint: https://api.local.pcfdev.io (API version: 2.58.0)

20:26:18 User: user

20:26:18 Org: pcfdev-org

20:26:18 Space: No space targeted, use 'cf target -s SPACE'

20:26:18 FAILED

20:26:18 Error finding space pcfdev-test

20:26:18 Space pcfdev-test not foundIt means that you’ve forgotten to create the spaces in your PCF Dev installation.

If you play around with Jenkins / Concourse you might end up with the routes occupied

Using route github-webhook-test.local.pcfdev.io

Binding github-webhook-test.local.pcfdev.io to github-webhook...

FAILED

The route github-webhook-test.local.pcfdev.io is already in use.Just delete the routes

yes | cf delete-route local.pcfdev.io -n github-webhook-test

yes | cf delete-route local.pcfdev.io -n github-eureka-test

yes | cf delete-route local.pcfdev.io -n stubrunner-test

yes | cf delete-route local.pcfdev.io -n github-webhook-stage

yes | cf delete-route local.pcfdev.io -n github-eureka-stage

yes | cf delete-route local.pcfdev.io -n github-webhook-prod

yes | cf delete-route local.pcfdev.io -n github-eureka-prodYou can also execute the ./tools/cf-helper.sh delete-routes

Assuming that you’re already logged into the cluster it’s enough to run the

helper script with the REUSE_CF_LOGIN=true env variable. Example:

REUSE_CF_LOGIN=true ./tools/cf-helper.sh setup-prod-infraThis script will create the mysql db, rabbit mq service, download and deploy Eureka to the space and organization you’re logged into.

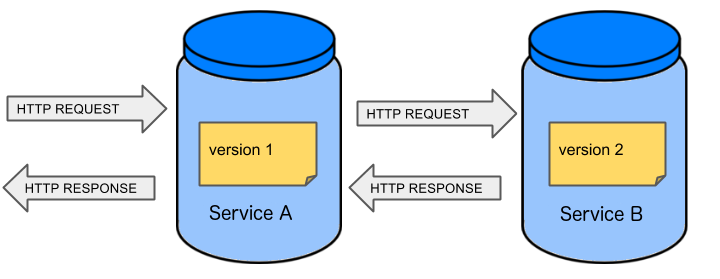

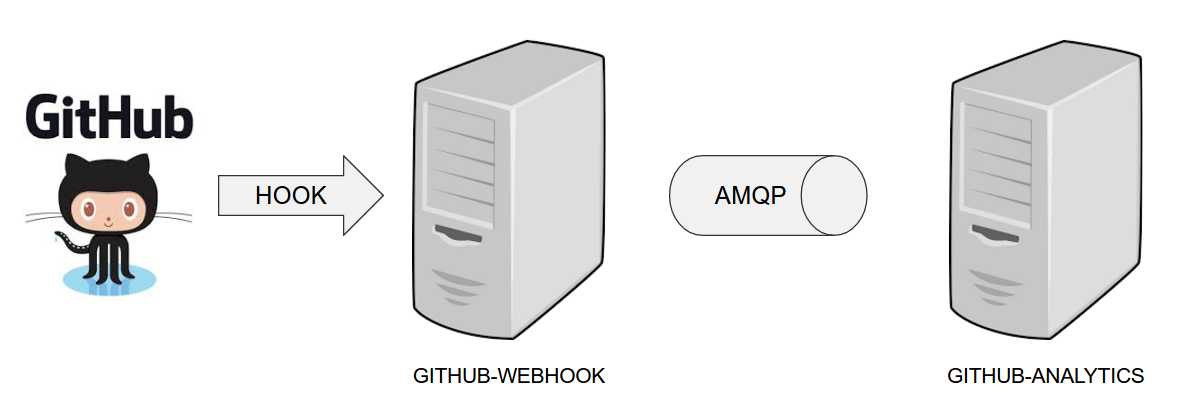

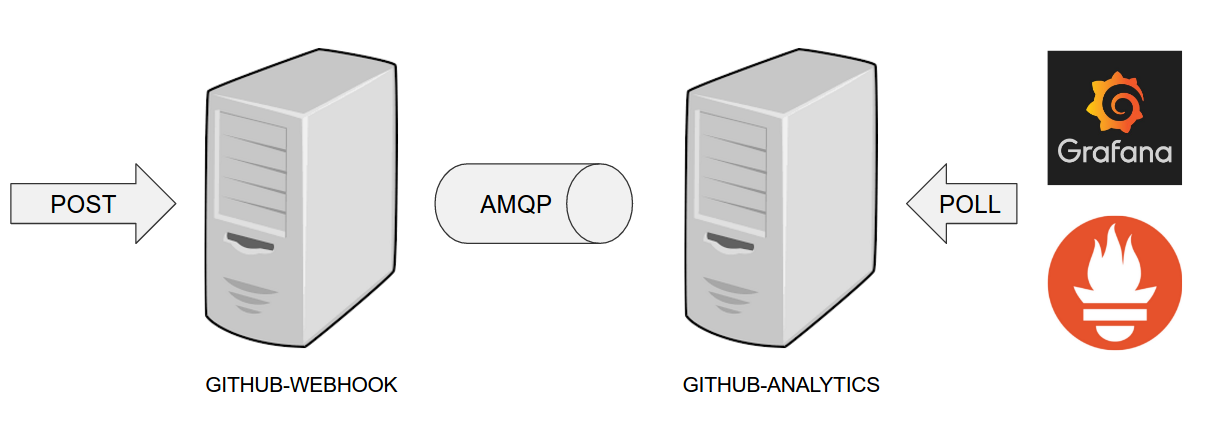

The demo uses 2 applications. Github Webhook and Github analytics code. Below you can see an image of how these application communicate with each other.

For the demo scenario we have two applications. Github Analytics and Github Webhook.

Let’s imagine a case where Github is emitting events via HTTP. Github Webhook has

an API that could register to such hooks and receive those messages. Once this happens

Github Webhook sends a message by RabbitMQ to a channel. Github Analytics is

listening to those messages and stores them in a MySQL database.

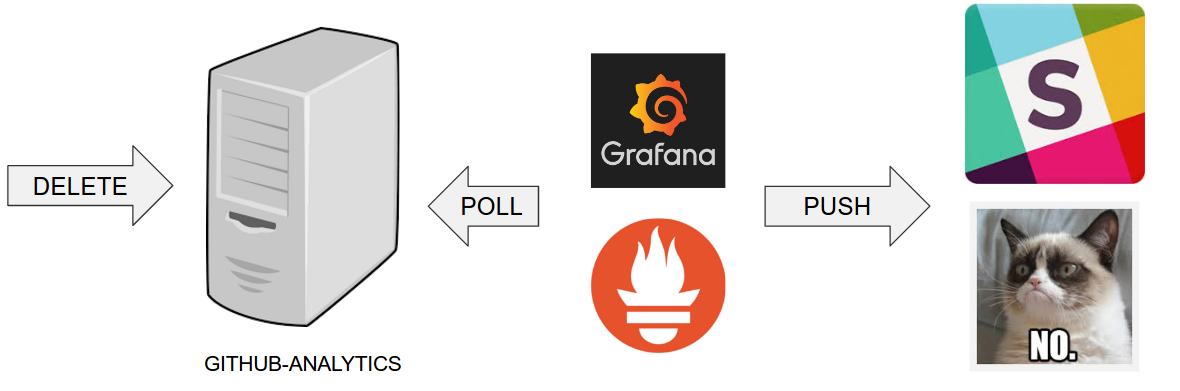

Github Analytics has its KPIs (Key Performance Indicators) monitored. In the case

of that application the KPI is number of issues.

Let’s assume that if we go below the threshold of X issues then an alert should be sent to Slack.

In the real world scenario we wouldn’t want to automatically provision services like

RabbitMQ, MySQL or Eureka each time we deploy a new application to production. Typically

production is provisioned manually (using automated solutions). In our case, before

you deploy to production you can provision the pcfdev-prod space using the

cf-helper.sh. Just call

$ ./cf-helper.sh setup-prod-infraWhat will happen is that the CF CLI will login to PCF Dev, target pcfdev-prod space,

setup RabbitMQ (under rabbitmq-github name), MySQL (under mysql-github-analytics name)

and Eureka (under github-eureka name).

You can check out Toshiaki Maki’s code on how to automate Prometheus installation on CF.

Go to https://prometheus.io/download/ and download linux binary. Then call:

cf push sc-pipelines-prometheus -b binary_buildpack -c './prometheus -web.listen-address=:8080' -m 64mAlso localhost:9090 in prometheus.yml should be localhost:8080.

The file should look like this to work with the demo setup (change github-analytics-sc-pipelines.cfapps.io

to your github-analytics installation).

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Attach these labels to any time series or alerts when communicating with

# external systems (federation, remote storage, Alertmanager).

external_labels:

monitor: 'codelab-monitor'

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first.rules"

# - "second.rules"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus'

# metrics_path defaults to '/metrics'

# scheme defaults to 'http'.

static_configs:

- targets: ['localhost:8080']

- job_name: 'demo-app'

# Override the global default and scrape targets from this job every 5 seconds.

scrape_interval: 5s

metrics_path: '/prometheus'

# scheme defaults to 'http'.

static_configs:

- targets: ['github-analytics-sc-pipelines.cfapps.io']A deployed version for the Spring Cloud Pipelines demo is available here

You can check out Toshiaki Maki’s code on how to automate Prometheus installation on CF.

Download tarball from https://grafana.com/grafana/download?platform=linux

Next set http_port = 8080 in conf/default.ini. Then call

cf push sc-pipelines-grafana -b binary_buildpack -c './bin/grafana-server web' -m 64mThe demo is using Grafana Dashboard with ID 2471.

A deployed version for the Spring Cloud Pipelines demo is available here

As prerequisites you need to have shellcheck,

bats, jq

and ruby installed. If you’re on a Linux

machine then bats and shellcheck will be installed for you.

To install the required software on Linux just type the following commands

$ sudo apt-get install -y ruby jqIf you’re on a Mac then just execute these commands to install the missing software

$ brew install jq

$ brew install ruby

$ brew install bats

$ brew install shellcheckTo make bats work properly we needed to attach Git submodules. To have them

initialized either clone with appropriate command

$ git clone --recursive https://github.com/spring-cloud/spring-cloud-pipelines.gitor if you have already cloned the project and are just pulling changes

$ git submodule init

$ git submodule updateIf you forget about this step, then Gradle will execute these steps for you.

Once you have installed all the prerequisites you can execute

$ ./gradlew clean buildto build and test the project.

If you want to pick only pieces (e.g. you’re interested only in Cloud Foundry with

Concourse combination) it’s enough to execute this command:

$ ./gradlew customizeYou’ll see a screen looking more or less like this:

:customize

___ _ ___ _ _ ___ _ _ _

/ __|_ __ _ _(_)_ _ __ _ / __| |___ _ _ __| | | _ (_)_ __ ___| (_)_ _ ___ ___

\__ \ '_ \ '_| | ' \/ _` | | (__| / _ \ || / _` | | _/ | '_ \/ -_) | | ' \/ -_|_-<

|___/ .__/_| |_|_||_\__, | \___|_\___/\_,_\__,_| |_| |_| .__/\___|_|_|_||_\___/__/

|_| |___/ |_|

Follow the instructions presented in the console or terminate the process to quit (ctrl + c)

=== PAAS TYPE ===

Which PAAS type do you want to use? Options: [CF, K8S, BOTH]

<-------------> 0% EXECUTING

> :customizeNow you need to answer a couple of questions. That way whole files and its pieces

will get removed / updated accordingly. If you provide CF and Concourse options

thn Kubernetes and Jenkins configuration / folders / pieces of code in

the project will get removed.

When doing a release you also need to push a Docker image to Dockerhub.

From the project root, run the following commands replacing <version> with the

version of the release.

docker login

docker build -t springcloud/spring-cloud-pipeline-jenkins:<version> ./jenkins

docker push springcloud/spring-cloud-pipeline-jenkins:<version>