Features • Usage • Configuration • Development • Credits • License

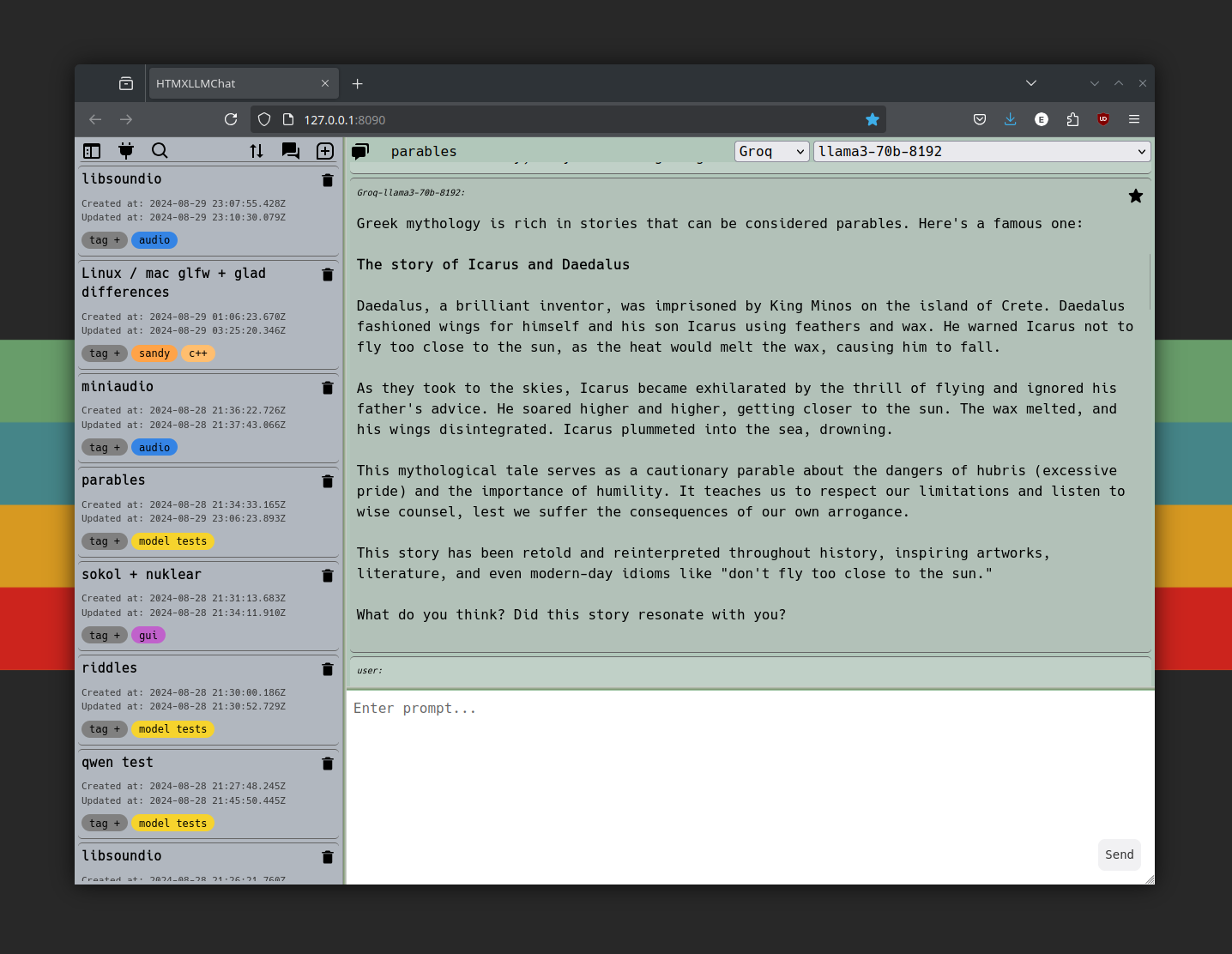

## Features

- Connect to any OpenAI compatible API, local or external.

- Switch between APIs and models within conversations.

- Search thread history based on content, tags, models, and usefulness.

- Tag threads to keep common topics readily accessible.

- Mark messages as useful so they are easy to find later and feed a basic model ranking system.

- Customize colors to your preference.
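Thread search combines several independent filters. The Go sketch below shows one way such a combined filter could work. It uses hypothetical `Thread` and `Query` types rather than the app's actual data model: each non-empty query field narrows the results, and text matching is a case-insensitive substring search over the title and messages.

```go
package main

import (
	"fmt"
	"strings"
)

// Thread is a hypothetical, simplified record; it is not htmx-llmchat's schema.
type Thread struct {
	Title    string
	Messages []string
	Tags     []string
	Models   []string // models used anywhere in the thread
	Useful   bool     // at least one message marked useful
}

// Query holds optional filters; a zero value means "don't filter on this field".
type Query struct {
	Text       string
	Tag        string
	Model      string
	UsefulOnly bool
}

// contains reports whether list holds want, ignoring case.
func contains(list []string, want string) bool {
	for _, s := range list {
		if strings.EqualFold(s, want) {
			return true
		}
	}
	return false
}

// Matches reports whether th satisfies every filter set in q.
func (q Query) Matches(th Thread) bool {
	if q.Tag != "" && !contains(th.Tags, q.Tag) {
		return false
	}
	if q.Model != "" && !contains(th.Models, q.Model) {
		return false
	}
	if q.UsefulOnly && !th.Useful {
		return false
	}
	if q.Text == "" {
		return true
	}
	needle := strings.ToLower(q.Text)
	if strings.Contains(strings.ToLower(th.Title), needle) {
		return true
	}
	for _, m := range th.Messages {
		if strings.Contains(strings.ToLower(m), needle) {
			return true
		}
	}
	return false
}

// Search returns the threads that match q, preserving input order.
func Search(threads []Thread, q Query) []Thread {
	var out []Thread
	for _, th := range threads {
		if q.Matches(th) {
			out = append(out, th)
		}
	}
	return out
}

func main() {
	threads := []Thread{
		{Title: "Go generics", Messages: []string{"How do type parameters work?"}, Tags: []string{"go"}, Models: []string{"llama3"}, Useful: true},
		{Title: "Dinner ideas", Messages: []string{"Quick pasta recipes"}, Tags: []string{"food"}, Models: []string{"gpt-4o"}},
	}
	for _, th := range Search(threads, Query{Text: "type parameters", UsefulOnly: true}) {
		fmt.Println(th.Title)
	}
}
```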

## Usage

- Download from releases

- Open a command prompt in the htmx-llmchat directory and start the server:

```sh
./htmx-llmchat serve
```

Note: The binaries are unsigned and mostly untested, so you may encounter security warnings before being able to run them on macOS and Windows.

To build and run this application from source, you'll need Git, Go, and the templ CLI installed on your computer. From your command line:

```sh
git clone https://github.com/erikmillergalow/htmx-llmchat.git
cd htmx-llmchat
templ generate
go run main.go serve
```

- Connect to 127.0.0.1:8090 in a web browser

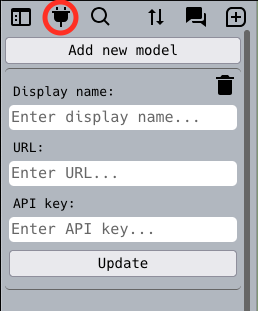

- Open the API editor and add an API:

- Enter a display name, the OpenAI compatible API /v1 endpoint, and an API key (not always necessary)

- Press update for changes to take effect
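The endpoint entered here acts as a base URL: OpenAI-style paths such as `/models` and `/chat/completions` are appended to it, and a key, when present, is conventionally sent as a Bearer token. As a minimal sketch under those assumptions, the hypothetical `APIConfig` type below models the three fields from the API editor. It is an illustration, not HTMXLLMChat's own code.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// APIConfig mirrors the three fields entered in the API editor.
// The type and its field names are hypothetical, for illustration only.
type APIConfig struct {
	DisplayName string
	BaseURL     string // the OpenAI compatible /v1 endpoint
	APIKey      string // may be empty for servers that need no key
}

// Endpoint appends an API path (e.g. "models") to the /v1 base URL,
// tolerating a trailing slash on the base or a leading slash on the path.
func (c APIConfig) Endpoint(path string) string {
	return strings.TrimRight(c.BaseURL, "/") + "/" + strings.TrimLeft(path, "/")
}

// NewRequest builds a request against the configured API and adds the key
// as a Bearer token only when one was provided.
func (c APIConfig) NewRequest(method, path string) (*http.Request, error) {
	req, err := http.NewRequest(method, c.Endpoint(path), nil)
	if err != nil {
		return nil, err
	}
	if c.APIKey != "" {
		req.Header.Set("Authorization", "Bearer "+c.APIKey)
	}
	return req, nil
}

func main() {
	ollama := APIConfig{DisplayName: "Ollama", BaseURL: "http://localhost:11434/v1", APIKey: "ollama"}
	req, err := ollama.NewRequest(http.MethodGet, "models")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.URL.String(), req.Header.Get("Authorization"))
}
```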

- Ollama: easy setup, and easy to download and manage models.

- Download Ollama

- Run Ollama and download models listed here

- HTMXLLMChat Config:

  - URL: http://localhost:11434/v1

  - API Key: ollama

- Quickstart

- HTMXLLMChat Config:

  - URL: http://0.0.0.0:8080/v1

  - No API key needed

- Option to run llama-cpp-python in OpenAI compatible server mode

- Create a config file to enable multi-model support, then start the server with it:

```sh
python3 -m llama_cpp.server --config_file <config_file>
```

- HTMXLLMChat Config:

  - URL: http://0.0.0.0:8080/v1

  - No API key needed
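The config file format is defined by llama-cpp-python, not by HTMXLLMChat. As a sketch, assuming the JSON form with top-level `host`, `port`, and `models` keys and per-model `model` (path to a GGUF file) and `model_alias` (the name clients select) fields, the Go program below prints a two-model config. The model paths and aliases are placeholders, and the server accepts many more options than shown here.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ModelSettings covers only two per-model fields of llama-cpp-python's
// config file; the server supports many more (context size, GPU layers, ...).
type ModelSettings struct {
	Model      string `json:"model"`       // path to a local GGUF file
	ModelAlias string `json:"model_alias"` // name clients use to pick this model
}

// ServerConfig is the top level of the config file.
type ServerConfig struct {
	Host   string          `json:"host"`
	Port   int             `json:"port"`
	Models []ModelSettings `json:"models"`
}

func buildConfig() ServerConfig {
	return ServerConfig{
		Host: "0.0.0.0",
		Port: 8080, // matches the URL entered in HTMXLLMChat
		Models: []ModelSettings{
			// Hypothetical paths; point these at models you have downloaded.
			{Model: "models/mistral-7b-instruct.Q4_K_M.gguf", ModelAlias: "mistral-7b"},
			{Model: "models/llama-3-8b-instruct.Q4_K_M.gguf", ModelAlias: "llama-3-8b"},
		},
	}
}

func main() {
	data, err := json.MarshalIndent(buildConfig(), "", "  ")
	if err != nil {
		panic(err)
	}
	// Print the JSON so it can be redirected into the file passed to --config_file.
	fmt.Println(string(data))
}
```

Redirect the program's output to a file such as `config.json` (or write the same JSON by hand) and pass that path as `<config_file>`.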

- Groq: offers Llama, Gemma, and Mistral models on the free tier, with fast responses.

- Create an account

- Generate an API key

- HTMXLLMChat Config:

  - URL: https://api.groq.com/openai/v1

  - Paste the API key generated in the web console

- OpenAI API: pay as you go instead of paying the flat ChatGPT Plus rate, with access to a wider range of models.

- Create an account

- Generate an API key

- HTMXLLMChat Config:

  - URL: https://api.openai.com/v1

  - Paste the API key generated in the web console

Note: Many other options are available; just make sure they support `/v1/chat/completions` streaming and `/v1/models` for listing model options.

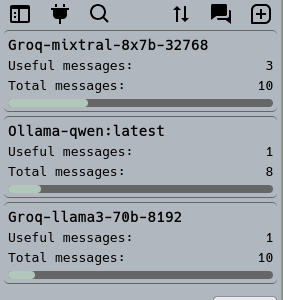

Note Total and 'useful' chats tracked over time per model and can be viewed in the config menu:
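As a hypothetical illustration of how per-model counters like these could feed the basic model ranking mentioned above, the sketch below orders models by the share of their chats marked useful and breaks ties by total chats, so a model with more evidence ranks higher. The `ModelStats` type, the ranking rule, and the sample numbers are assumptions made for this example, not the app's actual implementation.

```go
package main

import (
	"fmt"
	"sort"
)

// ModelStats holds hypothetical per-model counters.
type ModelStats struct {
	Model  string
	Total  int // chats that used this model
	Useful int // of those, chats with a message marked useful
}

// UsefulRate returns Useful/Total, or 0 for a model with no chats yet.
func (s ModelStats) UsefulRate() float64 {
	if s.Total == 0 {
		return 0
	}
	return float64(s.Useful) / float64(s.Total)
}

// Rank returns a copy of stats ordered by useful rate (highest first),
// breaking ties by total chats.
func Rank(stats []ModelStats) []ModelStats {
	out := append([]ModelStats(nil), stats...)
	sort.SliceStable(out, func(i, j int) bool {
		ri, rj := out[i].UsefulRate(), out[j].UsefulRate()
		if ri != rj {
			return ri > rj
		}
		return out[i].Total > out[j].Total
	})
	return out
}

func main() {
	// Made-up sample counts, for illustration only.
	stats := []ModelStats{
		{Model: "gpt-4o", Total: 40, Useful: 22},
		{Model: "llama3", Total: 10, Useful: 7},
		{Model: "gemma", Total: 20, Useful: 14},
	}
	for _, s := range Rank(stats) {
		fmt.Printf("%-8s %d/%d (%.0f%%)\n", s.Model, s.Useful, s.Total, 100*s.UsefulRate())
	}
}
```

A raw ratio overrates models with very few chats; the tie-break only partly addresses that, and a real ranking might also require a minimum number of chats.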

## Development

- air can be installed for live reloading

  - An `.air.toml` is included in the repo

```sh
# run for development
air

# build binaries
make all
```

- Tauri can be used to package everything as a standalone desktop app (no browser needed)

```sh
# development mode
npm run tauri dev

# create release bundle
# beforeBuildCommand in src-tauri/tauri.conf.json is configured for GitHub Actions
# and needs to be modified for local builds
npm run tauri build
```

- Runs on macOS successfully, but ran into this issue while creating the release bundle for Linux/Windows. Still need to determine whether the paths API or BaseDirectory can be used to give the Pocketbase sidecar access to a filesystem that isn't read-only.

## Credits

This software uses the following open source packages:

- sashabaranov/go-openai

- Pocketbase

- Templ

- HTMX

- Air

- Tauri (used in an attempt to package everything as an all-in-one program; incomplete)

## License

MIT