This repository contains the essential code for the paper ConfliBERT: A Pre-trained Language Model for Political Conflict and Violence (NAACL 2022).

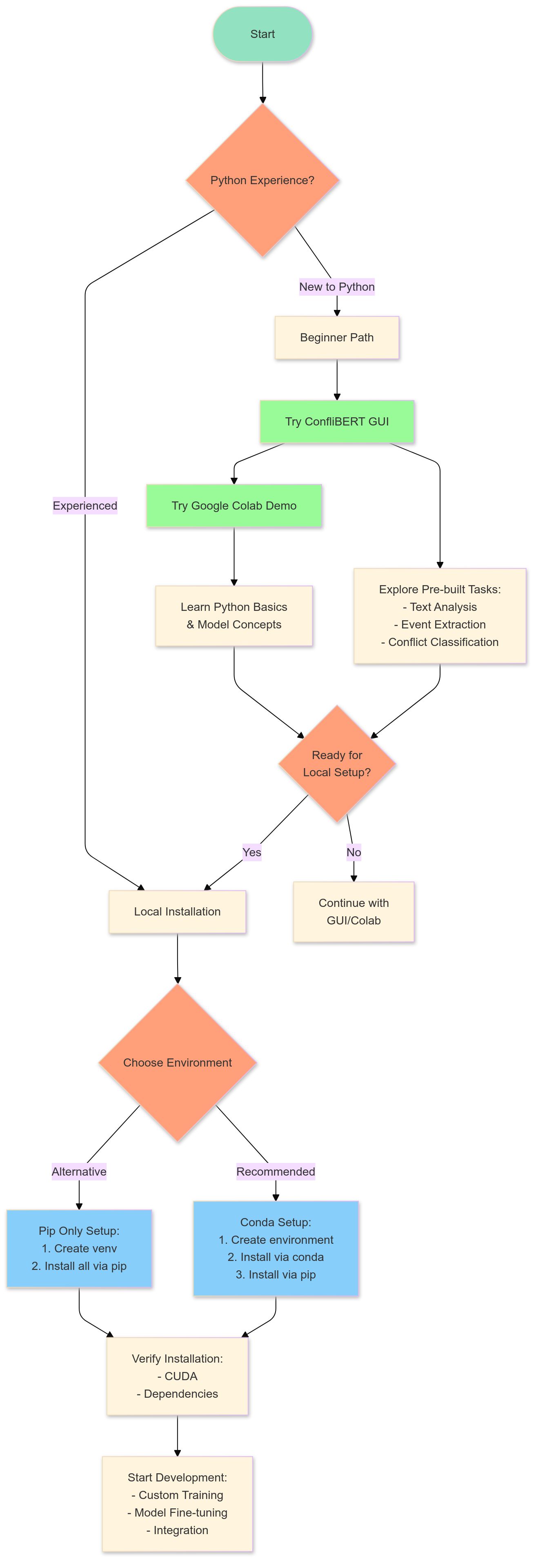

Not sure where to start, or why a researcher would use ConfliBERT? See our Installation Decision Workflow to find the best path for your experience level and needs.

We offer multiple ways to get started with ConfliBERT:

- Browser-Based Options

  - Try ConfliBERT directly in your browser with no installation required

  - Cloud GUI: access through our hosted web interface

- Local GUI Installation: run ConfliBERT's interface on your own machine for enhanced privacy and speed.

Clone the repository to a local directory (do not clone to cloud storage; venv installs will be very slow if you do):

git clone https://github.com/shreyasmeher/conflibert-gui.git

cd conflibert-gui

Create and activate a virtual environment:

python -m venv env

source env/bin/activate # On Windows, use: env\Scripts\activate

Install required packages:

pip install -r requirements.txt

Start the application:

python app.py

The app will provide two URLs in the terminal:

- A local URL (e.g., http://127.0.0.1:7860 or http://localhost:7860)

- A public URL (to access from other devices)

Open either URL in your web browser to use the interface.

If you're comfortable with Python and want to set up ConfliBERT locally, continue with the installation guide below.

- Original Paper

- Hugging Face Documentation

- EventData Hugging Face (finetuned models)

- ConfliBERT Documentation (Work in Progress!)

ConfliBERT requires Python 3; CUDA is needed only for GPU acceleration. You can install the dependencies using either conda (recommended) or pip.

# Create and activate a new conda environment

conda create -n conflibert python=3.10 # Using a newer Python version for better compatibility

conda activate conflibert

# Install core packages

conda install pytorch -c pytorch # Latest stable version

conda install numpy scikit-learn pandas -c conda-forge # Latest compatible versions

# Install transformer libraries

pip install transformers # Latest stable version

pip install simpletransformers

# Optional: If you need CUDA support for GPU

# conda install cudatoolkit -c pytorch

# Create and activate a virtual environment (optional but recommended)

python3 -m venv conflibert-env

source conflibert-env/bin/activate # On Windows use: conflibert-env\Scripts\activate

# Install core packages

pip install torch # Latest stable version

pip install numpy scikit-learn pandas # Latest compatible versions

# Install transformer libraries

pip install transformers

pip install simpletransformers

# Optional: If you need GPU support, install CUDA toolkit

# Download from: https://developer.nvidia.com/cuda-downloads

After installation, verify your setup:

import torch

import transformers

import numpy

import sklearn

import pandas

from simpletransformers.model import TransformerModel

# Check CUDA availability

print(f"CUDA available: {torch.cuda.is_available()}")

print(f"PyTorch version: {torch.__version__}")

print(f"Transformers version: {transformers.__version__}")

- If you encounter CUDA errors, ensure your NVIDIA drivers are properly installed:

nvidia-smi

- For pip-only installation, you might need to install CUDA toolkit separately

- If you face dependency conflicts, try installing packages one at a time
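When one package is missing, the verification script above stops at the first failed import. The sketch below is one way to get a full report instead. It uses only the standard library; the mapping from import names to pip distribution names (for example `sklearn` to `scikit-learn`) is an assumption about how the packages were installed.

```python
import importlib.util
from importlib import metadata

# Import names used in the verification script, mapped to the distribution
# names used for the version lookup (assumed to match your installation).
PACKAGES = {
    "torch": "torch",
    "transformers": "transformers",
    "numpy": "numpy",
    "sklearn": "scikit-learn",
    "pandas": "pandas",
    "simpletransformers": "simpletransformers",
}


def check_environment(packages=PACKAGES):
    """Return {import_name: version string, or None if the module is not installed}."""
    report = {}
    for import_name, dist_name in packages.items():
        # find_spec locates the module without importing it.
        if importlib.util.find_spec(import_name) is None:
            report[import_name] = None
            continue
        try:
            report[import_name] = metadata.version(dist_name)
        except metadata.PackageNotFoundError:
            # Module is importable but its metadata is filed under another name.
            report[import_name] = "unknown"
    return report


for name, version in check_environment().items():
    print(f"{name:20s} {version or 'MISSING'}")
```

Any line marked `MISSING` points to the package to reinstall first.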

We provide four versions of ConfliBERT:

- ConfliBERT-scr-uncased: Pretraining from scratch with our own uncased vocabulary (preferred)

- ConfliBERT-scr-cased: Pretraining from scratch with our own cased vocabulary

- ConfliBERT-cont-uncased: Continual pretraining with original BERT's uncased vocabulary

- ConfliBERT-cont-cased: Continual pretraining with original BERT's cased vocabulary

You can load any of the four models directly through the Hugging Face API:

from transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("snowood1/ConfliBERT-scr-uncased", use_auth_token=True)

model = AutoModelForMaskedLM.from_pretrained("snowood1/ConfliBERT-scr-uncased", use_auth_token=True)

ConfliBERT is used in the same way as any other BERT model in Hugging Face.
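To switch between the four versions without retyping identifiers, a small helper can build the model ID from the pretraining strategy and casing. This is only a sketch: the helper names are hypothetical, and the naming pattern is inferred from the single ID shown above (`snowood1/ConfliBERT-scr-uncased`), so the other three IDs are assumptions to check on the Hugging Face Hub.

```python
# Namespace and pattern inferred from "snowood1/ConfliBERT-scr-uncased";
# the remaining three IDs are assumed to follow the same pattern.
NAMESPACE = "snowood1"
STRATEGIES = ("scr", "cont")   # pretraining from scratch / continual pretraining
CASINGS = ("uncased", "cased")


def conflibert_id(strategy="scr", casing="uncased"):
    """Build a model ID such as 'snowood1/ConfliBERT-scr-uncased'."""
    if strategy not in STRATEGIES:
        raise ValueError(f"strategy must be one of {STRATEGIES}, got {strategy!r}")
    if casing not in CASINGS:
        raise ValueError(f"casing must be one of {CASINGS}, got {casing!r}")
    return f"{NAMESPACE}/ConfliBERT-{strategy}-{casing}"


def load_conflibert(strategy="scr", casing="uncased", **hub_kwargs):
    """Load tokenizer and masked-LM model; downloads weights on first use.

    Extra keyword arguments are forwarded unchanged to from_pretrained.
    """
    # Imported lazily so conflibert_id works without transformers installed.
    from transformers import AutoModelForMaskedLM, AutoTokenizer

    model_id = conflibert_id(strategy, casing)
    tokenizer = AutoTokenizer.from_pretrained(model_id, **hub_kwargs)
    model = AutoModelForMaskedLM.from_pretrained(model_id, **hub_kwargs)
    return tokenizer, model
```

For example, `tokenizer, model = load_conflibert("cont", "cased")` would request the continually pretrained cased model, provided that ID exists on the Hub.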

We provide multiple examples using Simple Transformers. You can run:

CUDA_VISIBLE_DEVICES=0 python finetune_data.py --dataset IndiaPoliceEvents_sents --report_per_epoch

See the Colab demo for an example of evaluation.

Below is the summary of the publicly available datasets:

| Dataset | Links |
|---|---|
| 20Newsgroups | https://www.kaggle.com/crawford/20-newsgroups |
| BBCnews | https://www.kaggle.com/c/learn-ai-bbc/overview |
| EventStatusCorpus | https://catalog.ldc.upenn.edu/LDC2017T09 |
| GlobalContention | https://github.com/emerging-welfare/glocongold/tree/master/sample |
| GlobalTerrorismDatabase | https://www.start.umd.edu/gtd/ |
| Gun Violence Database | http://gun-violence.org/download/ |
| IndiaPoliceEvents | https://github.com/slanglab/IndiaPoliceEvents |
| InsightCrime | https://figshare.com/s/73f02ab8423bb83048aa |
| MUC-4 | https://github.com/xinyadu/grit_doc_event_entity/tree/master/data/muc |
| re3d | https://github.com/juand-r/entity-recognition-datasets/tree/master/data/re3d |
| SATP | https://github.com/javierosorio/SATP |
| CAMEO | https://dl.acm.org/doi/abs/10.1145/3514094.3534178 |

To use your own datasets, first preprocess them into the required formats in ./data. For example:

- IndiaPoliceEvents_sents for classification tasks. The format is sentence + labels separated by tabs.

- re3d for NER tasks, in CoNLL format.

Second, create the corresponding config files in ./configs, choosing the correct task from ["binary", "multiclass", "multilabel", "ner"].
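Before writing a config, it can help to confirm that your files parse as expected. The sketch below reads the two formats described above and validates the task name. The function names are hypothetical, and the exact layouts are assumptions: the sentence is taken to be the first tab-separated field with all remaining fields as labels, and CoNLL lines are taken to hold the token first and the tag last, with blank lines between sentences. Compare against the sample files in ./data before relying on it.

```python
TASKS = ["binary", "multiclass", "multilabel", "ner"]


def check_task(task):
    """Return the task name if it is one of the four supported values."""
    if task not in TASKS:
        raise ValueError(f"task must be one of {TASKS}, got {task!r}")
    return task


def read_classification_tsv(lines):
    """Parse 'sentence<TAB>label[<TAB>label...]' lines into (sentence, labels)."""
    rows = []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            continue  # skip blank lines
        sentence, *labels = line.split("\t")
        rows.append((sentence, labels))
    return rows


def read_conll(lines):
    """Parse CoNLL-style lines into sentences of (token, tag) pairs."""
    sentences, current = [], []
    for line in lines:
        line = line.strip()
        if not line:
            # A blank line closes the current sentence.
            if current:
                sentences.append(current)
                current = []
            continue
        fields = line.split()
        current.append((fields[0], fields[-1]))  # token first, tag last
    if current:
        sentences.append(current)
    return sentences


print(read_classification_tsv(["Police arrested two men.\t1"]))
print(read_conll(["Kabul B-LOC", "fell O", "", "Today O"]))
```

Both readers accept any iterable of lines, so an open file handle can be passed directly.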

We have gathered a large corpus (33 GB) in the politics and conflict domain for pretraining ConfliBERT. The folder ./pretrain-corpora/Crawlers and Processes contains the sample scripts used to generate the corpus used in this study. Because of copyright restrictions, we provide only a few samples in ./pretrain-corpora/Samples. These samples follow a one-sentence-per-line format. See Section 2 and the Appendix of our paper for more details on the pretraining corpora.
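If you want to prepare your own text in the same one-sentence-per-line layout, the following sketch shows the idea. It is not the authors' pipeline (their scripts are in ./pretrain-corpora/Crawlers and Processes). The function names are hypothetical, and the splitter is deliberately naive: it breaks on ".", "!" or "?" followed by whitespace, so abbreviations such as "U.S." will be split incorrectly.

```python
import re

# Naive sentence boundary: end punctuation followed by whitespace.
_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def split_sentences(text):
    """Split raw text into sentences with a simple punctuation rule."""
    text = " ".join(text.split())  # collapse newlines and repeated spaces
    return [s for s in _BOUNDARY.split(text) if s]


def to_one_sentence_per_line(documents):
    """Join the sentences of all documents, one sentence per output line."""
    lines = []
    for doc in documents:
        lines.extend(split_sentences(doc))
    return "".join(line + "\n" for line in lines)


print(to_one_sentence_per_line(["Troops advanced.  The city\nfell! Why?"]), end="")
```

For a real corpus, a trained sentence splitter would be a safer choice than this regular expression.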

We used the run_mlm.py pretraining script from Hugging Face (the original link). Below is an example using 8 GPUs. Our parameters are listed in the Appendix of the paper; adjust them to match your own hardware:

export NGPU=8; nohup python -m torch.distributed.launch --master_port 12345 \

--nproc_per_node=$NGPU run_mlm.py \

--model_type bert \

--config_name ./bert_base_cased \

--tokenizer_name ./bert_base_cased \

--output_dir ./bert_base_cased \

--cache_dir ./cache_cased_128 \

--use_fast_tokenizer \

--overwrite_output_dir \

--train_file YOUR_TRAIN_FILE \

--validation_file YOUR_VALID_FILE \

--max_seq_length 128 \

--preprocessing_num_workers 4 \

--dataloader_num_workers 2 \

--do_train --do_eval \

--learning_rate 5e-4 \

--warmup_steps=10000 \

--save_steps 1000 \

--evaluation_strategy steps \

--eval_steps 10000 \

--prediction_loss_only \

--save_total_limit 3 \

--per_device_train_batch_size 64 --per_device_eval_batch_size 64 \

--gradient_accumulation_steps 4 \

--logging_steps=100 \

--max_steps 100000 \

--adam_beta1 0.9 --adam_beta2 0.98 --adam_epsilon 1e-6 \

--fp16 True --weight_decay=0.01
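When adapting the command to different hardware, the quantity to keep in view is the effective batch size: number of GPUs × per-device batch size × gradient accumulation steps. The sketch below works through that arithmetic for the example above, and also shows the learning rate at a given step assuming the Trainer's default linear schedule with warmup (the command does not set a scheduler, so this is an assumption). The function names are illustrative only.

```python
def effective_batch_size(n_gpus, per_device_batch, grad_accum_steps):
    """Sequences consumed per optimizer step across all devices."""
    return n_gpus * per_device_batch * grad_accum_steps


def linear_warmup_lr(step, peak_lr=5e-4, warmup_steps=10_000, max_steps=100_000):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    return peak_lr * max(0.0, (max_steps - step) / (max_steps - warmup_steps))


# Values taken from the example command: 8 GPUs, batch size 64, accumulation 4.
batch = effective_batch_size(n_gpus=8, per_device_batch=64, grad_accum_steps=4)
print(batch)            # 2048 sequences per optimizer step
print(batch * 128)      # 262144 tokens per step at most (max_seq_length 128)
print(batch * 100_000)  # 204800000 sequences over max_steps

for step in (5_000, 10_000, 55_000, 100_000):
    print(step, linear_warmup_lr(step))
```

With fewer GPUs, raising `--gradient_accumulation_steps` by the same factor keeps the effective batch size at 2048; for instance, 2 GPUs with 16 accumulation steps gives the same value.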

If you find this repo useful in your research, please consider citing:

@inproceedings{hu2022conflibert,

title={ConfliBERT: A Pre-trained Language Model for Political Conflict and Violence},

author={Hu, Yibo and Hosseini, MohammadSaleh and Parolin, Erick Skorupa and Osorio, Javier and Khan, Latifur and Brandt, Patrick and D’Orazio, Vito},

booktitle={Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies},

pages={5469--5482},

year={2022}

}

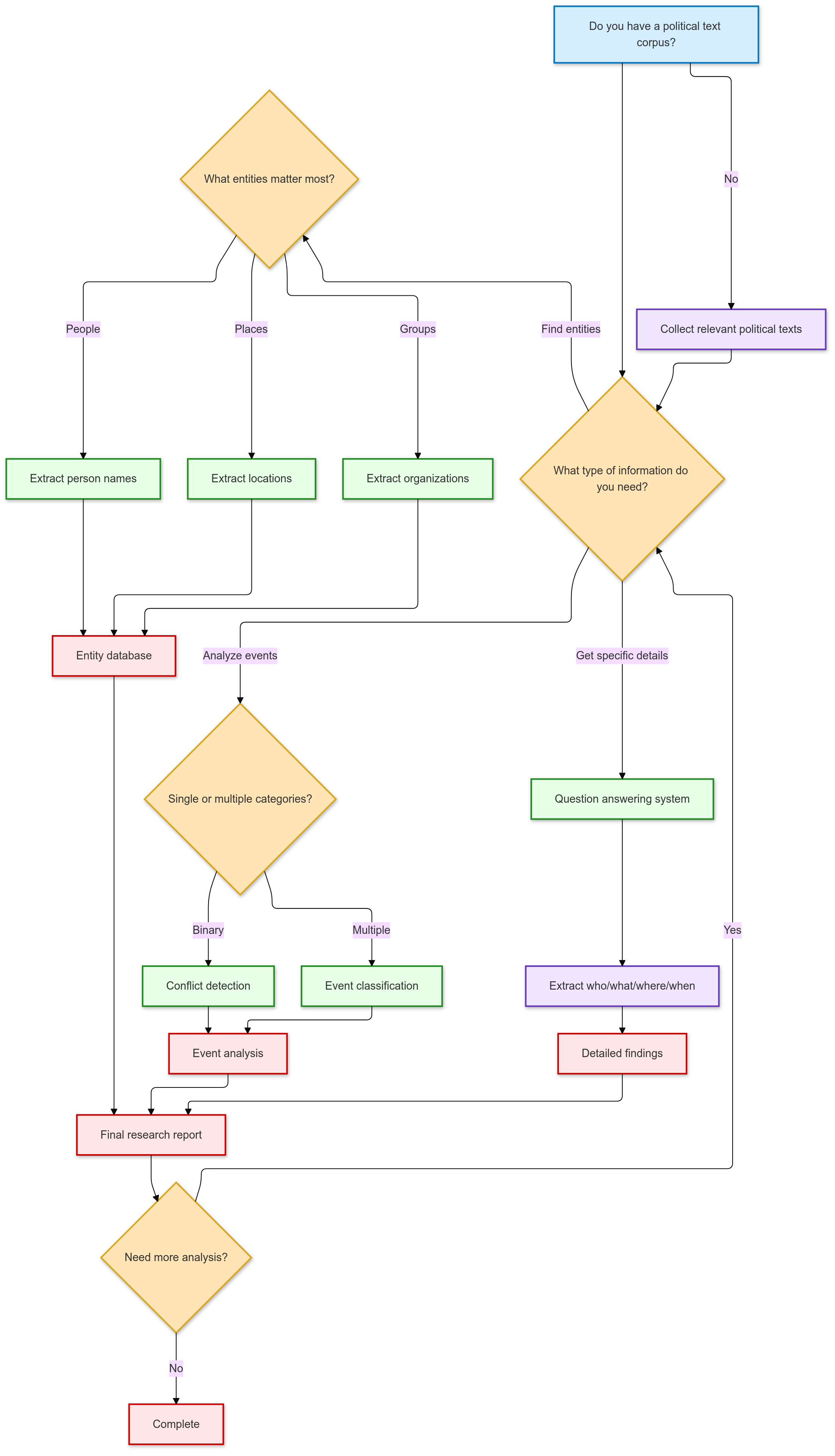

These workflows help you navigate both technical setup and research planning with ConfliBERT.