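MongoDB GridFS comes with some natural advantages, such as scalability (sharding) and high availability (replica sets). But because it stores each file as a series of binary chunks inside BSON documents, reading a file back involves more work than reading it directly from disk, so some performance loss is to be expected.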
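To make the chunking concrete, here is a minimal pure-Python sketch of how a file's bytes map onto GridFS chunk documents. It does not talk to MongoDB. The field names `files_id`, `n` and `data` follow the GridFS spec. The 255 KiB chunk size is the default in current drivers, and older drivers used 256 KiB. The helper names are hypothetical.

```python
# Default GridFS chunk size in current drivers (255 KiB); older drivers used 256 KiB.
DEFAULT_CHUNK_SIZE = 255 * 1024


def split_into_chunks(file_id, data, chunk_size=DEFAULT_CHUNK_SIZE):
    """Return fs.chunks-style documents for `data` (a bytes object).

    Each chunk carries the parent file's id, its sequence number `n`,
    and up to `chunk_size` bytes of raw binary data.
    """
    return [
        {"files_id": file_id, "n": n, "data": data[offset:offset + chunk_size]}
        for n, offset in enumerate(range(0, len(data), chunk_size))
    ]


def reassemble(chunks):
    """Rebuild the original bytes by concatenating chunks in `n` order."""
    return b"".join(c["data"] for c in sorted(chunks, key=lambda c: c["n"]))


if __name__ == "__main__":
    one_mb = bytes(1024 * 1024)
    chunks = split_into_chunks("example-id", one_mb)
    print(len(chunks))  # number of chunk documents for a 1MB file
    assert reassemble(chunks) == one_mb
```

For the 1MB test file used below, this gives five chunk documents that must be fetched and joined, where Nginx does a single read from disk.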

I tried three different deployments, each using a different MongoDB driver, to read files from GridFS, and compared the results with a classic Nginx configuration that serves files straight from disk:

```nginx
location /files/ {
    alias /home/ubuntu/;
}
```

`open_file_cache` was kept off during the test.

nginx-gridfs is an Nginx module built on the MongoDB C driver: https://github.com/mdirolf/nginx-gridfs

I made a quick install script in this repo; run it with sudo. Once Nginx is installed, edit the configuration file at /usr/local/nginx/conf/nginx.conf (if you didn't change the install path):

```nginx
location /gridfs/ {
    gridfs test1 type=string field=filename;
}
```
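As I read the nginx-gridfs README, this directive means: use database `test1`, look files up by the `filename` field, and treat the rest of the URI after the location prefix as a plain string. The module's defaults are `field=_id` with `type=objectid`. Where the `/files/` location maps the URI tail to a path on disk, this one maps it to a database query. The hypothetical helper below only models that mapping. It is not the module's code, and it skips URL decoding and ObjectId parsing.

```python
def gridfs_query(uri, location="/gridfs/", field="filename"):
    """Model the lookup made by `gridfs test1 type=string field=filename;`.

    Simplified sketch: the URI tail after the location prefix becomes a
    string value for `field`. The query would run against test1.fs.files,
    assuming the default root collection `fs`.
    """
    if not uri.startswith(location):
        raise ValueError("URI does not match location " + location)
    return {field: uri[len(location):]}


if __name__ == "__main__":
    # GET /gridfs/1KB.bin -> find the file whose filename is "1KB.bin"
    print(gridfs_query("/gridfs/1KB.bin"))
```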

Start Nginx with `/usr/local/nginx/sbin/nginx`. If you change the configuration file again, apply it with `/usr/local/nginx/sbin/nginx -s reload`.

- Flask 0.10.1

- Gevent 1.0.0

- Gunicorn 0.18.0

- pymongo 2.6.3

```bash
cd flaskapp/
sudo chmod +x runflask.sh
bash runflask.sh
```

The runflask.sh script starts Gunicorn with gevent workers.

The Gunicorn configuration file is here.
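The repo's own configuration file is not reproduced here. Gunicorn configuration files are plain Python, so a hypothetical equivalent for gevent workers could look like the sketch below. `bind`, `workers`, `worker_class` and `worker_connections` are real Gunicorn settings. The address and counts are assumptions, not the values used in the test.

```python
# gunicorn.conf.py: hypothetical sketch, not the repo's actual file.
import multiprocessing

# Address Gunicorn listens on (assumed value).
bind = "0.0.0.0:8000"

# Rule of thumb from the Gunicorn docs: (2 x CPU cores) + 1 workers.
workers = multiprocessing.cpu_count() * 2 + 1

# gevent's cooperative workers let one process hold many slow downloads.
worker_class = "gevent"

# Maximum simultaneous clients per gevent worker (Gunicorn's default is 1000).
worker_connections = 1000
```

It would be started with `gunicorn -c gunicorn.conf.py app:app`, where `app:app` is a placeholder for the Flask module and application object.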

- Node.js 0.10.4

- Express 3.4.7

- mongodb(driver) 1.3.23

```bash
cd nodejsapp/
sudo chmod +x runnodejs.sh
bash runnodejs.sh
```

- file served by Nginx directly

- file served by Nginx_gridFS + GridFS

- file served by Flask + pymongo + gevent + GridFS

- file served by Node.js + GridFS

Run the script insert_file_gridfs.py on the MongoDB server to insert test files of different sizes into database test1 (pymongo is required):

- 1KB

- 100KB

- 1MB
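insert_file_gridfs.py itself is not reproduced here. The following is a hypothetical sketch of that step. The file names and helper names are my own assumptions. It writes the same random payloads to disk for the Nginx baseline, then stores them with pymongo's `gridfs.GridFS(db).put()`, setting `filename` so the `field=filename` lookup in nginx-gridfs can find them.

```python
import os

# Sizes from the list above; file names are hypothetical.
TEST_FILES = {"1KB.bin": 1024, "100KB.bin": 100 * 1024, "1MB.bin": 1024 * 1024}


def make_test_files(directory):
    """Write a random payload of each size to `directory` (for the Nginx baseline)."""
    paths = []
    for name, size in TEST_FILES.items():
        path = os.path.join(directory, name)
        with open(path, "wb") as f:
            f.write(os.urandom(size))
        paths.append(path)
    return paths


def insert_into_gridfs(directory, host="localhost", db_name="test1"):
    """Store the same files in GridFS. Requires pymongo, imported lazily here."""
    import gridfs
    import pymongo

    fs = gridfs.GridFS(pymongo.MongoClient(host)[db_name])
    for name in TEST_FILES:
        with open(os.path.join(directory, name), "rb") as f:
            fs.put(f.read(), filename=name)
```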

2 servers:

- MongoDB+Application/Nginx

- tester (Apache ab/JMeter)

Hardware:

- Amazon EC2 m1.medium

- Ubuntu 12.04 x64

Load: 100 concurrent requests, 500 requests in total.

```bash
ab -c 100 -n 500 ...
```

Time per request (download), in milliseconds as reported by Apache ab:

| File size | Nginx+Hard drive | Nginx+GridFS plugin | Python(pymongo+gevent) | Node.js |
|---|---|---|---|---|
| 1KB | 0.174 | 1.124 | 1.982 | 1.679 |
| 100KB | 1.014 | 1.572 | 3.103 | 3.708 |
| 1MB | 9.582 | 9.567 | 15.973 | 18.317 |
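To read the table in relative terms, the short script below divides each setup's time per request by the plain Nginx baseline. It uses only the numbers above, and units cancel out in the ratio. The `slowdown` helper is hypothetical.

```python
# Time per request copied from the table above.
RESULTS = {
    "1KB": {"Nginx+Hard drive": 0.174, "Nginx+GridFS plugin": 1.124,
            "Python(pymongo+gevent)": 1.982, "Node.js": 1.679},
    "100KB": {"Nginx+Hard drive": 1.014, "Nginx+GridFS plugin": 1.572,
              "Python(pymongo+gevent)": 3.103, "Node.js": 3.708},
    "1MB": {"Nginx+Hard drive": 9.582, "Nginx+GridFS plugin": 9.567,
            "Python(pymongo+gevent)": 15.973, "Node.js": 18.317},
}

BASELINE = "Nginx+Hard drive"


def slowdown(results, baseline=BASELINE):
    """Return each setup's time per request divided by the baseline's, rounded."""
    return {
        size: {name: round(t / row[baseline], 2) for name, t in row.items()}
        for size, row in results.items()
    }


if __name__ == "__main__":
    for size, row in slowdown(RESULTS).items():
        print(size, row)
```

On these numbers, the GridFS plugin is about 6.5 times slower than plain Nginx for 1KB files but on par (1.0) at 1MB. The Python and Node.js stacks remain about 1.7 and 1.9 times slower even at 1MB.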

The full Apache ab reports are in the testresult folder.

Server load was monitored with:

```bash
vmstat 2
```
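Here is a minimal, hypothetical sketch for turning saved `vmstat 2` output, for example redirected to a file during a test run, into per-sample CPU busy percentages (`us` + `sy`). Columns are located by their header names, so the extra `st` column on newer systems does not shift anything. The first sample is skipped by default because vmstat reports it as an average since boot.

```python
def cpu_busy_samples(vmstat_text, skip_first=True):
    """Parse `vmstat` output and return us+sy (CPU busy %) for each sample."""
    header = None
    samples = []
    for line in vmstat_text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "procs":
            continue  # blank line or the "procs --memory-- ..." banner
        if fields[0] == "r":
            header = fields  # column names: r b swpd free ... us sy id wa
            continue
        if header is None:
            continue  # data before any header cannot be interpreted
        row = dict(zip(header, fields))
        samples.append(int(row["us"]) + int(row["sy"]))
    return samples[1:] if skip_first else samples
```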

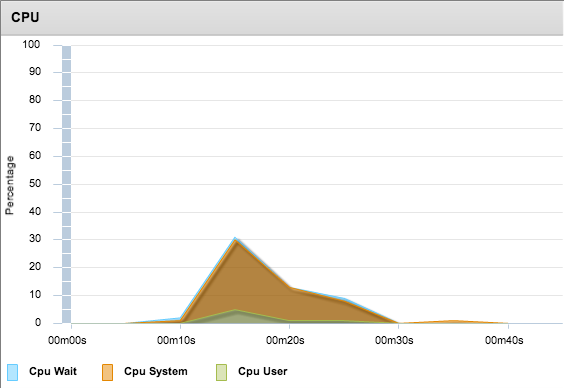

Nginx:

Nginx_gridfs

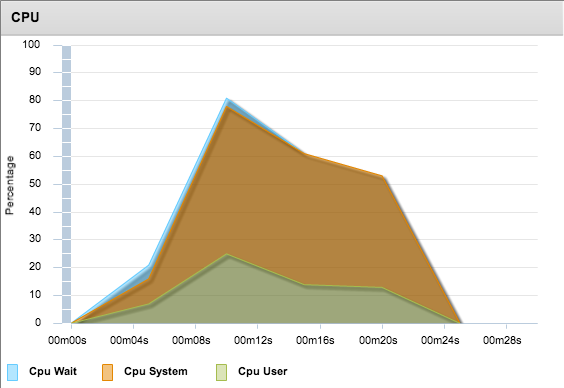

gevent+pymongo

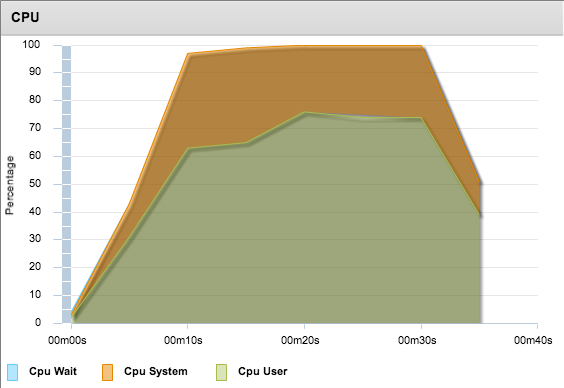

Node.js

- Files served by Nginx directly

  - No doubt it's the most efficient option, both in performance and in server load.

  - It supports caching. In real-world deployments, the `open_file_cache` directive (which caches open file descriptors and file metadata) should be tuned for better performance.

  - It is also the only option that supports pausing and resuming a download (HTTP Range support).
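Range support is what makes pause and resume possible. The client re-requests the file with a header such as `Range: bytes=400000-`, and the server answers `206 Partial Content` with only those bytes. Nginx does this natively. Below is a minimal, hypothetical sketch of the single-range check a GridFS-backed application would have to implement itself. Multi-range requests are deliberately not handled.

```python
import re

_RANGE_RE = re.compile(r"^bytes=(\d*)-(\d*)$")


def parse_single_range(header, file_size):
    """Resolve a single-range `Range` header to inclusive (start, end) offsets.

    Returns None when the header is malformed, asks for several ranges, or
    cannot be satisfied (the server would then send the full file or a 416).
    """
    m = _RANGE_RE.match(header.strip())
    if file_size <= 0 or not m or (m.group(1) == "" and m.group(2) == ""):
        return None
    first, last = m.group(1), m.group(2)
    if first == "":
        # Suffix form "bytes=-N": the final N bytes of the file.
        length = int(last)
        if length == 0:
            return None
        return (max(file_size - length, 0), file_size - 1)
    start = int(first)
    if start >= file_size:
        return None
    end = file_size - 1 if last == "" else min(int(last), file_size - 1)
    if end < start:
        return None
    return (start, end)
```

For a GridFS-backed server, the resolved offsets would also have to be mapped to chunk numbers (`offset // chunkSize`) before reading.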

For the remaining three test items, files are stored in MongoDB but served through different drivers.

- Serving static files from the application is really not an appropriate choice: it drains too much CPU and the performance is poor.

- nginx_gridfs (MongoDB C driver): download requests are handled at the Nginx level, which sits in front of the web application in most deployments, so the application can focus on dynamic content instead of static content.

- nginx_gridfs performed best of the three GridFS options, ahead of the applications written in scripting languages.

- The performance gap between plain Nginx and nginx_gridfs shrinks as file size grows (at 1MB the two are nearly identical), but the server load cannot be ignored.

- pymongo vs. the Node.js driver: it's a draw. Production applications should avoid serving static files themselves.

- Putting files in the database makes static content management much easier: there is no need to keep files on disk consistent with their metadata in the database.

- Scalability and HA come with MongoDB.

- bad performance

- cannot resume a download after a pause or a broken connection
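The first advantage listed above comes from GridFS keeping each file's metadata in an `fs.files` document next to its chunks, in the same database. Here is a minimal, hypothetical sketch of such a document. The field names follow the GridFS spec, while `files_document` and the `owner` metadata are made up for illustration. The 255 KiB chunk size is an assumption based on the current driver default.

```python
import datetime
import hashlib

CHUNK_SIZE = 255 * 1024  # current driver default; assumed for this sketch


def files_document(file_id, data, filename, **metadata):
    """Build an fs.files-style document describing `data`.

    Standard fields (length, chunkSize, uploadDate, filename) sit next to
    application metadata, so content and metadata live in one store.
    `md5` was part of the original GridFS spec; newer drivers deprecate it.
    """
    return {
        "_id": file_id,
        "filename": filename,
        "length": len(data),
        "chunkSize": CHUNK_SIZE,
        "uploadDate": datetime.datetime.now(datetime.timezone.utc),
        "md5": hashlib.md5(data).hexdigest(),
        "metadata": metadata,
    }
```

For example, `files_document("id1", b"hello", "hello.txt", owner="alice")` describes a 5-byte file whose owner is stored alongside its size and checksum.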

I can imagine only a few use cases for it, especially in a performance-sensitive system, but I may try it in some prototype projects.

For the official guidance, see the MongoDB FAQ on when to use GridFS: http://docs.mongodb.org/manual/faq/developers/#faq-developers-when-to-use-gridfs