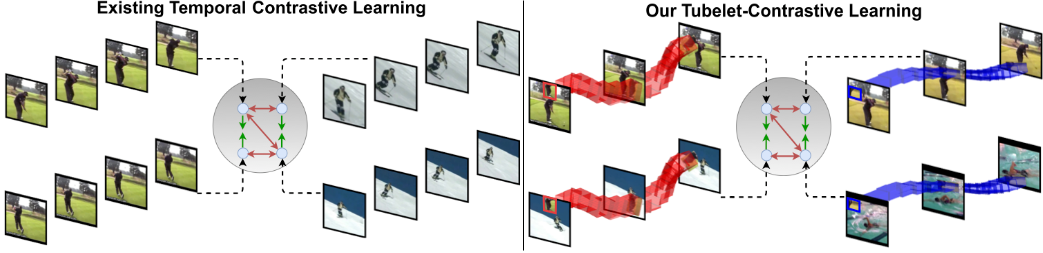

Tubelet-Contrastive Self-Supervision for Video-Efficient Generalization

Fida Mohammad Thoker, Hazel Doughty, Cees Snoek

University of Amsterdam, VIS Lab

[2023.10.08] Pre-trained models and scripts for Mini-Kinetics pre-training are now available!

[2022.10.08] Code and pre-trained models are available now!

Please follow the instructions in INSTALL.md.

Please follow the instructions in DATASET.md for data preparation.

Pre-training instructions are in PRETRAIN.md.

Fine-tuning instructions are in FINETUNE.md.

We provide pre-trained and fine-tuned models in MODEL_ZOO.md.

Fida Mohammad Thoker: [email protected]

This project is built upon Catch the Patch and mmcv. Thanks to the contributors of these great codebases.

If you find this project helpful, please consider leaving a star ⭐️ and citing our papers:

@inproceedings{thoker2023tubelet,
  author = {Thoker, Fida Mohammad and Doughty, Hazel and Snoek, Cees},
  title = {Tubelet-Contrastive Self-Supervision for Video-Efficient Generalization},
  booktitle = {ICCV},
  year = {2023},
}

@inproceedings{thoker2022severe,
  author = {Thoker, Fida Mohammad and Doughty, Hazel and Bagad, Piyush and Snoek, Cees},
  title = {How Severe is Benchmark-Sensitivity in Video Self-Supervised Learning?},
  booktitle = {ECCV},
  year = {2022},
}