Potato is an easy-to-use, web-based annotation tool accepted to the EMNLP 2022 demo track. Potato lets you quickly mock up and deploy a variety of text annotation tasks. It runs in the back end as a web server that you launch locally, and annotators use the web-based front end to work through the data. Our goal is to let people quickly and easily annotate text data by themselves or in small teams, going from zero to annotating in a few lines of configuration.

Potato is driven by a single configuration file that specifies the type of task and data you want to use. Potato does not require any coding to get up and running. For most tasks, no additional web design is needed, though Potato is easily customizable so you can tweak the interface and elements your annotators see.

Please check out our official documentation for detailed instructions.

Jiaxin Pei, Aparna Ananthasubramaniam, Xingyao Wang, Naitian Zhou, Jackson Sargent, Apostolos Dedeloudis and David Jurgens. 🥔Potato: the POrtable Text Annotation TOol. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP'22 demo).

Potato supports a wide range of features that can make your data annotation easier:

Potato can be set up simply by editing a configuration file. You don't need to write any code to set up your annotation webpage. Potato also comes with a series of features for diverse needs.

- Built-in schemas and templates: Potato supports a wide range of annotation schemas including radio, likert, checkbox, textbox, span, pairwise comparison, best-worst-scaling, image/video-as-label, etc. All these schemas can be

- Flexible data types: Potato supports displaying short documents, long documents, dialogue, comparisons, etc.

- Multi-task setup: NLP researchers may need to set up a series of similar but different tasks (e.g. multilingual annotation). Potato lets you generate configuration files for all of these tasks with minimal configuration, and it has supported SemEval 2023 Task 9: Multilingual Tweet Intimacy Analysis.
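The multi-task idea can be illustrated with a minimal sketch: one shared base configuration is copied and specialized per task. The keys below (`task_name`, `data_file`, `labels`) and the helper `make_task_configs` are hypothetical and chosen only for illustration; they are not Potato's actual configuration schema, so please check the documentation for the real options.

```python
import copy

# Hypothetical base configuration; these keys are illustrative only and
# are NOT Potato's actual configuration schema.
BASE_CONFIG = {
    "task_name": "tweet-intimacy",
    "data_file": "data/{language}.csv",
    "labels": ["1", "2", "3", "4", "5"],
}


def make_task_configs(base, languages):
    """Return one config dict per language, derived from a shared base."""
    configs = {}
    for language in languages:
        config = copy.deepcopy(base)  # deep copy so tasks never share the labels list
        config["task_name"] = f"{base['task_name']}-{language}"
        config["data_file"] = base["data_file"].format(language=language)
        configs[language] = config
    return configs


configs = make_task_configs(BASE_CONFIG, ["english", "spanish", "chinese"])
for language, config in configs.items():
    print(language, "->", config["data_file"])
```

Only the fields that differ between tasks are rewritten, so a change to the shared base carries over to every generated task.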

Potato is carefully designed with a series of features that can improve your annotators' experience and help you get your annotations faster. You can easily set up:

- Keyboard Shortcuts: Annotators can directly type in their answers with the keyboard.

- Dynamic Highlighting: For tasks that have a lot of labels or very long documents, you can set up dynamic highlighting, which highlights potential associations between labels and keywords in the document (as defined by you).

- Label Tooltips: When you have a lot of labels (e.g. 30 labels in 4 categories), it'd be extremely hard for annotators to remember all the detailed descriptions of each of them. Potato allows you to set up label tooltips and annotators can hover the mouse over labels to view the description.
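To make the idea behind dynamic highlighting concrete, here is a rough sketch of matching user-defined keywords to labels in a document. It is not Potato's implementation: the `LABEL_KEYWORDS` mapping and the `find_highlights` function are hypothetical names, and we assume simple case-insensitive whole-word matching.

```python
import re

# Hypothetical label -> keyword mapping, defined by the task designer.
LABEL_KEYWORDS = {
    "economy": ["jobs", "wages", "taxes"],
    "security": ["border", "crime"],
}


def find_highlights(text, label_keywords):
    """Return sorted (start, end, label) spans for each keyword match in text."""
    spans = []
    for label, keywords in label_keywords.items():
        for keyword in keywords:
            # Whole-word, case-insensitive match; escape the keyword so it
            # is treated literally rather than as a regex.
            pattern = r"\b" + re.escape(keyword) + r"\b"
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                spans.append((match.start(), match.end(), label))
    return sorted(spans)


text = "New taxes could affect jobs near the border."
for start, end, label in find_highlights(text, LABEL_KEYWORDS):
    print(f"{text[start:end]!r} -> {label}")
```

A front end could then wrap each returned span in a colored element that matches its label.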

Potato offers features that help you better understand the backgrounds of your annotators and identify potential biases in your data.

- Pre- and post-screening questions: Potato lets you easily set up prescreening and postscreening questions, which can help you better understand the backgrounds of your annotators. Potato comes with a series of question templates that let you easily set up common prescreening questions, such as demographics.

Potato comes with features that allow you to collect more reliable annotations and identify potential spammers.

- Attention Test: Potato allows you to easily set up attention test questions and will randomly insert them into the annotation queue, allowing you to better identify potential spammers.

- Qualification Test: Potato allows you to easily set up a qualification test before the full data labeling, so you can identify disqualified annotators early.

- Built-in time check: Potato automatically keeps track of the time annotators spend on each instance and allows you to better analyze annotator behaviors.
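As a rough illustration of two of the quality-control ideas above, the sketch below inserts attention checks at random positions in an annotation queue and flags annotators whose median time per instance is suspiciously low. It is not Potato's code: the function names, the data layout, and the 3-second threshold are all assumptions made for the example.

```python
import random
from statistics import median


def insert_attention_checks(items, checks, seed=0):
    """Return a new queue with each check placed at a random position."""
    rng = random.Random(seed)  # seeded so the queue is reproducible
    queue = list(items)  # copy so the caller's list is not modified
    for check in checks:
        queue.insert(rng.randint(0, len(queue)), check)
    return queue


def flag_fast_annotators(times_by_annotator, min_median_seconds):
    """Return annotators whose median time per instance is below a threshold."""
    return sorted(
        annotator
        for annotator, times in times_by_annotator.items()
        if median(times) < min_median_seconds
    )


queue = insert_attention_checks(["doc1", "doc2", "doc3"], ["check1"])
print(queue)

# Hypothetical per-instance annotation times in seconds.
times = {"ann_a": [12.0, 15.5, 9.8], "ann_b": [1.2, 0.9, 1.5]}
print(flag_fast_annotators(times, min_median_seconds=3.0))
```

The median is used instead of the mean so that a single long pause does not hide an annotator who otherwise clicks through very quickly.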

Clone the GitHub repo to your computer:

git clone https://github.com/davidjurgens/potato.git

Install all the required dependencies

pip3 install -r requirements.txt

To run a simple check-box style annotation on text data, run

python3 potato/flask_server.py config/examples/simple-check-box.yaml -p 8000

This will launch the webserver on port 8000 which can be accessed at http://localhost:8000.

Clicking "Submit" will auto-advance to the next instance, and you can navigate between items using the arrow keys.

The config/examples folder contains example .yaml configuration files that match many common simple use-cases. See the full documentation for all configuration options.
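If you want to script the launch step, for example to start different example configs on different ports, a small helper like the one below may be handy. This is only a sketch: `build_launch_command` is a hypothetical helper that reproduces the command line shown above, and it assumes you run it from the root of the cloned repo.

```python
import shlex


def build_launch_command(config_path, port=8000):
    """Build the argument list for the server launch command shown above."""
    return ["python3", "potato/flask_server.py", config_path, "-p", str(port)]


cmd = build_launch_command("config/examples/simple-check-box.yaml", port=8000)
print(shlex.join(cmd))

# To actually start the server, pass the list to subprocess, e.g.:
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems if a config path contains spaces.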

Potato aims to improve the replicability of data annotation and reduce the cost for researchers to set up new annotation tasks. Therefore, Potato comes with a list of predefined example projects and welcomes public contributions to the project hub. If you have used Potato for your own annotation, you are encouraged to create a pull request and release your annotation setup.

[launch] python3 potato/flask_server.py example-projects/dialogue_analysis/configs/dialogue-analysis.yaml -p 8000

[Annotate] http://localhost:8000

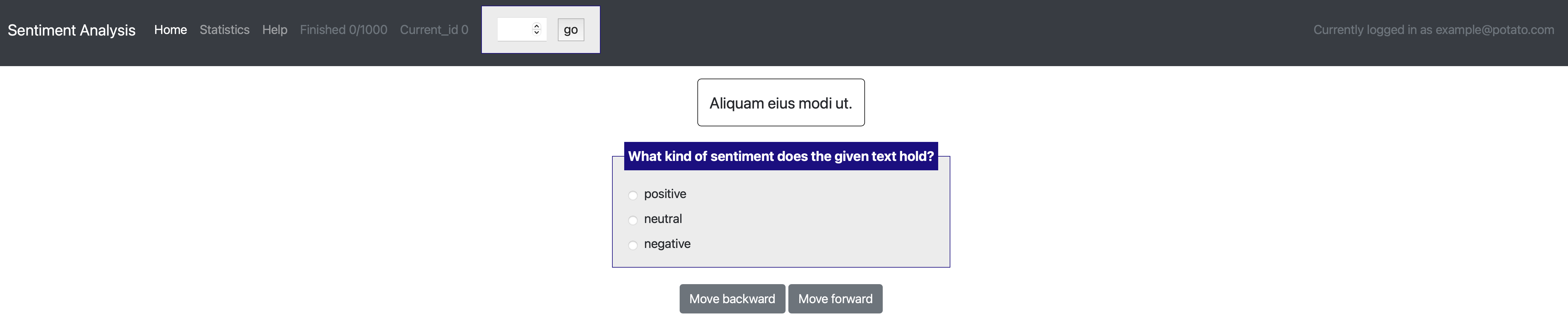

[launch] python3 potato/flask_server.py example-projects/sentiment_analysis/configs/sentiment-analysis.yaml -p 8000

[Annotate] http://localhost:8000

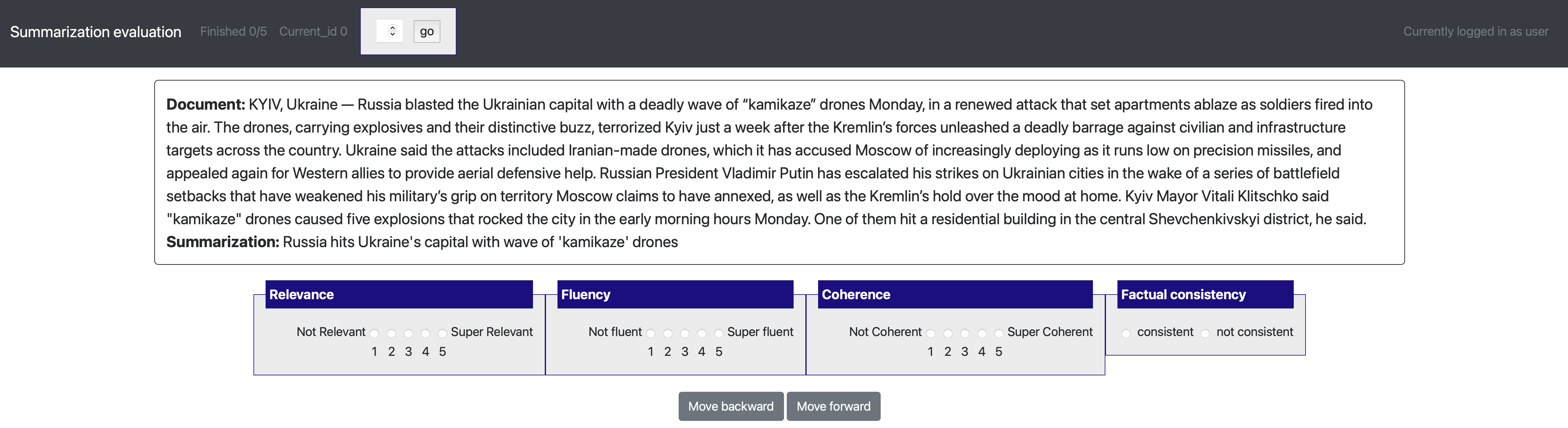

[launch] python3 potato/flask_server.py example-projects/summarization_evaluation/configs/summ-eval.yaml -p 8000

[Annotate] http://localhost:8000/?PROLIFIC_PID=user

yaml config | Paper | Dataset

[Setup configuration files for multiple similar tasks] python3 potato/setup_multitask_config.py example-projects/match_finding/multitask_config.yaml

[launch] python3 potato/flask_server.py example-projects/match_finding/configs/Computer_Science.yaml -p 8000

[Annotate] http://localhost:8000/?PROLIFIC_PID=user

yaml config | Paper | Dataset
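Some of the annotation URLs above carry a `PROLIFIC_PID` query parameter. If you need to generate such links for several annotators, a sketch like this one can help. We assume here that the parameter value identifies the annotator (as the name suggests); `annotation_url` is a hypothetical helper, not part of Potato.

```python
from urllib.parse import urlencode


def annotation_url(annotator_id, host="localhost", port=8000):
    """Build an annotation link with the annotator ID as a query parameter."""
    # urlencode escapes characters that are not safe in a query string.
    query = urlencode({"PROLIFIC_PID": annotator_id})
    return f"http://{host}:{port}/?{query}"


print(annotation_url("user"))  # http://localhost:8000/?PROLIFIC_PID=user
```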

[launch] python3 potato/flask_server.py example-projects/immigration_framing/configs/config.yaml -p 8000

[Annotate] http://localhost:8000/

yaml config | Paper | Dataset

[launch] python3 potato/flask_server.py example-projects/gif_reply/configs/gif-reply.yaml -p 8000

[Annotate] http://localhost:8000/

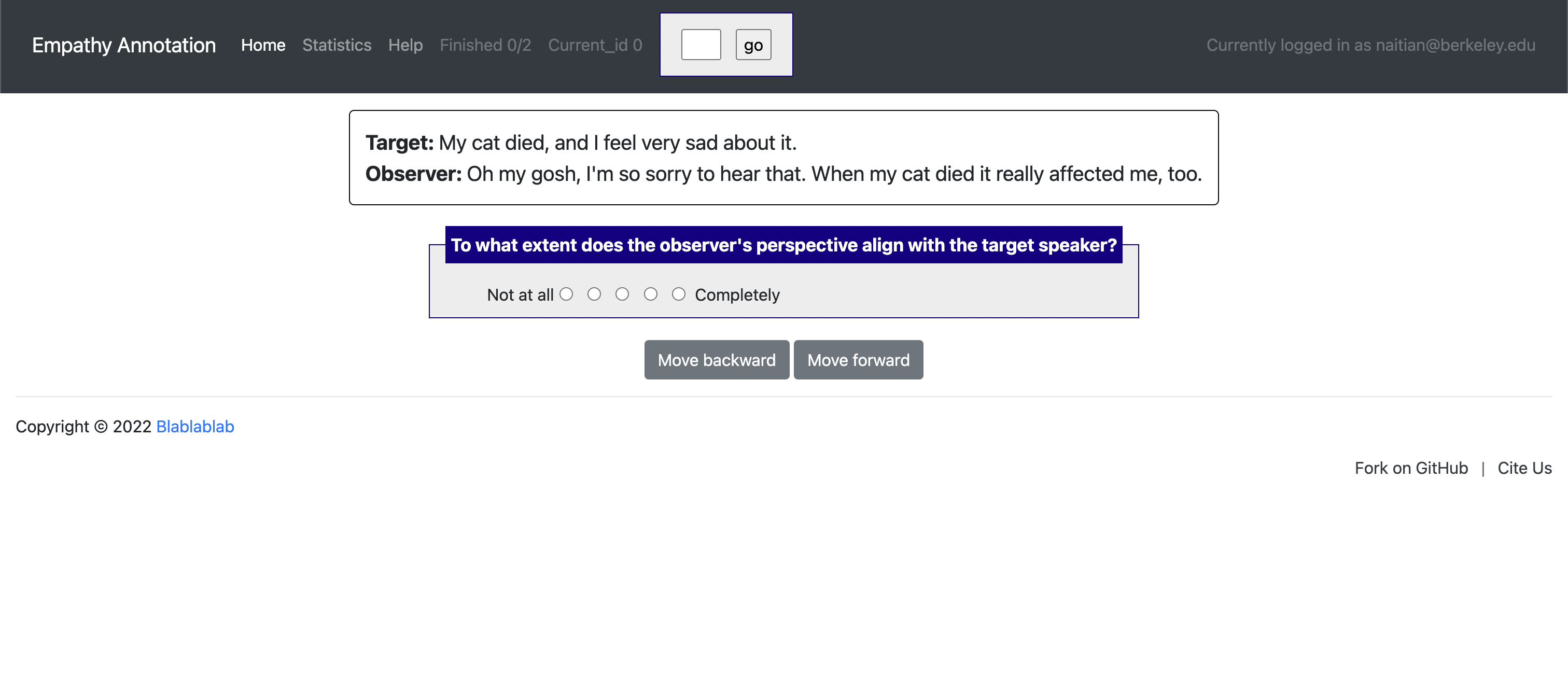

[launch] python3 potato/flask_server.py example-projects/empathy/configs/empathy.yaml -p 8000

[Annotate] http://localhost:8000/

Potato is run by a small and energetic team of academics doing the best they can. For support, please open an issue on this git repo. Feature requests and issues are both welcome! If you have any questions or want to collaborate on this project, please email [email protected]

Please use the following bibtex when referencing this work:

@inproceedings{pei2022potato,
  title={POTATO: The Portable Text Annotation Tool},
  author={Pei, Jiaxin and Ananthasubramaniam, Aparna and Wang, Xingyao and Zhou, Naitian and Dedeloudis, Apostolos and Sargent, Jackson and Jurgens, David},
  booktitle={Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing: System Demonstrations},
  year={2022}
}