This section explains how to set up the environment for Exploratory Data Analysis (EDA) in this project.


Create a virtual environment and activate it:

python -m venv .venv

source .venv/bin/activate

Install the packages required for EDA:

pip install ".[dev]"

Launch the Jupyter notebook by running:

jupyter lab EDA/challenge.ipynb

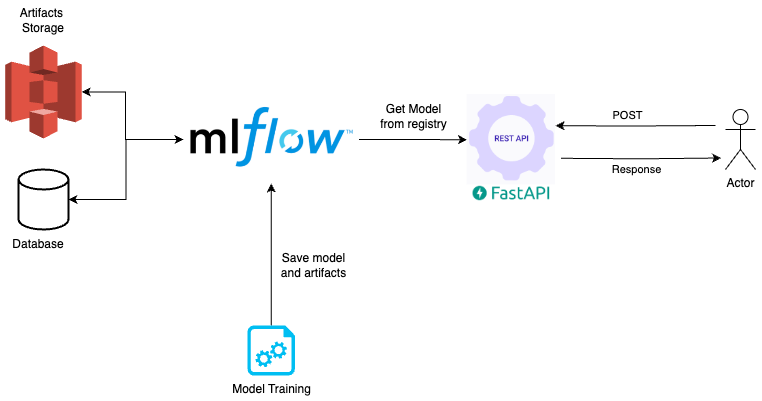

This project uses MLflow for model development, experiment tracking, and the model registry. Running MLflow inside a Docker Compose setup simulates a production environment. The training and serving stages are part of the same setup, which gives a unified development-to-deployment workflow.

Configure MLflow with an object storage service and a database by executing:

make mlflow

Optionally, you can run the command in detached mode by adding detached=true:

make mlflow detached=true

Initiate the training process by executing:

make train

After the training step finishes, promote the model to production so that it can be served. Open this link, go to Stage, and choose Production.
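The promotion can also be scripted rather than done through the UI. The following is a minimal sketch, assuming the `mlflow` package is installed and the tracking server is reachable at `http://localhost:5000`; the model name `my-model` and version `1` are hypothetical placeholders, not values taken from this project. It uses `MlflowClient.transition_model_version_stage`, which belongs to MLflow's stage-based registry API; newer MLflow releases flag model stages as deprecated, so check the version you have installed.

```python
def promote_to_production(client, name, version):
    """Move one registered model version to the Production stage.

    `client` is expected to be an mlflow.tracking.MlflowClient, or any
    object exposing the same transition_model_version_stage method.
    """
    return client.transition_model_version_stage(
        name=name,
        version=str(version),
        stage="Production",
        archive_existing_versions=True,  # archive the version currently in Production
    )


def main():
    # Imported lazily so the helper above can be reused without mlflow installed.
    from mlflow.tracking import MlflowClient

    # Hypothetical values: replace with the registered model name and version.
    client = MlflowClient(tracking_uri="http://localhost:5000")
    promote_to_production(client, name="my-model", version=1)
```

Calling `main()` performs the promotion against the local tracking server. Passing the client in as an argument keeps the helper easy to exercise with a stand-in object.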

After promoting the model to production, serve it by executing:

make api

To stop and remove all the Docker containers associated with this setup, run:

make down

Note: to test the training outside Docker, set the following environment variables:

export MLFLOW_TRACKING_URI=http://localhost:5000

export MLFLOW_S3_ENDPOINT_URL=http://localhost:9000

export AWS_ACCESS_KEY_ID=minio

export AWS_SECRET_ACCESS_KEY=minio123

The diagram above illustrates the general system architecture. MLflow serves as both our experimentation platform and model registry. The model is served using the FastAPI framework, which retrieves the model set in the MLflow model registry at the production stage.
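As a sketch of the retrieval step, the snippet below builds the registry URI for the Production stage and loads it with `mlflow.pyfunc.load_model`. It assumes the `mlflow` package is installed when the loader is called. The model name passed in is a hypothetical placeholder, and the project's own FastAPI code may do this differently. The environment defaults are copied from the exports listed earlier and are applied only when a variable is not already set.

```python
import os

# Values copied from the exports above; used only when a variable is unset.
LOCAL_MLFLOW_ENV = {
    "MLFLOW_TRACKING_URI": "http://localhost:5000",
    "MLFLOW_S3_ENDPOINT_URL": "http://localhost:9000",
    "AWS_ACCESS_KEY_ID": "minio",
    "AWS_SECRET_ACCESS_KEY": "minio123",
}


def apply_local_env(env=os.environ):
    """Fill in missing MLflow and object-storage settings without overriding existing ones."""
    for key, value in LOCAL_MLFLOW_ENV.items():
        env.setdefault(key, value)
    return env


def production_model_uri(model_name):
    """Return the registry URI that points at the Production stage."""
    return f"models:/{model_name}/Production"


def load_production_model(model_name):
    """Load the Production model as a generic pyfunc model."""
    import mlflow.pyfunc  # imported lazily so the helpers above work without mlflow

    apply_local_env()
    return mlflow.pyfunc.load_model(production_model_uri(model_name))
```

A serving process could call `load_production_model("my-model")` once at startup, with `my-model` replaced by the registered name, and reuse the returned object for every prediction request.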

In this project, we use Tilt (through a Tiltfile) and kind to simulate a local Kubernetes deployment. This gives a development environment that closely mirrors production settings.

If you want to know more about kind and Tilt, you can check here.

Before proceeding, install Tilt and Docker.


To set up the K8s cluster, run:

make k8s-setup


To bring up the model API, run:

tilt up && tilt down

Once the API is up and running, it can be accessed at localhost:8000. Additionally, MLflow can be accessed at localhost:5000/docs.
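To confirm that both services answer after the deployment comes up, a small reachability check can help. The sketch below uses only the Python standard library and the two addresses mentioned in this section. The helper names are hypothetical, and it only checks that something responds over HTTP, not that the model itself is loaded.

```python
import urllib.error
import urllib.request


def is_reachable(url, timeout=2.0, opener=urllib.request.urlopen):
    """Return True if anything answers HTTP at `url`, False otherwise."""
    try:
        with opener(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


def check_services():
    """Print the reachability of the API and MLflow addresses."""
    services = {
        "API": "http://localhost:8000",
        "MLflow": "http://localhost:5000/docs",
    }
    for name, url in services.items():
        status = "up" if is_reachable(url) else "down"
        print(f"{name} ({url}): {status}")
```

Running `check_services()` while `tilt up` is active prints one line per service. The `opener` parameter exists so the check can be exercised without a live server.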

To tear down the cluster, run:

make k8s-kill