It is a computer vision program that uses a convolutional neural network to find and enhance patterns in images, creating a dream-like, hallucinogenic appearance in the deliberately over-processed images.

It modifies the input image with gradient ascent so that the image increasingly excites selected layers of the network. This makes the patterns those filters respond to appear in the image and gives it a psychedelic look.
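A minimal PyTorch sketch of a single gradient-ascent step on the pixels, assuming `model` is any network (or truncated network) whose output is the layer we want to amplify. The function name `dream_step`, the mean-squared-activation objective and the gradient normalization are illustrative choices, not necessarily what this notebook does.

```python
import torch
import torch.nn as nn


def dream_step(model: nn.Module, image: torch.Tensor, step_size: float = 0.01) -> torch.Tensor:
    """One gradient-ascent step that amplifies the activations of `model` on `image`."""
    # Detached leaf copy so the gradient is taken with respect to the pixels.
    image = image.clone().detach().requires_grad_(True)
    activations = model(image)
    # Objective to *maximize*: mean squared activation of the chosen layer.
    objective = activations.pow(2).mean()
    objective.backward()
    grad = image.grad
    # Normalize so the step size is roughly independent of the gradient's scale.
    grad = grad / (grad.abs().mean() + 1e-8)
    with torch.no_grad():
        # Ascent: move along the gradient (descent would subtract it).
        image = image + step_size * grad
    return image.detach()
```

Repeating this step (often at several image scales) progressively strengthens whatever patterns the chosen layer detects.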

This notebook recreates, in PyTorch, the style transfer method outlined in the paper *Image Style Transfer Using Convolutional Neural Networks* by Gatys et al.

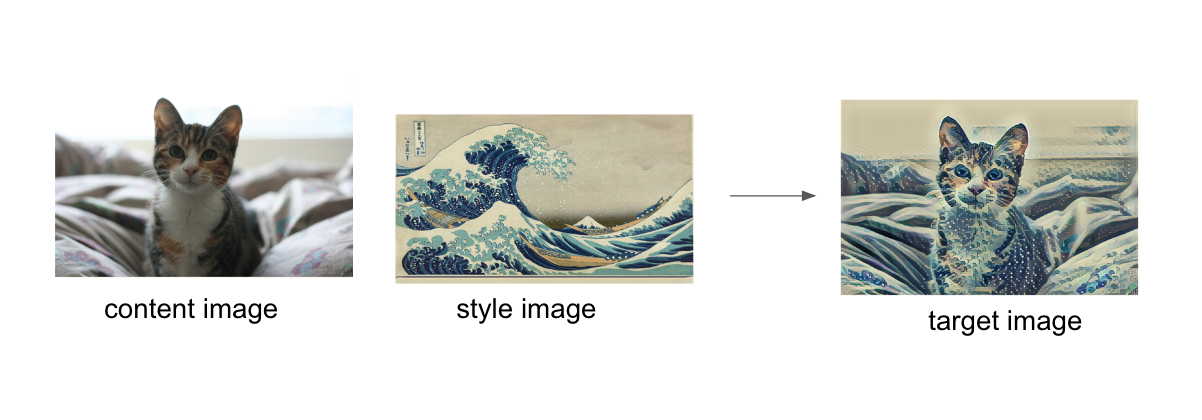

Style transfer relies on separating the content and style of an image. Given one content image and one style image, we aim to create a new target image that combines the desired content and style components:

- objects and their arrangement are similar to those of the content image

- style, colors, and textures are similar to those of the style image
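The two criteria above are usually turned into two losses on CNN feature maps: content is compared directly, while style is compared through Gram matrices (channel-by-channel correlations), which discard spatial layout. This is a minimal sketch assuming feature maps of shape `(1, C, H, W)`; the function names and the `1 / (C*H*W)` style normalization are illustrative simplifications, not the exact constants from the paper.

```python
import torch


def gram_matrix(features: torch.Tensor) -> torch.Tensor:
    """Channel correlations of a (1, C, H, W) feature map -> (C, C)."""
    _, c, h, w = features.shape
    flat = features.reshape(c, h * w)   # one row per channel (filter)
    return flat @ flat.t()


def content_loss(target_feat: torch.Tensor, content_feat: torch.Tensor) -> torch.Tensor:
    # Squared error between feature maps: keeps objects and their arrangement.
    return torch.mean((target_feat - content_feat) ** 2)


def style_loss(target_feat: torch.Tensor, style_feat: torch.Tensor) -> torch.Tensor:
    # Compare Gram matrices: matches colors and textures, ignores where they occur.
    _, c, h, w = target_feat.shape
    diff = gram_matrix(target_feat) - gram_matrix(style_feat)
    return torch.mean(diff ** 2) / (c * h * w)
```

Because a Gram matrix sums over all positions, spatially rearranging a feature map (for example flipping it) leaves the style loss at zero while the content loss becomes positive.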

In the example below, the content image is a cat and the style image is Hokusai's Great Wave. The generated target image still contains the cat but is stylized with the waves, the blue and beige colors, and the block-print textures of the style image.

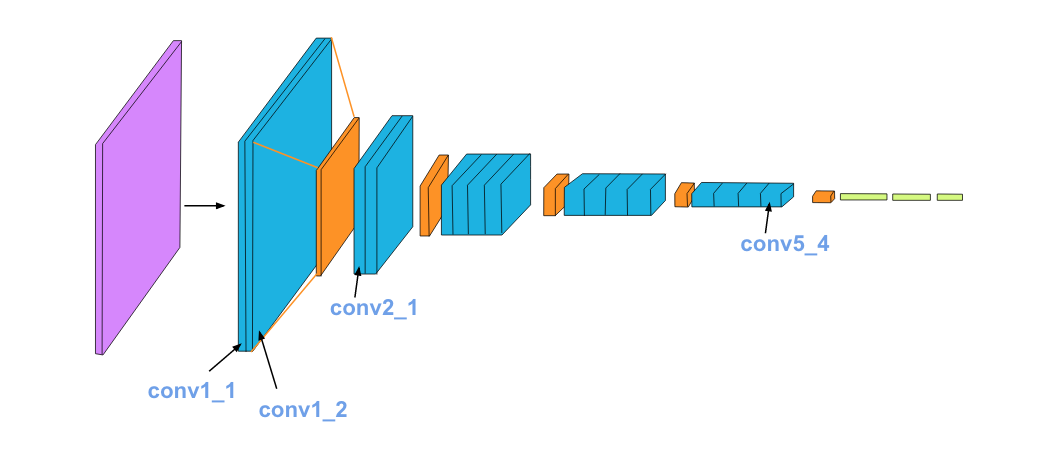

We use VGG-19 as a fixed feature extractor and use backpropagation to minimize a loss function defined between the target image and the content and style images. The network itself is not trained to produce a specific output; only the pixels of the target image are updated.
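A minimal sketch of that optimization, assuming `model` is any frozen feature extractor that returns a single feature map. For brevity it uses that one map for both losses, whereas the method described here uses several VGG-19 layers. The name `stylize`, the Adam optimizer and the default weights are illustrative assumptions.

```python
import torch
import torch.nn as nn


def _gram(feat: torch.Tensor) -> torch.Tensor:
    _, c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    return flat @ flat.t()


def stylize(model: nn.Module, content: torch.Tensor, style: torch.Tensor,
            steps: int = 50, lr: float = 0.05,
            content_weight: float = 1.0, style_weight: float = 1e3) -> torch.Tensor:
    # Freeze the network: it only extracts features and is never trained here.
    for p in model.parameters():
        p.requires_grad_(False)
    with torch.no_grad():
        content_feat = model(content)
        style_gram = _gram(model(style))
    # The target starts as a copy of the content image; its pixels are the only parameters.
    target = content.clone().detach().requires_grad_(True)
    optimizer = torch.optim.Adam([target], lr=lr)
    for _ in range(steps):
        optimizer.zero_grad()
        feat = model(target)
        c_loss = torch.mean((feat - content_feat) ** 2)
        s_loss = torch.mean((_gram(feat) - style_gram) ** 2)
        loss = content_weight * c_loss + style_weight * s_loss
        loss.backward()   # gradients reach only the target image
        optimizer.step()
    return target.detach()
```

The ratio `style_weight / content_weight` controls how strongly the result leans toward the style image rather than the content image.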

The image above shows the layers of the VGG-19 model.
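To read features from specific VGG-19 layers, one can walk the convolutional part of the network and keep the outputs of selected modules. This sketch assumes the module indices of torchvision's `vgg19().features` (`conv4_2` for content, `convX_1` for style, as in Gatys et al.); the names `VGG19_LAYERS` and `get_features` are hypothetical.

```python
import torch
import torch.nn as nn

# Assumed mapping from indices in torchvision's vgg19().features to layer names.
VGG19_LAYERS = {
    "0": "conv1_1",
    "5": "conv2_1",
    "10": "conv3_1",
    "19": "conv4_1",
    "21": "conv4_2",  # content layer
    "28": "conv5_1",
}


def get_features(image: torch.Tensor, model: nn.Sequential, layers=None) -> dict:
    """Run `image` through `model`, collecting outputs of the layers named in `layers`."""
    layers = VGG19_LAYERS if layers is None else layers
    features = {}
    x = image
    for name, layer in model.named_children():  # Sequential children are named "0", "1", ...
        x = layer(x)
        if name in layers:
            features[layers[name]] = x
        if len(features) == len(layers):
            break  # deeper layers are not needed
    return features
```

With torchvision 0.13 or later, the pretrained extractor could be obtained as `vgg19(weights=VGG19_Weights.DEFAULT).features.eval()`, with its parameters frozen before optimizing the target image.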