oterm, the text-based terminal client for Ollama.

- intuitive and simple terminal UI: no need to run servers or frontends, just type `oterm` in your terminal.

- multiple persistent chat sessions, stored together with system prompt & parameter customizations in SQLite.

- support for Model Context Protocol (MCP) tools & prompts integration.

- can use any of the models you have pulled in Ollama, or your own custom models.

- allows for easy customization of the model's system prompt and parameters.

- supports tools integration for providing external information to the model.
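Tools integration of this kind generally follows the tool-calling pattern of Ollama's chat API. The model receives JSON-schema descriptions of functions, replies with `tool_calls`, and the client runs those functions and sends the results back as `tool` messages. The sketch below shows only the client-side dispatch step. The `get_current_weather` tool, `REGISTRY` and `dispatch_tool_calls` are hypothetical names chosen for illustration. This is not oterm's actual implementation, and the sketch makes no network calls.

```python
import json

# Hypothetical tool: returns a fixed string so the sketch runs offline.
def get_current_weather(city: str) -> str:
    """Illustrative stand-in for a real external data source."""
    return f"It is sunny in {city}."

# Tool description in the JSON-schema style accepted by the `tools` field
# of Ollama's chat API (would be sent to the model alongside the messages).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may call to local Python callables.
REGISTRY = {"get_current_weather": get_current_weather}

def dispatch_tool_calls(message: dict) -> list:
    """Run every tool call in an assistant message and build 'tool' replies."""
    replies = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        args = fn["arguments"]
        # Ollama passes arguments as a dict; also tolerate a JSON string.
        if isinstance(args, str):
            args = json.loads(args)
        result = REGISTRY[fn["name"]](**args)
        replies.append({"role": "tool", "content": result})
    return replies
```

In a real client, the returned `tool` messages would be appended to the conversation and sent back to the model so that it can use the external information in its next answer.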

Try it with `uvx oterm`. See Installation for more details.

- Support sixel graphics for displaying images in the terminal.

- Support for Model Context Protocol (MCP) tools & prompts!

- Create custom commands that can be run from the terminal using oterm. Each of these commands is a chat, customized to your liking and connected to the tools of your choice.

The splash screen animation that greets users when they start oterm.

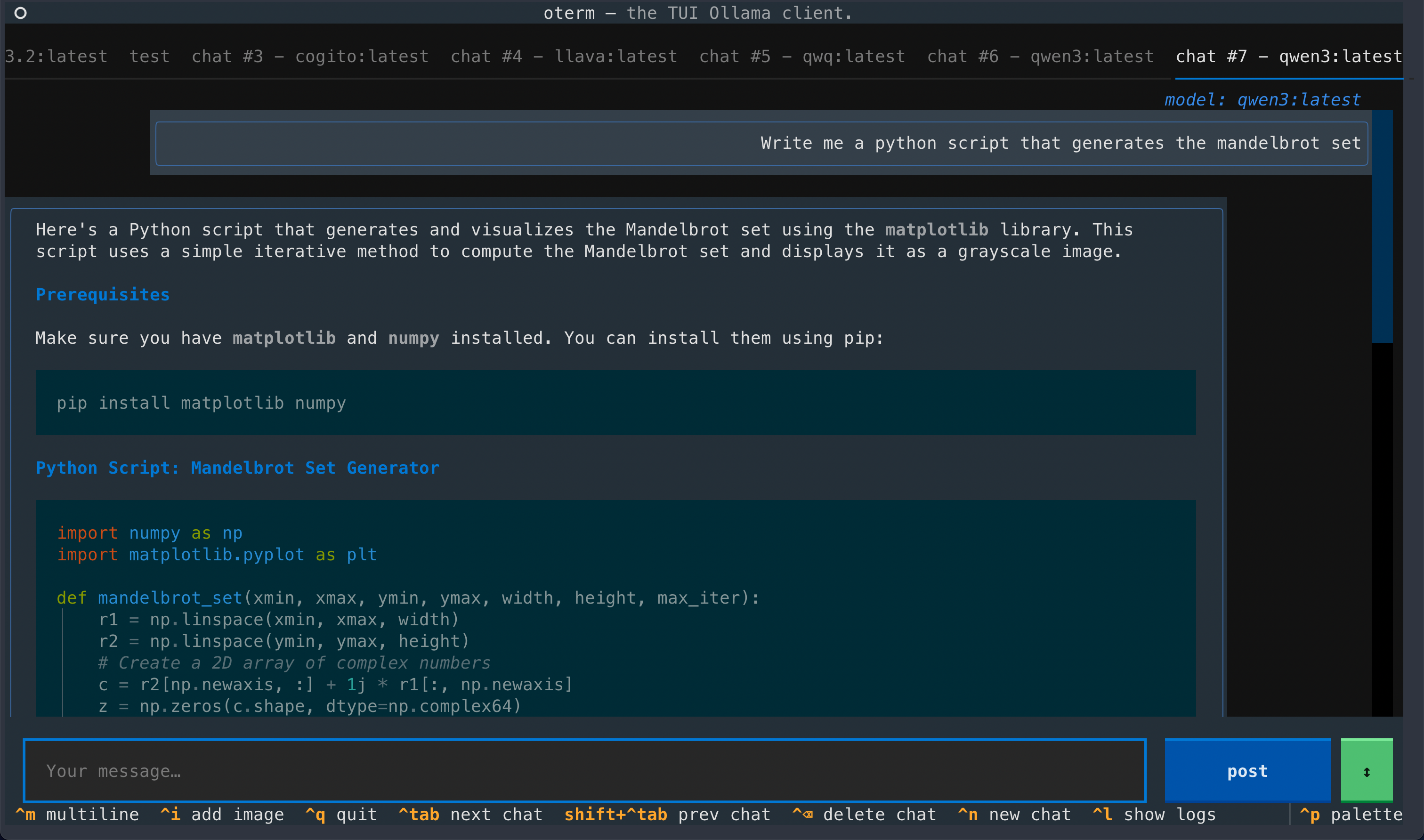

A view of the chat interface, showcasing the conversation between the user and the model.

oterm using the git MCP server to access its own repo.

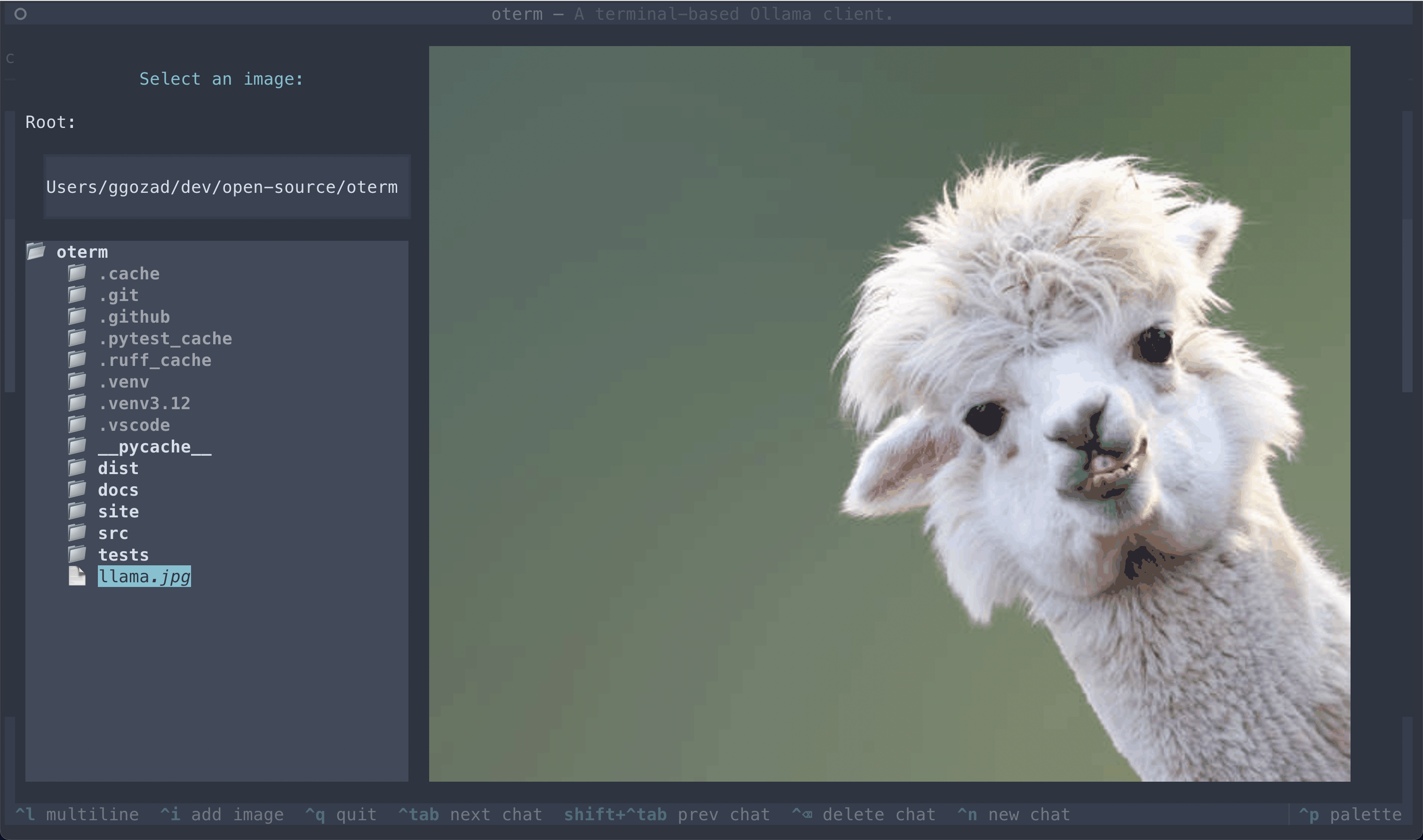

The image selection interface, demonstrating how users can include images in their conversations.

This project is licensed under the MIT License.