Consider an insurance company that provides affordable health insurance to customers all across the USA. As the lead data scientist at the company, your responsibility is to create an automated system that estimates the annual medical expenditure of new customers. The system uses information such as age, sex, BMI, number of children, smoking habits, and region of residence to estimate the medical expenditure, and this estimate is used to determine the annual insurance premium offered to the customer. Because of regulatory requirements, it is also important to be able to explain why the system outputs a given prediction.

The objectives are twofold:

- Predict the annual medical expenses of each customer (prediction)

- Explain the reasoning behind the prediction (interpretability of the model)

You can reproduce my local environment with conda, using either the spec-file.txt or the environment.yaml file:

`conda create --name myenv --file spec-file.txt` or `conda env create -f environment.yaml`

The Jupyter notebook for this work is here: part4

The data visualization part can be found here: part1

The data can be downloaded as a CSV file here. It is a labeled dataset of


This first part includes:

- Handling missing data or nulls: there were no missing values.

- Checking for duplicates and removing them: there was a single duplicate row, which was removed.

- Checking for outliers using the z-score: no extreme values were found, so no rows were removed.

- Checking the skewness and distribution of the data: the skewness was only mild, so no transformations (log, Box-Cox, etc.) were applied to either the features or the target.
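The four checks above can be sketched with pandas and SciPy as follows. This is a minimal illustration, not the notebook's code: the function name `clean_and_inspect`, the z-score threshold of 3, and the demo values are all hypothetical.

```python
import numpy as np
import pandas as pd
from scipy import stats


def clean_and_inspect(df: pd.DataFrame, numeric_cols, z_thresh: float = 3.0):
    """Hypothetical helper: nulls, duplicates, z-score outliers, skewness."""
    # 1. Total number of missing values across all columns.
    n_missing = int(df.isnull().sum().sum())

    # 2. Count and drop exact duplicate rows, keeping the first occurrence.
    n_dupes = int(df.duplicated().sum())
    df = df.drop_duplicates().reset_index(drop=True)

    # 3. Rows where any numeric column lies more than z_thresh std devs from its mean.
    z = np.abs(stats.zscore(df[numeric_cols]))
    n_outliers = int((z > z_thresh).any(axis=1).sum())

    # 4. Sample skewness per numeric column (close to 0 means roughly symmetric).
    skew = df[numeric_cols].skew()
    return df, n_missing, n_dupes, n_outliers, skew


# Hypothetical demo frame (not the real data); rows 1 and 2 are duplicates.
demo = pd.DataFrame({
    "age": [19, 33, 33, 45, 60],
    "bmi": [27.9, 22.7, 22.7, 30.1, 25.8],
    "charges": [16884.9, 4449.5, 4449.5, 8240.6, 28923.1],
})
cleaned, n_missing, n_dupes, n_outliers, skew = clean_and_inspect(
    demo, ["age", "bmi", "charges"]
)
print(n_missing, n_dupes, n_outliers)
print(skew)
```

A threshold of 3 is a common convention for "extreme" z-scores; the write-up does not state which cutoff was used.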

The data has both categorical and numerical columns and hence requires different treatment in the data preprocessing stage.

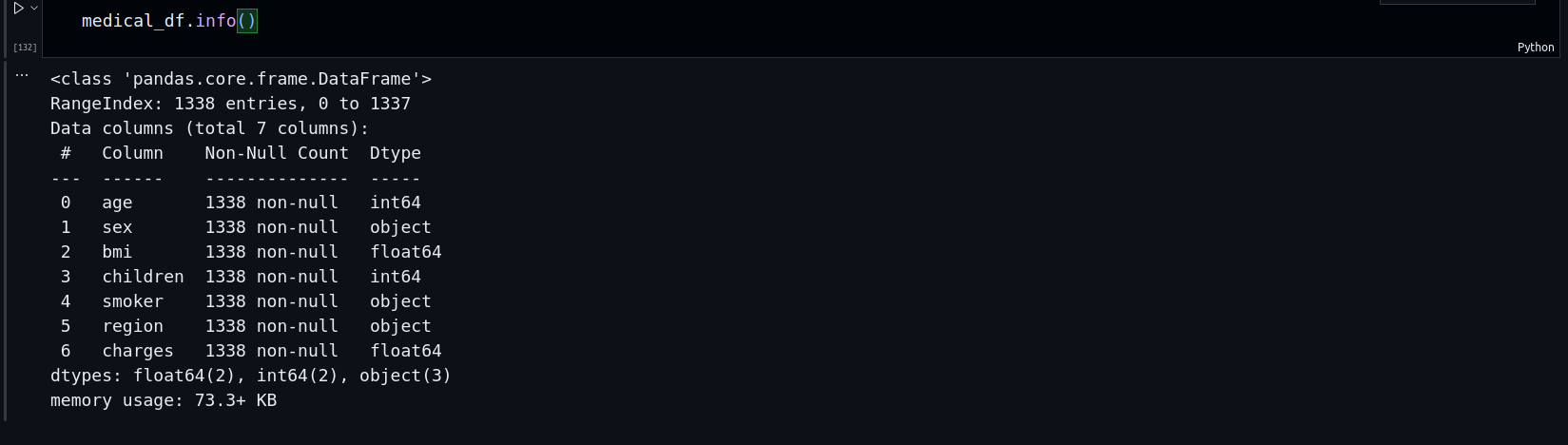

Here, I examine the correlation between each predictor and the target variable to see how informative the predictors will be for my model. All of these features are only weakly correlated with the target, which signals poor predictive power and hence the need to engineer new features.

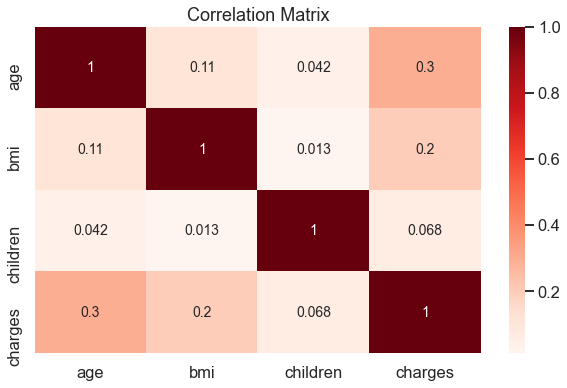

Correlation matrix for the numeric variables.

The newly extracted features are highly correlated with the target and hence informative.

Correlation matrix for the newly engineered features.

The numeric features are also scaled, which speeds up and stabilizes the training of the gradient-based ML and deep-learning models.
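The write-up does not say which scaler was used. The sketch below assumes scikit-learn's `StandardScaler` and hypothetical column names (`age`, `bmi`, `children`) on random stand-in data; the point it illustrates is fitting the scaler on the training rows only.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical numeric columns; the notebook's actual names may differ.
NUMERIC_COLS = ["age", "bmi", "children"]

# Random stand-in data, not the real dataset.
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "age": rng.integers(18, 65, size=100),
    "bmi": rng.normal(30, 6, size=100),
    "children": rng.integers(0, 5, size=100),
})
X_train, X_test = train_test_split(df, test_size=0.2, random_state=42)

scaler = StandardScaler()
# Learn mean and std from the training rows only, then reuse them on the test rows,
# so no test-set statistics leak into training.
X_train_scaled = scaler.fit_transform(X_train[NUMERIC_COLS])
X_test_scaled = scaler.transform(X_test[NUMERIC_COLS])
print(X_train_scaled.mean(axis=0).round(6), X_train_scaled.std(axis=0).round(6))
```

After scaling, each training column has zero mean and unit variance; the test columns will be close to, but not exactly, standardized.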

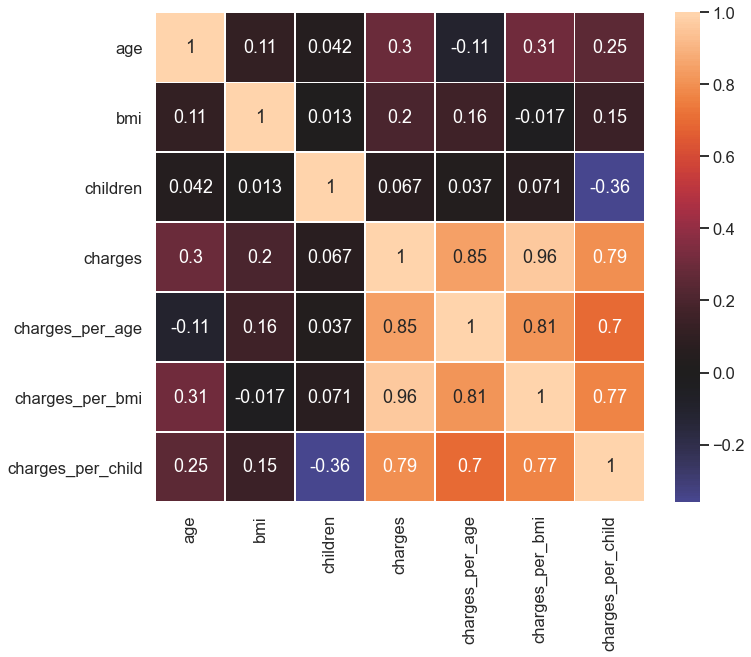

The categorical features were one-hot encoded, and their correlation with the target is shown below:

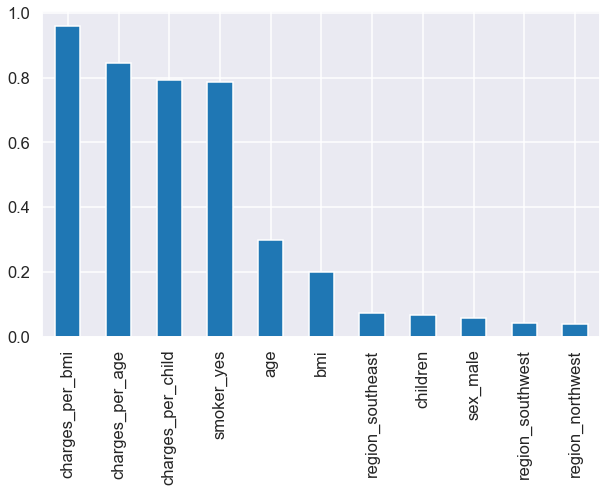

Correlation matrix for all variables (numeric and categorical).

The correlation between the target and the original features is still weak for all of them except `smoker_yes`. Finally, I show a bar plot of the correlation of every feature with the target variable, `charges`.
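A sketch of the one-hot encoding and the per-feature correlation behind such a bar plot, assuming pandas `get_dummies` with `drop_first=True` (one way to end up with a single `smoker_yes` column). The function name `target_correlations` and the demo rows are hypothetical; the notebook may encode the categories differently.

```python
import pandas as pd


def target_correlations(df: pd.DataFrame, target: str = "charges") -> pd.Series:
    """Hypothetical helper: Pearson correlation of each encoded feature with the target."""
    # get_dummies encodes the string columns; drop_first keeps k-1 dummies per
    # feature, so a yes/no "smoker" column becomes a single "smoker_yes" column.
    encoded = pd.get_dummies(df, drop_first=True, dtype=float)
    corr = encoded.drop(columns=target).corrwith(encoded[target])
    # Order by absolute strength, strongest first.
    return corr.reindex(corr.abs().sort_values(ascending=False).index)


# Hypothetical demo rows (not the real data): smokers get a large charge premium.
demo = pd.DataFrame({
    "age": [20, 30, 40, 50, 25, 35, 45, 55],
    "sex": ["female", "male"] * 4,
    "smoker": ["yes", "no", "no", "yes", "no", "yes", "no", "no"],
})
demo["charges"] = 20000 * (demo["smoker"] == "yes") + 100 * demo["age"]

print(target_correlations(demo))
# With matplotlib installed, target_correlations(demo).plot.barh() draws the bars.
```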

Correlation of the newly engineered features with the target variable, charges.

Since the goal is not just prediction but also interpretability, I started with interpretable models such as linear regression and a simple decision tree, and then moved on to a more complex ensemble of trees, RandomForest. I used these simpler models to establish a baseline before trying more complex models such as a deep neural network.

Models:

- LinearRegression

- SGDRegressor (to compare its performance with the batch approach of LinearRegression)

- DecisionTreeRegressor

- RandomForestRegressor

- LinearSVR

Error metric:

- Root mean squared error (RMSE)
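For reference, the RMSE over $n$ customers, where $y_i$ is the observed charge and $\hat{y}_i$ is the model's prediction, is:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}
$$

Because of the square root, the RMSE is in the same units as `charges`, so it reads roughly as a typical prediction error, with large errors weighted more heavily than in the mean absolute error.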

Training:

- Split the data into train and test sets using an 80/20 split. The `smoker_yes` feature has a class imbalance of roughly 80/20, so I used a stratified split to make sure this ratio is preserved in both sets.

- I applied cross-validation when training each model on the training set, then computed the training and validation errors, which were high for all of these models. This signals high bias (underfitting). RandomForest had the lowest validation error and was therefore chosen; its validation error is $973$.

- Since the model shows high bias, I increased its complexity by adding polynomial features of degree $2$. The new validation and generalization errors are $651$ and $1532$, respectively. Using this as my baseline, I then build a neural network to try to beat this performance.
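A minimal scikit-learn sketch of this procedure on synthetic stand-in data. The columns, the generated target (in arbitrary units), the 5-fold CV, and every hyperparameter are assumptions, not the notebook's settings. It stratifies the 80/20 split on `smoker_yes`, compares the five models by cross-validated RMSE on the training set, and then refits a RandomForest on degree-2 polynomial features.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression, SGDRegressor
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler
from sklearn.svm import LinearSVR
from sklearn.tree import DecisionTreeRegressor

# Hypothetical stand-in for the preprocessed features; not the real dataset.
rng = np.random.default_rng(0)
n = 400
X = pd.DataFrame({
    "age": rng.integers(18, 65, n),
    "bmi": rng.normal(30, 6, n),
    "children": rng.integers(0, 5, n),
    "smoker_yes": (rng.random(n) < 0.2).astype(int),  # roughly 80/20 imbalance
})
# Synthetic target in arbitrary units: a large smoker effect plus Gaussian noise.
y = 0.25 * X["age"] + 0.3 * X["bmi"] + 20 * X["smoker_yes"] + rng.normal(0, 3, n)

# 80/20 split, stratified on smoker_yes so both sets keep the same smoker ratio.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=X["smoker_yes"], random_state=42
)

models = {
    "LinearRegression": LinearRegression(),
    # SGD and LinearSVR are sensitive to feature scale, so they get a scaler.
    "SGDRegressor": make_pipeline(StandardScaler(), SGDRegressor(random_state=0)),
    "DecisionTreeRegressor": DecisionTreeRegressor(random_state=0),
    "RandomForestRegressor": RandomForestRegressor(n_estimators=100, random_state=0),
    "LinearSVR": make_pipeline(StandardScaler(), LinearSVR(max_iter=10_000, random_state=0)),
}


def cv_rmse(model):
    """Mean 5-fold cross-validated RMSE, computed on the training set only."""
    scores = cross_val_score(model, X_train, y_train, cv=5,
                             scoring="neg_root_mean_squared_error")
    return -scores.mean()


baseline = {name: cv_rmse(model) for name, model in models.items()}
for name, rmse in sorted(baseline.items(), key=lambda kv: kv[1]):
    print(f"{name:>22}: {rmse:.3f}")

# Degree-2 polynomial features in front of the forest to raise model capacity.
poly_rf = make_pipeline(
    PolynomialFeatures(degree=2),
    RandomForestRegressor(n_estimators=100, random_state=0),
)
poly_cv = cv_rmse(poly_rf)

# Only the final model is scored, once, on the held-out test set.
poly_rf.fit(X_train, y_train)
test_rmse = float(np.sqrt(np.mean((y_test - poly_rf.predict(X_test)) ** 2)))
print(f"poly RF  cv RMSE: {poly_cv:.3f}  test RMSE: {test_rmse:.3f}")
```

Keeping the test set out of model selection means the final test RMSE is an honest estimate of generalization error; scoring every candidate on it would turn it into a second validation set.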


<!-- confidence interval is $(544.66, 874.43)$ -->

I built neural networks with one and two hidden layers in TensorFlow; their generalization errors are