Result video can be find here : https://www.youtube.com/watch?v=-3WLUxz6XKM

demo_1.mp4

This repository contains a Wav2Lip UHQ extension for Automatic1111.

It's an all-in-one solution: just choose a video and a speech file (wav or mp3), and it will generate a lip-sync video. It improves the quality of the lip-sync videos generated by the Wav2Lip tool by applying specific post-processing techniques with Stable diffusion.

- latest version of Stable diffusion webui automatic1111

- FFmpeg

- Install Stable Diffusion WebUI by following the instructions on the Stable Diffusion Webui repository.

- Download FFmpeg from the official FFmpeg site. Follow the instructions appropriate for your operating system. Note that FFmpeg should be accessible from the command line.

- Launch Automatic1111

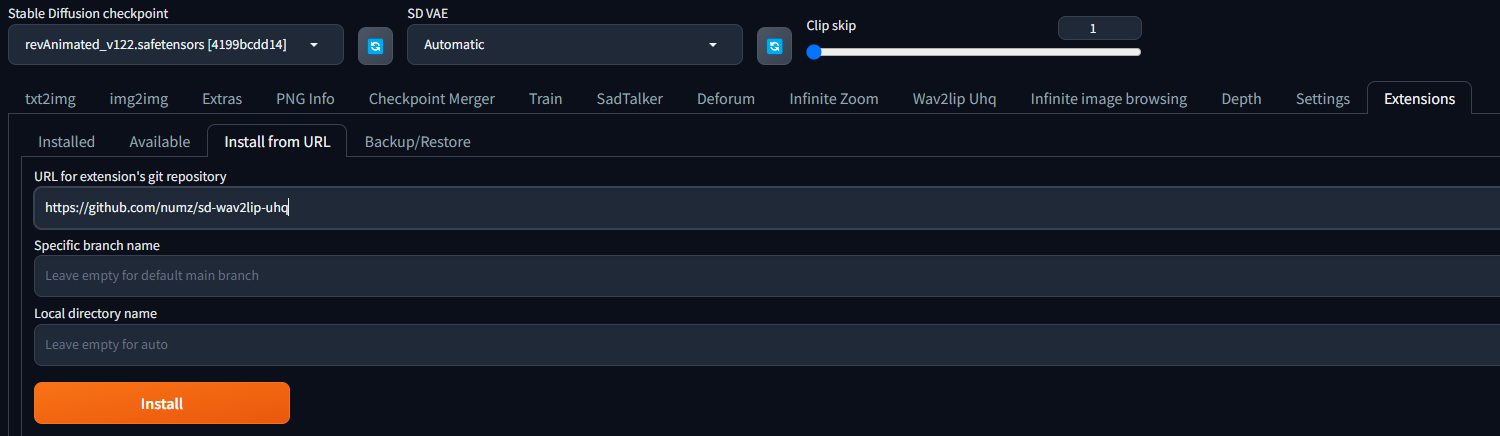

- In the extensions tab, enter the following URL in the "Install from URL" field and click "Install":

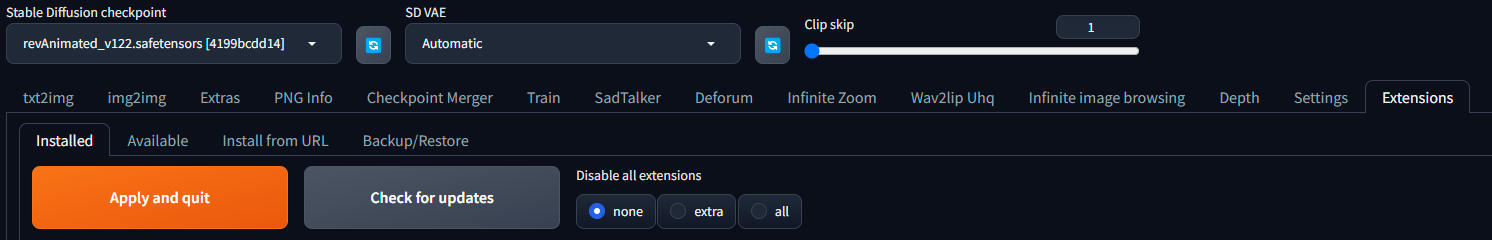

- Go to the "Installed Tab" in the extensions tab and click "Apply and quit".

-

if you don't see the "Wav2lip Uhq tab" restart automatic1111.

-

🔥 Important: Get the weights. Download the model weights from the following locations and place them in the corresponding directories:

| Model | Description | Link to the model | install folder |

|---|---|---|---|

| Wav2Lip | Highly accurate lip-sync | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\ |

| Wav2Lip + GAN | Slightly inferior lip-sync, but better visual quality | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\checkpoints\ |

| s3fd | Face Detection pre trained model | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\face_detection\detection\sfd\s3fd.pth |

| s3fd | Face Detection pre trained model (alternate link) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\face_detection\detection\sfd\s3fd.pth |

| landmark predicator | Dlib 68 point face landmark prediction (click on the download icon) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |

| landmark predicator | Dlib 68 point face landmark prediction (alternate link) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |

| landmark predicator | Dlib 68 point face landmark prediction (alternate link click on the download icon) | Link | extensions\sd-wav2lip-uhq\scripts\wav2lip\predicator\shape_predictor_68_face_landmarks.dat |

- Choose a video or an image.

- Choose an audio file with speech.

- choose a checkpoint (see table above).

- Padding: Wav2Lip uses this to add a black border around the mouth, which is useful to prevent the mouth from being cropped by the face detection. You can change the padding value to suit your needs, but the default value gives good results.

- No Smooth: If checked, the mouth will not be smoothed. This can be useful if you want to keep the original mouth shape.

- Resize Factor: This is a resize factor for the video. The default value is 1.0, but you can change it to suit your needs. This is useful if the video size is too large.

- Choose a good Stable diffusion checkpoint, like delibarate_v2 or revAnimated_v122 (SDXL models don't seem to work, but you can generate a SDXL image and change model for wav2lip process).

- Click on the "Generate" button.

This extension operates in several stages to improve the quality of Wav2Lip-generated videos:

- Generate a Wav2lip video: The script first generates a low-quality Wav2Lip video using the input video and audio.

- Mask Creation: The script creates a mask around the mouth and try to keep other face motion like cheeks and chin.

- Video Quality Enhancement: It takes the low-quality Wav2Lip video and overlays the low-quality mouth onto the high-quality original video.

- Img2Img: The script then sends the original image with the low-quality mouth and the mouth mask into Img2Img.

- Use a high quality image/video as input

- Try to minimize the grain on the face on the input as much as possible, for example you can try to use "Restore faces" in img2img before use an image as wav2lip input.

- Use a high quality model in stable diffusion webui like delibarate_v2 or revAnimated_v122

Contributions to this project are welcome. Please ensure any pull requests are accompanied by a detailed description of the changes made.

- The code in this repository is released under the MIT license as found in the LICENSE file.