This is a project of the XJTLU 2017 Summer Undergraduate Research Fellowship. It aims to design a generative adversarial network (GAN) that transfers the style of a style image onto a content image. Related literature can be found on the Wiki.

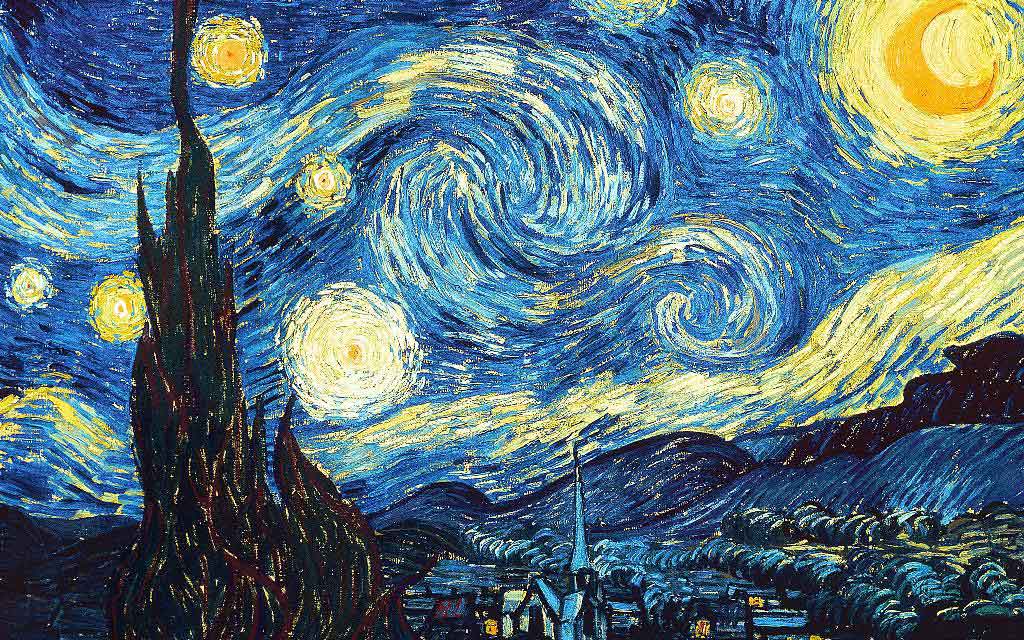

Neural Style Transfer is one of the cutting-edge topics in deep learning. Given a color content image and another image that contains the desired style, Neural Style Transfer combines the two into a single image that keeps the content of the first and the style of the second.
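In the common optimization-based formulation of neural style transfer (Gatys et al.), "style" is measured by the Gram matrix of a network layer's feature maps, that is, the correlations between its channels. The snippet below is a minimal NumPy sketch of that style loss, assuming feature maps shaped `(H, W, C)` that have already been extracted from some pretrained network; `gram_matrix` and `style_loss` are hypothetical helper names, not code from this project.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a feature map shaped (H, W, C), normalized by H * W."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)  # one row per spatial position
    return flat.T @ flat / (h * w)     # (C, C) channel-correlation matrix


def style_loss(generated, style):
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 8, 4))  # stand-in for real network activations
print(style_loss(feat, feat))  # 0.0
```

Because the Gram matrix sums over spatial positions, it discards *where* features occur and keeps only *which* features co-occur, which is why it captures texture and style rather than layout.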

Our goal is to implement neural style transfer using CycleGAN. We also want to go one step further with CAN, which can generate images on its own after sufficient training.
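CycleGAN trains two generators, G mapping domain X to domain Y (for example, photos to a target style) and F mapping Y back to X, and adds a cycle-consistency loss requiring F(G(x)) ≈ x and G(F(y)) ≈ y. A minimal NumPy sketch of that L1 loss follows, assuming the generators are plain callables on arrays; the toy generators in the usage lines are hypothetical stand-ins for trained networks.

```python
import numpy as np


def cycle_consistency_loss(G, F, x_batch, y_batch, weight=10.0):
    """L1 cycle-consistency loss as used in CycleGAN.

    G maps domain X -> Y and F maps Y -> X. `weight` plays the role of
    lambda in the CycleGAN paper, which uses 10.
    """
    forward = np.mean(np.abs(F(G(x_batch)) - x_batch))   # x -> G(x) -> F(G(x)) should return to x
    backward = np.mean(np.abs(G(F(y_batch)) - y_batch))  # y -> F(y) -> G(F(y)) should return to y
    return float(weight * (forward + backward))


def toy_G(a):
    return a + 1.0  # hypothetical "X to Y" generator


def toy_F_inverse(b):
    return b - 1.0  # exact inverse of toy_G


def toy_F_identity(b):
    return b        # not an inverse of toy_G


x = np.zeros((2, 4, 4, 3))  # toy batch from domain X
y = np.ones((2, 4, 4, 3))   # toy batch from domain Y
print(cycle_consistency_loss(toy_G, toy_F_inverse, x, y))   # 0.0
print(cycle_consistency_loss(toy_G, toy_F_identity, x, y))  # 20.0
```

In the full CycleGAN objective this term is added to the two adversarial losses; without it, G could map every content image to any plausible-looking image in the style domain, unrelated to its input.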

Although many well-performing deep learning frameworks exist (such as Caffe and Chainer), our group chose TensorFlow for its reliability and adaptability.

Edge detection has been implemented with the Keras deep learning framework. The result on a test image is:

The results are reasonable. For a non-anime photo, the output is:
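This README does not describe the edge-detection network itself. For comparison only, the snippet below computes a classical Sobel edge map in NumPy, a common non-learned baseline against which a learned edge detector can be judged. It is a swapped-in technique, not the project's Keras model, and `sobel_edges` is a hypothetical name.

```python
import numpy as np

# Horizontal-gradient Sobel kernel; its transpose gives the vertical gradient.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def sobel_edges(gray):
    """Gradient magnitude of a 2-D grayscale image (valid region only).

    Computes a 3x3 cross-correlation, as deep-learning conv layers do;
    the sign flip versus true convolution does not affect the magnitude.
    """
    h, w = gray.shape
    gx = np.zeros((h - 2, w - 2))
    gy = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            patch = gray[i:i + h - 2, j:j + w - 2]  # image shifted by (i, j)
            gx += SOBEL_X[i, j] * patch
            gy += SOBEL_Y[i, j] * patch
    return np.hypot(gx, gy)  # per-pixel gradient magnitude


img = np.zeros((5, 5))
img[:, 3:] = 1.0         # vertical step edge between columns 2 and 3
print(sobel_edges(img))  # nonzero only in the columns adjacent to the step
```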

More results produced with the Keras implementation are available at this [link](http://stellarcoder.com/surf/anime_test), created by DexHunter. The network was trained on Professor Flemming's workstation with 4 Titan X GPUs, and training took 2 weeks.

This file is essential for the network; it can be downloaded from here.