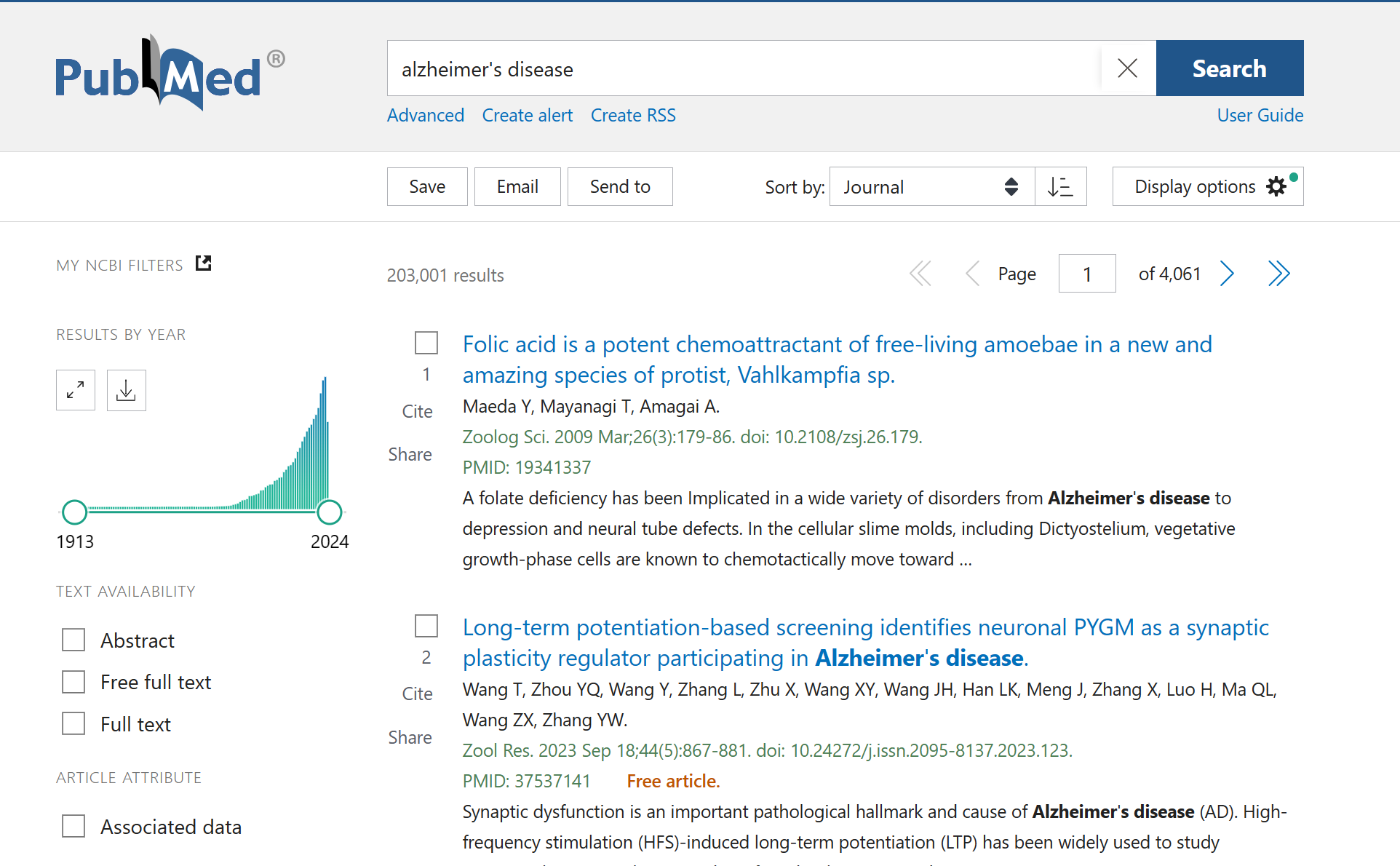

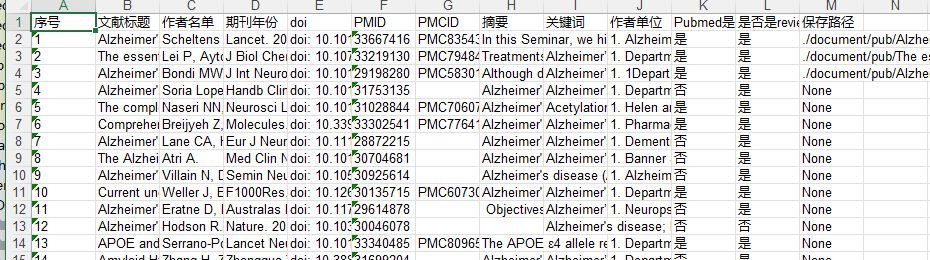

A tool for crawling and downloading PubMed literature information, built on aiohttp, pandas, and XPath. It retrieves literature information matching the parameters you provide and downloads the corresponding original PDFs, at roughly 1 second per article. It extracts most of the available literature information and automatically generates an Excel file containing titles, abstracts, keywords, author lists, author affiliations, whether the article is free, whether it is a review, and more.

After automatic downloading, some information will be stored in local text files for reference. Search data will be stored in an sqlite3 database. Finally, after execution, all information will be automatically exported and an Excel file will be generated.
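As a rough illustration of the storage step, the sketch below writes a few article records into an sqlite3 table and reads them back. The table name `articles` and its columns are hypothetical and are not taken from the project's actual schema.

```python
import sqlite3


def save_articles(conn, articles):
    """Create the (hypothetical) articles table if needed and upsert records."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles ("
        "pmid TEXT PRIMARY KEY, title TEXT, is_free INTEGER, is_review INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO articles "
        "VALUES (:pmid, :title, :is_free, :is_review)",
        articles,
    )
    conn.commit()


def load_articles(conn):
    """Return all stored records ordered by PMID."""
    cur = conn.execute(
        "SELECT pmid, title, is_free, is_review FROM articles ORDER BY pmid"
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # in-memory database, for the demo only
    save_articles(
        conn,
        [
            {"pmid": "100", "title": "Headache overview", "is_free": 1, "is_review": 1},
            {"pmid": "200", "title": "Toothache trial", "is_free": 0, "is_review": 0},
        ],
    )
    for row in load_articles(conn):
        print(row)
```

Using the PMID as the primary key means that re-running a search replaces existing rows instead of duplicating them.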

Developed on Python 3.9; later versions are also supported. It mainly uses pandas, XPath, asyncio, and aiohttp.

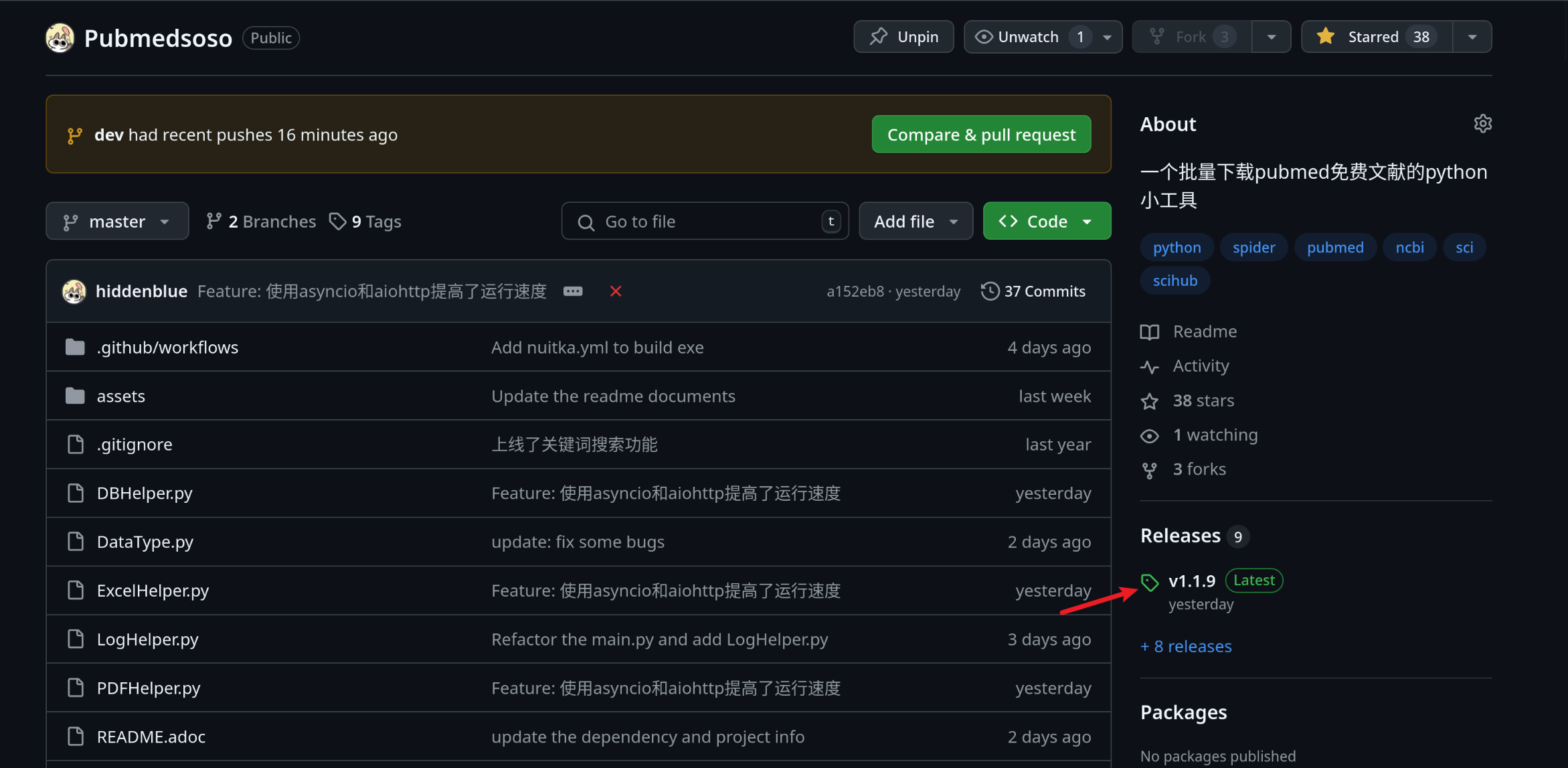

The Release section on the right side of the project page provides an executable file packaged with Nuitka. It includes all dependencies and can be downloaded and run directly from the Windows command line.

If you need to run it in a Python environment, please install the corresponding dependencies according to the requirements.txt file.

    asyncio~=3.4.3
    aiohttp~=3.11.8
    requests~=2.32.3
    lxml~=5.3.0
    pandas~=2.2.3
    openpyxl~=3.1.5
    colorlog~=6.9.0

Clone the project and install the required dependencies from the command line. It is recommended to use a Python virtual environment tool such as anaconda or miniconda3.

    git clone https://github.com/hiddenblue/Pubmedsoso.git
    cd Pubmedsoso
    pip install -r requirements.txt

If it is inconvenient to install git, you can download the ZIP directly from the Release section on the right side of the page and unzip it instead.

-

Switch to the project folder in the terminal and execute

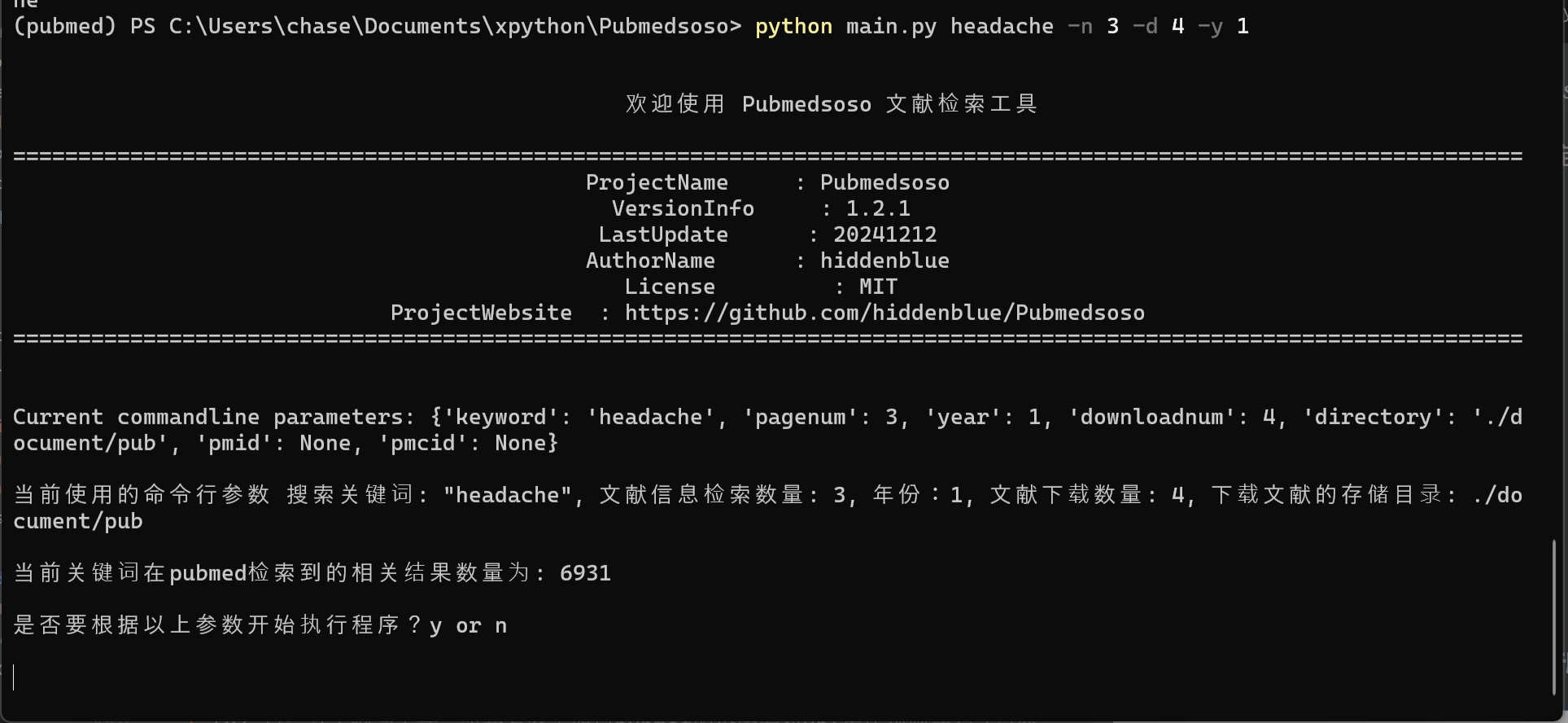

python main.pywith keyword parameters, or directly execute the executable filepubmedsoso.exe+ keywords. For example:

    python main.py -k headache -n 5 -d 10 -y 5

-k headache is the search keyword for this run. If your keyword contains spaces, enclose the entire keyword in double quotes.

It supports PubMed advanced query syntax, such as "headache AND toothache", including the logical operators AND, NOT, and OR.

-n parameter followed by a number refers to the number of pages to be searched, with 50 articles per page.

-d parameter followed by a number indicates the number of articles to be downloaded.

-y optional parameter, followed by the year range of the information you want to search, in years. For example, -y 5 means literature from the last five years.
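To make the relationship between -k, -n, and -y more concrete, here is a minimal sketch of how one search URL per page could be built. The query parameter names (`term`, `size`, `page`, `filter`) and the `datesearch.y_N` filter format are assumptions based on how PubMed website URLs appear to be structured. The function `build_search_urls` is hypothetical and is not the project's own URL generation code.

```python
from urllib.parse import urlencode

BASE_URL = "https://pubmed.ncbi.nlm.nih.gov/"
PAGE_SIZE = 50  # results per page, as stated above


def build_search_urls(keyword, page_num, years=None):
    """Return one search URL per result page (hypothetical helper)."""
    urls = []
    for page in range(1, page_num + 1):
        params = {"term": keyword, "size": PAGE_SIZE, "page": page}
        if years is not None:
            # Assumed filter format for "published within the last N years".
            params["filter"] = f"datesearch.y_{years}"
        # urlencode escapes spaces, so logical expressions stay in one parameter.
        urls.append(BASE_URL + "?" + urlencode(params))
    return urls


if __name__ == "__main__":
    for url in build_search_urls("headache AND toothache", page_num=2, years=5):
        print(url)
```

With -n 5, such a loop would cover at most 5 × 50 = 250 search results.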

Each page contains 50 search results, so there is no need to set a large page number; a large value will make the run take a long time.

The program finds free PMC articles among the search results and automatically downloads up to the number of articles you specified. The download speed depends on your network conditions.

If a single article download takes longer than 30 seconds, it automatically times out and the program skips to the next article.
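The timeout-and-skip behaviour can be sketched with `asyncio.wait_for`. This is only an illustration: `fake_download` is a hypothetical stand-in that sleeps instead of fetching a PDF, and the project's real download code may be organised differently.

```python
import asyncio

DOWNLOAD_TIMEOUT = 30  # seconds, as described above


async def fake_download(pmcid, delay):
    """Hypothetical stand-in for a PDF download that takes `delay` seconds."""
    await asyncio.sleep(delay)
    return f"{pmcid}.pdf"


async def download_all(jobs, timeout=DOWNLOAD_TIMEOUT):
    """Download each job in turn, skipping any that exceed the timeout."""
    saved = []
    for pmcid, delay in jobs:
        try:
            saved.append(await asyncio.wait_for(fake_download(pmcid, delay), timeout))
        except asyncio.TimeoutError:
            # The slow download is cancelled and the loop moves on.
            print(f"{pmcid}: timed out after {timeout}s, skipping")
    return saved


if __name__ == "__main__":
    # A short timeout is used here so the demo finishes quickly.
    jobs = [("PMC1", 0.0), ("PMC2", 1.0), ("PMC3", 0.0)]
    print(asyncio.run(download_all(jobs, timeout=0.1)))
```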

    PS > python main.py --help
    usage: python main.py -k keyword

    pubmedsoso is a python program for crawler article information and download pdf file

    optional arguments:
      --help, -h         show this help message and exit
      --version, -v      use --version to show the version
      --keyword, -k      specify the keywords to search pubmed
      --pagenum, -n      add --pagenum or -n to specify the page number to
      --year -y          add --year or -y to specify year scale you would to
      --downloadnum,-d   a digit number to specify the number to download
      --directory -D     use a vaild dir path specify the pdf save directory.
      --output -o        add --output filename to appoint name of pdf file
      --loglevel -l      set the console log level, e.g debug

If you are familiar with IDEs, you can also run main.py in a Python environment such as PyCharm or VS Code.
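For readers who want to see how the options in the help output above map to code, the following is a sketch of an `argparse` parser with the same flags. The defaults, types, and help strings are assumptions, the `--version` flag is left out because the version string is not given here, and the project's real parser may be defined differently.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented flags (defaults are assumed)."""
    parser = argparse.ArgumentParser(
        prog="python main.py",
        description="Crawl PubMed article information and download PDF files",
    )
    parser.add_argument("-k", "--keyword", required=True,
                        help="keywords to search PubMed for")
    parser.add_argument("-n", "--pagenum", type=int, default=1,
                        help="number of result pages to search (50 per page)")
    parser.add_argument("-y", "--year", type=int, default=None,
                        help="limit the search to the last N years")
    parser.add_argument("-d", "--downloadnum", type=int, default=0,
                        help="number of articles to download")
    parser.add_argument("-D", "--directory", default="document/pub",
                        help="directory in which PDFs are saved")
    parser.add_argument("-o", "--output", default=None,
                        help="file name given via --output")
    parser.add_argument("-l", "--loglevel", default="info",
                        help="console log level, e.g. debug")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["-k", "headache AND toothache", "-n", "5", "-d", "10", "-y", "5"]
    )
    print(args)
```

Note that `-d` and `-D` are distinct options because argparse treats option names as case-sensitive.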

- According to the prompt, enter y or n to decide whether to execute the program with the given parameters.

pubmedsoso will crawl and download according to the normal search order.

- The literature will be automatically downloaded to the "document/pub/" folder mentioned earlier, and a txt file with the original traversal information will be generated. Finally, an Excel file will be generated once the program has finished running.

- WARNING

- Please do not crawl the PubMed website excessively. Because this project uses asynchronous mechanisms, it is capable of high concurrency. Parameters related to access speed can be set in config.py, and the default values are not too large.
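One common way to keep an asynchronous crawler polite is to cap the number of requests in flight with `asyncio.Semaphore`. The sketch below illustrates the idea only: `MAX_CONCURRENCY` and `REQUEST_DELAY` are hypothetical names and are not the actual settings in config.py, and `fetch` sleeps instead of performing a real HTTP request.

```python
import asyncio

MAX_CONCURRENCY = 4   # hypothetical setting: requests allowed in flight at once
REQUEST_DELAY = 0.01  # hypothetical stand-in for the duration of one request


async def fetch(url, semaphore, stats):
    """Pretend to fetch `url` while holding one slot of the semaphore."""
    async with semaphore:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(REQUEST_DELAY)  # a real crawler would do HTTP here
        stats["active"] -= 1
        return url


async def crawl(urls, limit=MAX_CONCURRENCY):
    """Fetch all URLs concurrently, never exceeding `limit` at the same time."""
    semaphore = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(fetch(u, semaphore, stats) for u in urls))
    return results, stats["peak"]


if __name__ == "__main__":
    results, peak = asyncio.run(crawl([f"page-{i}" for i in range(20)]))
    print(len(results), "pages fetched, peak concurrency:", peak)
```

Lowering the limit trades speed for a lighter load on the server.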

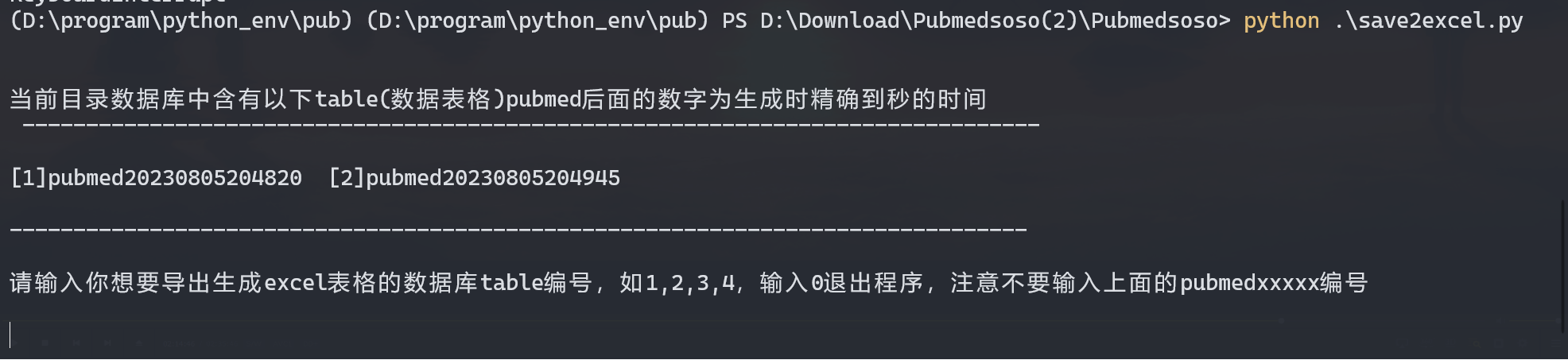

ExcelHelper is a module for exporting historical information to Excel after crawling. It can be run on its own, either from an IDE or from the command line with python ExcelHelper.py.

Following the prompt shown above, you can choose which historical records to export from the sqlite3 database, and an exported file is generated locally. An Excel file with the same name must not already exist; if it does, delete it as prompted.
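The export step, including the refusal to overwrite an existing Excel file, might look roughly like the sketch below. The table name `articles`, the function names, and the file names are hypothetical, and this is not the code in ExcelHelper.py. pandas is imported lazily so that the sqlite3 part runs without it; the Excel write itself needs pandas and openpyxl installed.

```python
import sqlite3
from pathlib import Path


def load_history(db_path, table="articles"):
    """Read every row of the (hypothetical) history table as a list of dicts."""
    conn = sqlite3.connect(db_path)
    conn.row_factory = sqlite3.Row
    try:
        # `table` is a trusted constant here, never user input.
        rows = conn.execute(f"SELECT * FROM {table}").fetchall()
    finally:
        conn.close()
    return [dict(row) for row in rows]


def export_history(rows, excel_path):
    """Write rows to an Excel file, refusing to overwrite an existing one."""
    excel_path = Path(excel_path)
    if excel_path.exists():
        raise FileExistsError(f"{excel_path} already exists; delete it and retry")
    import pandas as pd  # lazy import: only needed for the actual export

    pd.DataFrame(rows).to_excel(excel_path, index=False)
    return excel_path


if __name__ == "__main__":
    try:
        export_history(load_history("history.db"), "history.xlsx")
    except (sqlite3.OperationalError, FileExistsError) as exc:
        print("export skipped:", exc)
```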

- ❏ Precise search and download, this is still a bit difficult
- ✓ Custom keyword download, waiting for me to figure out the PubMed search parameter URL generation rules (already implemented)
- ❏ Automatic completion download of non-free literature via SciHub, perhaps allowing users to write adapters themselves
- ❏ A usable GUI
- ❏ It would be best to include a free Baidu translation plugin, which might be useful sometimes
- ✓ Refactor the project using OOP and more modern tools
- ✓ Refactor the code using asynchronous methods to improve execution efficiency
- ✓ A usable logging system may also be needed
- ❏ A subscription-based, proactive literature push mechanism could be developed to push the latest literature to users

Due to the peculiarities of the asyncio asynchronous module, some special issues may arise during debugging on Windows.

If you need to develop and debug the code, you need to modify two places:

In GetSearchResult.py:

    try:
        if platform.system() == "Windows":
            asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())
        html_list = asyncio.run(WebHelper.getSearchHtmlAsync(param_list))

If you are debugging on Windows, comment out the conditional statement above; otherwise it takes effect during debugging and causes errors.

Additionally, asyncio.run() is used in multiple places in the project. During debugging, its debug parameter needs to be enabled; otherwise the program gets stuck and reports the error TypeError: 'Task' object is not callable.
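Instead of editing the source for each debugging session, both adjustments could be driven by a single flag. The sketch below is only a suggestion under assumptions: the `debugging` parameter and the `get_search_html` coroutine are hypothetical and stand in for the project's own code.

```python
import asyncio
import platform


async def get_search_html(params):
    """Hypothetical stand-in for the project's asynchronous search request."""
    await asyncio.sleep(0)
    return [f"<html>{p}</html>" for p in params]


def run_search(params, debugging=False):
    """Run the coroutine, skipping the Windows policy while debugging."""
    if platform.system() == "Windows" and not debugging:
        # Only applied in normal runs, matching the advice above.
        asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())
    # debug=True enables asyncio's debug mode for this run.
    return asyncio.run(get_search_html(params), debug=debugging)


if __name__ == "__main__":
    print(run_search(["headache"], debugging=True))
```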

2022.5.16 Added automatic creation of the document/pub folder. The program now checks for the folder and creates it, so it no longer needs to be created manually.

2023.08.05 Fixed the bug where abstract crawling failed. Users no longer need to manually copy and paste webpage parameters.

2024.11.23 The author unexpectedly remembered this somewhat embarrassing project and quietly updated it, "Is this really the code I wrote? How could it be so bad?"

2024.12.02 Refactored the entire codebase using OOP, XPath, and asyncio, and removed the runtime speed limit. It now runs about 100 times faster than before. "I’m so tired after writing this."