
Because Qwen models have a very large vocabulary (151,936 tokens), their Embedding and LM Head weights are excessively large. This project therefore provides a tokenizer vocabulary pruning solution for Qwen and Qwen-VL.
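To make the cost concrete, the sketch below computes the number of weights in one `vocab_size × hidden_size` matrix and the fp16/bf16 memory of the Embedding plus LM Head. It rests on two assumptions: a hidden size of 4096 (a 7B-scale Qwen model) and untied Embedding/LM Head matrices. Check your model's `config.json`. The pruned size of 32,000 is a hypothetical example, not a number from this project.

```python
def matrix_params(vocab_size: int, hidden_size: int) -> int:
    """Number of weights in one vocab_size x hidden_size matrix (Embedding or LM Head)."""
    return vocab_size * hidden_size


def vocab_weight_bytes(vocab_size: int, hidden_size: int,
                       bytes_per_param: int = 2, untied: bool = True) -> int:
    """Bytes for the Embedding (plus LM Head if untied); 2 bytes/param for fp16/bf16."""
    n_matrices = 2 if untied else 1
    return n_matrices * matrix_params(vocab_size, hidden_size) * bytes_per_param


if __name__ == "__main__":
    HIDDEN = 4096  # assumption: hidden size of a 7B-scale Qwen model
    for vocab in (151_936, 32_000):  # 32,000 is a hypothetical pruned size
        params = matrix_params(vocab, HIDDEN)
        gib = vocab_weight_bytes(vocab, HIDDEN) / 2**30
        print(f"vocab={vocab:>7,}: {params:,} params per matrix, "
              f"{gib:.2f} GiB for Embedding + LM Head (fp16)")
```

Under these assumptions, the full vocabulary costs about 2.32 GiB for the two matrices, versus about 0.49 GiB at the hypothetical 32,000-token size.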

If my open-source projects have inspired you, sponsoring me would be a great help to my future open-source work ❤️🙏 (Previous Supporters)

Run the following command to install the required packages:

```bash
pip install -r requirements.txt
```

This tokenizer vocabulary pruning tool supports the following LLM models.

Please download your base model from the above checkpoints.

We support two types of tokenizer vocabulary pruning: lossless (no change in how the support data is tokenized) and lossy (pruned down to a target size).
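Conceptually, both modes start from how often each token of the original vocabulary is used when the support data is tokenized. The sketch below assumes the support data has already been tokenized into lists of token IDs. It keeps every observed token for the lossless case and only the `target_size` most frequent tokens for the lossy case. It only illustrates the idea: the project's scripts may also keep other tokens, for example special tokens or the intermediate tokens BPE needs to build longer ones. The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Set


def count_token_ids(tokenized_docs: Iterable[List[int]]) -> Counter:
    """Count how often each token ID occurs across the tokenized support data."""
    counts: Counter = Counter()
    for ids in tokenized_docs:
        counts.update(ids)
    return counts


def lossless_keep(counts: Counter) -> Set[int]:
    """Lossless: keep every token that the support data actually uses."""
    return {tid for tid, c in counts.items() if c > 0}


def lossy_keep(counts: Counter, target_size: int) -> Set[int]:
    """Lossy: keep only the `target_size` most frequent tokens (ties: first seen wins)."""
    return {tid for tid, _ in counts.most_common(target_size)}


if __name__ == "__main__":
    # Toy token IDs standing in for the old tokenizer's output on three support texts.
    docs = [[10, 11, 12], [11, 12], [12]]
    counts = count_token_ids(docs)
    print(sorted(lossless_keep(counts)))  # every observed ID
    print(sorted(lossy_keep(counts, 2)))  # the two most frequent IDs
```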

To conduct lossless vocabulary pruning, simply run the following script with your own data and model paths:

```bash
bash prune_lossless.sh
```

The script first prunes the vocabulary and saves it to the output path, and then checks whether the old and new tokenizers are equivalent.
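One way to picture the equivalence check: pruning renumbers token IDs, so the two tokenizers agree on a text if the old tokenizer's IDs, mapped through an old-to-new table, exactly reproduce the new tokenizer's output. The sketch below assumes kept tokens are renumbered in their original order and takes both encoders as plain callables. `build_id_map` and `is_equivalent` are hypothetical names, not the project's API.

```python
from typing import Callable, Dict, Iterable, List, Set

Encoder = Callable[[str], List[int]]


def build_id_map(kept_ids: Set[int]) -> Dict[int, int]:
    """Renumber kept token IDs to 0..N-1, preserving their original order (an assumption)."""
    return {old: new for new, old in enumerate(sorted(kept_ids))}


def is_equivalent(texts: Iterable[str], old_encode: Encoder,
                  new_encode: Encoder, old_to_new: Dict[int, int]) -> bool:
    """True if, for every text, the remapped old IDs equal the new tokenizer's IDs."""
    for text in texts:
        old_ids = old_encode(text)
        if any(i not in old_to_new for i in old_ids):
            return False  # the old tokenization uses a token that was pruned
        if [old_to_new[i] for i in old_ids] != new_encode(text):
            return False
    return True


if __name__ == "__main__":
    # Toy character-level "tokenizers": old IDs are code points, new IDs start at 0 for "a".
    old = lambda s: [ord(c) for c in s]
    new = lambda s: [ord(c) - ord("a") for c in s]
    id_map = build_id_map({ord("a"), ord("b"), ord("c")})
    print(is_equivalent(["abc", "cab"], old, new, id_map))  # only kept characters used
    print(is_equivalent(["abd"], old, new, id_map))         # "d" was pruned
```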

Explanation of the arguments used in the script:

```bash
old_model_path="../../checkpoints/Qwen-VL-Chat/"            # original (unpruned) model
new_model_path="../../checkpoints/Qwen-VL-Chat-new-vocab/"  # output path for the pruned model
support_data="../../VLMEvalKit/raw_data/"                   # folder of support data (JSON files)
support_lang=""         # optional (uses "langdetect"), e.g., support_lang="zh-cn en"
inherit_vocab_count=""  # optional
```
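The optional `support_lang` filter can be pictured as follows. The space-separated codes are split into a set, and a support text is kept only if its detected language is in that set; an empty value disables the filter. The detector is passed in as a callable so that the sketch runs without extra packages. In practice you would pass `langdetect.detect`, which returns codes such as `en` or `zh-cn`. The helper names are hypothetical, and this README does not say how the script handles texts whose language cannot be detected. This sketch simply drops them.

```python
from typing import Callable, Iterable, List, Set


def parse_support_lang(support_lang: str) -> Set[str]:
    """Turn e.g. "zh-cn en" into {"zh-cn", "en"}; an empty string means no filtering."""
    return set(support_lang.split())


def filter_by_language(texts: Iterable[str], support_lang: str,
                       detect: Callable[[str], str]) -> List[str]:
    """Keep texts whose detected language code is listed in support_lang."""
    allowed = parse_support_lang(support_lang)
    if not allowed:
        return list(texts)  # support_lang="" -> keep every text
    kept = []
    for text in texts:
        try:
            lang = detect(text)
        except Exception:
            # Assumption: texts the detector cannot handle (e.g. no letters) are dropped.
            continue
        if lang in allowed:
            kept.append(text)
    return kept
```

For example, with `from langdetect import detect`, call `filter_by_language(texts, "zh-cn en", detect)`. Setting `langdetect.DetectorFactory.seed = 0` beforehand makes detection deterministic across runs.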

Run the following script to conduct lossy vocabulary pruning down to a target size:

```bash
bash prune_lossy.sh
```

This script adds a `target_size` argument, which removes the less frequent tokens and therefore causes mismatches between the old and new tokenizers. For this reason, it no longer runs the equivalence check.
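Removing tokens changes tokenization because of how Qwen's tiktoken-style byte-level BPE works: it repeatedly merges the adjacent pair whose concatenation has the lowest rank. The toy encoder below is a simplified, quadratic-time sketch, not tiktoken's implementation. It shows that dropping a token such as `abc` (pretend it is rarely used) splits the same text into more pieces, which is exactly the mismatch that makes the equivalence check pointless in lossy mode. It also shows that dropping an intermediate token (`ab`) blocks a longer token built from it, even when the longer token is kept.

```python
from typing import Dict, List


def bpe_encode(text: bytes, ranks: Dict[bytes, int]) -> List[int]:
    """Toy byte-level BPE: merge the lowest-ranked adjacent pair until none is in `ranks`.

    Assumes every single byte of `text` has a rank, as in real byte-level BPE vocabularies.
    """
    parts = [bytes([b]) for b in text]
    while True:
        best_rank, best_i = None, None
        for i in range(len(parts) - 1):
            rank = ranks.get(parts[i] + parts[i + 1])
            if rank is not None and (best_rank is None or rank < best_rank):
                best_rank, best_i = rank, i
        if best_i is None:
            break  # no mergeable pair left
        parts[best_i:best_i + 2] = [parts[best_i] + parts[best_i + 1]]
    return [ranks[p] for p in parts]


if __name__ == "__main__":
    full = {b"a": 0, b"b": 1, b"c": 2, b"ab": 3, b"abc": 4}
    lossy = {k: v for k, v in full.items() if k != b"abc"}    # pretend "abc" is rare
    no_inter = {k: v for k, v in full.items() if k != b"ab"}  # intermediate token removed
    for name, ranks in [("full", full), ("lossy", lossy), ("no 'ab'", no_inter)]:
        print(name, bpe_encode(b"abc", ranks))
```

The three runs print `[4]`, `[3, 2]`, and `[0, 1, 2]`: the same text gets a different token sequence as soon as the vocabulary changes.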

- For `support_lang`, note that language detection uses the `langdetect` package, so please use valid langdetect language codes (e.g., `zh-cn`, `en`).

- Post processing

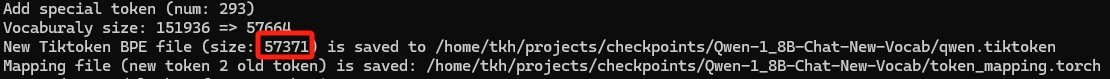

For Qwen models, change SPECIAL_START_ID in tokenization_qwen.py to your New Tiktoken BPE file Size, check printed log (see the following example).
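This step matters because, as far as we can tell from Qwen's `tokenization_qwen.py`, the special tokens (`<|endoftext|>`, `<|im_start|>`, `<|im_end|>`, ...) receive consecutive IDs starting at `SPECIAL_START_ID`. That constant therefore has to equal the number of regular tokens in the pruned BPE file. Otherwise the special-token IDs would overlap the pruned vocabulary or leave a gap after it. The sketch below derives that value. It assumes the usual tiktoken file format (one `<base64 token> <rank>` pair per line) and ranks renumbered to 0..N-1. `read_bpe_ranks`, `special_start_id`, and `number_special_tokens` are hypothetical helpers; the pruning script's printed log remains the reference value.

```python
import base64
from typing import Dict, Iterable, List, Tuple


def read_bpe_ranks(path: str) -> Dict[bytes, int]:
    """Read a tiktoken BPE file: each non-empty line is '<base64 token> <rank>'."""
    ranks: Dict[bytes, int] = {}
    with open(path, "rb") as f:
        for line in f.read().splitlines():
            if line:
                token, rank = line.split()
                ranks[base64.b64decode(token)] = int(rank)
    return ranks


def special_start_id(ranks: Dict[bytes, int]) -> int:
    """Value for SPECIAL_START_ID: the number of regular tokens, if ranks are 0..N-1."""
    n = len(ranks)
    if sorted(ranks.values()) != list(range(n)):
        raise ValueError("ranks are not contiguous 0..N-1; renumber the pruned vocabulary")
    return n


def number_special_tokens(start: int, names: Iterable[str]) -> List[Tuple[int, str]]:
    """Assign consecutive IDs to special tokens right after the regular vocabulary."""
    return list(enumerate(names, start=start))


if __name__ == "__main__":
    import os
    import tempfile

    # Write a tiny toy BPE file (3 tokens) in the same line format, then read it back.
    toy = {b"a": 0, b"b": 1, b"ab": 2}
    with tempfile.NamedTemporaryFile("wb", suffix=".tiktoken", delete=False) as f:
        for tok, rank in toy.items():
            f.write(base64.b64encode(tok) + b" " + str(rank).encode() + b"\n")
    ranks = read_bpe_ranks(f.name)
    os.remove(f.name)
    start = special_start_id(ranks)
    print(start, number_special_tokens(start, ["<|endoftext|>", "<|im_start|>", "<|im_end|>"]))
```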

We provide sample data in "./sample_data/x.json" as an example of the support data used for vocabulary pruning. Each file contains either a dictionary with a query and a response (Format A) or a dictionary holding plain text (Format B).

Support Data Format A:

```json
{
  "query": "Picture 1: <img>/YOUR_OWN_PATH/MMBench/demo.jpg</img>\nWhat is in the image? (This query will be tokenized with the system prompt)",
  "response": "A white cat. (This response will be directly tokenized from plain text)"
}
```

Support Data Format B:

```json
{
  "prompt": "In the heart of the open sky, Where the winds of change freely sigh, A soul finds its endless flight, In the boundless realms of light. (This prompt will be directly tokenized from plain text)"
}
```
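A minimal reader for the two support-data formats might look like the sketch below. It assumes each JSON file holds either one such dictionary or a list of them, and that files may sit in subfolders of `support_data`. Each string is tagged with how it should be tokenized: `"chat"` for a Format A `query` (which gets the system prompt) and `"plain"` for a Format A `response` or a Format B `prompt`. The helper names are hypothetical, and applying the actual system-prompt template is left to the caller.

```python
import json
from pathlib import Path
from typing import Any, Dict, List, Tuple

# (mode, text): "chat" texts get the system prompt, "plain" texts are tokenized as-is.
Item = Tuple[str, str]


def items_from_record(record: Dict[str, Any]) -> List[Item]:
    """Map one support-data dictionary (Format A or B) to the texts to tokenize."""
    if "query" in record and "response" in record:  # Format A
        return [("chat", record["query"]), ("plain", record["response"])]
    if "prompt" in record:  # Format B
        return [("plain", record["prompt"])]
    raise ValueError(f"unrecognized support-data keys: {sorted(record)}")


def load_support_dir(folder: str) -> List[Item]:
    """Read every *.json file under `folder`; each holds one dictionary or a list of them."""
    items: List[Item] = []
    for path in sorted(Path(folder).rglob("*.json")):
        data = json.loads(path.read_text(encoding="utf-8"))
        records = data if isinstance(data, list) else [data]
        for record in records:
            items.extend(items_from_record(record))
    return items
```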

If you find this project helpful for your research, please consider citing it in your publications:

```bibtex
@misc{tang2024tokenizerpruner,
  title  = {Qwen Tokenizer Pruner},
  author = {Tang, Kaihua},
  year   = {2024},
  note   = {\url{https://github.com/KaihuaTang/Qwen-Tokenizer-Pruner}},
}
```