
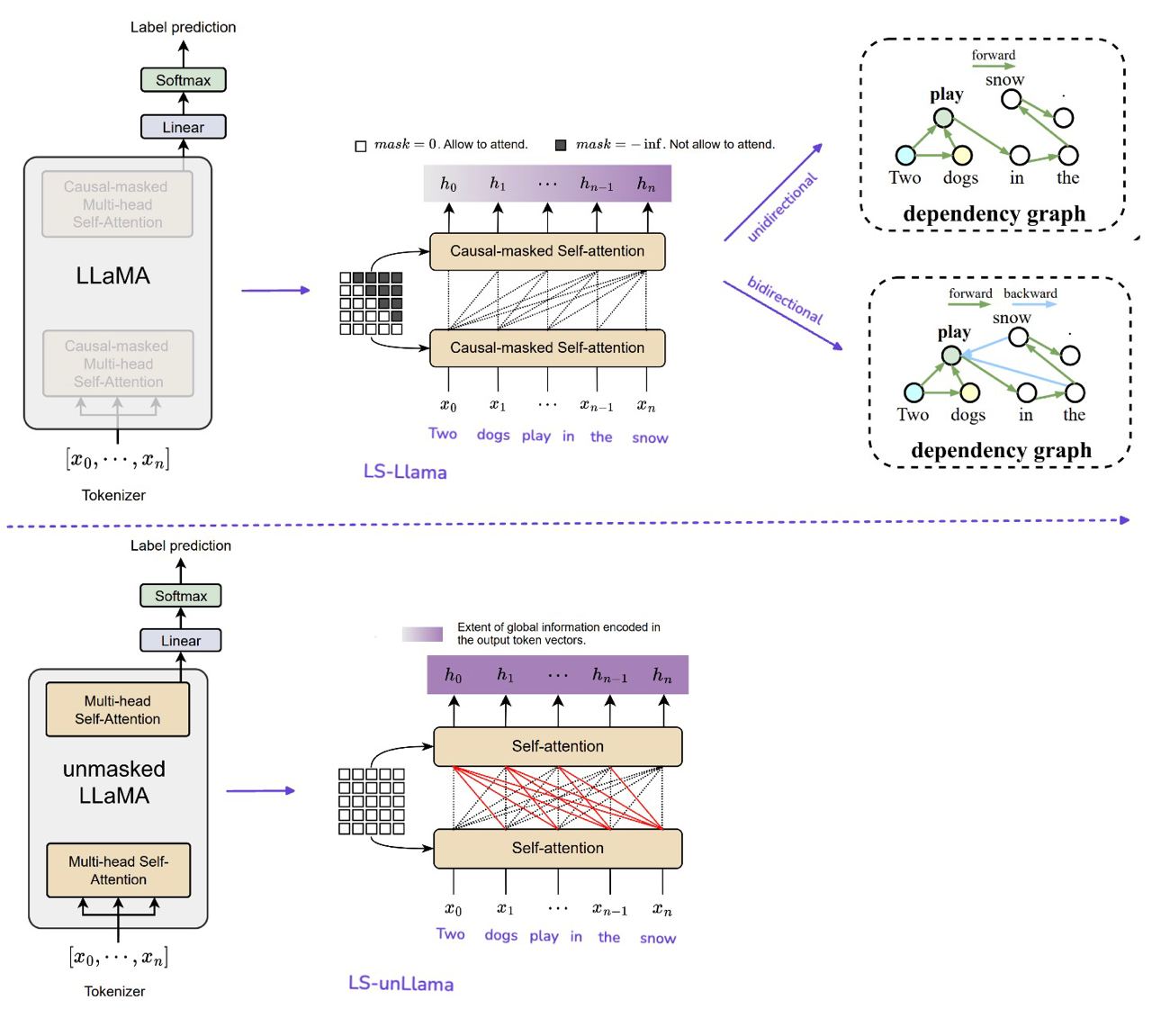

The widespread use of large language models (LLMs) affects social media and educational contexts, which are flooded with generated text that is often fairly coherent. To mitigate potential misuse, this repo explores the feasibility of fine-tuning LLaMA with label supervision (named LS-LLaMA), in both unidirectional and bidirectional settings, to discriminate between machine-generated and human-written texts in monolingual and multilingual corpora.
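The label-supervision idea can be illustrated with a minimal plain-Python sketch. It rests on several assumptions that are not taken from this repo: a toy single-head attention layer stands in for LLaMA's decoder stack, the unidirectional setting uses a causal mask with last-token pooling, the bidirectional setting removes the mask and mean-pools, and a linear head trained with cross-entropy maps the pooled vector to labels (hypothetically 0 = human, 1 = machine). All function names are hypothetical; this is not the repo's implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def attention_mask(seq_len: int, bidirectional: bool) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Unidirectional (causal): position i sees only positions j <= i.
    Bidirectional: every position sees the whole sequence.
    """
    return [[bidirectional or j <= i for j in range(seq_len)] for i in range(seq_len)]


def masked_self_attention(x: Matrix, mask: List[List[bool]]) -> Matrix:
    """Toy single-head scaled dot-product attention.

    Identity query/key/value projections are used for brevity; a real
    decoder layer has learned projections, multiple heads and an MLP.
    """
    d = len(x[0])
    out: Matrix = []
    for i, q in enumerate(x):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d) if mask[i][j] else -math.inf
            for j, k in enumerate(x)
        ]
        top = max(scores)  # finite, because every position may attend to itself
        weights = [math.exp(s - top) for s in scores]  # masked scores become 0.0
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, x)) / total for c in range(d)])
    return out


def pool(hidden: Matrix, bidirectional: bool) -> Vector:
    """Mean pooling for the bidirectional model, last-token pooling otherwise (assumed)."""
    if bidirectional:
        return [sum(col) / len(hidden) for col in zip(*hidden)]
    return hidden[-1]  # under a causal mask only the last token has seen every token


def label_probs(pooled: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Linear classification head followed by a numerically stable softmax."""
    logits = [sum(w * p for w, p in zip(row, pooled)) + b for row, b in zip(weight, bias)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs: Vector, label: int) -> float:
    """Label-supervised training loss for a single example."""
    return -math.log(probs[label])


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy token representations
    head_w = [[0.5, -0.5], [-0.5, 0.5]]            # hypothetical 2-label head
    head_b = [0.0, 0.0]
    for bidirectional in (False, True):
        mask = attention_mask(len(tokens), bidirectional)
        hidden = masked_self_attention(tokens, mask)
        probs = label_probs(pool(hidden, bidirectional), head_w, head_b)
        print(f"bidirectional={bidirectional} probs={probs} loss={cross_entropy(probs, label=1):.4f}")
```

In this sketch, switching the mask (and the pooling that goes with it) is the only difference between the two settings; the classification head and loss stay the same.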

Please check out the webpage of the Workshop on Detecting AI Generated Content.

We evaluate the feasibility of our approach on the English and multilingual corpora from Wang et al. (2025). Both corpora continue and improve on those of Wang et al. (2024a), adding training and test data generated by newer LLMs and covering additional languages.