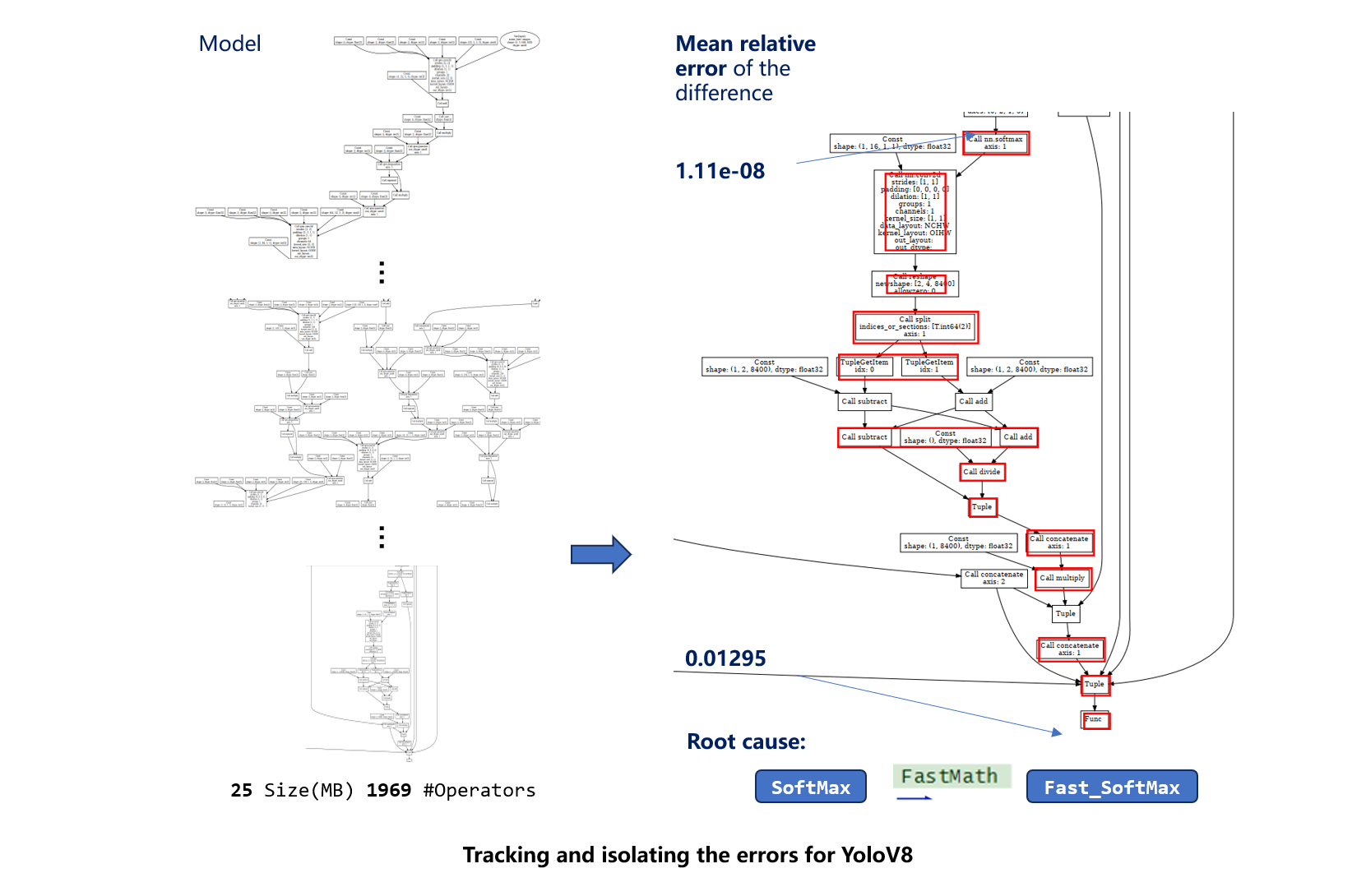

To diagnose compiler-introduced numerical errors in NN models, we introduce TracNe, an automated approach that performs two tasks: given an NN model, a valid input range, and specific compilation options, it detects DL compiler (DLC)-related numerical errors and traces them. Results on two benchmarks show that TracNe helps both DL compiler users and developers locate erroneous code and optimization passes. It can also serve as a unit test for checking a model's robustness.

| DL Compiler / Frontend Model | ONNX | PyTorch | TensorFlow2 |
|---|---|---|---|
| TVM | ✅ | ✅ | ✅ |
| XLA | 🔨 | | |
| GLOW | 🔨 | | |

✅: Supported; 🔨: In development.

- `src`: implementation of our approach;
- `src/op`: operators supported by TracNe, which can be extended;
- `gencog`: scripts for generating the benchmark of Wang et al.;
- `tests`: interfaces (entry scripts) of our approach;
- `bug`: bug list and TVM bug reports.

TracNe is written in Python. Run `pip install -r requirements.txt` to install the Python dependencies.

- TVM should be built in debug mode.

- Download the TVM source code, version 0.12.dev0 or later.

- In `config.cmake`, set the `USE_PROFILER` flag to `ON`.

- Build TVM from source. To enable all features, modify `src/relay/backend/graph_executor_codegen.cc` according to [PR #16882](https://github.com/apache/tvm/pull/16882/files) before building.
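Before running TracNe, it can help to confirm that the installed TVM meets the version and profiler requirements listed above. The sketch below assumes that `tvm.support.libinfo()` reports build flags such as `USE_PROFILER` as strings; the helper `check_tvm_build` is hypothetical and not part of TracNe, and it does not verify the debug build or the PR #16882 patch.

```python
import re


def check_tvm_build(libinfo: dict, version: str) -> list[str]:
    """Return a list of problems with a TVM build; an empty list means OK.

    `libinfo` maps build-flag names to values (assumed to come from
    tvm.support.libinfo()); `version` is a string such as "0.12.dev0".
    """
    problems = []
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        problems.append(f"cannot parse TVM version {version!r}")
    elif (int(match.group(1)), int(match.group(2))) < (0, 12):
        # 0.12.dev0 is the oldest accepted version, so any 0.12.x or newer passes.
        problems.append(f"TVM {version} is older than 0.12.dev0")
    flag = str(libinfo.get("USE_PROFILER", "")).upper()
    if flag not in ("ON", "1", "TRUE"):
        problems.append("USE_PROFILER is not ON in config.cmake")
    return problems
```

With TVM importable, `check_tvm_build(tvm.support.libinfo(), tvm.__version__)` should return an empty list for a suitable build.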

One benchmark, containing 60 general NN models, is provided in `tests/out`.

Industrial models can be downloaded by following `tests/dnn/readme.md`.

```bash
cd tests
python test_fuzzer.py model_dir --low 0 --high 1 --optlevel 5
```

The tested model should be placed in `out/model_dir`. After the script finishes, the erroneous input that triggers the maximal error is stored in this directory. The arguments `--low` and `--high` constrain the input range. Users can control isolation granularity by

If the model is numerically safe under the selected compilation options and input range, the errors found by the search are zero or below the tolerance. Otherwise, the model is susceptible to the compiler's optimizations.
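To make the detection step concrete, the toy sketch below searches an input range for the value that maximizes the relative error between a float64 "un-optimized" function and a variant that rounds an intermediate result to float32, then applies the tolerance check described above. It is not TracNe's MEGA algorithm: the two stand-in functions, the relative-error metric, the mutation scheme, and all helper names are assumptions for illustration.

```python
import math
import random
import struct


def to_float32(v: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", v))[0]


def reference(x: float) -> float:
    # Hypothetical stand-in for the un-optimized model, computed in float64.
    return math.log(1.0 + x)


def optimized(x: float) -> float:
    # Hypothetical stand-in for the compiled model: 1 + x is rounded to float32.
    return math.log(to_float32(1.0 + x))


def rel_error(ref: float, out: float, eps: float = 1e-12) -> float:
    return abs(out - ref) / max(abs(ref), eps)


def search_max_error(ref_fn, opt_fn, low, high, iters=2000, seed=0):
    """Hill climbing with random restarts for the input in [low, high]
    that maximizes the relative error between ref_fn and opt_fn."""
    rng = random.Random(seed)
    best_x = rng.uniform(low, high)
    best_err = rel_error(ref_fn(best_x), opt_fn(best_x))
    for _ in range(iters):
        if rng.random() < 0.2:
            x = rng.uniform(low, high)  # occasional restart anywhere in the range
        else:
            x = best_x + rng.gauss(0.0, 0.05 * (high - low))  # mutate the best input
        x = min(max(x, low), high)  # stay inside the valid input range
        err = rel_error(ref_fn(x), opt_fn(x))
        if err > best_err:
            best_x, best_err = x, err
    return best_x, best_err


def is_safe(max_err: float, tolerance: float) -> bool:
    # Safe when the largest error found is zero or below the tolerance.
    return max_err == 0.0 or max_err < tolerance
```

For example, `search_max_error(reference, optimized, 0.0, 1.0)` mirrors `--low 0 --high 1`; passing the returned error to `is_safe` with a chosen tolerance gives the verdict described above.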

```bash
python test_replay.py model_dir
```

It reproduces the errors by running the optimized and unoptimized executables on the searched input. Meanwhile, it stores the concrete results of each function in both models.
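As an illustration of recording per-function results during replay, the sketch below models each executable as an ordered list of named functions and stores every intermediate value. The two toy models are assumptions, including the hypothetical fused function `fused_log1p_scale`, which stands for a compiler rewriting `log1p(x)` as `log(1 + x)` while fusing it with the following scale; TracNe itself runs the compiled executables.

```python
import math


def run_and_record(functions, x):
    """Run a chain of named functions on input x and record every
    intermediate result, keyed by function name (insertion-ordered)."""
    trace = {}
    value = x
    for name, fn in functions:
        value = fn(value)
        trace[name] = value
    return value, trace


# Hypothetical un-optimized model: three separate functions.
unoptimized = [
    ("log1p", math.log1p),
    ("scale", lambda v: 2.0 * v),
    ("square", lambda v: v * v),
]

# Hypothetical optimized model: log1p and scale fused, with log1p lowered to log(1 + x).
optimized = [
    ("fused_log1p_scale", lambda v: 2.0 * math.log(1.0 + v)),
    ("square", lambda v: v * v),
]
```

Calling `run_and_record` on both models with the searched input yields two traces whose function names and granularity differ, which is why the next step has to match functions before comparing them.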

```bash
python test_traceerror.py model_dir
```

It matches corresponding functions between the symbolic optimized and unoptimized models and compares the results of each matched, equivalent pair. The matching and comparison information is saved in `model_dir/trace.json`.
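The sketch below compares matched function pairs and serializes the records as JSON. TracNe derives the correspondence symbolically; here it is passed in explicitly as a dict, which is an assumption, and the record fields are hypothetical rather than the actual `trace.json` schema.

```python
import json


def compare_traces(trace_unopt, trace_opt, matching, eps=1e-12):
    """Compare the results of matched function pairs.

    `matching` maps an optimized function name to the un-optimized function
    whose output it should equal (given by hand here, for illustration).
    """
    records = []
    for opt_name, unopt_name in matching.items():
        ref, out = trace_unopt[unopt_name], trace_opt[opt_name]
        diff = abs(out - ref)
        records.append({
            "optimized": opt_name,
            "unoptimized": unopt_name,
            "reference": ref,
            "result": out,
            "abs_error": diff,
            "rel_error": diff / max(abs(ref), eps),
        })
    return records


def save_trace(records, path):
    # Write the comparison records as human-readable JSON.
    with open(path, "w") as f:
        json.dump(records, f, indent=2)
```

A fused optimized function is matched to the last un-optimized function it covers, for example `{"fused_log1p_scale": "scale", "square": "square"}` for the toy models of the replay step.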

```bash
python test_propa.py model_dir
```

It backtracks how errors accumulate along the computation graph. For each output with a discrepancy, it generates an error-accumulation graph that shows where the errors are generated and how they are amplified. If an error arises in function A, developers can consult `trace.json` to see how A was optimized and transformed during compilation.
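A minimal sketch of the backtracking idea, assuming per-node errors are already known and the graph is given as a node-to-inputs mapping. A node whose error exceeds the threshold while all of its inputs stay below it is treated as the place where the error is generated, and each edge carries the ratio of errors as a rough amplification factor. The threshold, the ratio measure, and the function name are assumptions, not TracNe's definitions.

```python
def error_accumulation_graph(inputs, errors, output, tol=1e-6):
    """Walk backwards from `output` through nodes whose error exceeds `tol`.

    `inputs` maps a node to the nodes it consumes; `errors` maps a node to
    the error of its result. Returns (edges, origins): edges are
    (producer, consumer, amplification) triples, and origins are nodes
    whose error is not explained by any erroneous input.
    """
    edges, origins = [], []
    stack, seen = [output], set()
    while stack:
        node = stack.pop()
        if node in seen or errors.get(node, 0.0) <= tol:
            continue
        seen.add(node)
        bad_inputs = [p for p in inputs.get(node, []) if errors.get(p, 0.0) > tol]
        if not bad_inputs:
            origins.append(node)  # the error is generated at this node
        for p in bad_inputs:
            # errors[p] > tol > 0, so the ratio is well defined.
            edges.append((p, node, errors[node] / errors[p]))
            stack.append(p)
    return edges, origins
```

For a chain `x -> conv -> relu -> exp -> sum` in which `conv` first shows an error and `exp` magnifies it, the sketch reports `conv` as the origin and a large factor on the `relu -> exp` edge.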

```bash
python test_pass.py model_dir
```

This step isolates the optimization pass that introduces the numerical errors. Users can then disable that pass to keep the model numerically robust.
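In TVM, individual passes can be switched off through `tvm.transform.PassContext(opt_level=..., disabled_pass=[...])`. The sketch below assumes a hypothetical oracle `has_error(disabled)` that rebuilds the model with the given passes disabled, replays the erroneous input, and reports whether the discrepancy is still above tolerance. It returns each pass whose removal alone makes the error disappear; this one-at-a-time strategy is an assumption and can miss errors that only arise when several passes interact.

```python
def isolate_passes(passes, has_error):
    """Return the passes whose individual removal eliminates the error.

    `has_error(disabled)` is a hypothetical oracle: it compiles the model with
    the passes in the frozenset `disabled` turned off and replays the input.
    """
    if not has_error(frozenset()):
        return []  # nothing to isolate: the default build is already clean
    return [p for p in passes if not has_error(frozenset({p}))]
```

A real oracle would wrap the build and replay steps; disabling the reported pass then gives a numerically safer build at some cost in performance.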

```bash
python test_batch.py model_dir1-model_dir9
```

The scripts above are integrated into a single file that detects and diagnoses numerical errors in a batch of models.
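The sketch below assumes that `model_dir1-model_dir9` denotes a numbered range of model directories and chains the per-model scripts above through `subprocess`. It is not the contents of `test_batch.py`: `expand_model_range`, `pipeline_commands`, and `run_batch` are hypothetical, and the fuzzer's `--low`, `--high`, and `--optlevel` options are omitted.

```python
import re
import subprocess

# The per-model stages, in the order described above.
STAGES = [
    ["python", "test_fuzzer.py"],
    ["python", "test_replay.py"],
    ["python", "test_traceerror.py"],
    ["python", "test_propa.py"],
    ["python", "test_pass.py"],
]


def expand_model_range(spec):
    """Expand 'model_dir1-model_dir9' into ['model_dir1', ..., 'model_dir9']."""
    m = re.fullmatch(r"(\D+)(\d+)-\1(\d+)", spec)
    if m is None:
        return [spec]  # anything else is treated as a single model directory
    prefix, start, end = m.group(1), int(m.group(2)), int(m.group(3))
    return [f"{prefix}{i}" for i in range(start, end + 1)]


def pipeline_commands(model_dir):
    return [stage + [model_dir] for stage in STAGES]


def run_batch(spec, cwd="tests"):
    # Stop at the first failing stage (check=True raises CalledProcessError).
    for model_dir in expand_model_range(spec):
        for cmd in pipeline_commands(model_dir):
            subprocess.run(cmd, cwd=cwd, check=True)
```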

We provide comparison methods to evaluate the performance and efficiency of TracNe.

```bash
python test_fuzzer.py model_dir --method MEGA   # or MCMC, DEMC
```

`--method` selects the detection algorithm: MEGA is our algorithm, MCMC is from Yu et al., and DEMC was devised by Yi et al.

```bash
python test_pliner.py model_dir
```

This method is implemented following Guo et al.

The searching and tracing utilities can be reused, e.g., `mutate_utils.py` and `fuzzer.py`.

To support a new DL compiler, update the following:

- `build_workload`: function in `base_utils.py` that compiles models and builds executables.

- `run_mod`: function in `base_utils.py` that runs the executables.

- `src/pass`: names of the DL compiler's passes.

- `src/op`: operators unique to the DL compiler.
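A sketch of the two hooks as a structural interface may help when porting. The `CompilerBackend` protocol, the `ToyBackend` class, `diff_outputs`, and every parameter shown are hypothetical; only the names `build_workload` and `run_mod` come from this README, and the real signatures in `base_utils.py` may differ. Treating `opt_level=0` as the un-optimized reference is also an assumption.

```python
from typing import Any, Protocol, Sequence


class CompilerBackend(Protocol):
    """Hypothetical interface mirroring the hooks a new DL compiler needs."""

    def build_workload(self, model: Any, opt_level: int, disabled_passes: Sequence[str]) -> Any:
        """Compile `model` and return an executable."""
        ...

    def run_mod(self, executable: Any, inputs: dict) -> list:
        """Run the executable on `inputs` and return its outputs."""
        ...


class ToyBackend:
    """Toy backend whose 'model' is a plain Python callable (illustration only)."""

    def build_workload(self, model, opt_level, disabled_passes=()):
        # A real backend would invoke the compiler here, honoring opt_level
        # and the disabled passes; this toy just records them.
        return {"fn": model, "opt_level": opt_level, "disabled": tuple(disabled_passes)}

    def run_mod(self, executable, inputs):
        return [executable["fn"](**inputs)]


def diff_outputs(backend: CompilerBackend, model, inputs, opt_level):
    """Absolute output differences between an optimized and an opt_level=0 build."""
    ref = backend.run_mod(backend.build_workload(model, 0, ()), inputs)
    out = backend.run_mod(backend.build_workload(model, opt_level, ()), inputs)
    return [abs(a - b) for a, b in zip(ref, out)]
```

Keeping the compiler-specific code behind these two hooks is what lets the searching and tracing utilities stay unchanged across compilers.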